Abstract

Document-level Sentiment Analysis is a complex task that implies the analysis of large textual content that can incorporate multiple contradictory polarities at the phrase and word levels. Most of the current approaches either represent textual data using pre-trained word embeddings without considering the local context that can be extracted from the dataset, or they detect the overall topic polarity without considering both the local and global context. In this paper, we propose a novel document-topic embedding model, DocTopic2Vec, for document-level polarity detection in large texts by employing general and specific contextual cues obtained through the use of document embeddings (Doc2Vec) and Topic Modeling. In our approach, (1) we use a large dataset with game reviews to create different word embeddings by applying Word2Vec, FastText, and GloVe, (2) we create Doc2Vecs enriched with the local context given by the word embeddings for each review, (3) we construct topic embeddings Topic2Vec using three Topic Modeling algorithms, i.e., LDA, NMF, and LSI, to enhance the global context of the Sentiment Analysis task, (4) for each document and its dominant topic, we build the new DocTopic2Vec by concatenating the Doc2Vec with the Topic2Vec created with the same word embedding. We also design six new Convolutional-based (Bidirectional) Recurrent Deep Neural Network Architectures that show promising results for this task. The proposed DocTopic2Vecs are used to benchmark multiple Machine and Deep Learning models, i.e., a Logistic Regression model, used as a baseline, and 18 Deep Neural Networks Architectures. The experimental results show that the new embedding and the new Deep Neural Network Architectures achieve better results than the baseline, i.e., Logistic Regression and Doc2Vec.

1. Introduction

Opinion Mining and Sentiment Analysis are related research topics at the intersection of Machine Learning and Natural Language Processing that have recently been studied intensively [1,2,3,4,5,6]. The interest in these topics is due to the wide range of applications where they can be used (e.g., advertising, politics, business) and the availability of large amounts of textual data. They are generally used to identify opinions and recognize the sentiments expressed, as well as the general polarity of a text, e.g., subjective or objective, positive or negative. The data sources most often used in Opinion Mining and Sentiment Analysis tasks are blogs, social media posts, comments from movie and product review sites, and news articles [7]. These can be used to complete different tasks, such as emotion detection and sentiment classification.

Various types of neural networks, e.g., Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs), have been employed to solve specific Opinion Mining and Sentiment Analysis tasks more accurately. RNNs have been proven to offer good results for text analysis tasks [8]. Different types of RNNs, such as GRU (Gated Recurrent Unit) or LSTM (Long Short-Term Memory), were developed to overcome the flaws of feed-forward Perceptron-based neural networks. RNNs can capture information about the input, such as context dependency between words, and share parameters across time steps. CNNs [9,10] are a type of feed-forward network that is very popular due to its minimal preprocessing requirements. These types of networks are regarded as more powerful than RNNs. Although CNNs are ideal for image processing and their accuracy depends on the initial parameter tuning, they have also been shown to increase performance in text processing, especially when combined with other neural networks.

The main motivation of this paper is to improve the accuracy of document-level Sentiment Analysis (Definition 1) using Deep Learning models which employ contextual cues (Definition 2). We introduce specific/local (Definition 3) and general/global (Definition 4) contextual cues by employing word embeddings (WordEmbs) and Topic Modeling in order to improve the accuracy of polarity detection. To this end, we enhance the document embedding (Doc2Vec) in two ways: different WordEmbs add local context, as the embeddings are trained on the documents within a set of documents D, and Topic Modeling algorithms add global context by extracting topics from the same set of documents D. We combine Doc2Vecs and topic embeddings (Topic2Vecs) into a new embedding, i.e., DocTopic2Vec, built as the concatenation of a Doc2Vec and a Topic2Vec.

Definition 1

(Document-level Sentiment Analysis). Document-level Sentiment Analysis is the task used to determine for a document belonging to a set of documents D whether its text has a positive, neutral, or negative polarity.

Definition 2

(Contextual cues). The contextual cues consist of the local and global lexical, semantic, and syntactic information of a word given to a Machine/Deep Learning model to solve a task t. (Note: We use both local and global context for document-level Sentiment Analysis.)

Definition 3

(Local Context). The local context refers to the local lexical, semantic, and syntactic information of a word within a document d. (Note: We extract the local context by training word embeddings for each word in d. Thus, the embedding encodes the local context by preserving the word’s lexical, semantic, and syntactic similarity as well as its relation with other words within the same document).

Definition 4

(Global Context). The global context refers to the global lexical, semantic, and syntactic information of a word within a set of documents D. (Note: We extract the global context by detecting the most important topic for each document in D. Thus, documents belonging to the same topic also belong to the same context, and the context given by a topic is seen as a global context for the documents belonging to this topic).

A Doc2Vec is constructed as the average of the WordEmbs for the terms in the document. This embedding manages to preserve the contexts and semantics of words at the document level [11]. WordEmbs add semantic context by encoding the position of words in a sentence before vectorizing the text. We use five WordEmbs: (1) Word2Vec CBOW (Continuous Bag-of-Words) model; (2) Word2Vec Skip-Gram model; (3) FastText CBOW model; (4) FastText Skip-Gram model; and (5) GloVe model. Word2Vec captures the context of a word in a document and its relationship with the surrounding words. Furthermore, this embedding manages to encode the semantic and syntactic similarity of the words within the document. Word2Vec uses two models to determine the local context: CBOW and Skip-Gram. The CBOW model predicts a word from the context given by its surrounding words. The Skip-Gram model takes a word and predicts the words that appear in its context. FastText extends Word2Vec by learning embedding vectors for the n-grams that are found within each word. FastText also uses the CBOW and Skip-Gram models. GloVe enhances the local context information of words using global statistics, i.e., word co-occurrence.

We use Topic Modeling to extract the hidden latent semantic patterns and to add a general context to Sentiment Analysis by detecting and grouping documents with similar characteristics according to their subjects of interest. We employ different Topic Modeling algorithms, i.e., Latent Dirichlet allocation (LDA) [12], Non-Negative Matrix Factorization (NMF) [13], and Latent Semantic Indexing (LSI) [14]. We encode these hidden patterns into Topic2Vecs. A Topic2Vec is built as the average of the WordEmbs of a topic's top-k terms, weighted by the terms' relevance to the topic. By employing Topic2Vecs, we manage to encode context-based document grouping and to enhance each document’s context: the DocTopic2Vec is the concatenation of the document’s Doc2Vec and the Topic2Vec of its dominant topic. Thus, documents that are similar in meaning and context, including polarity and opinion, will be closer to each other in the vector space than texts which are not necessarily related.

For the experiments, we use a large dataset consisting of game reviews. We create the DocTopic2Vecs using the discussed WordEmbs and Topic Modeling algorithms. Each DocTopic2Vec embedding is used in classification tasks that apply Logistic Regression (LogReg) and neural networks with LSTM, GRU, Bidirectional, Dense, and CNN layers. We also design six new Convolutional-based (Bidirectional) Recurrent Deep Neural Network (CNN-(Bi)RNN) Architectures for the task of determining accurate document-level polarity. The results of our benchmark show that the accuracy is improved by about 5% when adding contextual cues to Doc2Vec with the NMF and LSI Topic Modeling algorithms, compared to the baseline, i.e., Doc2Vec-based LogReg Sentiment Analysis. Furthermore, the proposed new architectures outperform the state-of-the-art solution proposed in [3].

The main research questions we want to answer are:

- Does a Topic Modeling approach improve the overall accuracy of detecting the polarity of textual data?

- Can local context added by Word Embeddings and global context added by Topic Modeling improve the accuracy of the Sentiment Analysis task?

- Can a novel CNN-(Bi)RNN architecture prove to be a better model for the Sentiment Analysis task?

Thus, by answering these questions, the main objective of this work is three-fold:

- Analyze the impact of Topic Modeling on the Sentiment Analysis task;

- Construct a novel embedding DocTopic2Vec that encapsulates both local and global context in order to improve the accuracy of detecting the polarity of textual data;

- Build a novel CNN-(Bi)RNN architecture to increase the accuracy of the Sentiment Analysis task.

This paper is structured as follows. In Section 2, we discuss the current advancements in Sentiment Analysis techniques. Section 3 presents the proposed architecture and describes each component module, together with the algorithms and techniques used. In Section 4, we describe the dataset and our set of experiments, and then analyze and interpret the results. Section 5 draws the final conclusions and provides several future directions.

2. Related Work

Sentiment Analysis approaches can be classified into three categories: Machine Learning, Lexicon-based, and Hybrid [15]. Furthermore, these techniques are divided, based on the granularity level, into word (or aspect), sentence (or short text), and document (or long text) level.

Few solutions focus on context-based Sentiment Analysis models. A context enrichment model for Sentiment Analysis is proposed in [4]. The authors add several processing steps, prior to sentiment classification, in order to augment the dataset with context. One important step discussed there is the prior-polarity identification with SentiWordNet. Unfortunately, the authors do not clearly specify what the advantages of prior-polarity identification are, and their model is purely conceptual, without any real experiments.

Most of the related previous works either use only embeddings as the text representation incorporated into the Sentiment Analysis model (e.g., [2,3]), or use Topic Modeling to determine the opinion by topic rather than to add context to the model (e.g., [16,17]).

In [3], the authors propose a Deep Learning 4CNN-BiLSTM model for document-level Sentiment Analysis. Their model consists of four CNN layers and one BiLSTM layer. For the experiments, they use a relatively small number of documents, i.e., 2003 articles from French newspapers. They employ two optimizers, SGD and Adam, and Word2Vec as the WordEmb solution. The proposed model is compared with CNN, LSTM, BiLSTM, and CNN-LSTM, and they conclude that it achieves the best accuracy. Although they obtained a high accuracy for the 4CNN-BiLSTM model, the results are not conclusive, as the experiments are performed on a small dataset. In our experiments, we also analyze their model, both in the version they proposed and with Topic Modeling added.

Attention mechanisms condition the Sentiment Analysis model to pay attention to the features which contribute the most to the task. The authors of [18] propose a model based on LSTM layers with an attention mechanism. They vary both the attention mechanism, i.e., convolution-based and pooling-based attention, and the word vectors used for training, i.e., pre-trained word vectors from Word2Vec and randomly initialized word vectors. Their model obtained better results than baseline methods on two out of three datasets. The Attention-based Bidirectional CNN-RNN Deep Model (ABCDM) [19], another attention-based solution, uses independent BiLSTM and GRU layers to extract both past and future contexts and an attention mechanism to put more or less emphasis on different words. To reduce the dimensionality and create new feature representations, the ABCDM model utilizes both convolutional layers and pooling techniques. This model achieves state-of-the-art performance when compared with other Neural Network architectures for the task of Sentiment Analysis on reviews and Twitter datasets.

An improved method for generating WordEmbs used in Sentiment Analysis is proposed in [2]. This method, Improved Word Vectors, uses Part-of-Speech, lexicon-based, and word position techniques together with Word2Vec or GloVe models. The performance of the proposed solution is tested using four different Deep Learning models and benchmark sentiment datasets. The results show that, when using these embeddings, the accuracy of the model is slightly increased.

One solution that uses Topic Modeling for sentiment detection is presented in [17]. The authors combine shrinkage regression and Topic Modeling for detecting polarity in a Twitter dataset. The proposed model consists of two stages. In the first stage, they detect the polarity of the tweets using two shrinkage regression models. This type of regression adds a penalty in the way the loss function is calculated for models that have too many variables. During the second stage, the relevant topics are identified using LDA. The model estimates the sentiment of each topic using term sentiment scores.

Topic Modeling and WordEmbs have been used together to analyze the sentiment of topics. However, they have never been applied in Sentiment Analysis at the document level, as we propose in this paper. Instead, the combination has been used for aspect-based topic Sentiment Analysis [20,21]. In this case, Topic Modeling is used for aspect extraction and categorization without considering the global context. In [21], the authors combine domain-trained WordEmbs and Topic Modeling for categorizing aspect-terms from online reviews. Their proposed model uses continuous WordEmbs and the LDA algorithm. The model is tested using a small dataset, i.e., the restaurant reviews from the SemEval-2014 dataset consisting of 3841 sentences. One important limitation of their model is that it has a longer convergence time than the standard model and lower performance than supervised models.

Several recent works also explore pre-trained language models for the Sentiment Analysis task, e.g., BERT [22], RoBERTa [23], ALBERT [24]. In [25], BERT is compared with an LSTM-based architecture and achieves an overall better F-measure. In [26], a RoBERTa Sentiment Analysis model is combined with key entity detection, based on the presumption that people are more prone to observe negative information. This approach improves the accuracy of the Sentiment Analysis task when compared with architectures consisting of BERT or RoBERTa transformers combined with SVM, LR, or NBM. The method proposed in [1] is another transformer-based method for Sentiment Analysis. Its novelty is that it enhances the data quality by handling noise within contexts. For this, it uses six types of embeddings, i.e., character embeddings, GloVe, Part-of-Speech embeddings, Lexicon embeddings, ELMo [27], and BERT-based embeddings. The concatenated embeddings are fed to a BiLSTM network with attention. This method has higher performance compared with Sentiment Analysis methods that use a single standard type of embedding, e.g., GloVe or Word2Vec, or other pre-processing methods, e.g., TFIDF.

3. Methodology

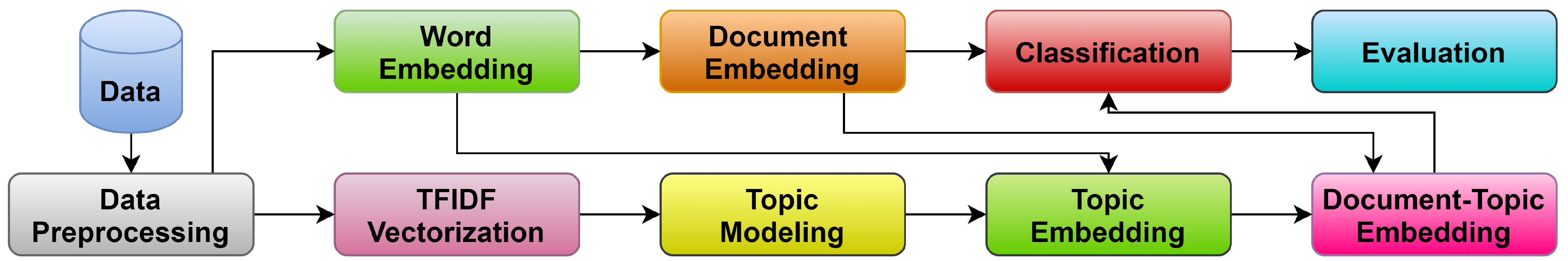

Figure 1 presents the proposed architecture for our topic-based Sentiment Analysis model using contextual cues.

Figure 1. The proposed topic-based Sentiment Analysis using a contextual cues architecture.

The Data Preprocessing module cleans and transforms the textual data to make them suitable for analysis. The Word Embedding and TFIDF Vectorization modules encode the documents’ words into vector representations. The Document Embedding module computes a vector for each document based on the Word Embedding. The Topic Modeling module uses the TFIDF document vectorization to extract the topics and the most relevant keywords. The Topic Embedding module constructs the vector representation of topics using word embeddings. The Document-Topic Embedding module computes the new context-enhanced document embeddings using the topic and document embeddings, which add both semantic and syntactic context to the vector representation. The Classification module uses the new document-topic embeddings to classify documents and extract their polarity. The Evaluation module uses different metrics to determine the accuracy of the classification and the quality of the resulting models.

3.1. Data Preprocessing Module

The preprocessing step is important because text written by people can contain misspelled words, symbols, abbreviations, etc. that need to be removed or replaced so that the subsequent tasks can be executed with greater accuracy [28]. The initial text is preprocessed using the following steps:

- (1) The text is cleaned by removing all JavaScript functions, HTML tags, and URLs;

- (2) The contractions are expanded;

- (3) The named entities are extracted while the rest of the text is lemmatized;

- (4) The punctuation and stop words, excluding negations (e.g., no, not), are removed;

- (5) The text is transformed to lowercase and then split into tokens;

- (6) The tokens that have a length greater than 3 or are negations are kept.

This aggressive text preprocessing improves the algorithms’ time performance, as the vocabulary is reduced to the essential tokens without excluding the terms which impact the polarity.
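The steps above can be sketched as follows. This is a minimal illustration only, not the pipeline used in the experiments: the stop-word list, the negation list, and the contraction map are tiny hypothetical stand-ins, named-entity extraction and lemmatization (step 3) are omitted because they need an external NLP library, and the text is lowercased early so that the toy maps match.

```python
import re

# Hypothetical toy resources; a real pipeline would use full lists.
NEGATIONS = {"no", "not", "nor", "never"}
STOP_WORDS = {"the", "a", "an", "is", "it", "this", "and", "of", "to", "in", "was"}
CONTRACTIONS = {"don't": "do not", "isn't": "is not", "can't": "can not", "it's": "it is"}


def preprocess(text):
    # (1) Remove script blocks, HTML tags, and URLs.
    text = re.sub(r"<script.*?</script>", " ", text, flags=re.DOTALL | re.IGNORECASE)
    text = re.sub(r"<[^>]+>", " ", text)
    text = re.sub(r"https?://\S+", " ", text)
    # Lowercase early (simplification of step 5) so the toy maps match.
    text = text.lower()
    # (2) Expand contractions.
    for short, full in CONTRACTIONS.items():
        text = text.replace(short, full)
    # (3) Named-entity extraction and lemmatization are omitted in this sketch.
    # (4) + (5) Drop punctuation and split into alphabetic tokens.
    tokens = re.findall(r"[a-z]+", text)
    # (4) + (6) Keep negations; otherwise drop stop words and tokens of length <= 3.
    return [t for t in tokens if t in NEGATIONS or (t not in STOP_WORDS and len(t) > 3)]
```

For example, a review fragment such as `<p>This game isn't good</p>` is reduced to the tokens `game`, `not`, and `good`, so the negation that carries the polarity survives the filtering.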

3.2. Word Embedding Module

The word embedding models used in this paper are Word2Vec, FastText, and GloVe. Each embedding model (WordEmb) generates word representations in a vector space. The context of each word within a document is captured when employing these embeddings. Moreover, these models also encode both the relationship and the similarity between words from a semantic and syntactic perspective.

3.2.1. Word2Vec

Word2Vec represents a textual dataset as a set of vectors and outputs a vector space [29]. The context similarity of a word within the dataset is determined by measuring the distance between the corresponding vectors in this space. Word2Vec uses either the Continuous Bag-Of-Words (CBOW) or the Skip-Gram model to create the representation of words.

The CBOW model utilizes the context of a word as input and attempts to predict the word itself. The input layer of the model is represented by the one-hot encoded vectors corresponding to each context word. The average of the vectors from this layer is used to compute the input for the hidden layer. The weighted sum of the inputs, computed by the hidden layer, is sent to the next layer. Each term’s probability value is computed by the network’s last layer and is given as the final result in the form of a vector.

The Skip-Gram model, as opposed to CBOW, starts with the word as input and tries to generate its context. The input layer is the target word vector, while the output layer consists of the vectors with the probability values of the words appearing in the context of the target word. The hidden layer sends the weighted input to the following layer. The Skip-Gram model is generally used to discover the semantic similarity between words. Therefore, if two words have a similar context, these words might also have a similar meaning.
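To make the difference between the two models concrete, the sketch below builds the training examples each one would see for a tokenized document. It only illustrates the input/output pairing; the function names and the `window` parameter are illustrative assumptions, and no network is trained here.

```python
def context_windows(tokens, window=2):
    """Yield (target, context_words) for every position in the document."""
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield target, left + right


def cbow_examples(tokens, window=2):
    # CBOW: the surrounding words are the input, the centre word is the label.
    return [(context, target) for target, context in context_windows(tokens, window)]


def skipgram_examples(tokens, window=2):
    # Skip-Gram: the centre word is the input, each context word is a label.
    return [(target, c) for target, context in context_windows(tokens, window) for c in context]
```

With `tokens = ["great", "story", "boring", "combat"]` and `window=1`, CBOW yields one example per position (e.g., `(["great", "boring"], "story")`), whereas Skip-Gram yields one example per (word, neighbour) pair (e.g., `("story", "great")` and `("story", "boring")`).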

3.2.2. FastText

FastText is an unsupervised algorithm that uses the CBOW and Skip-Gram models for learning word embeddings [30]. This embedding is considered an extension of Word2Vec as it follows a similar approach [31]. The difference is that the word is not considered the basic unit, but a bag of character n-grams. This facilitates better accuracy and a faster training time compared to Word2Vec.
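The bag of character n-grams can be illustrated with a short sketch. It assumes the commonly described FastText convention of wrapping the word in boundary markers `<` and `>` and using n-gram lengths from 3 to 6, with the whole marked word kept as an additional unit; the function name is hypothetical.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """Return the character n-grams of a word, plus the whole marked word."""
    marked = f"<{word}>"  # boundary markers distinguish prefixes and suffixes
    grams = [
        marked[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(marked) - n + 1)
    ]
    # dict.fromkeys removes duplicates while preserving order.
    return list(dict.fromkeys(grams + [marked]))
```

For the word `where` with only trigrams (`n_min=3, n_max=3`), the units are `<wh`, `whe`, `her`, `ere`, `re>`, and `<where>`. The embedding of a word is then obtained from the vectors of these units, which is what allows misspelled or unseen words to receive a representation.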

3.2.3. GloVe

GloVe (Global Vectors) is an unsupervised model applied for learning word embeddings [32]. In comparison to the other models described, i.e., Word2Vec and FastText, GloVe considers both local and global statistics of word–word co-occurrences in the corpus to obtain the vector representations of the words. It uses a term co-occurrence matrix that stores, for each word, the frequency of its appearance in the same context with another word. GloVe captures the relationship between words by using the ratio of co-occurrence probabilities. Using the co-occurrence probability ratio, it extracts information from all the word vectors and identifies word analogies or synonyms within the same contexts.
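The co-occurrence statistics that GloVe starts from can be sketched as below. This is a simplified illustration that uses plain counts within a symmetric window (the reference GloVe implementation additionally down-weights distant pairs); the function names and the `window` parameter are assumptions made for the example.

```python
from collections import Counter


def cooccurrence_counts(documents, window=2):
    """Count how often each (word, context word) pair occurs within the window."""
    counts = Counter()
    for tokens in documents:
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    counts[(word, tokens[j])] += 1
    return counts


def cooccurrence_probability(counts, word, context):
    """Estimate P(context | word) from the co-occurrence counts."""
    total = sum(c for (w, _), c in counts.items() if w == word)
    return counts[(word, context)] / total if total else 0.0
```

The ratio `cooccurrence_probability(counts, a, k) / cooccurrence_probability(counts, b, k)` is the quantity the paragraph refers to: it is large when the probe word `k` is related to `a` but not to `b`, and close to 1 when `k` is equally related (or unrelated) to both.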

3.3. Document Embedding Module

The document embeddings Doc2Vec (Equation (1)) are generated for each document in the dataset by adding the word embeddings WordEmb(t) of all the terms t in the document and dividing the sum by the number of terms in the document. We build a Doc2Vec for each WordEmb we previously discussed.
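The averaging described above can be sketched as follows. The dictionary `word_emb` (term to vector) is a hypothetical stand-in for a trained WordEmb model, and skipping terms that have no embedding is an assumption of this sketch rather than a documented design choice.

```python
def doc2vec(tokens, word_emb):
    """Average the word embeddings of the terms in a document."""
    vectors = [word_emb[t] for t in tokens if t in word_emb]  # assumption: skip unknown terms
    if not vectors:
        raise ValueError("document contains no term with a known embedding")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
```

For instance, with `word_emb = {"good": [1.0, 0.0], "game": [0.0, 1.0]}`, the document `["good", "game"]` is mapped to `[0.5, 0.5]`.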

3.4. TFIDF Vectorization

The TFIDF (term frequency-inverse document frequency) Vectorization module uses a bag-of-words approach to vectorize the documents given:

- (1) A textual corpus $D = \{d_1, d_2, \ldots, d_n\}$ of size $n = |D|$ that contains documents $d_i$;

- (2) A vocabulary $V = \{t_1, t_2, \ldots, t_m\}$ of size $m = |V|$ that contains the unique words or terms in the dataset D.

A document $d_i$ is a multiset over V, i.e., V together with a multiplicity (co-occurrence) function which denotes the number of times a term $t_j$ appears in document $d_i$. For simplicity, we denote this count as $f_{t_j, d_i}$.

TFIDF (Equation (2)) is defined using:

- (1) Term frequency (Equation (3)) that counts the occurrences of a term $t_j$ in a document $d_i$;

- (2) The inverse document frequency (Equation (4)) which uses the number of documents in which a term $t_j$ appears to penalize frequent terms that bring no information gain;

- (3) The normalization factor (Equation (5)) to normalize TFIDF in the range $[0, 1]$.

Using the term weights, we can construct a document–term matrix $A \in \mathbb{R}^{n \times m}$, where rows correspond to documents and columns to terms. The cell value $A_{ij}$ is the weight (e.g., term frequency, TFIDF, etc.) of term $t_j$ in document $d_i$.
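A minimal sketch of how such a document–term matrix could be built is given below. The exact variants of Equations (2) to (5) are not reproduced here, so the sketch assumes one common choice: raw counts for the term frequency, `log(n / df)` for the inverse document frequency, and L2 normalization of each row.

```python
import math
from collections import Counter


def tfidf_matrix(documents):
    """Return (vocabulary, A) where A[i][j] is the weight of term j in document i."""
    vocab = sorted({t for doc in documents for t in doc})
    n = len(documents)
    # Document frequency: number of documents in which each term appears.
    df = Counter(t for doc in documents for t in set(doc))
    rows = []
    for doc in documents:
        tf = Counter(doc)
        row = [tf[t] * math.log(n / df[t]) for t in vocab]  # assumed TF and IDF variants
        norm = math.sqrt(sum(w * w for w in row))            # assumed L2 normalization
        rows.append([w / norm if norm else 0.0 for w in row])
    return vocab, rows
```

With this choice, a term that appears in every document (such as `game` in a corpus of game reviews) receives weight 0, which is the penalization of uninformative frequent terms mentioned above, and every weight lies between 0 and 1.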

3.5. Topic Modeling Module

This module utilizes statistical unsupervised Machine Learning methods, i.e., Topic Modeling, to extract hidden latent semantic patterns within our dataset. We use three Topic Modeling algorithms for this module, i.e., Latent Dirichlet allocation (LDA) [12], Non-Negative Matrix Factorization (NMF) [13], and Latent Semantic Indexing (LSI) [14], also known as Latent Semantic Analysis (LSA). The Topic Modeling algorithms use the document–term matrix A as input.

3.5.1. Latent Dirichlet Allocation

Latent Dirichlet allocation (LDA) is a probabilistic model that groups various terms with similar meaning that represent the same notions [12]. It is one of the most popular Topic Modeling approaches [33]. The LDA algorithm relies on the assumption that random mixtures over latent topics can be used to generate documents. In this context, each topic is described by a multinomial distribution over the unique terms in the vocabulary. Thus, we can generate documents using techniques such as Gibbs sampling, which samples topic–word pairs from a random mixture.

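For k topics and a corpus D of n documents, where each document $d_i$ is a sequence of words whose length is modeled by a Poisson distribution, LDA uses the following process:

- (1) Determine a distribution of topics $\theta_i \sim \mathrm{Dir}(\alpha)$ for each document $d_i$;

- (2) Determine a distribution of words $\varphi_h \sim \mathrm{Dir}(\beta)$ in each topic $h$;

- (3) For each word position $l$ in document $d_i$:

- (a) Determine a topic $z_{il} \sim \mathrm{Multinomial}(\theta_i)$;

- (b) Determine a word $w_{il} \sim \mathrm{Multinomial}(\varphi_{z_{il}})$.

The distribution of topics in document $d_i$ is a Dirichlet distribution over the k topics, $\theta_i \sim \mathrm{Dir}(\alpha)$, where $\theta_i$ is a k-dimensional vector of probabilities, $\theta_{ih}$ is the probability of topic $h$ occurring in document $d_i$, and $\alpha$ is a k-dimensional vector of positive reals.

The distribution of words in topic $h$ is also a Dirichlet distribution over the vocabulary, $\varphi_h \sim \mathrm{Dir}(\beta)$, where $\varphi_h$ is an m-dimensional vector of probabilities, $\varphi_{hj}$ is the probability of word $t_j$ occurring in topic $h$, and $\beta$ is an m-dimensional vector of positive reals.

Each document $d_i$ ($i = 1, \ldots, n$) is described by a sequence of words $w_{i1}, \ldots, w_{iN_i}$ of size $N_i$. Both the topic assignments $z_{il}$ and the words $w_{il}$ follow multinomial distributions, i.e., $z_{il} \sim \mathrm{Multinomial}(\theta_i)$ and $w_{il} \sim \mathrm{Multinomial}(\varphi_{z_{il}})$.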
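The generative process can be simulated with a short sketch. It is an illustration under simplifying assumptions: symmetric Dirichlet parameters (a single scalar `alpha` and `beta`), a fixed document length instead of a Poisson-distributed one, and a Dirichlet sampler built from Gamma draws. All names are hypothetical; topic inference, which is what LDA actually performs on a corpus, is not shown.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw a probability vector from Dir(alpha) using normalized Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_corpus(n_docs, doc_len, vocab, k, alpha=1.0, beta=1.0, seed=0):
    rng = random.Random(seed)
    # (2) One word distribution per topic.
    phi = [sample_dirichlet([beta] * len(vocab), rng) for _ in range(k)]
    corpus = []
    for _ in range(n_docs):
        # (1) One topic distribution per document.
        theta = sample_dirichlet([alpha] * k, rng)
        doc = []
        for _ in range(doc_len):  # assumption: fixed length instead of Poisson
            z = rng.choices(range(k), weights=theta)[0]   # (3a) pick a topic
            w = rng.choices(vocab, weights=phi[z])[0]     # (3b) pick a word from that topic
            doc.append(w)
        corpus.append(doc)
    return corpus
```

Calling `generate_corpus(3, 5, ["game", "story", "combat", "price"], k=2)` returns three synthetic documents of five words each, drawn from two latent topics.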

3.5.2. Non-Negative Matrix Factorization

Non-Negative Matrix Factorization (NMF) is a dimensionality reduction paradigm based on linear algebra [34]. Experimental results reported in [35] indicate that NMF is a strong choice for extracting topics. It is built on the premise that a matrix can be approximated by the product of two non-negative matrices. Thus, NMF factorizes a matrix $A \in \mathbb{R}^{n \times m}$ into two non-negative matrices $W \in \mathbb{R}^{n \times k}$ and $H \in \mathbb{R}^{k \times m}$. With regard to Topic Modeling, these matrices have the following meaning:

- (1) A is a document–term matrix constructed using weighted term frequencies for a corpus containing n documents and a vocabulary of size m terms;

- (2) W is the document–topic matrix that assigns a document membership to each topic k;

- (3) H is the topic–term matrix that assigns to each topic k the importance of a term.

To determine W and H, the objective function must be minimized subject to the constraint that all the elements of W and H are non-negative. Equation (6) presents the objective function, where $\|\cdot\|_F$ is the Frobenius norm.
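One standard way to minimize this kind of objective is the multiplicative update rule of Lee and Seung, sketched below in plain Python. This is an assumption made for illustration: the solver used in the experiments is not specified here, and the helper names, the number of iterations, and the small constant `eps` (which avoids division by zero) are hypothetical choices.

```python
import random


def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def transpose(X):
    return [list(col) for col in zip(*X)]


def frobenius_error(A, W, H):
    """Squared Frobenius norm of A - WH."""
    WH = matmul(W, H)
    return sum((a - b) ** 2 for ra, rb in zip(A, WH) for a, b in zip(ra, rb))


def nmf(A, k, iterations=200, seed=0, eps=1e-9):
    rng = random.Random(seed)
    n, m = len(A), len(A[0])
    # Random positive initialization keeps every entry non-negative throughout.
    W = [[rng.random() + eps for _ in range(k)] for _ in range(n)]
    H = [[rng.random() + eps for _ in range(m)] for _ in range(k)]
    for _ in range(iterations):
        # H <- H * (W^T A) / (W^T W H)
        Wt = transpose(W)
        num, den = matmul(Wt, A), matmul(matmul(Wt, W), H)
        H = [[h * a / (d + eps) for h, a, d in zip(rh, rn, rd)] for rh, rn, rd in zip(H, num, den)]
        # W <- W * (A H^T) / (W H H^T)
        Ht = transpose(H)
        num, den = matmul(A, Ht), matmul(W, matmul(H, Ht))
        W = [[w * a / (d + eps) for w, a, d in zip(rw, rn, rd)] for rw, rn, rd in zip(W, num, den)]
    return W, H
```

Because each update only multiplies entries by non-negative ratios, the non-negativity constraint is preserved without any explicit projection step. Row i of W then gives the topic memberships of document i, and row h of H gives the term importances of topic h.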

3.5.3. Latent Semantic Indexing

Latent Semantic Indexing (LSI) tries to solve the problem of synonyms by identifying terms that statistically appear together. The algorithm’s main consideration is that the randomness of word choice within documents hides an underlying latent semantic structure. To determine this latent structure, LSI employs the matrix factorization technique called Singular Value Decomposition (SVD). It identifies syntactically different but semantically similar terms using a structure called the hidden “concept” space.

Given the document–term matrix A of size $n \times m$ (n is the number of documents, m is the number of terms in the vocabulary), LSI uses SVD to factorize A into a product of three matrices, i.e., $A = U \Sigma V^T$.

- (1) $U$ is an $n \times k$ matrix that denotes the document–topic association. The columns of U are the eigenvectors of $AA^T$. Thus, these vectors identify the k non-zero eigenvalues of $AA^T$. Moreover, the columns $u_i$ are unit orthogonal vectors, i.e., $U^T U = I$, and are also called left singular vectors because they satisfy the condition $u_i^T A = \sigma_i v_i^T$.

- (2) $V^T$ is a $k \times m$ matrix that denotes the topic–keyword association. The columns of V are the eigenvectors of $A^T A$. Thus, these vectors identify the k non-zero eigenvalues of $A^T A$. Moreover, the columns $v_i$ are unit orthogonal vectors, i.e., $V^T V = I$, and are also called right singular vectors because they satisfy the condition $A v_i = \sigma_i u_i$.

- (3) $\Sigma$ is a $k \times k$ diagonal matrix which has on the diagonal the singular values $\sigma_i$, i.e., the square roots of the non-zero eigenvalues of $A^T A$. Thus, this diagonal matrix is defined as $\Sigma = \mathrm{diag}(\sigma_1, \sigma_2, \ldots, \sigma_k)$, where the values are sorted in decreasing order, i.e., $\sigma_1 \geq \sigma_2 \geq \ldots \geq \sigma_k > 0$.
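The relation between A and its singular vectors can be illustrated with a small power-iteration sketch that recovers only the largest singular value together with its left and right singular vectors. This is an illustrative stand-in, not the full truncated SVD that LSI computes, and the function name is hypothetical.

```python
import math


def top_singular_triplet(A, iterations=100):
    """Return (u, sigma, v) for the largest singular value of A via power iteration."""
    m = len(A[0])
    v = [1.0 / math.sqrt(m)] * m
    for _ in range(iterations):
        # One step of power iteration on A^T A: v <- normalize(A^T (A v)).
        u = [sum(a * x for a, x in zip(row, v)) for row in A]
        w = [sum(A[i][j] * u[i] for i in range(len(A))) for j in range(m)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # A v = sigma * u defines the matching left singular vector.
    u = [sum(a * x for a, x in zip(row, v)) for row in A]
    sigma = math.sqrt(sum(x * x for x in u))
    return [x / sigma for x in u], sigma, v
```

The returned vectors satisfy the condition that multiplying A by the right singular vector gives the left singular vector scaled by the singular value, and the singular value equals the square root of the largest eigenvalue of the product of the transposed matrix with A.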

3.6. Topic Embedding Module

To encode the global context that is hidden in the latent semantic structures defined by the randomness of words, we employ a topic vector embedding, Topic2Vec, that encodes the keywords of each of the k topics extracted using one of the Topic Modeling algorithms. Topic2Vec takes the weighted average of the word embeddings WordEmb(t) of the relevant terms t belonging to a topic, where each term is weighted by its probability within the topic. Equation (9) presents the proposed encoding, which considers a fixed number of top keywords for each topic. We build a Topic2Vec for each topic model and WordEmb we previously discussed.
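A possible reading of this weighted average is sketched below. The normalization is an assumption: the sketch divides by the sum of the term weights, whereas Equation (9) may normalize differently (e.g., by the number of keywords). `top_terms` and `word_emb` are hypothetical inputs standing in for a topic's keyword list and a trained WordEmb model.

```python
def topic2vec(top_terms, word_emb):
    """Weighted average of the embeddings of a topic's top terms.

    top_terms: list of (term, weight) pairs, e.g. the top keywords of one topic.
    word_emb:  dict mapping a term to its embedding vector.
    """
    pairs = [(word_emb[t], w) for t, w in top_terms if t in word_emb]
    if not pairs:
        raise ValueError("no top term has a known embedding")
    total = sum(w for _, w in pairs)  # assumption: normalize by the sum of weights
    dim = len(pairs[0][0])
    return [sum(vec[i] * w for vec, w in pairs) / total for i in range(dim)]
```

For example, with embeddings `{"good": [1.0, 0.0], "bad": [0.0, 1.0]}` and top terms `[("good", 0.75), ("bad", 0.25)]`, the topic vector is `[0.75, 0.25]`, i.e., it is pulled towards the more relevant keyword.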

3.7. Document-Topic Embedding Module

The document–topic embeddings DocTopic2Vec (Equation (10)) are generated by concatenating (operator ⊕) the Topic2Vec of the most dominant topic of a document with the document’s Doc2Vec. We build a DocTopic2Vec for each Topic2Vec we previously discussed, using the same WordEmb for both the Doc2Vec and the Topic2Vec. By concatenating the Doc2Vec with the Topic2Vec, the resulting DocTopic2Vec encodes both the local context given by the document embedding (Doc2Vec) and the global context given by the topic embedding (Topic2Vec).
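The concatenation step can be sketched as follows. The order of the two parts (Doc2Vec first, Topic2Vec second) and the input names are assumptions of this sketch; `doc_topic_weights` stands for one row of a document–topic matrix produced by a Topic Modeling algorithm.

```python
def dominant_topic(doc_topic_weights):
    """Index of the topic with the highest weight for a document."""
    return max(range(len(doc_topic_weights)), key=doc_topic_weights.__getitem__)


def doctopic2vec(doc_vec, doc_topic_weights, topic_vecs):
    """Concatenate a document embedding with the embedding of its dominant topic."""
    topic_vec = topic_vecs[dominant_topic(doc_topic_weights)]
    return list(doc_vec) + list(topic_vec)  # assumed order: Doc2Vec, then Topic2Vec
```

If both embeddings have dimension d, the resulting DocTopic2Vec has dimension 2d, and documents assigned to the same dominant topic share the second half of their vector.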

3.8. Classification Module

For classification, we use the Logistic Regression (LogReg) algorithm, which serves as a baseline, and multiple Deep Neural Network (DNN) Architectures.

3.8.1. Logistic Regression

Logistic Regression (LogReg) is a classification algorithm that is often used successfully as a baseline for the Sentiment Analysis task to predict the class in which an observation can be categorized [36,37]. The algorithm minimizes the error of its estimations, measured by the negative log-likelihood, and determines the parameters that produce the best estimations using gradient descent [38]. Because the negative log-likelihood is a convex function, gradient descent can converge to the global minimum.
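The training loop can be sketched for the binary case as below. This is a simplified illustration: the task in this paper is multi-class, which would require a softmax or one-vs-rest extension, and the learning rate and number of epochs are arbitrary hypothetical values.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(X, y, lr=0.5, epochs=500):
    """Batch gradient descent on the mean negative log-likelihood."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            # Gradient of the negative log-likelihood: (prediction - label) * input.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(w, b, x):
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0
```

In the baseline setting, each row of `X` would be a document embedding (Doc2Vec or DocTopic2Vec) and each entry of `y` the corresponding polarity label.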

3.8.2. Deep Neural Network

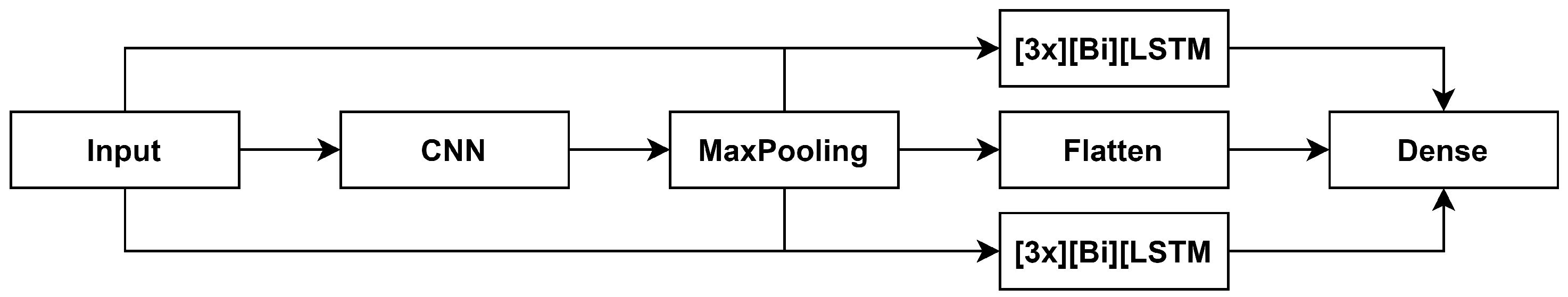

Deep Neural Network (DNN) Architectures are used to classify the textual data and extract the polarity at the document level using Doc2Vec and DocTopic2Vec. These architectures are developed using different fully connected or convolutional layers. The neural network units that make up these layers are Perceptron, Gated Recurrent Unit (GRU), Long Short-Term Memory (LSTM), Bidirectional GRU (BiGRU), and Bidirectional LSTM (BiLSTM). Figure 2 presents the combinations of these layers that we use to create 17 DNN Architectures.

Figure 2. DNN architecture.

A Perceptron is a processing unit used to predict the label of an observation. A feature function maps each possible feature-representation pair to a new feature vector, which is then multiplied by a weight vector. This vector must fulfill the following conditions: (1) it has a positive number of elements, and (2) the values of its elements are real numbers.

GRU is a recurrent unit that has two gating mechanisms: (1) the update gate, and (2) the reset gate. The update gate is used as both the forget gate and the input gate. The reset gate determines what percentage of the previous hidden state contributes to the candidate state of the new step. Furthermore, the GRU has only one state component, i.e., the hidden state.

LSTM is a recurrent unit that uses two components in its design to represent its state: (1) the hidden state, given by a short-term memory component, and (2) the current cell state, given by the long-term memory component. The LSTM unit comprises a gating mechanism with three gates and a memory cell. The gating mechanism has the following gates: (1) the input gate, (2) the forget gate, and (3) the output gate. LSTM controls the gradients’ values and avoids the problems of vanishing and exploding gradients by using the forget gate and the properties of the additive functions which compose the cell state gradients.

Bidirectional RNN (BiRNN) units allow for the use of information from both the previous and next state to make predictions about the current state. We use both BiGRU and BiLSTM in our models.

Dense layers are regular deeply connected neural network layers that contain only Perceptron units.

CNNs are Deep Neural Networks containing multiple convolutional hidden layers, each of which applies a filter to its input before the activation function. After a convolutional layer, it is customary to use a layer that employs a pooling mechanism. The pooling layer reduces the dimensions of the data returned by the convolutional layer. This reduction is achieved by combining the outputs of a group of neurons in the previous layer into a single neuron. The output of this single neuron is then used as the input of the following layer.
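To make the convolution and pooling steps concrete, the following sketch applies a one-dimensional filter without padding and then a non-overlapping max pooling. The function names and the toy values are illustrative assumptions, not part of our implementation.

```python
def conv1d_valid(signal, kernel):
    """Slide the kernel over the signal without padding ('valid' mode)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def max_pool1d(values, pool_size):
    """Keep the maximum of each non-overlapping window of `pool_size`."""
    return [max(values[i:i + pool_size])
            for i in range(0, len(values) - pool_size + 1, pool_size)]


feature_map = conv1d_valid([1, 3, 2, 5, 4, 6], [1, 1])
pooled = max_pool1d(feature_map, 2)
```

Here the six input values become five convolution outputs, which pooling reduces to two values; the trailing output that does not fill a complete window is dropped.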

Considering these layers, we propose six new CNN-(Bi)RNN architectures: CNN-BiGRU, CNN-3GRU, CNN-3BiGRU, CNN-BiLSTM, CNN-3LSTM, and CNN-3BiLSTM. When multiple recurrent layers are used, they form a stacked architecture.

These architectures are designed as follows:

- (1) The input layer that accepts the Doc2Vec or DocTopic2Vec;
- (2) The CNN layer;
- (3) MaxPooling;
- (4) (Stacked) Recurrent layer(s), either BiLSTM or BiGRU;
- (5) Dense layer containing Perceptron.
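The five stages listed above can be followed by tracing how the data shape changes from layer to layer. The sketch below is a plain-Python shape calculation, not the Keras implementation; it assumes the embedding is fed as a sequence of length `input_dim` with one channel, a convolution without padding, a pooling window of 2, and that only the last recurrent layer returns a single vector. The defaults of 64 filters, a kernel of half the input size, 128 recurrent units, and 3 output classes are the values reported in Section 4.7.

```python
def trace_shapes(input_dim, filters=64, pool_size=2, units=128,
                 rnn_layers=1, bidirectional=True, classes=3):
    """Return (layer name, output shape) pairs for a CNN-(Bi)RNN stack."""
    kernel_size = input_dim // 2
    steps = input_dim - kernel_size + 1           # convolution, no padding
    shapes = [("input", (input_dim, 1)), ("conv1d", (steps, filters))]
    steps //= pool_size                           # non-overlapping pooling
    shapes.append(("max_pooling", (steps, filters)))
    # A bidirectional layer concatenates the forward and backward outputs.
    width = units * (2 if bidirectional else 1)
    for i in range(rnn_layers):
        is_last = i == rnn_layers - 1
        shape = (width,) if is_last else (steps, width)
        shapes.append((f"rnn_{i + 1}", shape))
    shapes.append(("dense", (classes,)))
    return shapes


cnn_bigru = trace_shapes(256)                     # e.g., a Doc2Vec of size 256
cnn_3bigru = trace_shapes(512, rnn_layers=3)      # e.g., a DocTopic2Vec of size 512
```

For a stacked variant, every recurrent layer except the last keeps the full sequence so that the next recurrent layer has a sequence to read.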

Moreover, we implement the DNN Architecture presented in [3]. We use the same configurations for this DNN as presented in the original work. In the experiments, we name this architecture 4CNN-BiLSTM.

3.9. Evaluation Module

Evaluation metrics are used to better understand the performance of a model and to fine-tune the model on a given classification task. In our case, we are solving a multi-class classification problem where we are trying to determine the different polarities of a given text. Thus, we use the weighted accuracy measure for evaluating our models because it takes into account the distribution of classes within the dataset. The weighted accuracy (Equation (11)) measures the per-class effectiveness of a classifier by employing the True/False Positive (TP and FP) and True/False Negative (TN and FN) rates. Given k classes and a dataset with n observations, where a known number of observations is labeled with each class, we can compute a weight for each class using Equation (12).
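One plausible reading of this measure is sketched below. It rests on two assumptions that should be checked against Equations (11) and (12): the weight of a class is its share of the n observations, and the per-class score is (TP + TN) / (TP + TN + FP + FN) computed one-vs-rest. The function name is hypothetical.

```python
def weighted_accuracy(y_true, y_pred, classes):
    """Class-weighted one-vs-rest accuracy (assumed form of Eqs. (11)-(12))."""
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    total = 0.0
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        tn = sum(1 for t, p in pairs if t != c and p != c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        weight = sum(1 for t in y_true if t == c) / n   # assumed class weight
        total += weight * (tp + tn) / (tp + tn + fp + fn)
    return total


# Toy labels: 0 = negative, 1 = neutral, 2 = positive.
score = weighted_accuracy([0, 0, 1, 2], [0, 1, 1, 2], classes=[0, 1, 2])
```

Under this reading, frequent classes contribute more to the final score, which is the stated reason for preferring the measure on an imbalanced dataset.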

4. Experimental Results

4.1. Dataset

For the experiments, we used a game reviews dataset containing textual data posted on the MetaCritic website (https://www.metacritic.com/, accessed on 28 September 2021). The original version of this dataset is presented in [39] and improved in [40]. From the dataset, we use only the reviews and the polarity assigned to each review, although the collected raw data also contain other information. The polarity was transformed from the initial string format (i.e., positive, neutral, negative) into integer format (i.e., 2, 1, 0). This dataset contains over 90,500 game reviews with a polarity assigned that can be −1 for negative, 1 for positive, or 0 for neutral. After preprocessing, we were left with 90,165 reviews with the following class distribution: 15,721 negative, 22,433 neutral, and 52,011 positive. Out of the total number of 90,165 reviews, 99.31% were in English, while 0.69% were in Spanish. As the number of reviews in Spanish is negligible, we kept them to see if and how they impact our analysis. The vocabulary size is 23,016. The reviews contain from 1 to 1217 terms, with an average of 44.02. The reviews with a length between 1 and 50 words are the most common in the dataset, i.e., 66,713. The number of reviews with more than 100 words is 8538.

Experimentally, we have identified that the classification tasks perform better when the training and testing sets keep the proportions of the polarities of the entire dataset. For example, in a LogReg classification experiment, if the data are split poorly, e.g., mostly positive reviews are used in the training dataset, the accuracy is lower than 55%. If equal proportions are created, based on three-quarters of the initial dataset, the accuracy improves up to 67%, whereas if the dataset is split using the initial proportions, this results in approximately 71% accuracy. Therefore, we conducted the classification experiments using 80% of the dataset for training and 20% for testing, i.e., 72,132 reviews for training and 18,033 for testing. We preserved the polarity distribution of reviews in both the training and testing subsets. Moreover, we identified that the better the data are cleaned, i.e., the fewer misspelled or foreign words are left in the dataset, the better the accuracy of the classification tasks is, with an increase of up to 10% in accuracy compared to other data normalization methods.
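A split that preserves the polarity proportions can be sketched in a few lines of standard-library Python. This is an illustration of the idea rather than the routine used in our pipeline; the function name, the seed, and the per-class rounding rule are assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split indices so each class keeps roughly the same share in both sets."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


# Toy labels with an imbalanced class distribution.
toy_labels = [0] * 10 + [1] * 20 + [2] * 70
train_idx, test_idx = stratified_split(toy_labels)

# Per-class rounding applied to the class counts reported for the dataset.
reported_counts = {"negative": 15721, "neutral": 22433, "positive": 52011}
test_size = sum(round(c * 0.2) for c in reported_counts.values())
```

Applied to the reported class counts, taking 20% of each class with rounding yields 3144 + 4487 + 10,402 = 18,033 test reviews, which agrees with the test set size stated above.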

4.2. Word Embedding

To identify the best size for each WordEmb, we tested various parameters and evaluated the resulting embeddings using a few approaches:

- (1) Computing accuracy by identifying how well the model recognizes analogies; the test is performed using the questions-words dataset [41] that contains pairs of analogies from different domains;
- (2) Identifying the cosine similarity between words with positive and negative connotation that appear in the dataset, i.e., (fun, enjoyable), (boring, dull), etc., and
- (3) Checking the most similar words with a common word in the dataset.
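The second and third checks both rely on cosine similarity between word vectors. A minimal sketch is given below; the three-dimensional vectors are made-up toy values, not embeddings learned from the dataset, and the helper names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors, top_n=2):
    """Rank the other words by cosine similarity to `word`."""
    scores = [(other, cosine_similarity(vectors[word], vec))
              for other, vec in vectors.items() if other != word]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:top_n]


# Hypothetical toy embeddings, for illustration only.
toy_vectors = {
    "fun": [0.9, 0.1, 0.0],
    "enjoyable": [0.8, 0.2, 0.1],
    "boring": [-0.7, 0.3, 0.1],
}
neighbours = most_similar("fun", toy_vectors)
```

A useful embedding should place words with the same connotation closer together than words with opposite connotations, as the toy values do here.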

We determined experimentally using a grid search that (1) the best window size is four; (2) the number of epochs used for training is 30; (3) the initial learning rate is . Table 1 presents the final embedding sizes used for classification determined after evaluation.

Table 1.

Embedding sizes.

4.3. Document Embeddings

Using the five WordEmbs, we construct a Doc2Vec for each review as an average of the WordEmbs for the terms in the document. The size of the Doc2Vec is equal to the size of the WordEmb used.
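The averaging step can be sketched as follows. Skipping terms that have no embedding is an assumption made for the sketch, as are the function name and the toy vectors.

```python
def doc2vec_average(tokens, word_vectors):
    """Average the embeddings of the terms in a document.

    Terms without an embedding are skipped (an assumption of this sketch).
    Returns None when no term of the document has an embedding.
    """
    vectors = [word_vectors[t] for t in tokens if t in word_vectors]
    if not vectors:
        return None
    size = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(size)]


# Hypothetical two-dimensional embeddings, for illustration only.
toy_vectors = {"fun": [1.0, 0.0], "game": [0.0, 1.0]}
doc_vector = doc2vec_average(["fun", "game", "unknownword"], toy_vectors)
```

The resulting vector has the same number of dimensions as the word embeddings, regardless of the length of the review.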

4.4. Topic Modeling

We identify 10 topics using the TFIDF document–term matrix as input together with the three Topic Modeling algorithms, i.e., LDA, NMF, and LSI. From each topic, the 15 most relevant features are used in the algorithm for computing the topic embeddings. The number of documents where a topic is the most relevant is presented in Table 2. Table 3, Table 4 and Table 5 present the results for LDA, NMF, and LSI, respectively.
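Selecting the most relevant features of a topic amounts to ranking the vocabulary by the topic-term weights. The sketch below assumes that a topic is available as one weight per vocabulary term and that relevance is ranked by the raw weight; for algorithms that can produce negative weights, ranking by absolute value may be more appropriate. The function name and toy values are hypothetical.

```python
def top_features(topic_term_weights, vocabulary, n_top=15):
    """Return the n_top (term, weight) pairs with the largest weights."""
    ranked = sorted(range(len(vocabulary)),
                    key=lambda i: topic_term_weights[i], reverse=True)
    return [(vocabulary[i], topic_term_weights[i]) for i in ranked[:n_top]]


# Toy vocabulary and weights for a single topic.
toy_vocabulary = ["race", "car", "story", "track"]
toy_weights = [0.9, 0.7, 0.1, 0.5]
features = top_features(toy_weights, toy_vocabulary, n_top=3)
```

The returned pairs keep the weight of each term, since the importance of a term is needed later when the topic embedding is computed.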

Table 2.

Number of documents by dominant topic.

Table 3.

The most relevant features for topics generated with LDA.

Table 4.

The most relevant features for topics generated with NMF.

Table 5.

The most relevant features for topics generated with LSI.

Analyzing the results of Table 3, we observe that LDA extracts diverse topics that can be interpreted using the keywords, e.g., Topic 0 is related to racing games, Topic 4 is related to sports games. Furthermore, LDA also manages to determine topics that capture hidden latent semantic patterns that describe polarity, e.g., Topic 9 and Topic 7. We also note that LDA manages to detect and group together documents that have words in languages other than English, e.g., Topic 3, being the only algorithm among the three used in our analysis that picked up on this negligible percentage (0.69%) of comments.

As in the case of LDA, NMF (Table 4) manages to determine topics related to different game genres and polarity. Unlike LDA, NMF manages to discover topics that group together both polarity and game type, e.g., Topic 0, Topic 1, Topic 2. However, NMF fails to discover the comments that use a language other than English.

Finally, LSI (Table 5) manages to determine topics related to the overall game play experience and users’ opinion towards this aspect, e.g., Topic 0, Topic 2, Topic 5, Topic 6. Thus, most of the topics detected by LSI contain similar terms, e.g., game, play, to underline some of the polarity, e.g., good, awesome, beautiful, bad, fun, terrible.

4.5. Topic to Vector

For each topic determined by an algorithm, we build a Topic2Vec as the weighted average of the WordEmbs of the relevant words that describe the topic, where the weight of each word is its importance for the topic. Thus, the size of the Topic2Vec is the same as the size of the used WordEmb.
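The construction can be sketched as below. The text does not fix how the weighted sum is normalized, so the sketch exposes both options as an explicit assumption: dividing by the sum of the importances, or dividing by the number of words, in which case the magnitude of the Topic2Vec grows with the importance values. The function name and toy values are hypothetical.

```python
def topic2vec(weighted_terms, word_vectors, divide_by_weights=True):
    """Importance-weighted average of the embeddings of a topic's terms.

    weighted_terms: list of (term, importance) pairs for one topic.
    divide_by_weights: normalize by the sum of importances (True) or by
    the number of terms (False); which one applies is an assumption.
    """
    known = [(word_vectors[t], w) for t, w in weighted_terms
             if t in word_vectors]
    size = len(known[0][0])
    sums = [sum(vec[d] * w for vec, w in known) for d in range(size)]
    denom = sum(w for _, w in known) if divide_by_weights else len(known)
    return [s / denom for s in sums]


# Hypothetical two-dimensional embeddings and importances.
toy_vectors = {"fun": [1.0, 0.0], "game": [0.0, 1.0]}
toy_topic = [("fun", 3.0), ("game", 1.0)]
by_weights = topic2vec(toy_topic, toy_vectors)
by_count = topic2vec(toy_topic, toy_vectors, divide_by_weights=False)
```

In both variants the result has the dimensionality of the word embeddings; only the scale of the values differs.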

4.6. Document-Topic to Vector

A DocTopic2Vec is created by concatenating the Doc2Vec with the Topic2Vec of the dominant topic for a document. The same WordEmb is used when constructing the Doc2Vec and Topic2Vec embeddings that are concatenated for building the DocTopic2Vec embedding. Thus, the size of the DocTopic2Vec is twice the size of the used WordEmb.
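The concatenation can be sketched as follows, assuming that the dominant topic of a document is the one with the largest weight in its document-topic distribution. The function name and the toy values are hypothetical.

```python
def doctopic2vec(doc_vector, topic_weights, topic_vectors):
    """Concatenate a Doc2Vec with the Topic2Vec of its dominant topic."""
    # Dominant topic: index of the largest document-topic weight (assumed).
    dominant = max(range(len(topic_weights)), key=lambda i: topic_weights[i])
    return list(doc_vector) + list(topic_vectors[dominant])


# Toy example with embeddings of size 2 and three topics.
toy_doc_vector = [0.1, 0.2]
toy_topic_weights = [0.2, 0.7, 0.1]
toy_topic_vectors = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
embedding = doctopic2vec(toy_doc_vector, toy_topic_weights, toy_topic_vectors)
```

The first half of the result carries the local context of the review and the second half carries the global context of its dominant topic.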

4.7. Classification Algorithms

The classification experiments with LogReg are computed using both Doc2Vec and DocTopic2Vec. For this model to achieve a stronger regularization, we set the inverse regularization parameter C to .

Using the GRU units, we built multiple models:

- (1) One with a single GRU Layer;
- (2) One with three GRU layers (3GRU);
- (3) One with a single BiGRU Layer, and
- (4) One with three BiGRU layers (3BiGRU).

All these models have a final Dense Layer used for the final classification. Each GRU layer is initialized with 128 units and a dropout of 0.2. The activation for the update gate is the sigmoid function (Equation (13)) and for the reset gate the hyperbolic tangent function (Equation (14)). The sigmoid function takes values in the interval (0, 1) and is used for models that utilize the probability of a variable. The hyperbolic tangent function takes values in the interval (−1, 1) and is mainly used to better differentiate between strongly negative values and 0. The Dense output layer uses the softmax activation function (Equation (15)) and has a dimension of three, corresponding to the number of possible values for the polarity. For multiclass classification, the softmax function is a generalized logistic activation function used to normalize the output of a network to a probability distribution over the predicted output classes. In our case, the number of classes is three, as we are predicting the positive, negative, or neutral polarity.
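For reference, the standard definitions of the three activation functions are given below. They are assumed to coincide with Equations (13)–(15); the symbols x, z, and k (the number of classes, three in our case) are chosen here for illustration.

```latex
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}, \qquad
\operatorname{softmax}(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{k} e^{z_j}},
\quad i = 1, \dots, k
```

The softmax outputs are positive and sum to one, which is what allows them to be read as a probability distribution over the polarity classes.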

Using the LSTM units, we built multiple models to mirror the GRU architectures:

- (1) One with a single LSTM Layer;
- (2) One with three LSTM layers (3LSTM);
- (3) One with a single BiLSTM Layer, and
- (4) One with three BiLSTM layers (3BiLSTM).

We keep the same initialization parameters for the LSTM and Dense layers as for the GRU architectures. The activation for the input, output, and forget gates is the sigmoid function, while for the hidden state and the cell input activation vector it is the hyperbolic tangent function. The LSTM models use the same loss function and optimizer parameters.

As CNN architectures proved to be an asset for text classification [10], we build a CNN Sentiment Analysis architecture with three layers: CNN, MaxPooling, and Dense. We initialize the filters to 64 and the kernel size to half the size of the input vector, i.e., Doc2Vec or DocTopic2Vec. We also add the CNN and MaxPooling layers on top of the four GRU and four LSTM architectures to determine if convolutions on top of recurrent layers improve the classification as in [9]. Moreover, we implement the Deep Learning Architecture presented in [3] using the same configuration. In the experiments, we name this architecture 4CNN-BiLSTM.

For all the Deep Neural Network Architectures, we use a batch size of 5000 to accurately estimate the gradient error, at the cost of slowing the convergence of the learning process. The loss is computed using categorical cross entropy and the applied optimizer is Adam. Each network is trained for a maximum of 200 epochs, using an automated stopping mechanism that stops the execution if the accuracy is not improved during 20 successive epochs.
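The stopping rule can be sketched independently of any Deep Learning framework. The function below replays a list of per-epoch accuracies and reports when training would stop; which accuracy is monitored (training or validation) is not fixed by this sketch, and the function name and the simulated values are hypothetical.

```python
def epochs_until_stop(accuracy_per_epoch, max_epochs=200, patience=20):
    """Return (last epoch run, best accuracy) under early stopping."""
    best, best_epoch, last_epoch = float("-inf"), 0, 0
    for epoch, acc in enumerate(accuracy_per_epoch[:max_epochs], start=1):
        last_epoch = epoch
        if acc > best:
            best, best_epoch = acc, epoch
        elif epoch - best_epoch >= patience:
            # No improvement during `patience` successive epochs.
            break
    return last_epoch, best


# Simulated run: the accuracy peaks at epoch 2 and never improves again.
simulated = [0.50, 0.60] + [0.55] * 30
stopped_at, best_accuracy = epochs_until_stop(simulated)
```

In this simulated run the best accuracy is reached at epoch 2, so training stops at epoch 22, after 20 successive epochs without improvement.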

4.8. Implementation

The entire pipeline is implemented in Python 3.7. For named entity recognition and lemmatization, we used the en_core_web_sm model from the spaCy [42] package. We use the gensim [43] and python-glove [44] packages for the WordEmbs and the scikit-learn [45] package for the TFIDF vectorization, LogReg classifier, and Topic Modeling algorithms. All the DNN Architectures are implemented in Keras [46] with TensorFlow [47] as the tensor backend engine. The experiments are run on an NVIDIA® DGX Station™. The code is freely available online on GitHub at https://github.com/cipriantruica/DocTopic2Vec.

4.9. Results

Table 6, Table 7, Table 8, Table 9 and Table 10 present the average accuracy obtained after 10 distinct training experiments. As a baseline for the embeddings, we use the Doc2Vec, while, for classification, we use LogReg. We use Stratified Cross-Validation to split the dataset into 80–20% training–testing sets with random seeding, i.e., 72,132 reviews for training and 18,033 for testing.

Table 6.

Experiments using the Word2Vec CBOW embeddings of size 256. (Note: Bold marks the best accuracy obtained for the combination of Algorithm and Doc2Vec/DocTopic2Vec).

Table 7.

Experiments using the Word2Vec Skip-Gram embeddings of size 128. (Note: Bold marks the best accuracy obtained for the combination of Algorithm and Doc2Vec/DocTopic2Vec).

Table 8.

Experiments using the FastText CBOW embeddings of size 256. (Note: Bold marks the best accuracy obtained for the combination of Algorithm and Doc2Vec/DocTopic2Vec).

Table 9.

Experiments using the FastText Skip-Gram embeddings of size 128. (Note: Bold marks the best accuracy obtained for the combination of Algorithm and Doc2Vec/DocTopic2Vec).

Table 10.

Experiments using the GloVe embeddings of size 128. (Note: Bold marks the best accuracy obtained for the combination of Algorithm and Doc2Vec/DocTopic2Vec).

The proposed DocTopic2Vec significantly improves the detection of polarity at the document level, for both NMF and LSI, over the simple implementation of Doc2Vec, by over 5%. In the case of LDA, we observe a decrease in accuracy. When using the Word2Vec CBOW model to construct DocTopic2Vec (Table 6), we obtain the best results and the overall best accuracy (i.e., 0.7718) for all our experiments with the GRU architecture and LSI topic model. For the Word2Vec Skip-Gram (Table 7), the CNN-BiGRU architecture with the DocTopic2Vec for NMF obtains the best results. The CNN-BiGRU architecture also achieves the best results when building the DocTopic2Vec using FastText and LSI (Table 8 and Table 9). When using GloVe and the LSI topic model to construct the DocTopic2Vec (Table 10), the best results are obtained with the novel CNN-3BiGRU architecture.

The experimental results show that the polarity detection accuracy is improved if the Topic Modeling algorithms meet at least one of the following two conditions:

- (1) The document to dominant topic distribution is balanced and manages to group context-related documents together;
- (2) The importance of the terms that belong to the topic has a small value range in order to enhance the document vectorization with the context-dependent terms.

Thus, depending on the used Topic Modeling algorithm, the overall performance of the proposed model changes.

LSI manages to meet the first condition needed to improve the accuracy of the polarity detection task. The importance of the relevant keywords for a topic detected with LSI has values ≤1. These values influence the Topic2Vec values (Table 5). Thus, the final DocTopic2Vec’s values remain balanced for the entire encoding, and the context extracted through Topic Modeling in conjunction with the distribution of document to dominant topic improves the classification task (Table 2).

NMF satisfies both conditions needed to improve the accuracy of the Sentiment Analysis task. For NMF, the importance of the relevant keywords is not normalized and can exceed 1, but the majority of the values are still ≤1 (Table 4). When building the Topic2Vec for NMF, some dimensions have higher values, which adds more importance to the context-related words. During the training of the model, the higher values introduce bias to these dimensions in the classification task and manage to influence the accuracy of detecting the document-level polarity by better grouping documents together. Moreover, the more balanced distribution of documents to the dominant topic obtained by NMF (Table 2) also influences the context-based grouping of documents.

LDA does not meet either of the two conditions needed for an improved polarity detection model; thus, the accuracy decreases. When using LDA to build the DocTopic2Vec, LogReg and RNN results are influenced by the importance of a word to a topic and the distribution of the document to the dominant topic. The relevant words for some topics have high importance (Table 3). Thus, the Topic2Vec values are larger than the Doc2Vec values. Because the distribution of document to dominant topic is not balanced (Table 2), the Topic2Vec with the highest values is assigned to the majority of documents. When building the DocTopic2Vec by concatenation, the second half of the embedding and the imbalanced distribution of the document to dominant topic influence the classification task, and the results are similar to flipping a coin.

For the CNN models with bidirectional RNNs, we obtain better results for DocTopic2Vec constructed with LDA, as the Deep Neural Network uses convolutions to select values. Therefore, the impact of the second half of the embedding, i.e., the Topic2Vec values, as well as of the imbalanced document grouping, is minimized, and for some tests, we obtain better results.

We observe that, on average, we obtain better results when using bidirectional models for both the fully connected (e.g., BiGRU, 3BiGRU, etc.) and convolutional architectures (e.g., CNN-BiGRU, CNN-3BiGRU, etc.). We note that, on average, the proposed new architectures perform better for this task. Furthermore, our models outperform the state-of-the-art 4CNN-BiLSTM architecture. Stacking multiple layers of RNNs (e.g., 3GRU, 3BiGRU, 3LSTM, etc.) with or without using a CNN brings very little improvement in accuracy over the architectures with a single layer. In case they are better, they only bring a ∼ improvement. The same observations can be made for the architectures that stack multiple CNNs, i.e., 4CNN-BiLSTM.

As a final remark, we compare our results with the results obtained on the same dataset in [48] and in [40]. Our proposed Deep Neural Network architectures outperform with ∼ the Transformer-based models in [40] that obtained an accuracy of only 0.67.

5. Conclusions

In this paper, we propose DocTopic2Vec, a novel embedding that incorporates contextual cues through the use of Topic Modeling. We use a dataset with game reviews to learn different WordEmb models, i.e., Word2Vec, FastText, and GloVe. Applying the different WordEmbs, we create Doc2Vecs for each review and Topic2Vecs for each topic extracted by LDA, NMF, and LSI. A DocTopic2Vec is constructed for each review as the concatenation of its Doc2Vec with the Topic2Vec for its dominant topic. Both Doc2Vec and Topic2Vec use the same WordEmb when they are concatenated into the DocTopic2Vec. To prove the efficiency of the newly proposed DocTopic2Vec in the task of Document-Level Sentiment Analysis, we implement different Deep Neural Network (DNN) Architectures using combinations of fully connected (i.e., GRU, LSTM, BiGRU, BiLSTM, Dense) and convolutional (CNN) layers. Furthermore, we propose six novel Convolutional-based Recurrent DNN Architectures that outperform the state-of-the-art 4CNN-BiLSTM architecture [3].

The experimental results show an improvement in accuracy in determining the document-level polarity of ∼ when employing the newly proposed context-enhanced DocTopic2Vec for the NMF- and LSI-based topic embeddings over the baseline, i.e., Doc2Vec with LogReg. These embeddings manage to improve the classification by:

- (1) Grouping context-related documents together through the document to dominant topic distribution;
- (2) Enhancing the document vectorization with the importance of context-dependent terms that belong to the topic.

Furthermore, we observe that if the Topic Modeling algorithm does not meet these requirements, the polarity detection accuracy drops significantly, as in the case of LDA. Finally, we want to note that our proposed CNN-(Bi)RNN architectures outperform the best performing state-of-the-art model with ∼ applied on the same dataset in [40].

By combining Topic Modeling with the Sentiment Analysis task and by obtaining better results, we manage to answer and to fulfill objective . We answer by adding local and global context through the novel DocTopic2Vec embedding and improving the accuracy of detecting the polarity of textual data, thus achieving objective . By introducing novel CNN-(Bi)RNN Deep Learning Architectures that improve the accuracy of the Sentiment Analysis task, we answer our final research question and complete objective .

As future work, we aim to test other embeddings, e.g., Mittens [49] which learns domain-specific representations, MOE [50] which manages word misspellings, BERT [22] which considers the word’s occurrence and position when computing its context. Furthermore, we plan to explore how the WordEmbs used in this paper could be used with other neural networks, such as Hierarchical Attention Networks or Deep Belief Networks.

Author Contributions

Conceptualization, C.-O.T., E.-S.A. and M.-L.Ș.; methodology, C.-O.T., E.-S.A. and M.-L.Ș.; software, C.-O.T., E.-S.A. and M.-L.Ș.; validation, C.-O.T., E.-S.A. and M.-L.Ș.; formal analysis, C.-O.T., E.-S.A., M.-L.Ș. and A.P.; investigation, C.-O.T., E.-S.A., M.-L.Ș. and A.P.; resources, C.-O.T. and E.-S.A.; data curation, E.-S.A. and M.-L.Ș.; writing—original draft preparation, C.-O.T., E.-S.A., M.-L.Ș. and A.P.; writing—review and editing, C.-O.T., E.-S.A. and A.P.; visualization, C.-O.T. and E.-S.A.; supervision, C.-O.T. and E.-S.A.; project administration, C.-O.T., E.-S.A. and A.P.; funding acquisition, A.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

The research presented in this paper was supported in part by the German Federal Ministry of Education and Research (BMBF) through the project QURATOR (Grant No. 03WKDA1F) and PANQURA (Grant No. 03COV03F), and the German Academic Exchange Service (DAAD) through the projects “Deep-Learning Anomaly Detection for Human and Automated Users Behavior” (Grant No. 91809358) and “AWAKEN: content-Aware and netWork-Aware faKE News mitigation” (Grant No. 91809005).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| WordEmb | Word Embedding |
| Word2Vec | Word to Vector |
| GloVe | Global Vectors |
| Doc2Vec | Document to Vector |
| CBOW | Continuous Bag-of-Words |
| Topic2Vec | Topic to Vector |
| DocTopic2Vec | Document-Topic to Vector |
| DNN | Deep Neural Networks |
| RNN | Recurrent Neural Networks |
| BiRNN | Bidirectional Recurrent Neural Networks |
| GRU | Gated Recurrent Unit |
| BiGRU | Bidirectional Gated Recurrent Unit |
| LSTM | Long Short-Term Memory |
| BiLSTM | Bidirectional Long Short-Term Memory |
| CNN | Convolutional Neural Networks |
| LDA | Latent Dirichlet Allocation |
| LSI | Latent Semantic Indexing |
| NMF | Non-Negative Matrix Factorization |
| LogReg | Logistic Regression |
| TF | Term Frequency |
| IDF | Inverse Document Frequency |
| TFIDF | Term Frequency–Inverse Document Frequency |
| ABCDM | Attention-based Bidirectional CNN-RNN Deep Model |
| TP | True Positive |
| FP | False Positive |
| TN | True Negative |
| FN | False Negative |

References

- Naseem, U.; Razzak, I.; Musial, K.; Imran, M. Transformer based Deep Intelligent Contextual Embedding for Twitter sentiment analysis. Future Gener. Comput. Syst. 2020, 113, 58–69. [Google Scholar] [CrossRef]

- Rezaeinia, S.M.; Rahmani, R.; Ghodsi, A.; Veisi, H. Sentiment analysis based on improved pre-trained word embeddings. Expert Syst. Appl. 2019, 117, 139–147. [Google Scholar] [CrossRef]

- Rhanoui, M.; Mikram, M.; Yousfi, S.; Barzali, S. A CNN-BiLSTM Model for Document-Level Sentiment Analysis. Mach. Learn. Knowl. Extr. 2019, 1, 832–847. [Google Scholar] [CrossRef] [Green Version]

- Yusof, N.N.; Mohamed, A.; Abdul-Rahman, S. Context Enrichment Model Based Framework for Sentiment Analysis. In International Conference on Soft Computing in Data Science; Springer: Singapore, 2019; pp. 325–335. [Google Scholar] [CrossRef]

- Yadav, A.; Vishwakarma, D.K. Sentiment analysis using deep learning architectures: A review. Artif. Intell. Rev. 2020, 53, 4335–4385. [Google Scholar] [CrossRef]

- Vijayaragavan, P.; Ponnusamy, R.; Aramudhan, M. An optimal support vector machine based classification model for sentimental analysis of online product reviews. Future Gener. Comput. Syst. 2020, 111, 234–240. [Google Scholar] [CrossRef]

- Nemes, L.; Kiss, A. Social media sentiment analysis based on COVID-19. J. Inf. Telecommun. 2020, 5, 1–15. [Google Scholar] [CrossRef]

- Mikolov, T.; Karafiát, M.; Burget, L.; Černockỳ, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the Conference of the International Speech Communication Association, Chiba, Japan, 26–30 September 2010; pp. 1045–1048. [Google Scholar]

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1746–1751. [Google Scholar] [CrossRef] [Green Version]

- Wang, R.; Li, Z.; Cao, J.; Chen, T.; Wang, L. Convolutional Recurrent Neural Networks for Text Classification. In Proceedings of the International Joint Conference on Neural Networks, Budapest, Hungary, 14–19 July 2019; pp. 1–6. [Google Scholar] [CrossRef]

- Le, Q.; Mikolov, T. Distributed Representations of Sentences and Documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196. [Google Scholar]

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent dirichlet allocation. J. Mach. Learn. Res. 2003, 3, 993–1022. [Google Scholar]

- Arora, S.; Ge, R.; Moitra, A. Learning Topic Models—Going beyond SVD. In Proceedings of the Annual Symposium on Foundations of Computer Science, New Brunswick, NJ, USA, 20–23 October 2012; pp. 1–10. [Google Scholar] [CrossRef] [Green Version]

- Deerwester, S.; Dumais, S.T.; Furnas, G.W.; Landauer, T.K.; Harshman, R. Indexing by latent semantic analysis. J. Am. Soc. Inf. Sci. 1990, 41, 391–407. [Google Scholar] [CrossRef]

- D’Andrea, A.; Ferri, F.; Grifoni, P.; Guzzo, T. Approaches, tools and applications for sentiment analysis implementation. Int. J. Comput. Appl. 2015, 125, 26–33. [Google Scholar] [CrossRef]

- Aziz, M.N.; Firmanto, A.; Fajrin, A.M.; Ginardi, R.H. Sentiment Analysis and Topic Modelling for Identification of Government Service Satisfaction. In Proceedings of the International Conference on Information Technology, Computer, and Electrical Engineering, Semarang, Indonesia, 27–28 September 2018; pp. 125–130. [Google Scholar] [CrossRef]

- Yoon, H.G.; Kim, H.; Kim, C.O.; Song, M. Opinion polarity detection in Twitter data combining shrinkage regression and topic modeling. J. Inf. 2016, 10, 634–644. [Google Scholar] [CrossRef]

- Usama, M.; Ahmad, B.; Song, E.; Hossain, M.S.; Alrashoud, M.; Muhammad, G. Attention-based sentiment analysis using convolutional and recurrent neural network. Future Gener. Comput. Syst. 2020, 113, 571–578. [Google Scholar] [CrossRef]

- Basiri, M.E.; Nemati, S.; Abdar, M.; Cambria, E.; Acharya, U.R. ABCDM: An Attention-based Bidirectional CNN-RNN Deep Model for sentiment analysis. Future Gener. Comput. Syst. 2021, 115, 279–294. [Google Scholar] [CrossRef]

- García-Pablos, A.; Cuadros, M.; Rigau, G. W2VLDA: Almost unsupervised system for Aspect Based Sentiment Analysis. Expert Syst. Appl. 2018, 91, 127–137. [Google Scholar] [CrossRef] [Green Version]

- Al-Janabi, O.M.; Malim, N.H.A.H.; Cheah, Y.N. Aspect Categorization Using Domain-Trained Word Embedding and Topic Modelling. In Advances in Electronics Engineering; Springer: Singapore, 2020; pp. 191–198. [Google Scholar] [CrossRef]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar] [CrossRef]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:cs.CL/1907.11692. [Google Scholar]

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. In Proceedings of the International Conference on Learning Representations, Virtual Conference, 26 April–1 May 2020; pp. 1–17. [Google Scholar]

- Biswas, E.; Karabulut, M.E.; Pollock, L.; Vijay-Shanker, K. Achieving Reliable Sentiment Analysis in the Software Engineering Domain using BERT. In Proceedings of the 2020 IEEE International Conference on Software Maintenance and Evolution (ICSME), Adelaide, Australia, 28 September–2 October 2020; pp. 162–173. [Google Scholar] [CrossRef]

- Zhao, L.; Li, L.; Zheng, X.; Zhang, J. A BERT based Sentiment Analysis and Key Entity Detection Approach for Online Financial Texts. In Proceedings of the 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), Dalian, China, 5–7 May 2021; pp. 1233–1238. [Google Scholar] [CrossRef]

- Peters, M.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep Contextualized Word Representations. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1, pp. 2227–2237. [Google Scholar] [CrossRef] [Green Version]

- Alasadi, S.A.; Bhaya, W.S. Review of data preprocessing techniques in data mining. J. Eng. Appl. Sci. 2017, 12, 4102–4107. [Google Scholar] [CrossRef]

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. In Proceedings of the International Conference on Learning Representations, Scottsdale, AZ, USA, 2–4 May 2013; pp. 1–12. [Google Scholar]

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146. [Google Scholar] [CrossRef] [Green Version]

- Mikolov, T.; Grave, E.; Bojanowski, P.; Puhrsch, C.; Joulin, A. Advances in Pre-Training Distributed Word Representations. In Proceedings of the International Conference on Language Resources and Evaluation, Miyazaki, Japan, 7–12 May 2018; pp. 52–55. [Google Scholar]

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543. [Google Scholar] [CrossRef]

- Niu, L.; Dai, X.; Zhang, J.; Chen, J. Topic2Vec: Learning distributed representations of topics. In Proceedings of the International Conference on Asian Language Processing, Suzhou, China, 24–25 October 2015; pp. 193–196. [Google Scholar] [CrossRef]

- Wang, Y.X.; Zhang, Y.J. Nonnegative matrix factorization: A comprehensive review. IEEE Trans. Knowl. Data Eng. 2012, 25, 1336–1353. [Google Scholar] [CrossRef]

- Chen, Y.; Zhang, H.; Liu, R.; Ye, Z.; Lin, J. Experimental explorations on short text topic mining between LDA and NMF based Schemes. Knowl.-Based Syst. 2019, 163, 1–13. [Google Scholar] [CrossRef]

- Petrescu, A.; Truica, C.O.; Apostol, E.S. Sentiment Analysis of Events in Social Media. In Proceedings of the IEEE International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 5–7 September 2019; pp. 143–149.

- Mitroi, M.; Truică, C.O.; Apostol, E.S.; Florea, A.M. Sentiment Analysis using Topic-Document Embeddings. In Proceedings of the 2020 IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2020; pp. 75–82.

- Yi, D.; Ji, S.; Bu, S. An Enhanced Optimization Scheme Based on Gradient Descent Methods for Machine Learning. Symmetry 2019, 11, 942.

- Secui, A.; Sirbu, M.D.; Dascalu, M.; Crossley, S.; Ruseti, S.; Trausan-Matu, S. Expressing Sentiments in Game Reviews. In Proceedings of the 17th International Conference on Artificial Intelligence: Methodology, Systems, and Applications (AIMSA 2016), Varna, Bulgaria, 7–10 September 2016; pp. 352–355.

- Ruseti, S.; Sirbu, M.D.; Calin, M.A.; Dascalu, M.; Trausan-Matu, S.; Militaru, G. Comprehensive Exploration of Game Reviews Extraction and Opinion Mining Using NLP Techniques. In Advances in Intelligent Systems and Computing; Springer: Singapore, 2019; pp. 323–331.

- Linzen, T. Issues in evaluating semantic spaces using word analogies. In Proceedings of the Workshop on Evaluating Vector-Space Representations for NLP, Berlin, Germany, 7–12 August 2016; pp. 13–18.

- Honnibal, M.; Montani, I. spaCy 3: Industrial-Strength Natural Language Processing. 2020. Available online: https://spacy.io/ (accessed on 13 October 2021).

- Řehůřek, R.; Sojka, P. Software Framework for Topic Modelling with Large Corpora. In Proceedings of the LREC 2010 Workshop on New Challenges for NLP Frameworks, Valletta, Malta, 22 May 2010; pp. 45–50.

- Kula, M. glove-python. 2020. Available online: https://github.com/maciejkula/glove-python (accessed on 13 October 2021).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 8 October 2021).

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: https://www.tensorflow.org/ (accessed on 13 October 2021).

- Sirbu, D.; Secui, A.; Dascalu, M.; Crossley, S.A.; Ruseti, S.; Trausan-Matu, S. Extracting Gamers’ Opinions from Reviews. In Proceedings of the 2016 18th International Symposium on Symbolic and Numeric Algorithms for Scientific Computing (SYNASC), Timisoara, Romania, 24–27 September 2016.

- Dingwall, N.; Potts, C. Mittens: An Extension of GloVe for Learning Domain-Specialized Representations. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, New Orleans, LA, USA, 1–6 June 2018; pp. 212–217.

- Piktus, A.; Edizel, N.B.; Bojanowski, P.; Grave, E.; Ferreira, R.; Silvestri, F. Misspelling Oblivious Word Embeddings. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 3226–3234.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).