Towards Generating and Evaluating Iconographic Image Captions of Artworks

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Datasets

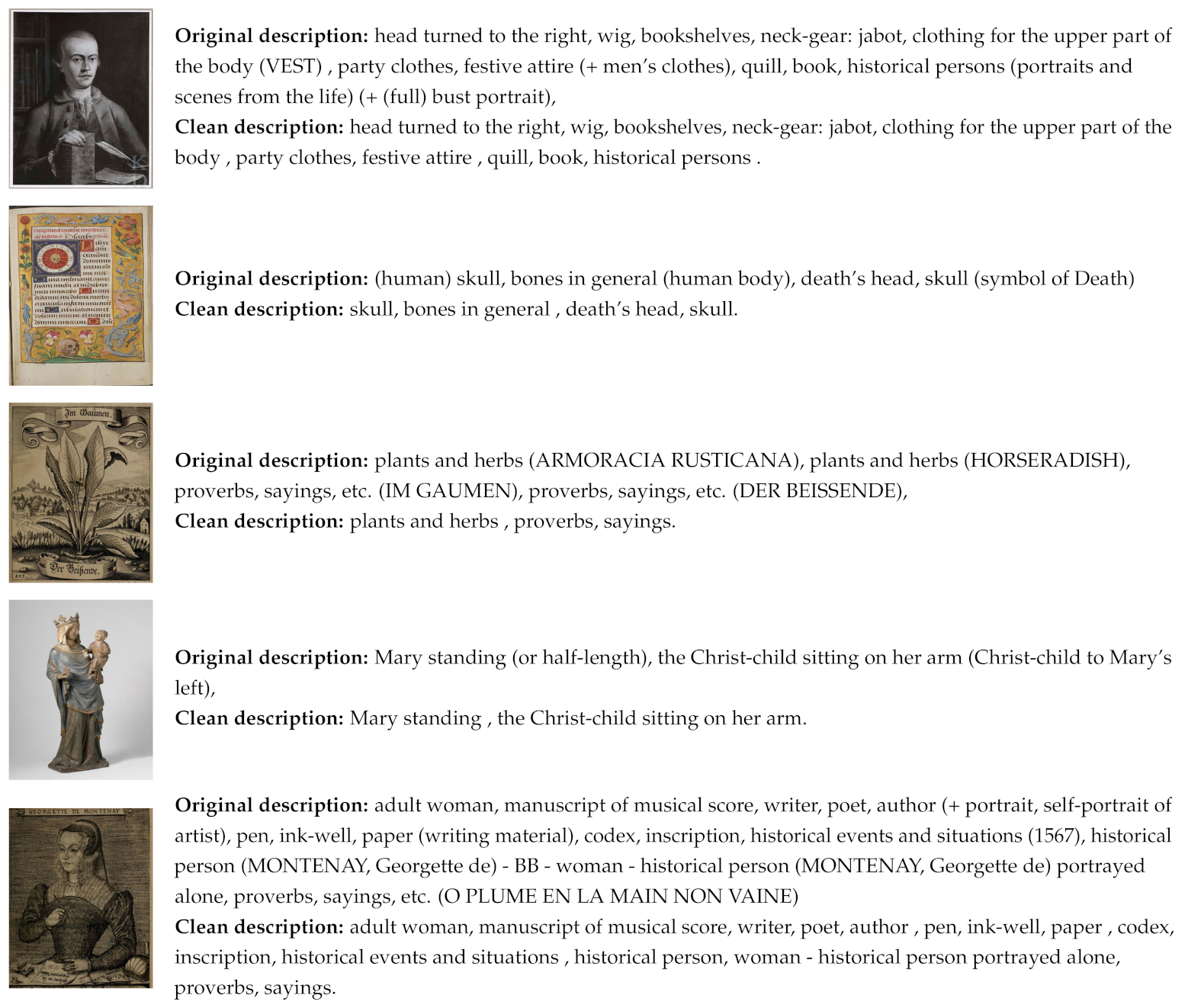

3.1.1. Iconclass Caption Dataset for Training and Evaluation

3.1.2. WikiArt Dataset for Evaluation

3.2. Image Captioning Model

3.3. Evaluation of the Generated Captions

4. Results and Discussion

4.1. Quantitative Results

4.2. Qualitative Analysis

4.2.1. Iconclass Caption Test Set

4.2.2. WikiArt Dataset

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Evaluation Metric | Value (×100) |
|---|---|
| BLEU-1 | |
| BLEU-2 | |
| BLEU-3 | |
| BLEU-4 | |
| METEOR | |
| ROUGE-L | |
| CIDEr | |
| CLIP-S * | |
| RefCLIP-S * | |
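
The last two rows of the table above are embedding-based metrics. As a minimal sketch only, the following shows how CLIP-S and RefCLIP-S can be computed under the published CLIPScore definition: CLIP-S is a rescaled, clipped cosine similarity between caption and image embeddings (weight w = 2.5), and RefCLIP-S is the harmonic mean of CLIP-S and the best clipped caption-to-reference similarity. The function names are hypothetical, and the embeddings are assumed to have been produced beforehand by a CLIP model. This is not the evaluation code used for the reported values.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two 1-D embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def clip_s(caption_emb, image_emb, w=2.5):
    """Reference-free score: w * max(cos(caption, image), 0)."""
    return w * max(cosine(caption_emb, image_emb), 0.0)


def ref_clip_s(caption_emb, image_emb, reference_embs, w=2.5):
    """Harmonic mean of CLIP-S and the best clipped caption-reference similarity."""
    s = clip_s(caption_emb, image_emb, w)
    r = max(max(cosine(caption_emb, ref) for ref in reference_embs), 0.0)
    if s == 0.0 or r == 0.0:
        return 0.0  # harmonic mean is zero if either term is zero
    return 2.0 * s * r / (s + r)


if __name__ == "__main__":
    # Toy 2-D vectors standing in for real CLIP embeddings (made-up values).
    caption = [1.0, 0.0]
    image = [1.0, 1.0]
    references = [[0.5, 0.5], [1.0, 0.2]]
    print(100 * clip_s(caption, image))                  # scaled by 100, as in the table
    print(100 * ref_clip_s(caption, image, references))
```

Multiplying by 100 matches the "Value (×100)" column convention of the table.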

| Standard Metric | Correlation with CLIP-S | Correlation with RefCLIP-S |
|---|---|---|
| BLEU-1 | 0.355 | 0.686 |
| BLEU-2 | 0.314 | 0.647 |
| BLEU-3 | 0.281 | 0.629 |
| BLEU-4 | 0.236 | 0.602 |
| METEOR | 0.315 | 0.669 |
| ROUGE-L | 0.298 | 0.647 |
| CIDEr | 0.315 | 0.656 |
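
A correlation table of this kind can be produced by scoring every generated caption with each metric and correlating the resulting score lists. The sketch below assumes a Pearson coefficient computed over per-caption scores; the table itself does not state which coefficient was used, so this is an illustrative assumption. The function name and the toy score values are hypothetical.

```python
import numpy as np


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("score lists must have the same length")
    return float(np.corrcoef(x, y)[0, 1])


if __name__ == "__main__":
    # Made-up per-caption scores for illustration only (not results from the paper).
    bleu1_scores = [0.10, 0.35, 0.20, 0.60, 0.45]
    clip_s_scores = [0.55, 0.62, 0.58, 0.71, 0.60]
    print(round(pearson(bleu1_scores, clip_s_scores), 3))
```

A rank-based coefficient such as Spearman's could be substituted if the per-caption scores are far from linearly related.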

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cetinic, E. Towards Generating and Evaluating Iconographic Image Captions of Artworks. J. Imaging 2021, 7, 123. https://doi.org/10.3390/jimaging7080123
