Using Computer Vision to Track Facial Color Changes and Predict Heart Rate

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Data Collection

3.2. Data Processing

3.2.1. Pre-Processing

3.2.2. Face Detection

3.2.3. Normalization

3.2.4. Color Space Conversion

3.2.5. Patch Averaging

3.2.6. Median Filter

3.2.7. Average Filter

3.3. Statistical Analysis

4. Results

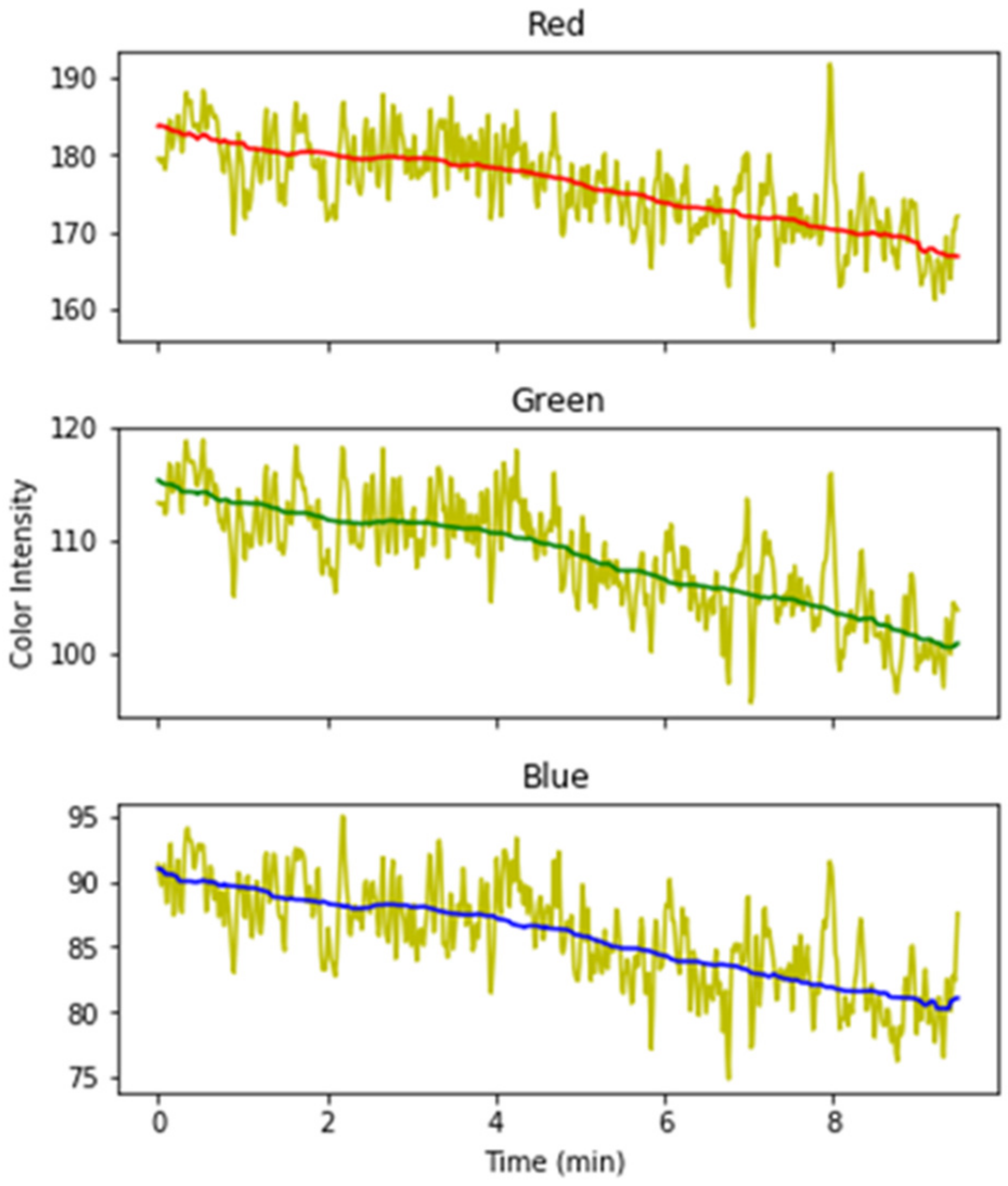

4.1. Color Intensity vs. HR Plot

4.2. Multivariate Autoregression Analysis

4.3. Polynomial Support Vector Regression

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Participant ID | Gender | Age (years) | Weight (kg) | Height (cm) | Initial HR (bpm) | Final HR (bpm) | Duration (mm:ss) |
|---|---|---|---|---|---|---|---|
| Participant 1 | Male | 22 | 64.2 | 172 | 93 | 191 | 9:30 |
| Participant 2 | Male | 33 | 66.9 | 177 | 70 | 180 | 16:00 |
| Participant 3 | Female | 19 | 64.2 | 177 | 87 | 191 | 9:05 |
| Participant 4 | Male | 36 | 83.2 | 182 | 91 | 191 | 15:00 |
| Participant 5 | Female | 24 | 66.6 | 170 | 97 | 184 | 9:20 |
| Participant 6 | Female | 29 | 47 | 157 | 114 | 193 | 8:00 |
| Participant 7 | Male | 33 | 83 | 186 | 101 | 180 | 16:00 |
| Participant 8 | Male | 22 | 90.7 | 195 | 101 | 201 | 12:00 |
| Participant 9 | Male | 24 | 87.3 | 194 | 115 | 188 | 11:00 |

| Color | Sub1 | Sub2 | Sub3 | Sub4 | Sub5 | Sub6 | Sub7 | Sub8 | Sub9 | AVG |
|---|---|---|---|---|---|---|---|---|---|---|
| RGB | 0.31 | 0.35 | 0.42 | 0.5 | 0.46 | 0.35 | 0.54 | 0.25 | 0.24 | 0.275 |
| HSV | 0.31 | 0.33 | 0.38 | 0.35 | 0.38 | 0.29 | 0.51 | 0.22 | 0.2 | 0.255 |
| YCBCR | 0.33 | 0.37 | 0.46 | 0.42 | 0.43 | 0.36 | 0.45 | 0.29 | 0.31 | 0.32 |
| LAB | 0.32 | 0.34 | 0.42 | 0.45 | 0.39 | 0.42 | 0.59 | 0.3 | 0.21 | 0.265 |
| YUV | 0.32 | 0.38 | 0.41 | 0.48 | 0.48 | 0.43 | 0.61 | 0.23 | 0.3 | 0.31 |
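
The AVG column can be cross-checked by recomputing each row's arithmetic mean over Sub1 to Sub9. The sketch below does this in Python with the per-subject values copied from the table above; the names `scores` and `row_mean` are hypothetical and not part of the original work. Under this assumption the recomputed means (about 0.38 for RGB, for instance) differ from the listed AVG values, which coincide with the mean of Sub1 and Sub9 alone, so the AVG column is best read with that in mind.

```python
# Per-subject values (Sub1..Sub9) copied from the table above.
scores = {
    "RGB":   [0.31, 0.35, 0.42, 0.50, 0.46, 0.35, 0.54, 0.25, 0.24],
    "HSV":   [0.31, 0.33, 0.38, 0.35, 0.38, 0.29, 0.51, 0.22, 0.20],
    "YCBCR": [0.33, 0.37, 0.46, 0.42, 0.43, 0.36, 0.45, 0.29, 0.31],
    "LAB":   [0.32, 0.34, 0.42, 0.45, 0.39, 0.42, 0.59, 0.30, 0.21],
    "YUV":   [0.32, 0.38, 0.41, 0.48, 0.48, 0.43, 0.61, 0.23, 0.30],
}


def row_mean(values):
    """Arithmetic mean of one table row."""
    return sum(values) / len(values)


# Mean over all nine subjects, per color model.
means = {model: row_mean(vals) for model, vals in scores.items()}

# Mean of the first and last subject only, for comparison with the AVG column.
first_last = {model: (vals[0] + vals[-1]) / 2 for model, vals in scores.items()}

for model in scores:
    print(f"{model}: nine-subject mean = {means[model]:.3f}, "
          f"Sub1/Sub9 mean = {first_last[model]:.3f}")
```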

| Color Model | RMSE | F-Value | R-Square Value |
|---|---|---|---|
| RGB | 7.85 | F(3,6060) = 4633, p = 0.006 | 0.70 |
| HSV | 6.75 | F(3,6060) = 7360, p < 0.001 | 0.78 |
| YCBCR | 7.84 | F(3,6060) = 3839, p < 0.001 | 0.92 |
| LAB | 7.78 | F(3,6060) = 6905, p < 0.001 | 0.70 |
| YUV | 7.73 | F(3,6060) = 3651, p < 0.001 | 0.94 |

| Color Model | Channel | p-Value | VIF |
|---|---|---|---|
| RGB | R | 0.0000 | 2.54 |
| | G | 0.0000 | 9.25 |
| | B | 0.00518 | 8.56 |
| HSV | H | 0.2796 | 1.27 |
| | S | 0.0000 | 1.04 |
| | V | 0.0000 | 1.19 |
| YCBCR | Y | 0.0000 | 3.56 |
| | Cb | 0.0000 | 3.48 |
| | Cr | 0.0000 | 5.14 |
| LAB | L | 0.0552 | 5.24 |
| | a | 0.0000 | 6.14 |
| | b | 0.0087 | 2.85 |
| YUV | Y | 0.0000 | 2.45 |
| | U | 0.0041 | 4.15 |
| | V | 0.0000 | 5.32 |
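
For readers unfamiliar with the VIF column, the variance inflation factor of a predictor is conventionally defined as 1 / (1 - R²), where R² comes from regressing that predictor on the remaining ones. The sketch below illustrates this textbook definition with NumPy; it is not the authors' code. The function name `vif` and the synthetic three-channel data are assumptions made purely for illustration and do not reproduce the values in the table.

```python
import numpy as np


def vif(X):
    """Variance inflation factor for each column of X (textbook definition)."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    factors = []
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress column j on the other columns plus an intercept.
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        ss_res = float(resid @ resid)
        ss_tot = float(((y - y.mean()) ** 2).sum())
        r2 = 1.0 - ss_res / ss_tot
        factors.append(1.0 / (1.0 - r2))
    return np.array(factors)


# Synthetic stand-in for three color channels (NOT data from the study):
# the second channel is strongly correlated with the first, the third is independent.
rng = np.random.default_rng(0)
c1 = rng.normal(size=500)
c2 = 0.9 * c1 + 0.3 * rng.normal(size=500)
c3 = rng.normal(size=500)
channels = np.column_stack([c1, c2, c3])

vifs = vif(channels)
print(vifs)
```

In this synthetic setup the two correlated channels receive much larger factors than the independent one, which is the pattern a high VIF is meant to flag.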

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khanal, S.R.; Sampaio, J.; Exel, J.; Barroso, J.; Filipe, V. Using Computer Vision to Track Facial Color Changes and Predict Heart Rate. J. Imaging 2022, 8, 245. https://doi.org/10.3390/jimaging8090245
