Abstract

Breast cancer remains a critical global health challenge, with over 2.1 million new cases annually. This review systematically evaluates recent advancements (2022–2024) in machine and deep learning approaches for breast cancer detection and risk management. Our analysis demonstrates that deep learning models achieve 90–99% accuracy across imaging modalities, with convolutional neural networks showing particular promise in mammography (99.96% accuracy) and ultrasound (100% accuracy) applications. Tabular data models using XGBoost achieve comparable performance (99.12% accuracy) for risk prediction. The study confirms that lifestyle modifications (dietary changes, BMI management, and alcohol reduction) significantly mitigate breast cancer risk. Key findings include the following: (1) hybrid models combining imaging and clinical data enhance early detection, (2) thermal imaging achieves high diagnostic accuracy (97–100% in optimized models) while offering a cost-effective, less hazardous screening option, (3) challenges persist in data variability and model interpretability. These results highlight the need for integrated diagnostic systems combining technological innovations with preventive strategies. The review underscores AI’s transformative potential in breast cancer diagnosis while emphasizing the continued importance of risk factor management. Future research should prioritize multi-modal data integration and clinically interpretable models.

1. Introduction

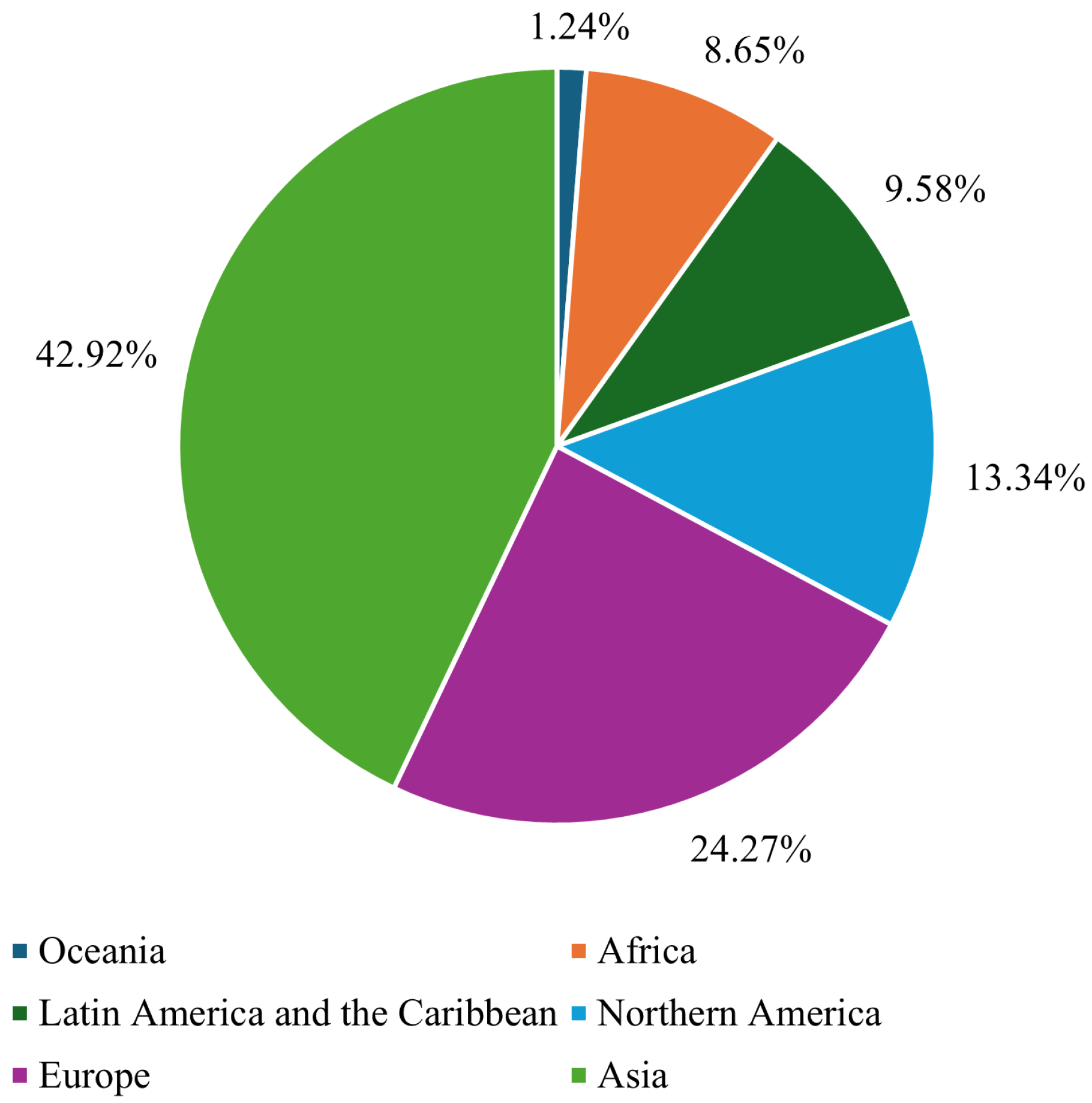

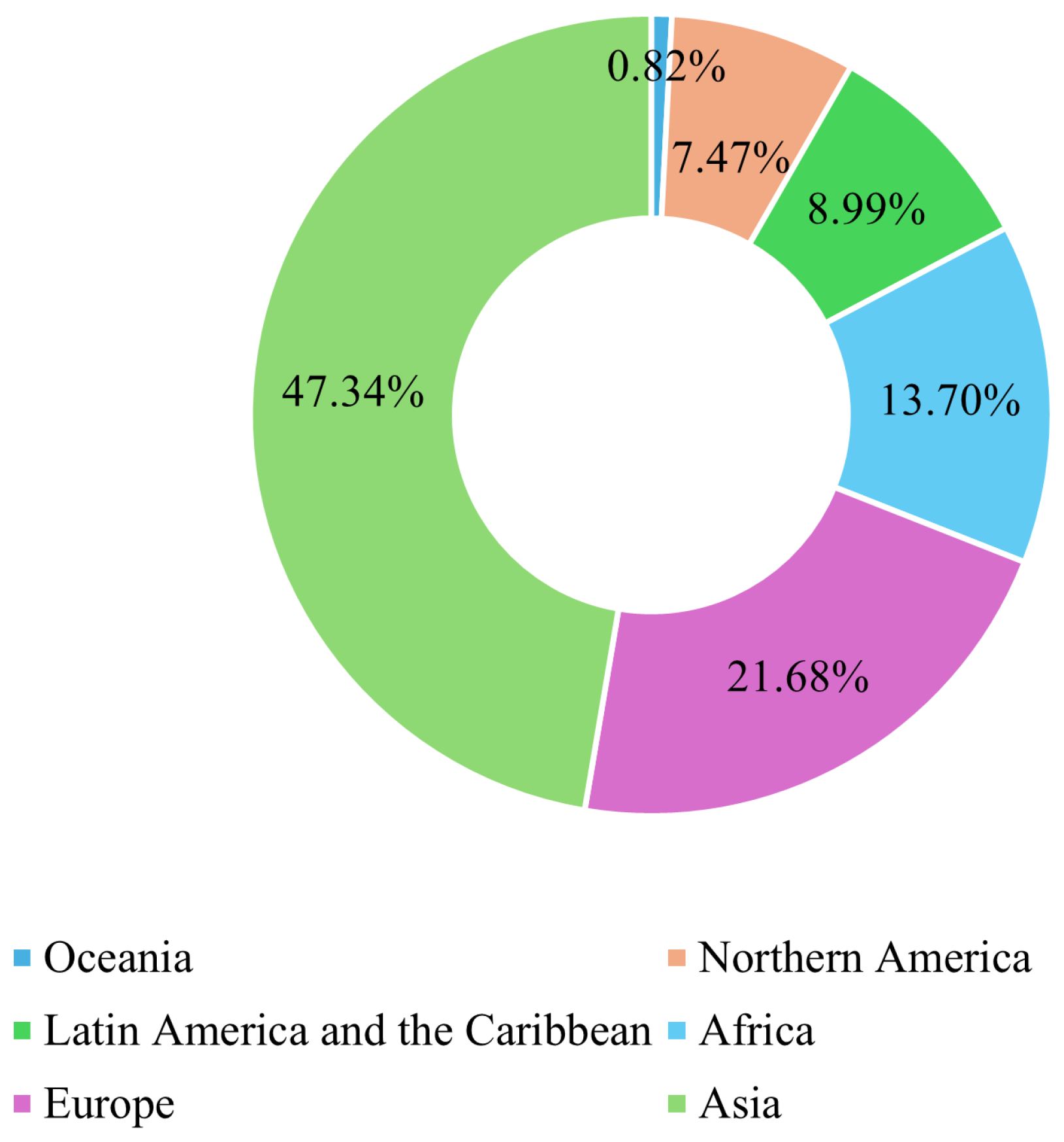

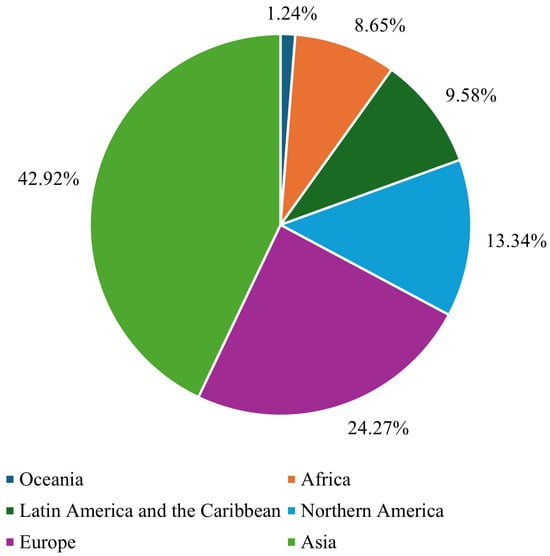

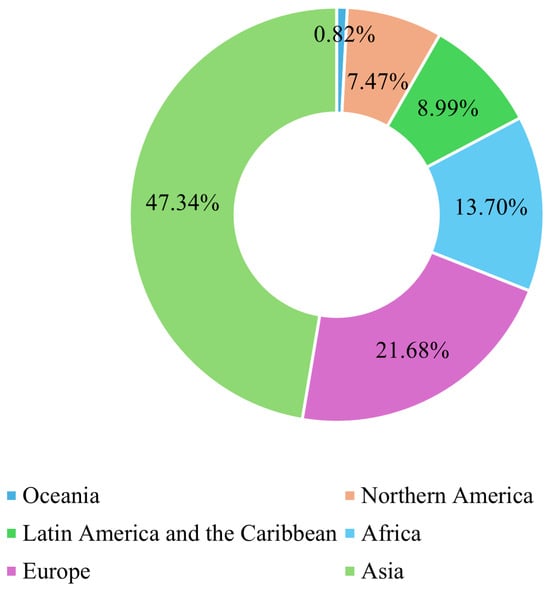

Breast cancer (BC) is the most common cancer among women, accounting for 25% of female cancer cases worldwide. In 2020, there were roughly 2.3 million new cases and 685,000 deaths worldwide. Early and accurate detection remains critical, as malignant tumors can spread aggressively, significantly reducing the probability of survival if left untreated [1]. Advances in artificial intelligence (AI) and machine learning (ML) have transformed medical image analysis, offering innovative tools for BC diagnosis. Techniques such as convolutional neural networks (CNNs) have shown promise in classifying and diagnosing BC across diverse imaging modalities, including mammography, CT, and ultrasound. Alongside advances in screening and treatment, including computer-aided diagnosis (CAD) systems and, more recently, deep learning (DL), breast cancer mortality decreased by 40% from the 1980s to 2020, and projections suggest that up to 2.5 million lives could be saved by 2040 if global mortality rates decline by 2.5% annually [2]. Figure 1 and Figure 2 illustrate the global distribution of BC incidence and mortality among females in 2022. Figure 1 shows that Asia accounts for the largest share of BC cases (42.92%), followed by Europe (24.27%), while Oceania accounts for the smallest share (1.24%). Regarding mortality, 47.34% of female BC deaths occurred in Asia and 21.68% in Europe. Together, Asia and Europe therefore account for over 65% of BC cases (67.19%) and deaths (69.02%).

Figure 1.

Global breast cancer incidence rate in females in 2022 [3].

Figure 2.

Global breast cancer mortality rate in females in 2022 [3].

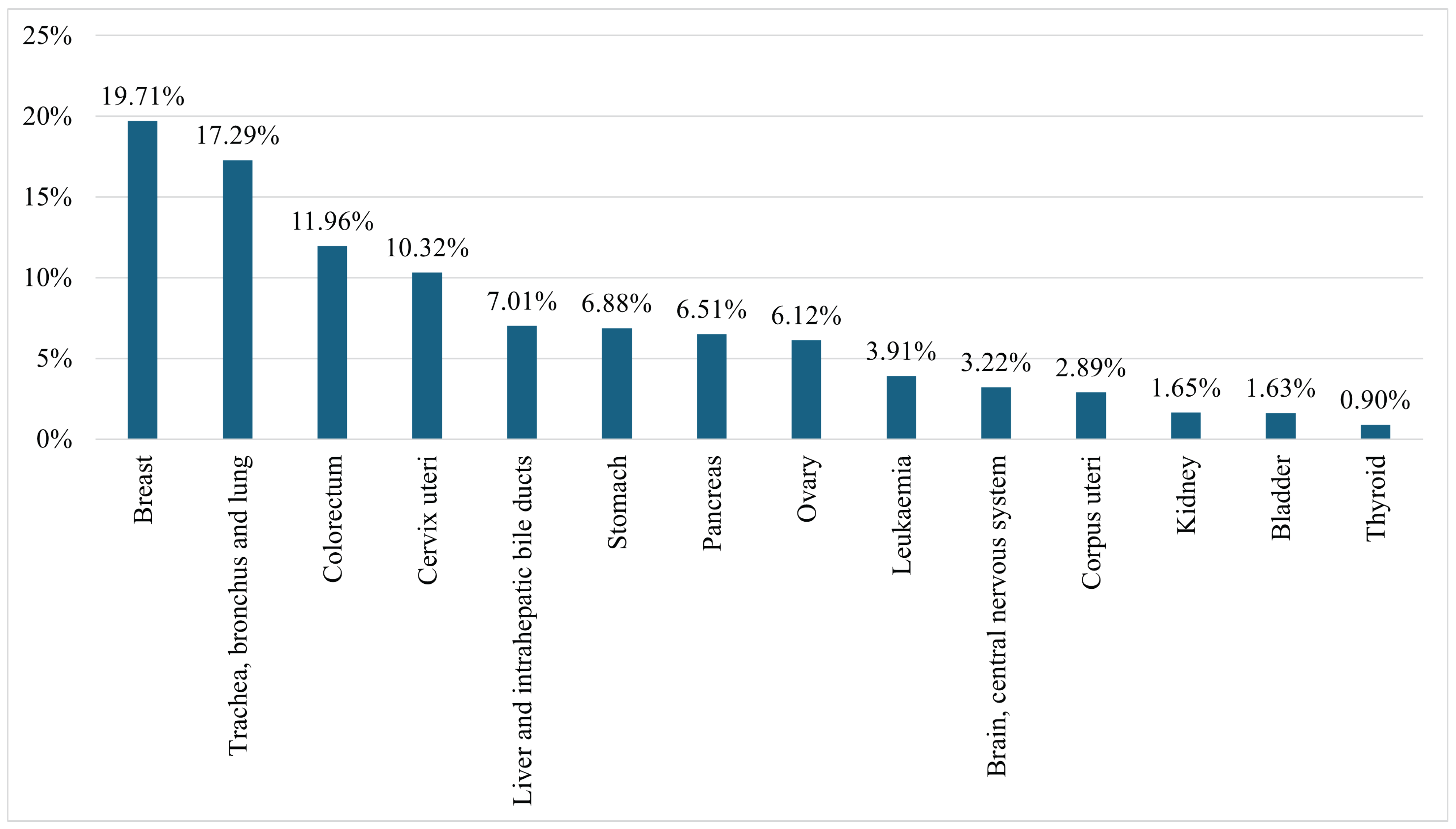

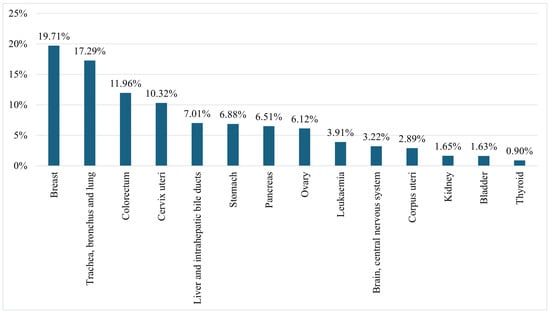

Figure 3 presents the mortality rates of females from the fourteen leading cancers worldwide in 2022, including ‘Breast’; ‘Trachea, bronchus, and lung’; ‘Colorectum’; ‘Cervix uteri’; ‘Liver and intrahepatic bile ducts’; ‘Stomach’; ‘Pancreas’; ‘Ovary’; ‘Leukaemia’; ‘Brain, central nervous system’; ‘Corpus uteri’; ‘Kidney’; ‘Bladder’; and ‘Thyroid’. The figure highlights that 19.71% of female cancer-related deaths are due to BC, making it the leading cause of cancer-related mortality among women [3].

Figure 3.

Female mortality rates from all cancers worldwide in 2022 [3].

1.1. Motivation

Breast cancer remains a significant global health concern, particularly among women, in whom it is one of the most prevalent and life-threatening forms of cancer. Despite advances in medical imaging and therapeutic strategies, early and accurate diagnosis remains difficult, and this is a major hurdle to effective treatment.

While DL models achieve high accuracy, their ‘black-box’ nature remains a barrier to clinical trust. Recent advancements in explainable AI (XAI) techniques, such as Grad-CAM and SHAP, are critical for bridging this gap. This review evaluates not only performance metrics but also the interpretability of state-of-the-art models, highlighting their clinical applicability.

Below are the key motivations for this study.

- A1.

- Breast cancer affects millions annually, with increasing prevalence across diverse age groups. Traditional diagnostic techniques such as mammography are less reliable in dense breast tissue, which can lead to missed or delayed diagnoses.

- A2.

- DL techniques have revolutionized medical imaging by enabling precise analysis of complex, high-dimensional datasets. These methods reduce human error, increase diagnostic consistency, and improve efficiency.

- A3.

- Existing screening tools have inherent strengths and weaknesses. Investigating these methods to identify the most effective approaches for breast cancer detection remains critical for advancing diagnostic quality.

- A4.

- Innovations such as transfer learning, hybrid DL models, and explainable AI have enhanced diagnostic accuracy while addressing trust and interpretability concerns, essential factors for clinical integration.

- A5.

- By integrating advanced technologies into clinical practice, we can increase early detection rates, potentially saving countless lives and transforming breast cancer treatment outcomes.

1.2. Contributions

This paper offers a comprehensive review of advancements in BC detection, with a strong focus on the transformative impact of machine learning and DL. The key contributions are as follows:

- A1.

- The global BC trends and risk factors are examined to inform preventive strategies.

- A2.

- We present a visualization of current trends in BC research, review the impacts of early detection and technology on mortality rates, and highlight the potential of lifestyle changes to reduce the incidence of breast cancer in high-risk groups.

- A3.

- This review examines various BC imaging techniques, including mammograms, ultrasound, MRI, CT scans, histopathology, and thermal imaging. We discuss their advantages and disadvantages and present examples of specific cases.

- A4.

- We explore the use of structured data (CSV) for BC detection, focusing on feature engineering, preprocessing, and algorithmic approaches.

- A5.

- The study presents a summary of hybrid frameworks combining deep feature extraction and machine learning classifiers to enhance diagnostic accuracy.

- A6.

- We report the level of accuracy achieved to date by various models using different datasets.

2. Background Study

Breast cancer is a major global health issue: the World Health Organization (WHO) reports that 7.8 million women were diagnosed in the previous three years, making it the most prevalent cancer worldwide. Breast cancer accounts for 4–5.5% of all new cancer cases annually, contributing significantly to morbidity [4,5]. In the United States alone, over 250,000 individuals are diagnosed with BC each year, and the disease causes 41,690 deaths, making it the most frequently diagnosed cancer and a leading cause of cancer death among women. BC represents approximately 25% of all cancer diagnoses in women globally [6]. Early diagnosis is critical: patients diagnosed at an early stage have a five-year survival rate of about 99% [7,8].

Breast cancer typically begins in the ducts or lobules and manifests through symptoms such as lumps, changes in breast size or shape, and abnormal discharge. Traditional diagnostic methods, including mammography, histopathological analysis, and other imaging techniques, are widely employed, but they are time-consuming, require skilled professionals, and are prone to human error. For example, mammograms rely on radiologists for interpretation, while histopathological analysis demands experienced pathologists to classify tissues accurately [9,10,11,12]. Breast cancer was first described in Egypt around 1600 BC and has been responsible for approximately 15% of cancer deaths among women [13,14]. Although BC affects men, women, and transgender individuals, its prevalence is significantly higher among females. The risk for transgender individuals, particularly the impact of gender-affirming hormonal therapy (GAHT), remains unclear, and screening guidelines for this group are still undefined [15,16].

The WHO projects that BC cases will increase to 2.7 million by 2040. The National Breast Cancer Coalition (NBCC) has reported a rise in the lifetime risk of invasive breast cancer among women in the US since 1975, with 287,850 new cases identified in women and 2710 in men. Additionally, the American Cancer Society (ACS) notes that a woman’s lifetime likelihood of being diagnosed with invasive breast cancer increased from 9.09% to 12.9% over the same period [17,18,19,20]. Early and accurate BC detection is essential for improving survival rates, but early-stage diagnosis remains challenging. Diagnostic tools such as mammography, magnetic resonance imaging (MRI), computed tomography (CT), and ultrasonography are critical, with mammography being the most widely used. Mammography has a sensitivity of 77–95% and a specificity of 92–95%, yet its accuracy decreases in dense breast tissue. To address these limitations, machine learning (ML) is increasingly used to enhance diagnostic precision, offering significant promise for early detection and personalized treatment [15].

In India, breast cancer ranks among the top five most common cancers, with new cases rising from 159,417 in 2017 to 178,361 in 2020, comprising nearly 26% of all cancer cases in women [14].

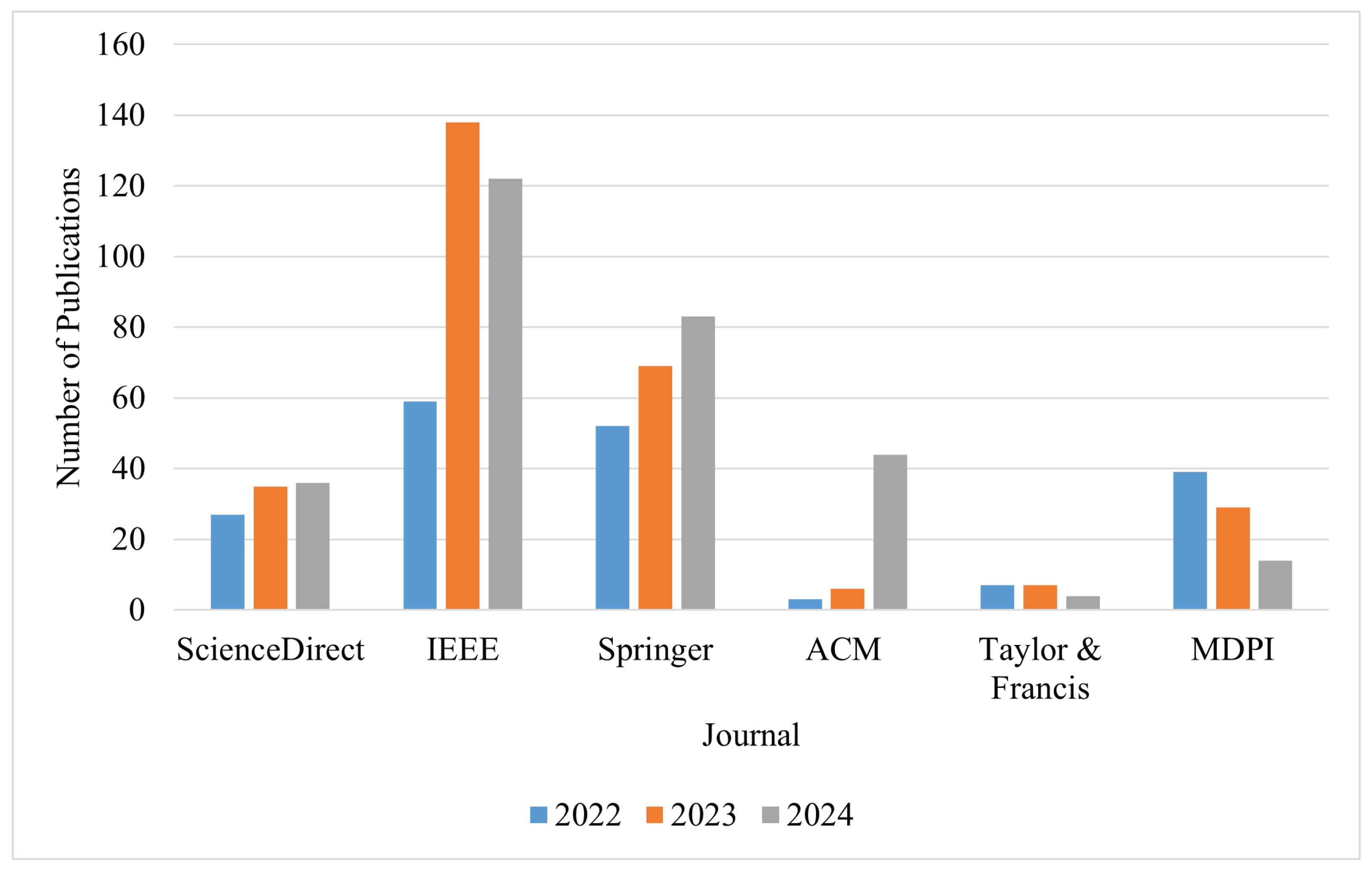

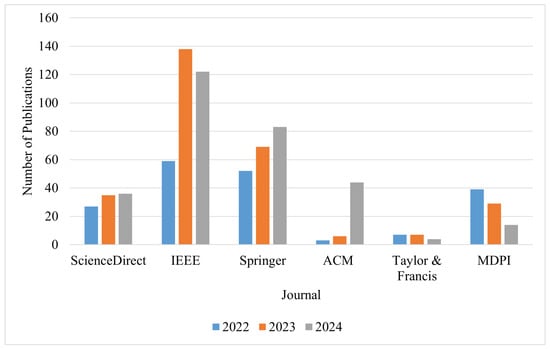

The remainder of the paper is organized as follows: Section 3 presents the research questions and includes a comparison table of our review with existing review papers on breast cancer detection. Section 4 discusses the most popular datasets used in breast cancer detection research, while Section 5 details the machine learning techniques applied for breast cancer detection. Section 6 provides statistics on various cancers, focusing on mortality and incidence rates for breast cancer across different categories. Section 7 outlines current diagnostic techniques for breast cancer and highlights key disease-associated factors. Section 8 analyzes different breast cancer detection approaches, followed by Section 9, which examines their applications, strengths, and weaknesses. Section 10 presents an overview of publication trends across journals. Finally, Section 11 concludes the paper, and Section 12 proposes future research directions to advance breast cancer detection and management.

3. Research Methodology

This section outlines the research methodology used to conduct a comprehensive review of breast cancer detection techniques using machine learning and deep learning approaches.

3.1. Study Selection Strategy

To ensure a rigorous and unbiased review, we implemented a structured selection process for identifying relevant studies:

3.1.1. Search Strategy

- SS1.

- Conducted searches across major academic databases including IEEE Xplore, SpringerLink, ScienceDirect, Nature, Wiley Online Library, and MDPI.

- SS2.

- Used keywords: (“breast cancer detection” OR “breast cancer diagnosis”) AND (“machine learning” OR “deep learning” OR “artificial intelligence”) combined with modality-specific terms (“mammography”, “ultrasound”, “MRI”, “CT”, “Histopathological”, “Thermal”, etc.).
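The keyword combination in SS2 can be generated programmatically, which helps keep the query identical across databases. The sketch below assumes the modality terms form a third OR-group joined with AND; the text does not say whether they were combined this way or searched one modality at a time, and each database's own query syntax may require adjustments.

```python
def build_query(*term_groups):
    """Join term groups into one Boolean query: OR within a group, AND across groups."""
    def group(terms):
        # Quote each phrase so multi-word terms are matched as exact phrases
        return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"
    return " AND ".join(group(g) for g in term_groups)


CORE_TERMS = ["breast cancer detection", "breast cancer diagnosis"]
METHOD_TERMS = ["machine learning", "deep learning", "artificial intelligence"]
# Assumption: modality terms form a third OR-group; the review lists these plus "etc."
MODALITY_TERMS = ["mammography", "ultrasound", "MRI", "CT", "Histopathological", "Thermal"]

query = build_query(CORE_TERMS, METHOD_TERMS, MODALITY_TERMS)
print(query)
```

Generating the string from lists rather than typing it by hand makes it easy to add or drop a modality term and re-run the same search everywhere.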

3.1.2. Inclusion Criteria

- IC1.

- Primary focus on studies published between 2023 and 2024.

- IC2.

- Included a few foundational papers from 2019 to 2022 that introduced significant methodological advances.

- IC3.

- Included studies were required to report quantitative performance metrics such as accuracy, AUC, sensitivity, and specificity.

3.1.3. Exclusion Criteria

- EC1.

- Non-English publications, conference abstracts, and editorials.

- EC2.

- Duplicate or overlapping studies.
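The inclusion and exclusion criteria above amount to a screening filter. The sketch below encodes them in Python under stated assumptions: the `Record` fields are hypothetical, the `foundational` flag stands for the reviewers' judgement in IC2 (which code cannot make), and duplicates (EC2) are detected by normalized title only, whereas overlapping studies would in practice need manual checking.

```python
from dataclasses import dataclass


@dataclass
class Record:
    title: str
    year: int
    language: str               # e.g. "en"
    pub_type: str               # e.g. "article", "conference_abstract", "editorial"
    reports_metrics: bool       # reports accuracy, AUC, sensitivity, or specificity (IC3)
    foundational: bool = False  # hypothetical reviewer flag for 2019-2022 papers (IC2)


EXCLUDED_TYPES = {"conference_abstract", "editorial"}


def passes_screening(r: Record) -> bool:
    if r.language != "en" or r.pub_type in EXCLUDED_TYPES:  # EC1
        return False
    if not r.reports_metrics:                               # IC3
        return False
    if 2023 <= r.year <= 2024:                              # IC1
        return True
    return 2019 <= r.year <= 2022 and r.foundational        # IC2


def deduplicate(records):
    """EC2 (simplified): keep the first record for each whitespace/case-normalized title."""
    seen, unique = set(), []
    for r in records:
        key = " ".join(r.title.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


def screen(records):
    return [r for r in deduplicate(records) if passes_screening(r)]
```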

3.2. Research Questions

The article addresses ten key research questions, listed below. Table 1 compares existing review papers on breast cancer detection with our own, highlighting the main differences and contributions.

Table 1.

Comparative analysis of recently published review papers and our review of literature on breast cancer detection.

- Q1.

- What are the incidence and death rates for breast cancer across various categories?

- Q2.

- What diagnostic methods are currently used for breast cancer detection?

- Q3.

- What are the key risk factors for breast cancer?

- Q4.

- How can lifestyle changes help reduce the risk of breast cancer?

- Q5.

- Which breast cancer datasets have been used in the development of machine and deep learning models?

- Q6.

- What are the reasons for adopting machine and deep learning techniques in breast cancer detection?

- Q7.

- What are the different medical imaging modalities used for breast cancer classification, and what is the highest accuracy achieved by various models on these modalities to date?

- Q8.

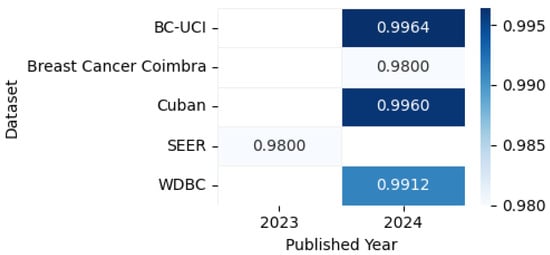

- What are the various CSV datasets employed for breast cancer classification, and what is the best accuracy attained by different models on these datasets so far?

- Q9.

- What are the strengths, weaknesses, and applications of imaging modalities in breast cancer detection techniques?

- Q10.

- What are the benefits, drawbacks, and use cases of breast cancer detection techniques based on CSV datasets?

4. Overview of Breast Cancer Datasets

Researchers use diverse breast cancer datasets across multiple modalities, including X-ray, ultrasound, MRI, histopathology, thermography, and structured clinical records, to develop and validate detection algorithms. These datasets vary significantly in scale (from hundreds to thousands of samples), content complexity (from basic images to comprehensive multimodal clinical data), and accessibility (from open public repositories to restricted institutional databases). For systematic evaluation, Table 2 briefly describes the datasets for each modality, detailing key characteristics such as sample size, benign/malignant class distribution, annotation quality, and access protocols, to help researchers select suitable resources for their analyses.

Table 2.

Comprehensive overview of multimodal breast cancer datasets.

5. Machine Learning Techniques

The three main categories of machine learning are supervised learning, unsupervised learning, and reinforcement learning. Supervised learning trains models on labeled data to map inputs to known outputs, making it well suited to predictive tasks such as classification and regression. The process involves analyzing training data, computing statistical features, and establishing input–output relationships. While effective for accurate predictions, it requires large labeled datasets and significant training resources. Unsupervised learning discovers hidden patterns in unlabeled data through techniques such as clustering and association rule mining. It needs no labels and less preprocessing than supervised methods, making it valuable for exploratory analysis, but its results can be harder to interpret. Reinforcement learning trains autonomous agents through interaction with an environment and reward feedback, excelling in dynamic domains such as robotics and game AI; it demands substantial computation and careful design of the reward mechanism. Table 3 summarizes the key characteristics of machine learning algorithms, including their types, categorizations, approaches, advantages, and limitations.
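To make the supervised/unsupervised distinction concrete, the sketch below uses the Breast Cancer Wisconsin (Diagnostic) data bundled with scikit-learn. It is illustrative only and does not reproduce any reviewed study: a logistic-regression classifier learns from labels, while k-means clusters the same features without them. Reinforcement learning is not shown, since it needs an interactive environment.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)  # 569 samples, 30 features; 0 = malignant, 1 = benign

# Supervised: learn the input-output mapping from labeled examples
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)
clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
clf.fit(X_train, y_train)
supervised_acc = clf.score(X_test, y_test)

# Unsupervised: cluster the same features without ever seeing the labels
X_std = StandardScaler().fit_transform(X)
clusters = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X_std)
# Cluster IDs are arbitrary, so score the better of the two possible label assignments
agreement = max((clusters == y).mean(), (clusters != y).mean())

print(f"supervised test accuracy: {supervised_acc:.3f}")
print(f"cluster/label agreement:  {agreement:.3f}")
```

The labels are used only to evaluate the clustering afterwards; the unsupervised model never sees them during fitting.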

Table 3.

Machine learning algorithm taxonomy (type, categorization, approach, advantages, limitations).

6. Cancer Statistics: Incidence and Mortality Rates

This section presents a statistical analysis of cancer rankings from 1990 to 2021. We highlight BC mortality rates for males and females, along with the global death rate per 100,000 individuals. The section also reports incidence and death rates for both sexes in Bangladesh, categorized by age group (20+ years and under 20 years). Table 4 presents the year-wise rankings of six major cancers (Breast, Lung, Stomach, Cervical, Colorectal, and Brain) from 1990 to 2021, obtained from the Institute for Health Metrics and Evaluation (IHME) [62]. In the table, a lower rank indicates a higher impact. The rankings reveal significant changes over three decades. Breast Cancer ranked third in 1990 and 2000, rose to become the most impactful cancer by 2011, and retained its top position through 2021, underscoring its increasing global prevalence and burden. Lung Cancer consistently ranked among the top two, holding first position in 2000 and 2010 before being overtaken by Breast Cancer. Stomach Cancer, the most impactful cancer (ranked first) in 1990, had declined to fifth by 2021, likely reflecting advances in prevention and treatment. Cervical Cancer remained stable at fourth until 2011 before rising to third in 2021, while Brain Cancer consistently held sixth position, indicating a lower comparative global impact. Meanwhile, Colorectal Cancer rose from fifth in 1990 to fourth by 2021, reflecting its global increase. These patterns illustrate the evolving global cancer landscape, with BC becoming the most impactful cancer over time, particularly during the period 2011 to 2021. This trend highlights the growing prevalence and significance of BC and underscores the urgent need for enhanced research, early detection, and effective treatment strategies.

Table 4.

Year-wise ranking of the six top cancers in the world [62].

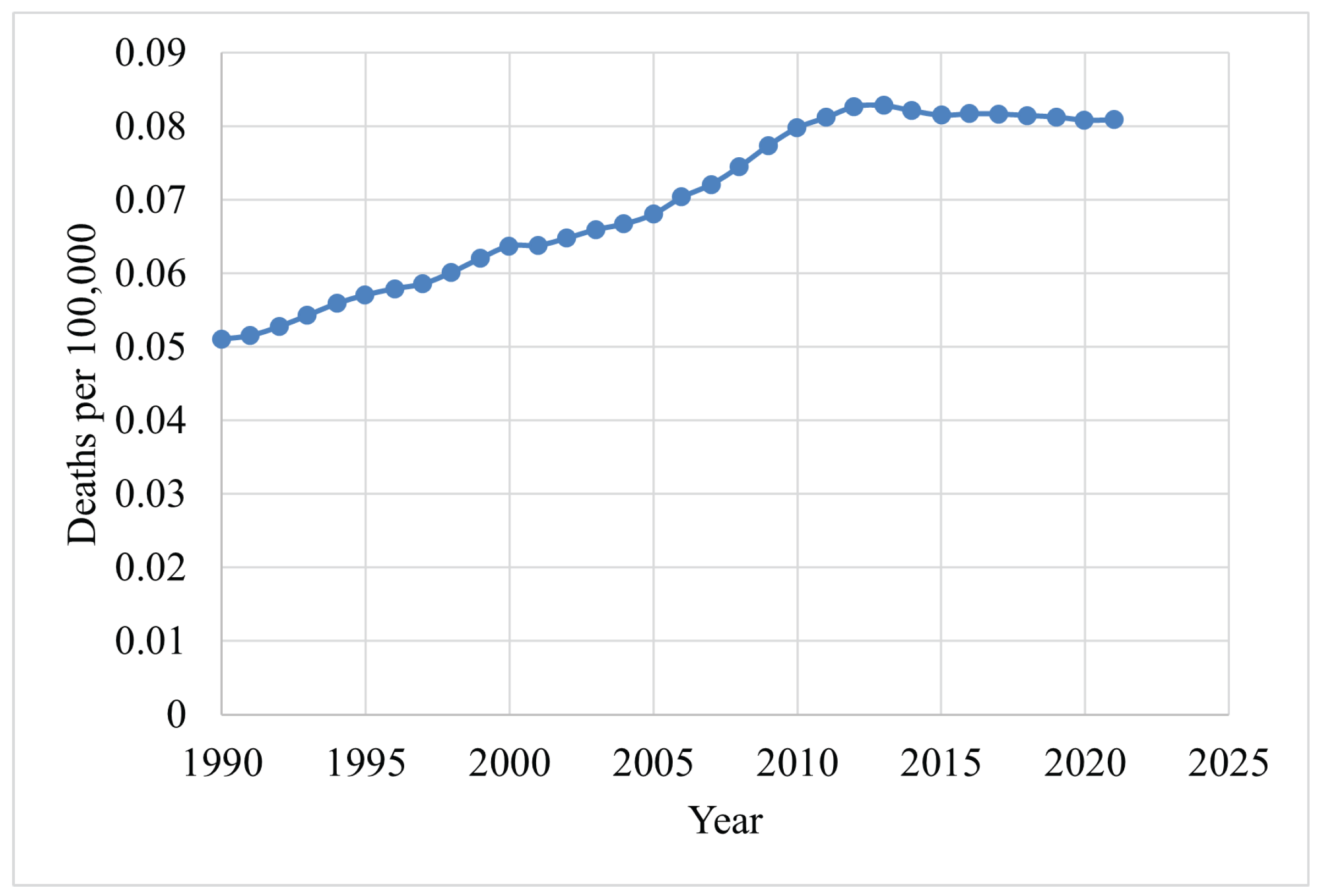

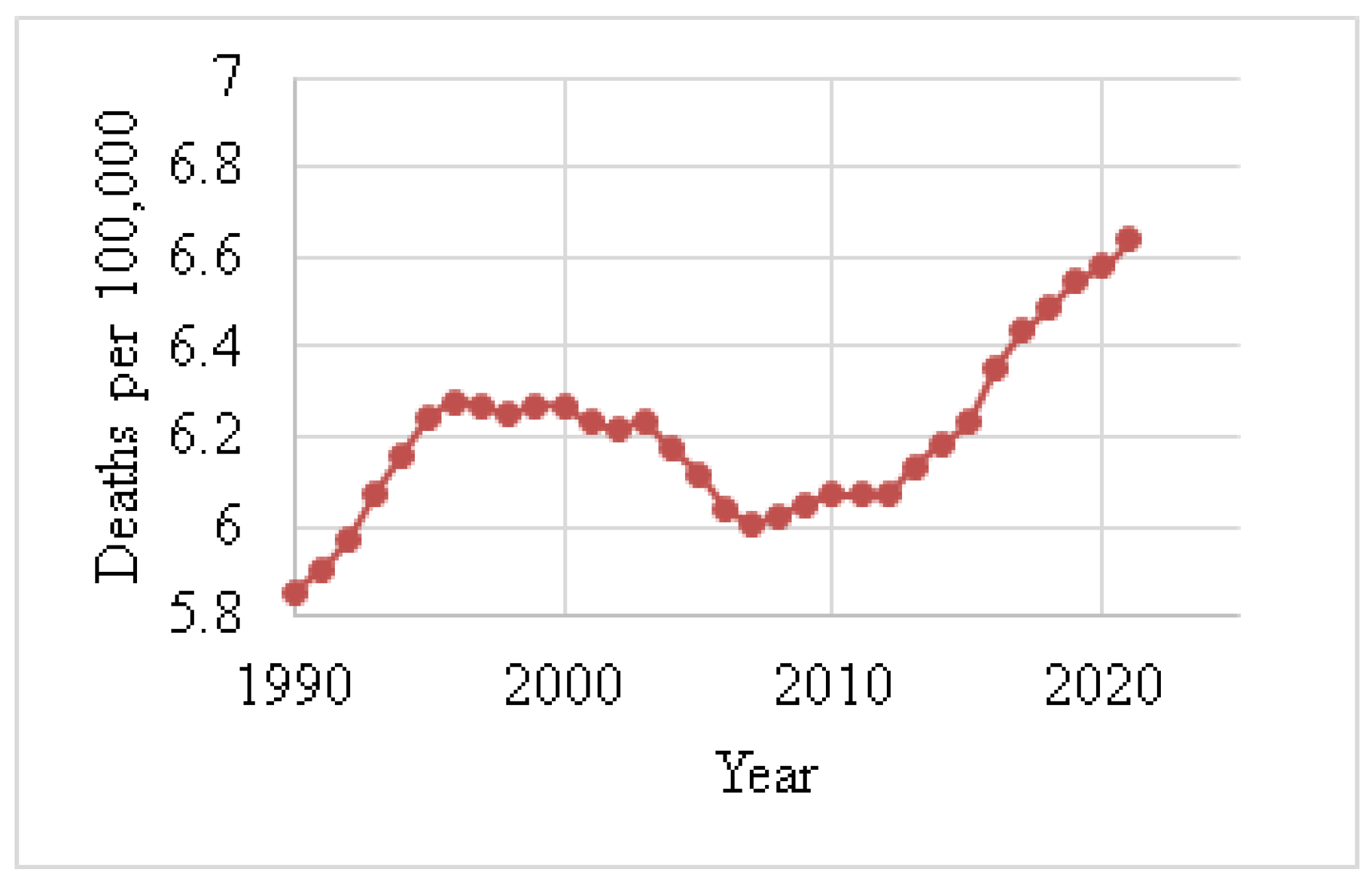

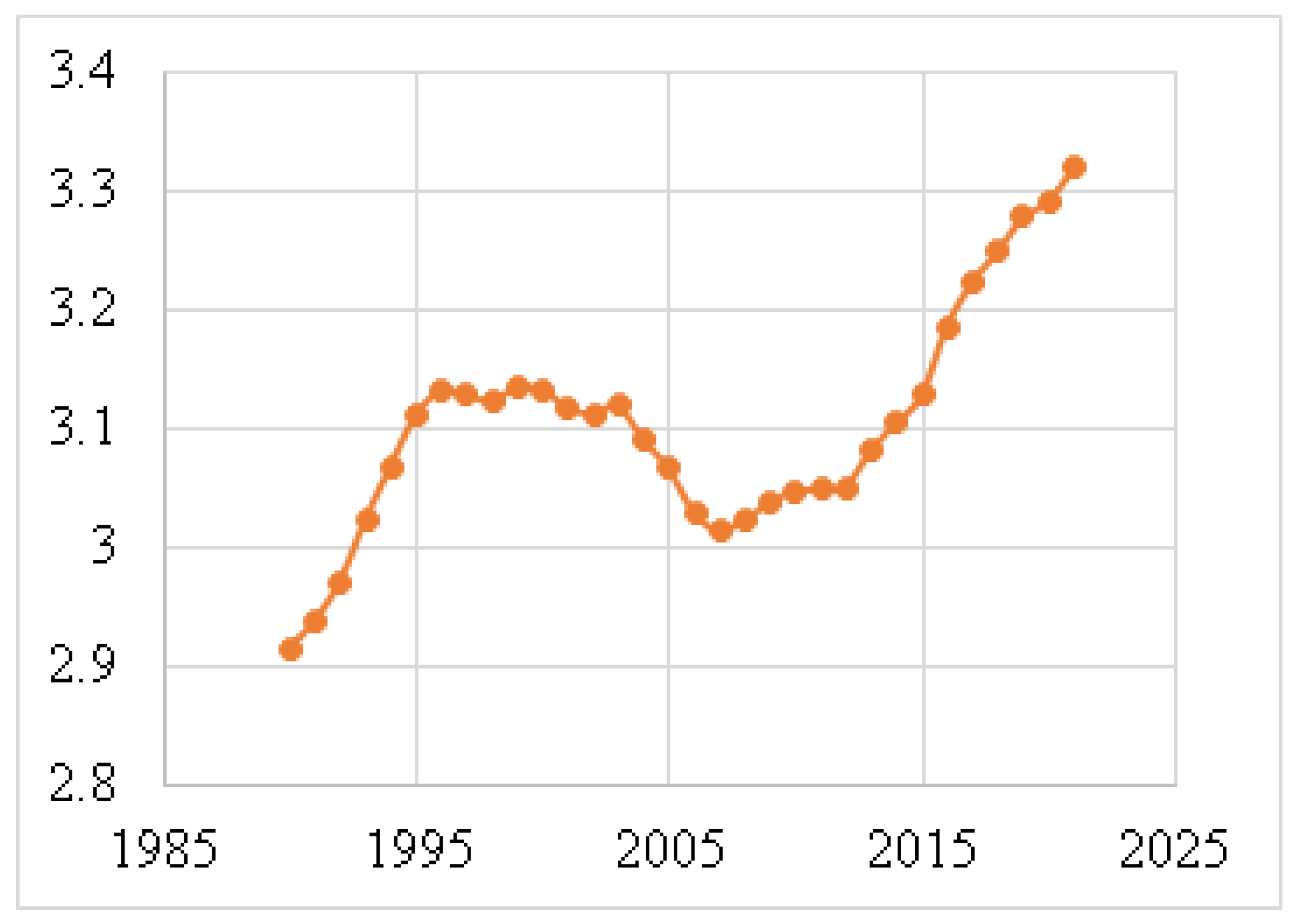

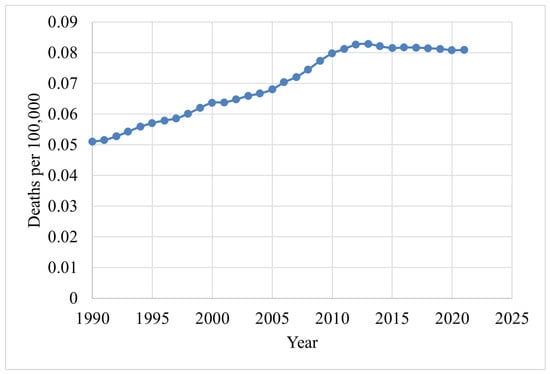

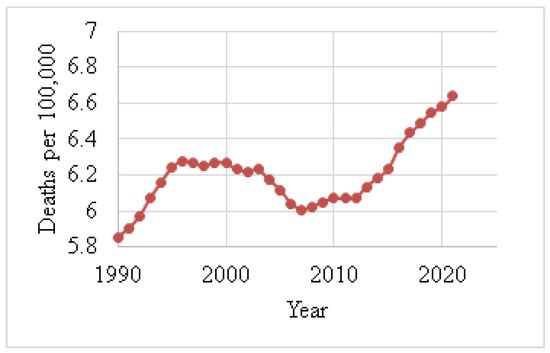

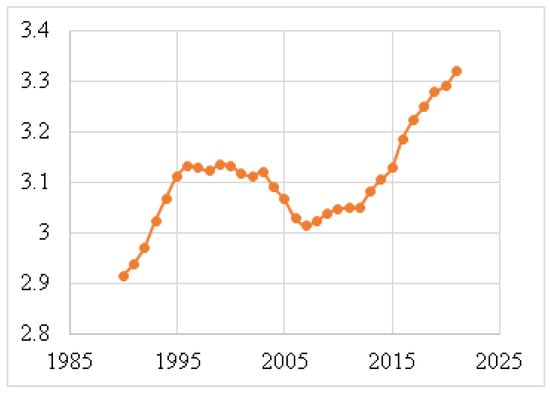

Figure 4 and Figure 5 show BC death rates per 100,000 individuals aged 15–49 for males and females, respectively, between 1990 and 2021, using data from IHME [62]. Over this period, the average death rate was 6.6401 for females and 0.0829 for males; that is, the male rate was 98.75% lower than the female rate, and the female rate was roughly 80 times higher. Figure 6 illustrates the global death rate per 100,000 individuals, using data from the same institute: it increased from 2.9141 in 1990 to 3.3183 in 2021, a rise of about 13.87% relative to 1990.
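The relative changes quoted in this paragraph can be checked directly. Relative change is always taken against a baseline: comparing the male rate with the female rate uses the female rate as the baseline, and the 1990–2021 change uses the 1990 value. (Dividing the 1990–2021 difference by the 2021 value instead gives about 12.18%.) The inputs are the IHME-derived values stated in the text; the helper name `pct_change` is illustrative.

```python
female_avg, male_avg = 6.6401, 0.0829  # average BC death rates per 100,000, ages 15-49, 1990-2021
rate_1990, rate_2021 = 2.9141, 3.3183  # global death rates per 100,000


def pct_change(old, new):
    """Relative change of `new` with respect to the baseline `old`, in percent."""
    return (new - old) / old * 100


male_vs_female = pct_change(female_avg, male_avg)   # male rate relative to the female rate
ratio = female_avg / male_avg                       # how many times higher the female rate is
global_increase = pct_change(rate_1990, rate_2021)  # growth relative to the 1990 baseline

print(f"male rate vs female rate: {male_vs_female:.2f}%")
print(f"female/male ratio: {ratio:.1f}x")
print(f"global increase 1990-2021: {global_increase:.2f}%")
```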

Figure 4.

Male death rates (1990–2021) due to BC [62].

Figure 5.

Female death rates (1990–2021) due to BC [62].

Figure 6.

Global death rates per 100,000 individuals (1990–2021) [62].

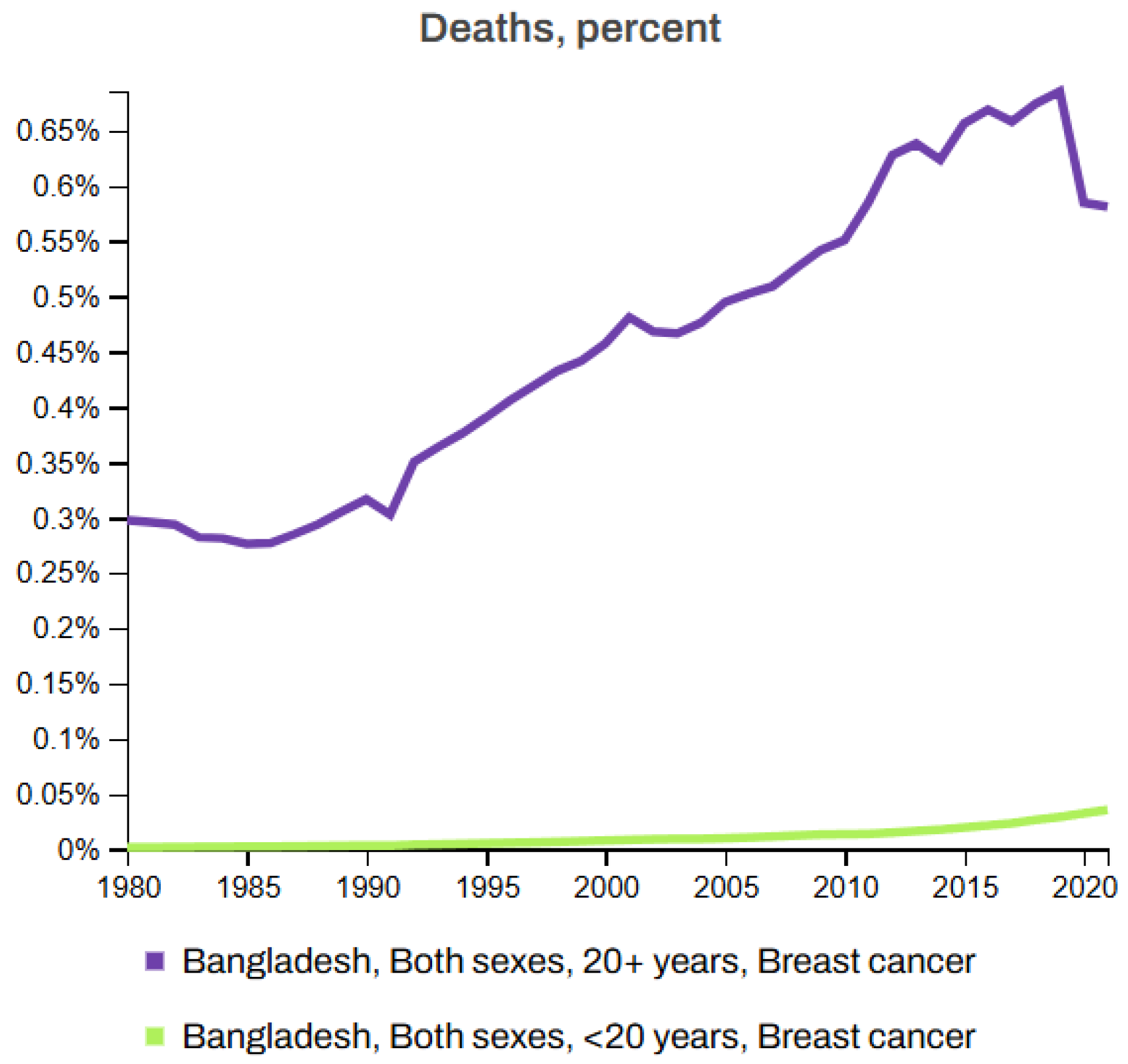

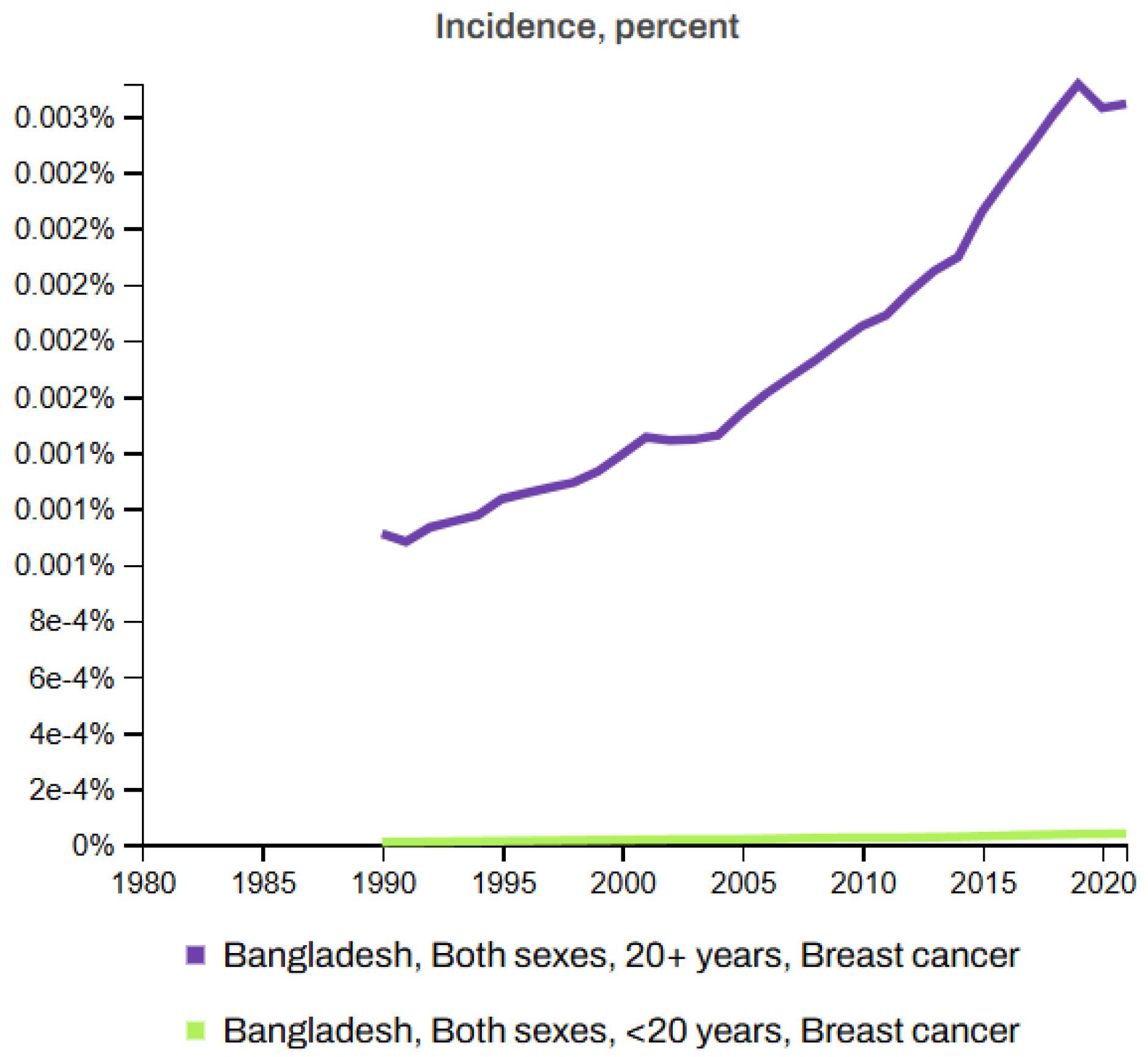

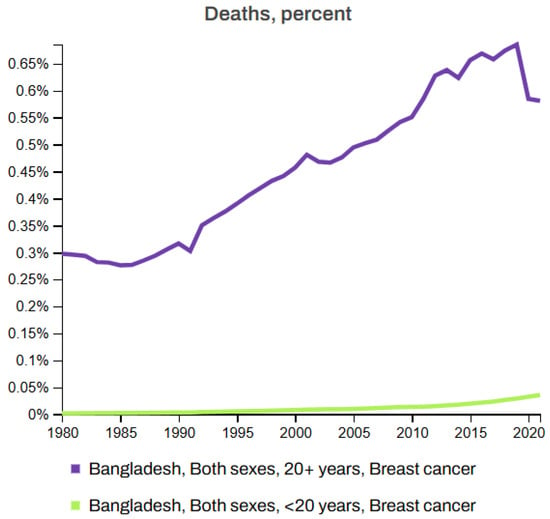

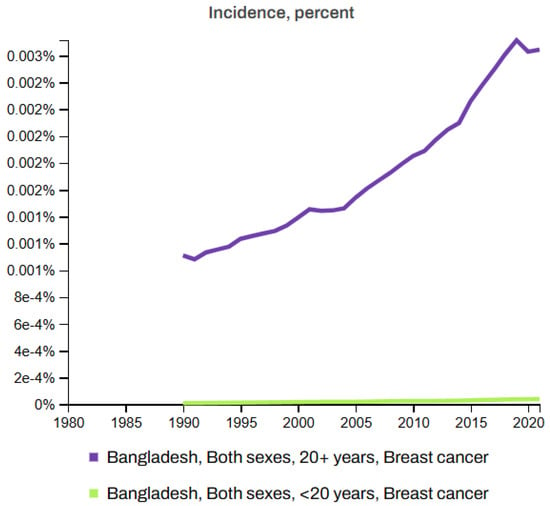

Figure 7 and Figure 8 depict BC death rates and incidence rates in Bangladesh from 1980 to 2021, categorized by age group (20+ years and under 20 years), using data from IHME (Seattle, United States) [63]. Both figures show a consistent increase in mortality and incidence over time.

Figure 7.

Breast cancer death rate in Bangladesh (1980–2021) [63].

Figure 8.

Incidence rates of breast cancer in Bangladesh (1980–2021) [63].

The integration of ML and DL technologies has emerged as a transformative approach, enabling rapid and more accurate diagnosis. Research has shown that ML techniques such as CNNs can achieve up to 90% accuracy in classifying mammographic images as benign, malignant, or normal. Similarly, artificial neural networks have demonstrated high efficiency in analyzing ultrasound images to distinguish between benign and malignant cases. Some studies have reported that ML techniques can improve the prediction accuracy of BC recurrence by up to 25% compared to traditional methods [24,64,65]. These advancements in ML and DL offer the potential to address the limitations of traditional diagnostics by automating image interpretation, enhancing predictive analytics, and tailoring individual treatment plans. This review summarizes commonly used ML and DL algorithms and methodologies used in BC research, providing insights into their applications, strengths, and prospects.

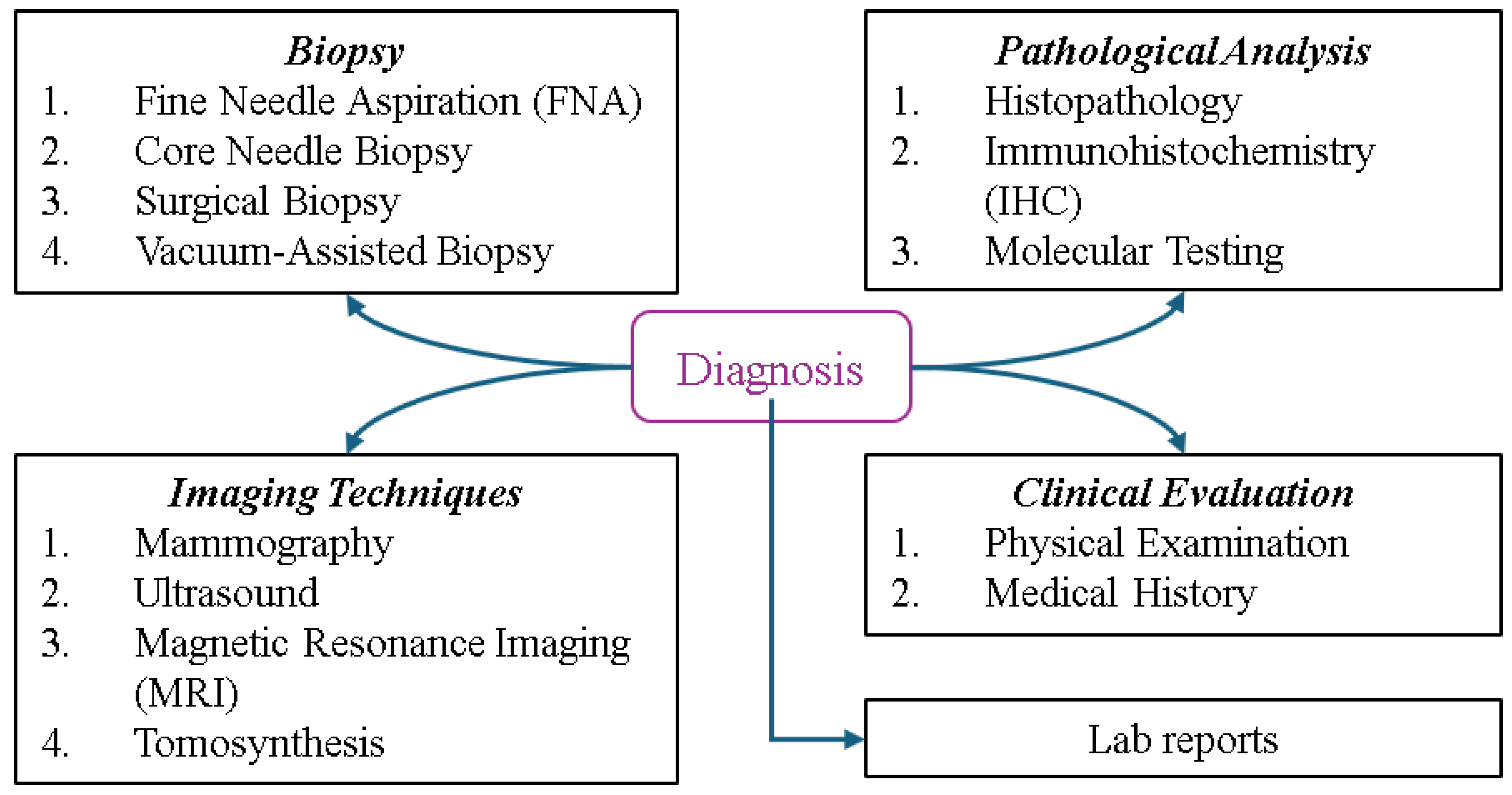

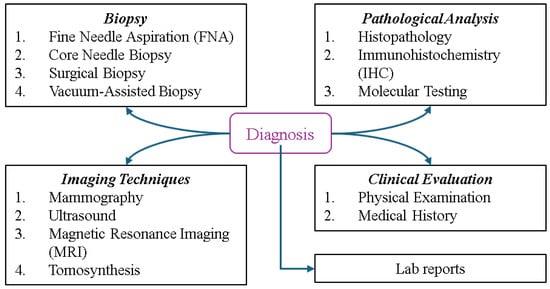

7. Breast Cancer Diagnosis and Classification

Determining the type and stage of breast cancer is crucial, as they directly affect treatment planning and prognosis, helping physicians develop optimal strategies and estimate the chance of survival. Figure 9 illustrates the main categories of BC diagnostic methods. Biopsy methods play a key role: fine needle aspiration (FNA) extracts fluid or cells, core needle biopsy removes small tissue samples, surgical biopsy excises part or all of a suspicious mass, and vacuum-assisted biopsy obtains larger samples. Imaging techniques include mammography, an X-ray of the breast; ultrasound, which uses sound waves to differentiate masses; MRI, which provides detailed images of soft tissue; and tomosynthesis, a 3D form of mammography used for enhanced clarity. Pathological analysis involves histopathology to examine tissue, immunohistochemistry (IHC) to identify protein markers such as HER2 or hormone receptors, and molecular testing to detect genetic mutations or biomarkers. Clinical evaluation includes a physical examination to check for abnormalities and a review of the patient’s medical history, while laboratory tests include tumor markers (e.g., CA 15-3, CEA) for cancer indicators and a complete blood count (CBC) to assess general health. Together, these methods provide a comprehensive framework for accurate identification and evaluation [2,66].

Figure 9.

Overview of breast cancer diagnostic methods.

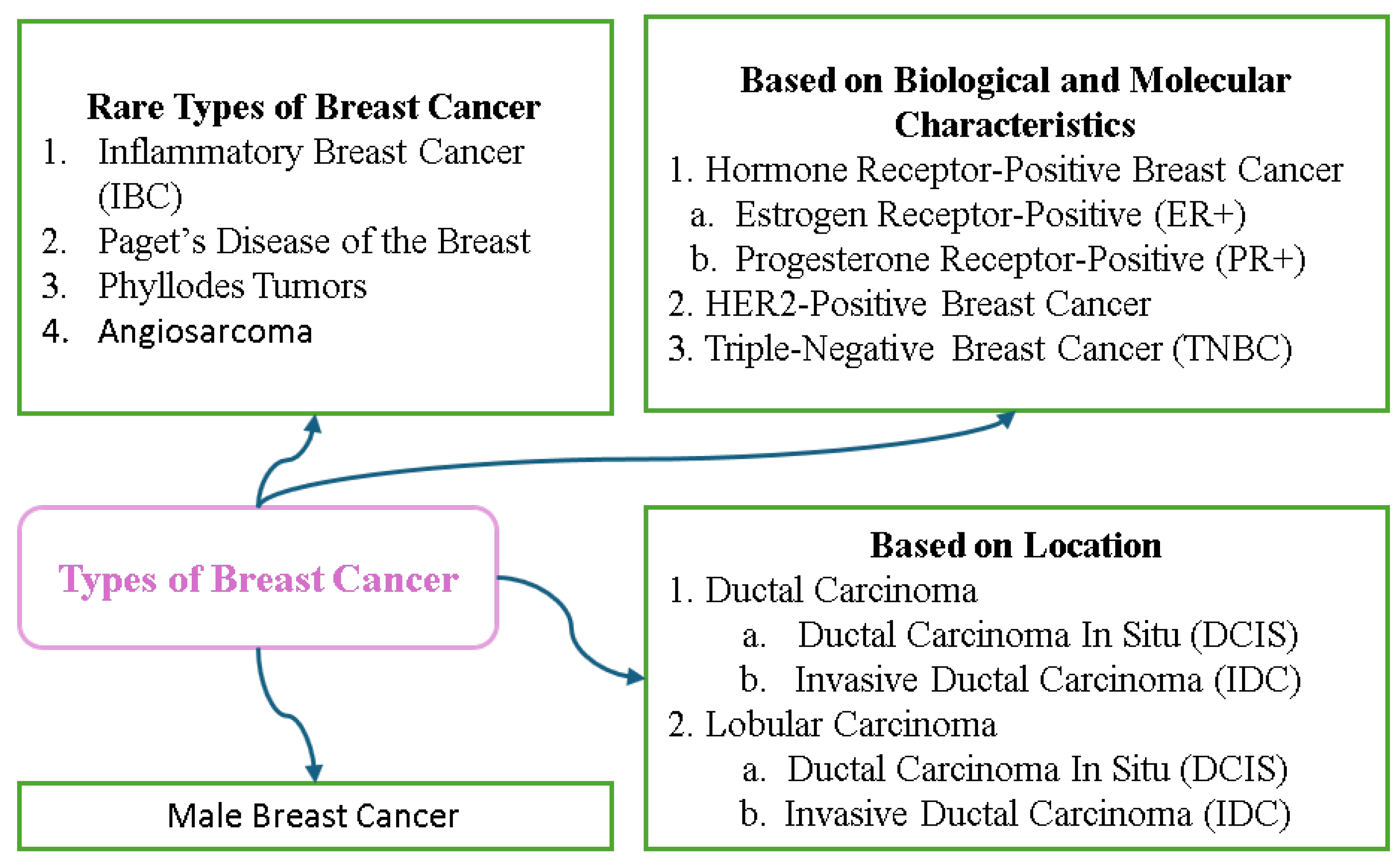

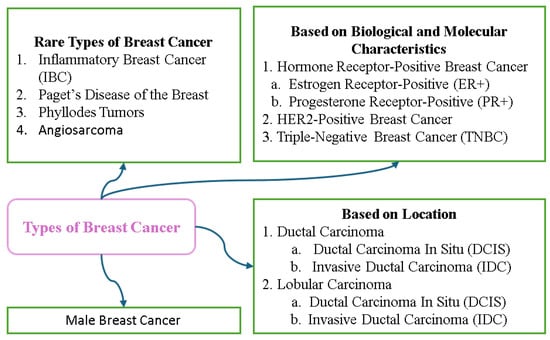

7.1. Breast Cancer Variants

Breast cancer can be categorized by location, biological characteristics, and molecular subtype. Non-invasive types such as Ductal Carcinoma In Situ (DCIS) and Lobular Carcinoma In Situ (LCIS) are confined to their point of origin and serve as warning signs for potential invasive cancers. Invasive types include Invasive Ductal Carcinoma (IDC), the most common form, and Invasive Lobular Carcinoma (ILC), which often responds to hormone therapy. Rare types include Inflammatory Breast Cancer, a highly aggressive form that causes redness and swelling, and Paget’s Disease, which affects the nipple and areola. Breast cancer is also classified into molecular subtypes based on hormone receptor (ER, PR) and HER2 status: Luminal A, Luminal B, HER2-enriched, and Triple-Negative. These subtypes help guide treatment strategies. Staging (0 to IV) and grading further characterize the cancer: the stage indicates the extent of spread, and the grade reflects growth patterns. Understanding these classifications is crucial for diagnosis and treatment planning. Figure 10 displays the different types of breast cancer.
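The receptor-based grouping can be expressed as a small decision function. This is a simplified sketch, not a clinical tool: clinical surrogate definitions also draw on a proliferation marker such as Ki-67 (represented here by a hypothetical boolean `high_proliferation`) and on receptor expression levels, which the text does not discuss.

```python
def surrogate_subtype(er_pos: bool, pr_pos: bool, her2_pos: bool,
                      high_proliferation: bool = False) -> str:
    """Simplified surrogate molecular subtype from ER, PR, and HER2 status.

    `high_proliferation` is a hypothetical stand-in for a marker such as Ki-67.
    """
    hormone_receptor_pos = er_pos or pr_pos
    if hormone_receptor_pos:
        # Hormone-receptor-positive tumours are luminal; HER2+ or highly proliferative -> Luminal B
        return "Luminal B" if (her2_pos or high_proliferation) else "Luminal A"
    if her2_pos:
        return "HER2-enriched"
    return "Triple-negative"  # ER-, PR-, HER2-


print(surrogate_subtype(er_pos=False, pr_pos=False, her2_pos=False))
```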

Figure 10.

Types of breast cancer.

7.2. Key Risk Factors Associated with Breast Cancer

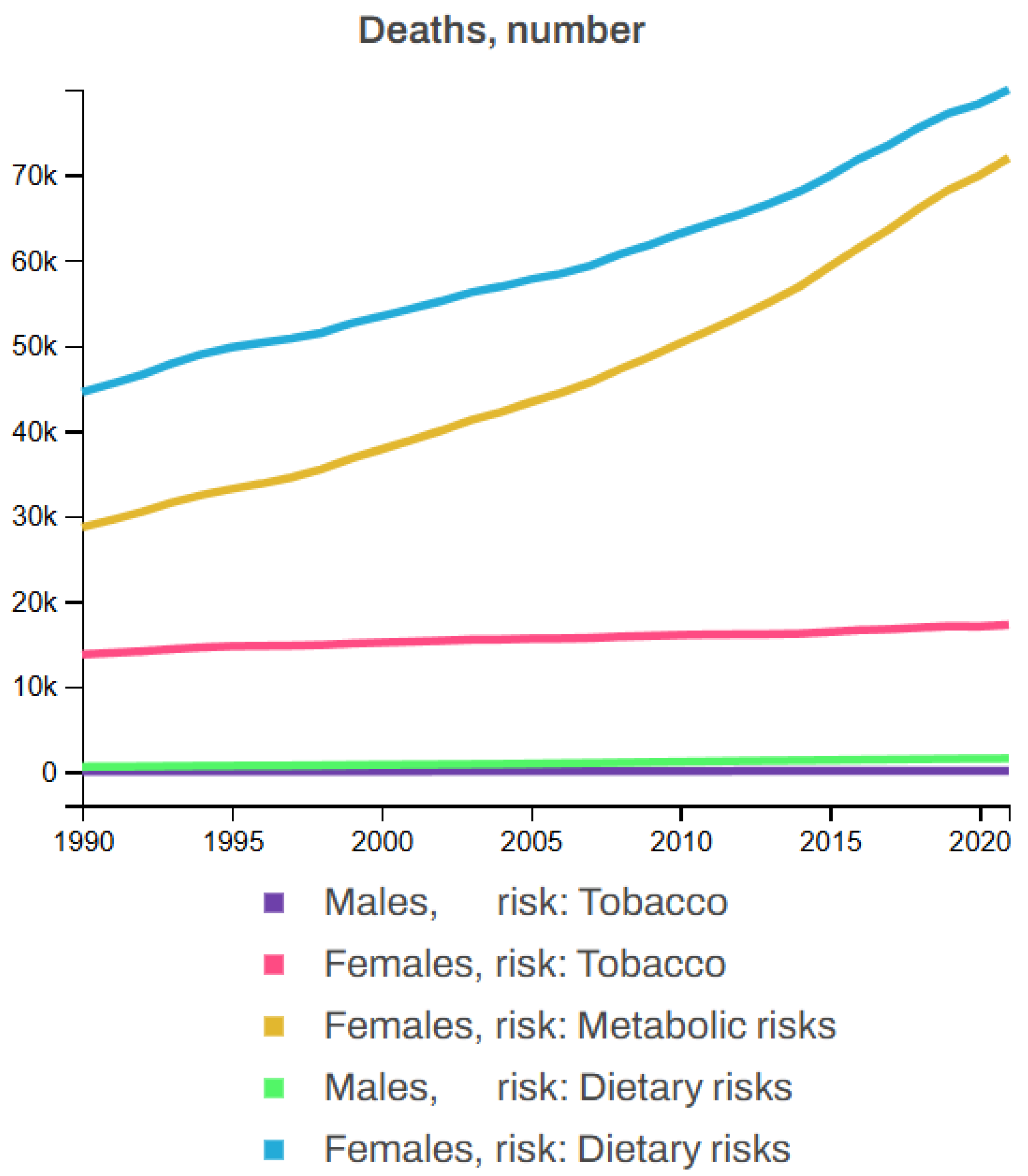

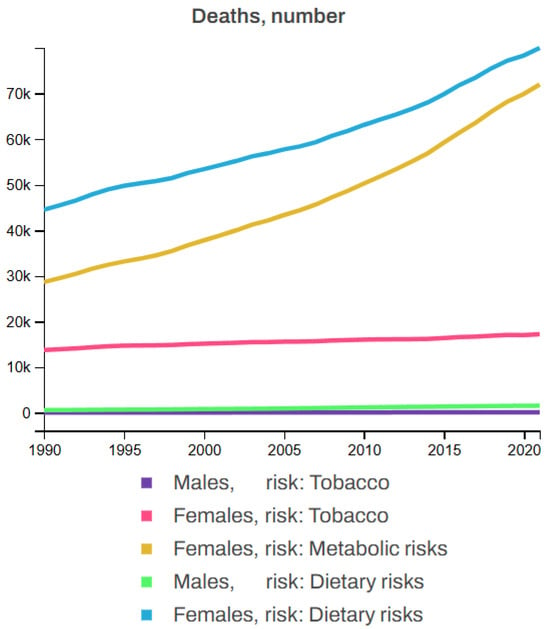

Breast cancer is influenced by several non-modifiable risk factors, including gender, age, family history, genetics, hormonal factors, and personal history, with women at significantly higher risk, particularly after the age of 50. A family history of BC, especially in first-degree relatives, and known genetic mutations such as BRCA1 and BRCA2 further increase the likelihood of developing the disease. Hormonal factors such as early menstruation, late menopause, or hormone replacement therapy also contribute to this risk, and individuals who have had BC in one breast are at higher risk. Beyond these, several other risk factors play significant roles in the development of BC: tobacco use (smoking, chewing tobacco, and secondhand smoke); poor dietary habits (low intake of fruits, vegetables, whole grains, and seafood omega-3 fatty acids, and high intake of red meat, processed meat, sugar-sweetened beverages, and trans fats); and metabolic factors (high fasting plasma glucose, high LDL cholesterol, high blood pressure, high body mass index, low bone mineral density, and kidney dysfunction). Figure 11 shows the share of BC deaths linked to these risk factors from 1990 to 2021: tobacco contributed to 3.92% of female and 0.88% of male BC deaths; dietary factors accounted for 12.62% of female and 11.87% of male deaths; and metabolic risk factors were responsible for 10.48% of female deaths but none among males [62,63].

Figure 11.

Global breast cancer mortality by risk factor (1990–2021) [63].

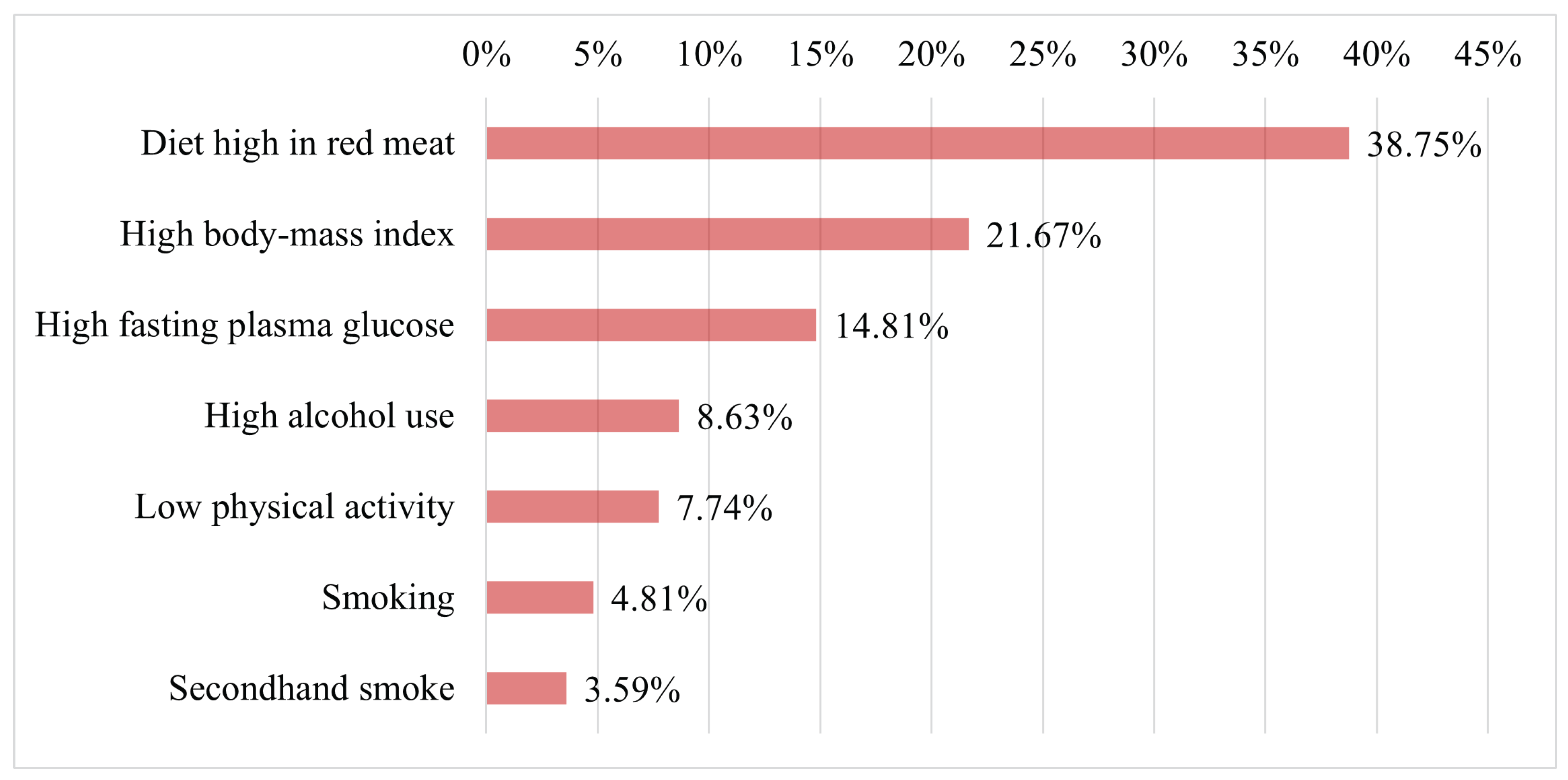

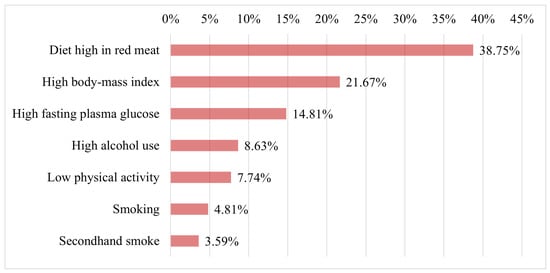

Figure 12 presents the seven key factors associated with breast cancer in females of all ages in 2021. High consumption of red meat was the leading factor, followed by high body mass index and high fasting plasma glucose. The remaining factors are high alcohol use, low physical activity, smoking, and exposure to secondhand smoke. Avoiding these risk factors could therefore substantially reduce the likelihood of developing BC in females.

Figure 12.

Key factors contributing to breast cancer risk in females in 2021 [62].

Beyond hormonal and lifestyle factors, genetic mutations significantly influence breast cancer risk. BRCA1/2 mutations impair DNA repair, confer a lifetime risk of up to 65%, and are associated with triple-negative subtypes. TP53 and PTEN mutations dysregulate cell-cycle control and PI3K signaling, respectively, while PROC variants may promote metastatic survival via coagulation pathways [67,68].

8. Breast Cancer Detection Approaches

The diagnosis of breast cancer employs both traditional methods, such as imaging (mammograms, ultrasounds, and MRIs) and biopsies, as well as advanced computational techniques like machine learning and deep learning (DL). Deep learning, particularly methods using convolutional neural networks (CNNs), has shown exceptional performance in analyzing medical images and tissue samples. Emerging approaches, including liquid biopsies, radiomics, and AI-powered multimodal systems, are further transforming the accuracy and efficiency of BC diagnosis.

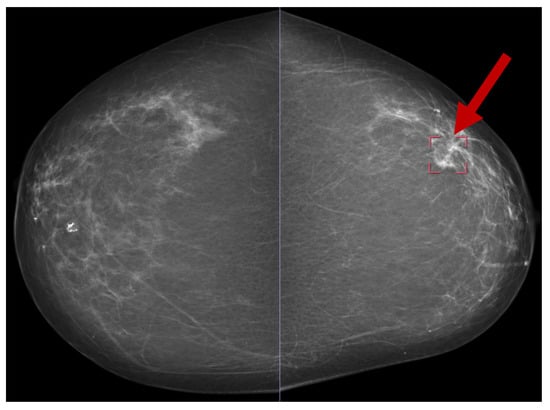

8.1. Mammogram Image-Based Breast Cancer Detection

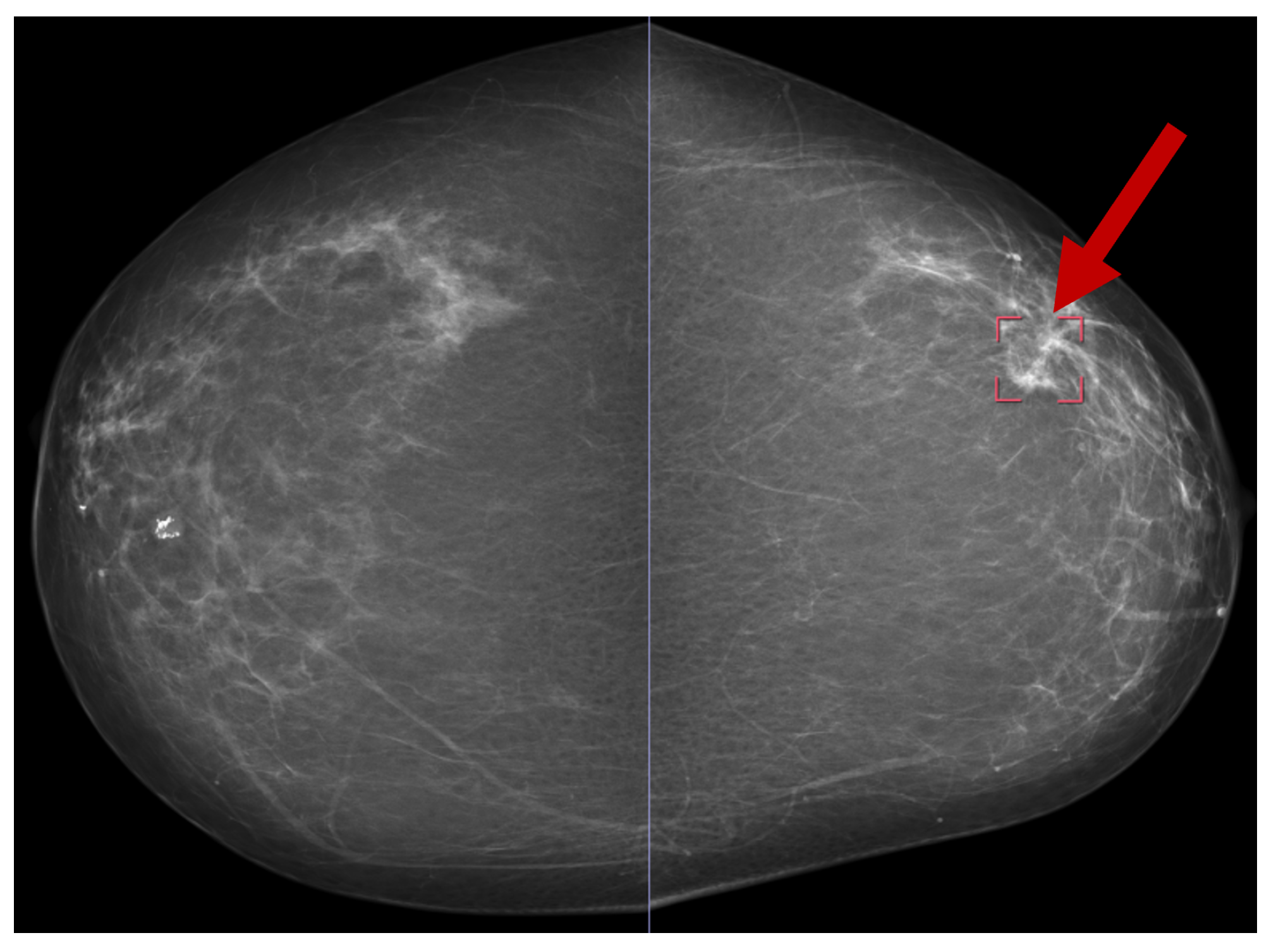

Several publicly available datasets are widely used for mammogram image-based BC detection, including the Curated Breast Imaging Subset of DDSM (CBIS-DDSM) [69], the Digital Database for Screening Mammography (DDSM) [70], the Mammographic Image Analysis Society (MIAS) dataset [71], and the INbreast dataset [30]. Figure 13 shows a sample mammogram in which a red box on the right side marks a potential abnormality for further analysis.

Figure 13.

A sample mammogram image [72].

The approach proposed by Zhao et al. involved identifying eligible patients, preparing image data, extracting labels and BI-RADS scores, and applying AlexNet, VGG, ResNet, and DenseNet models, of which DenseNet performed best. The evaluation was conducted under three settings: General (BI-RADS 1–5), False Positive Reduction (BI-RADS 4–5), and Difficult Cases (BI-RADS 0) [73]. Jamil et al. proposed a BC detection technique for mammography images that achieved an accuracy of 97.19% by applying three techniques in sequence: Log Ratio, Gabor Filter, and Fuzzy C-Means (FCM) [74]. Nemade et al. proposed an ensemble-based model for BC classification that uses VGG16, InceptionV3, and VGG19 as base learners and an artificial neural network for classification. The model achieved an accuracy of 98.10%, a specificity of 99.12%, and a sensitivity of 97.01% [75].
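Jamil et al. apply Fuzzy C-Means as the final step of their pipeline [74]. The sketch below illustrates only that clustering step on raw pixel intensities in NumPy; it does not reproduce the Log Ratio and Gabor stages, and the fuzzifier `m = 2`, the evenly spaced initial centers, and the synthetic test image are assumptions. Unlike k-means, each pixel receives a graded membership in every cluster.

```python
import numpy as np


def _memberships(x, centers, m):
    """FCM membership update: u_ik = 1 / sum_j (d_ik / d_jk) ** (2 / (m - 1))."""
    dist = np.abs(x[None, :] - centers[:, None]) + 1e-12  # (n_clusters, n_pixels); avoid /0
    inv = dist ** (-2.0 / (m - 1))
    return inv / inv.sum(axis=0)


def fuzzy_c_means(values, n_clusters=2, m=2.0, max_iter=100, tol=1e-6):
    """Fuzzy C-means on pixel intensities; returns (centers, memberships)."""
    x = np.asarray(values, dtype=float).ravel()
    # Assumption: deterministic start with centers spread over the intensity range
    centers = np.linspace(x.min(), x.max(), n_clusters)
    for _ in range(max_iter):
        u = _memberships(x, centers, m)
        um = u ** m
        new_centers = um @ x / um.sum(axis=1)  # membership-weighted cluster means
        converged = np.abs(new_centers - centers).max() < tol
        centers = new_centers
        if converged:
            break
    return centers, _memberships(x, centers, m)


# Usage: a bright square (candidate lesion) on a darker, noisy 64x64 background
rng = np.random.default_rng(1)
img = rng.normal(0.2, 0.05, size=(64, 64))
img[20:40, 20:40] += 0.6
centers, u = fuzzy_c_means(img, n_clusters=2)
bright = int(np.argmax(centers))
mask = (np.argmax(u, axis=0) == bright).reshape(img.shape)
print(centers, mask.sum())  # the mask should cover roughly the 400-pixel square
```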

Avci et al. [76] conducted five experiments using different preprocessing techniques. They applied six classifiers (SVM, Random Forest, ANN, KNN, Naive Bayes, and Decision Tree) and achieved 100% on all metrics (accuracy, sensitivity, specificity, and F1-score) with SVM and ANN when the mini-MIAS images were preprocessed using contrast limited adaptive histogram equalization (CLAHE) and unsharp masking (USM).
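The preprocessing credited with the 100% result in [76] combines CLAHE and unsharp masking. The NumPy sketch below illustrates both ideas with two labeled simplifications: contrast limiting is applied to a single global histogram rather than to interpolated tiles, and a 3×3 box blur stands in for the usual Gaussian blur. The parameter values are assumptions, not those of the cited study; production code would typically use library implementations such as OpenCV's `cv2.createCLAHE` or scikit-image's `exposure.equalize_adapthist` and `filters.unsharp_mask`.

```python
import numpy as np


def clip_limited_equalize(img, clip_limit=0.01):
    """Contrast-limited histogram equalization of a uint8 image (global, not tiled).

    Real CLAHE equalizes small tiles separately and blends them with bilinear
    interpolation; this sketch applies the clip-and-redistribute idea to one histogram.
    """
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    limit = clip_limit * img.size                  # max count allowed per intensity bin
    excess = np.clip(hist - limit, 0, None).sum()
    hist = np.minimum(hist, limit) + excess / 256  # redistribute clipped counts evenly
    cdf = np.cumsum(hist)
    cdf = (cdf - cdf[0]) / (cdf[-1] - cdf[0])      # normalize the mapping to [0, 1]
    return np.round(cdf[img] * 255).astype(np.uint8)


def unsharp_mask(img, amount=1.0):
    """Sharpen by adding back the detail removed by a 3x3 box blur (stand-in for a Gaussian)."""
    f = img.astype(float)
    h, w = f.shape
    padded = np.pad(f, 1, mode="edge")
    blurred = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0
    return np.clip(np.round(f + amount * (f - blurred)), 0, 255).astype(np.uint8)


# Usage on a synthetic low-contrast image with intensities 100-139
rng = np.random.default_rng(0)
low_contrast = rng.integers(100, 140, size=(64, 64), dtype=np.uint8)
equalized = clip_limited_equalize(low_contrast)
enhanced = unsharp_mask(equalized)
print(low_contrast.min(), low_contrast.max(), equalized.min(), equalized.max())
```

The clip limit is what keeps the mapping from stretching the narrow input range all the way to 0–255, which limits noise amplification.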

Yaqub et al. developed a two-stage system for BC diagnosis from mammogram images. The first stage segments images with the Atrous Convolution-based Attentive and Adaptive Trans-Res-UNet (ACA-ATRUNet) model, and the second stage detects cancer with the Atrous Convolution-based Attentive and Adaptive Multi-scale DenseNet (ACA-AMDN) model; hyperparameters are optimized with the Modified Mussel Length-based Eurasian Oystercatcher Optimization (MML-EOO) algorithm. On the MIAS dataset, the system achieved an accuracy of 89.13%, a specificity of 89.37%, a precision of 82.26%, and an F1-score of 85.36%; on the CBIS-DDSM dataset, it achieved an accuracy of 89.061%, a precision of 80.348%, and an F1-score of 84.424% [77]. In [78], EfficientNet achieved the highest accuracy, 98.29%, on the MaMaTT2 dataset. On the DDSM dataset, a CNN classifier with different fine-tuning strategies achieved an accuracy of 99.96%, a sensitivity of 100%, a precision of 99.92%, and an F1-score of 99.96% [79]. Additionally, the Inception Recurrent Residual Convolutional Neural Network (IRRCNN) achieved accuracies of 96.76% on the histopathological BreakHis dataset (×40 magnification) and 97.11% on the BC Classification Challenge 2015 dataset [10].
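The studies above report different combinations of accuracy, sensitivity, specificity, precision, and F1-score. All of them derive from the same confusion matrix, as the sketch below shows, taking malignant as the positive class (an assumption; the cited papers' conventions are not restated here). The counts in the usage example are hypothetical. A sensitivity of 1.0, as reported for DDSM in [79], means no malignant case was missed.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # malignant correctly flagged
    fp: int  # benign wrongly flagged as malignant
    tn: int  # benign correctly cleared
    fn: int  # malignant missed

    def metrics(self) -> dict:
        # Note: raises ZeroDivisionError if a class or prediction group is empty
        total = self.tp + self.fp + self.tn + self.fn
        sensitivity = self.tp / (self.tp + self.fn)  # recall / true positive rate
        specificity = self.tn / (self.tn + self.fp)  # true negative rate
        precision = self.tp / (self.tp + self.fp)    # positive predictive value
        f1 = 2 * precision * sensitivity / (precision + sensitivity)
        return {
            "accuracy": (self.tp + self.tn) / total,
            "sensitivity": sensitivity,
            "specificity": specificity,
            "precision": precision,
            "f1": f1,
        }


# Hypothetical counts for 200 test images
print(Confusion(tp=90, fp=10, tn=85, fn=15).metrics())
```

Because accuracy depends on the class balance of the test set, sensitivity and specificity are usually more informative when comparing screening models.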

Table 5 summarizes recently published research on mammography image-based studies.

Table 5.

Comparison of recent mammography-based breast cancer detection techniques.

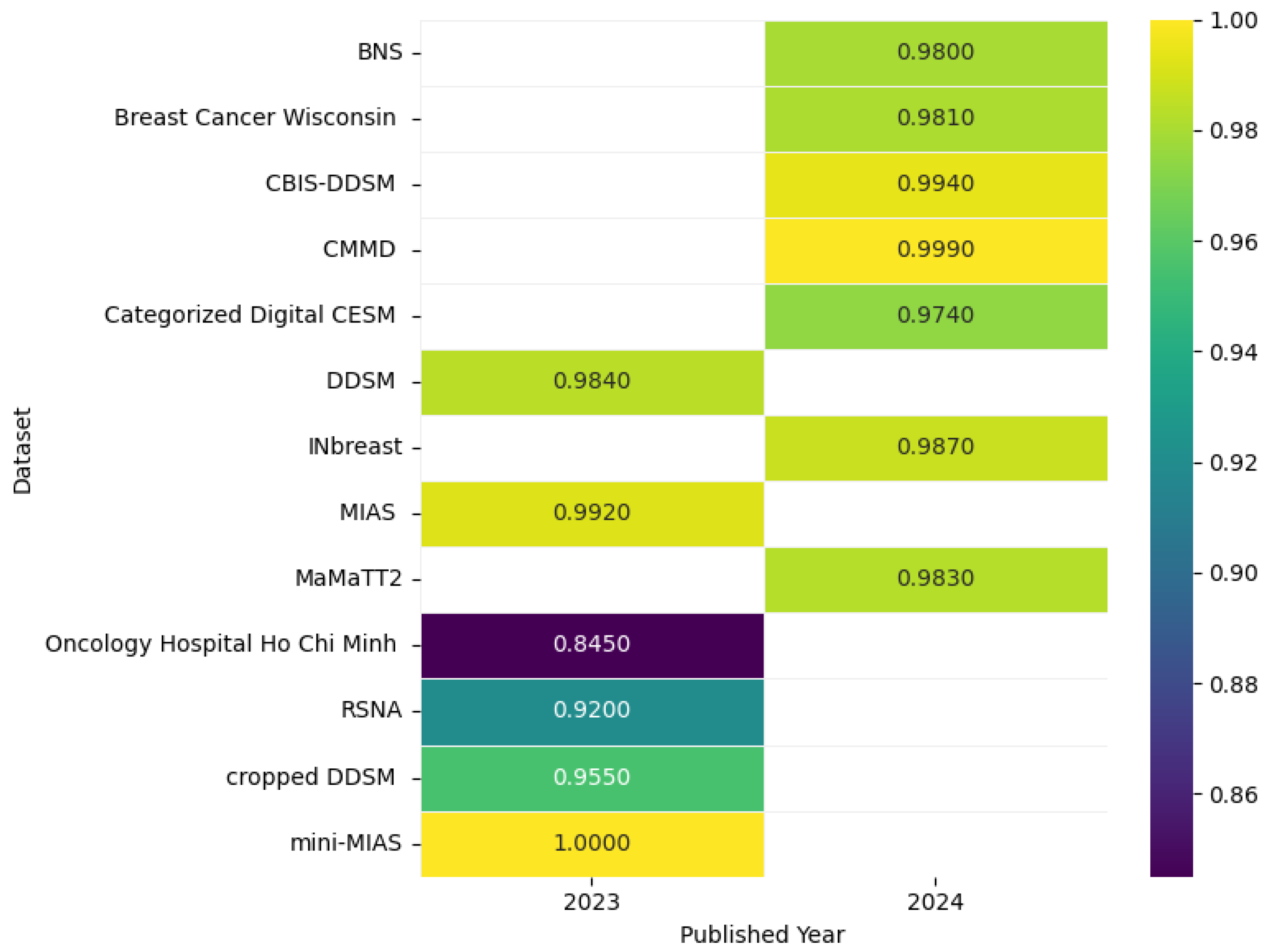

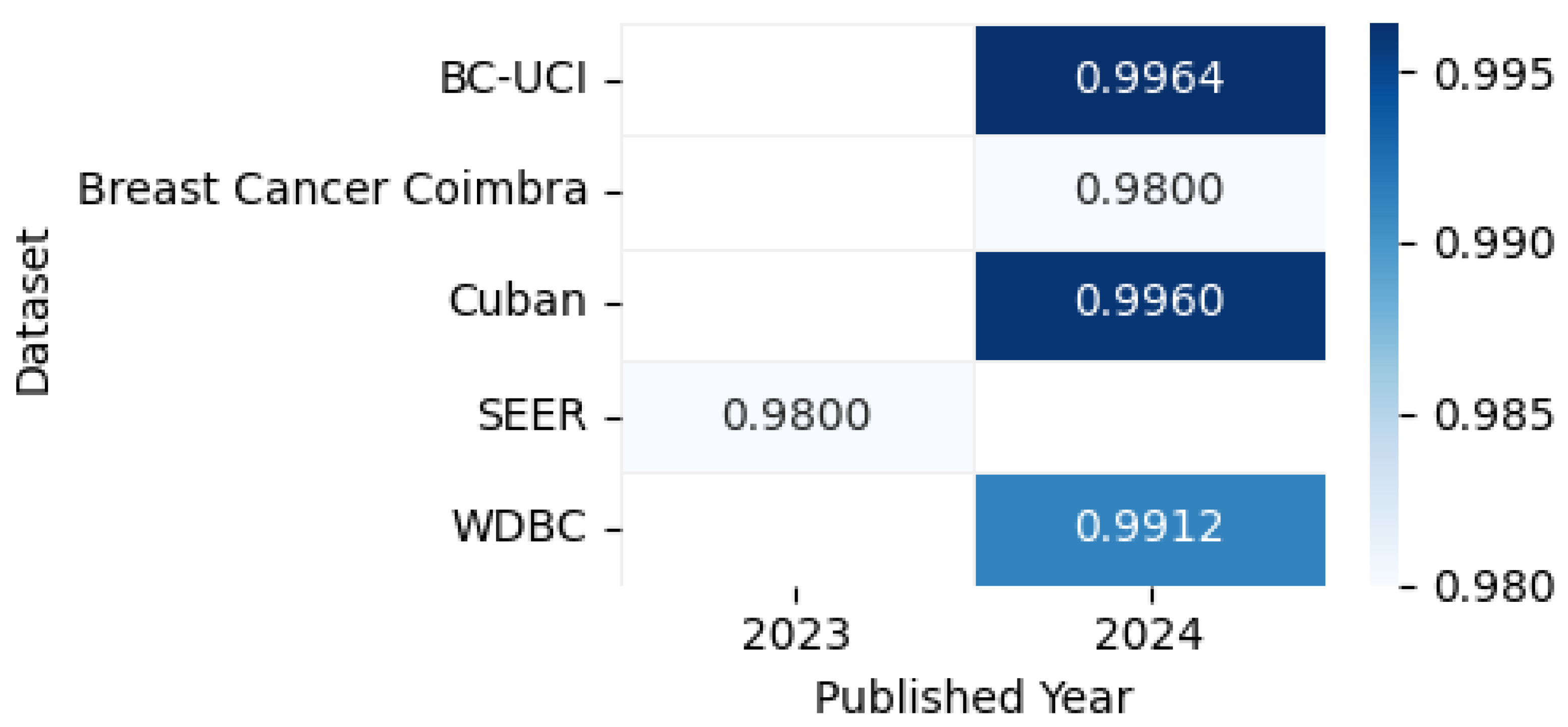

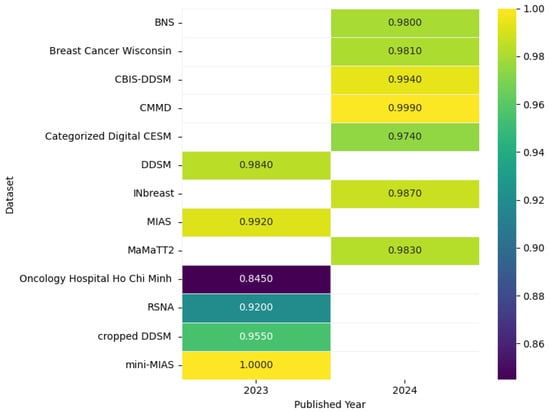

Figure 14 shows the highest accuracies reported in 2023 and 2024 for models applied to mammogram datasets, including CBIS-DDSM [80], CMMD [80], DDSM [81], INbreast [82], MIAS [81], mini-MIAS [76], Breast Cancer Wisconsin [83], Categorized Digital CESM [84], Oncology Hospital Ho Chi Minh [85], Cropped DDSM [86], MaMaTT2 [78], RSNA [87], and BNS [88], with color intensity in the figure indicating the accuracy level.

Figure 14.

Highest detection accuracy across multiple mammogram datasets for breast cancer.

8.1.1. Model Performance on Mammogram Images: Pros, Cons, and Future Directions

Table 6 provides an overview of the key benefits, limitations, and future research directions for leading mammogram-based breast cancer detection models, enabling easy comparison of their capabilities and areas for improvement.

Table 6.

Comprehensive overview of top-performing models on mammogram breast cancer datasets.

8.1.2. Summary

Several publicly available datasets, such as CBIS-DDSM, DDSM, MIAS, and INbreast, have been widely used for breast cancer (BC) detection from mammogram images. Recent studies have employed advanced machine learning and deep learning techniques, including DenseNet, VGG16, AlexNet, and InceptionV3, to achieve high accuracy and specificity in BC detection. Preprocessing methods like contrast limited adaptive histogram equalization (CLAHE) and unsharp masking (USM) have been utilized for image enhancement, while ensemble models and hybrid systems combining deep learning with traditional image processing techniques have also shown promising results. The highest reported accuracy reached 99.96% on the DDSM dataset. Table 7 presents a summary of key findings in mammogram-based breast cancer detection.

Table 7.

Summary of key findings in mammogram-based BC detection.
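The two enhancement steps named in the summary, CLAHE and unsharp masking (USM), can be illustrated with a short NumPy sketch. This is a simplification built on stated assumptions. `clip_limited_equalize` applies clip-limited histogram equalization to the whole image, which is the per-tile mapping inside CLAHE. Full CLAHE additionally splits the image into tiles and interpolates between their mappings, as OpenCV's `cv2.createCLAHE(clipLimit, tileGridSize)` does. The unsharp mask uses a box blur in place of the usual Gaussian blur. The clip fraction, radius, and amount are illustrative values, not those used in the cited studies.

```python
import numpy as np

def clip_limited_equalize(img: np.ndarray, clip_fraction: float = 0.01) -> np.ndarray:
    """Clip-limited histogram equalization of an 8-bit grayscale image.

    This is the per-tile mapping used inside CLAHE, applied globally here
    (no tiling and no interpolation between tiles).
    """
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    clip = max(1.0, clip_fraction * img.size)
    excess = np.clip(hist - clip, 0, None).sum()
    # Cap every bin at the clip limit and spread the excess evenly over all bins,
    # which limits how strongly any single intensity range is stretched.
    hist = np.minimum(hist, clip) + excess / 256.0
    cdf = np.cumsum(hist)
    span = cdf[-1] - cdf[0]
    if span <= 0:
        return img.copy()
    lut = np.round((cdf - cdf[0]) / span * 255).astype(np.uint8)
    return lut[img]

def box_blur(img: np.ndarray, radius: int = 1) -> np.ndarray:
    """Mean filter over a (2r+1) x (2r+1) window with edge padding."""
    k = 2 * radius + 1
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def unsharp_mask(img: np.ndarray, amount: float = 1.0, radius: int = 1) -> np.ndarray:
    """Unsharp masking: add back amount * (image - blurred image)."""
    f = img.astype(np.float64)
    sharpened = f + amount * (f - box_blur(img, radius))
    return np.clip(np.round(sharpened), 0, 255).astype(np.uint8)

# Synthetic low-contrast 64 x 64 "mammogram" patch (placeholder data).
rng = np.random.default_rng(0)
mammogram = rng.integers(60, 120, size=(64, 64), dtype=np.uint8)
enhanced = unsharp_mask(clip_limited_equalize(mammogram))
```

The clip limit is what separates this from plain histogram equalization. It caps how much any crowded intensity range can be stretched, which keeps noise in homogeneous breast tissue from being over-amplified.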

8.1.3. Analysis

The application of deep learning models, particularly CNNs and ensemble models, has significantly improved BC detection performance. Preprocessing techniques like CLAHE and USM, combined with classifiers such as SVM and ANN, have enabled researchers to achieve near-perfect metrics. Advanced architectures like DenseNet and EfficientNet have demonstrated robust performance across multiple datasets, including DDSM, MIAS, and CBIS-DDSM. Hybrid systems, such as those combining deep learning with traditional image processing techniques (e.g., Log Ratio, Gabor Filter, and FCM), have also shown remarkable accuracy. Table 8 provides an analysis of techniques and their impact based on mammogram-based BC detection.

Table 8.

Analysis of techniques and their impact based on mammogram-based BC detection.
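The ensemble models discussed in the analysis combine the predictions of several base classifiers. The snippet below is a minimal soft-voting sketch. It assumes each base model already outputs per-class probabilities. The model names and probability values are hypothetical placeholders, not results from any cited study, and soft voting is only one of several fusion rules used in the literature.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Weighted average of per-class probabilities from several models.

    prob_list: list of arrays, each of shape (n_samples, n_classes).
    Returns (predicted labels, fused probabilities).
    """
    probs = np.stack(prob_list)                        # (n_models, n_samples, n_classes)
    w = np.ones(len(prob_list)) if weights is None else np.asarray(weights, dtype=np.float64)
    fused = np.tensordot(w / w.sum(), probs, axes=1)   # weighted mean over models
    return fused.argmax(axis=1), fused

# Hypothetical probabilities for two cases; classes = [benign, malignant].
p_densenet = np.array([[0.9, 0.1], [0.4, 0.6]])
p_vgg16 = np.array([[0.6, 0.4], [0.3, 0.7]])
p_inception = np.array([[0.2, 0.8], [0.55, 0.45]])
labels, fused = soft_vote([p_densenet, p_vgg16, p_inception])
```

Averaging probabilities rather than counting hard votes lets a confident model outweigh two uncertain ones. The optional `weights` argument allows stronger models to count more.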

8.1.4. Critique

While current models demonstrate impressive performance, several limitations need to be addressed. First, model interpretability, which is critical for clinical acceptance, remains limited. Second, the ability of models to generalize across diverse datasets and imaging conditions is often untested, raising concerns about their real-world applicability. Third, the limited diversity of datasets (e.g., narrow representation of populations and imaging devices) may lead to overfitting and reduced robustness. Table 9 presents a critique of current limitations.

Table 9.

Critique of current limitations based on mammogram-based BC detection.

8.1.5. Comparison

The results of various studies highlight differing approaches and outcomes. For instance, Yaqub et al. (2024) [77] achieved 89.13% accuracy on the MIAS dataset using a two-stage system, while Nemade et al. (2024) [75] reported 98.10% accuracy with an ensemble model. Avci et al. (2023) [76] demonstrated the importance of preprocessing, achieving 100% accuracy with CLAHE, USM, SVM, and ANN. EfficientNet, as used by Dada et al. (2024) [78], outperformed other models with 98.29% accuracy on the MaMaTT2 dataset. These comparisons underscore the importance of dataset quality, preprocessing, and algorithm choice in achieving high detection accuracy. Table 10 provides a comparison of key studies.

Table 10.

Comparison of key studies based on mammogram-based BC detection.

The advancements in mammogram-based BC detection are promising, with deep learning models and preprocessing techniques driving significant improvements in accuracy and performance. However, challenges related to interpretability, generalization, and dataset diversity must be addressed to ensure these models are clinically viable and applicable across diverse populations and imaging conditions. Future research should focus on developing more interpretable models, expanding dataset diversity, and testing models in real-world clinical settings.

While the best mammography models achieve >99% accuracy on curated datasets (Table 5), two critical gaps persist: (1) performance drops significantly on dense breast tissue; and (2) most studies lack real-world testing within radiologist workflows.

8.2. Ultrasound Image-Based Breast Cancer Detection

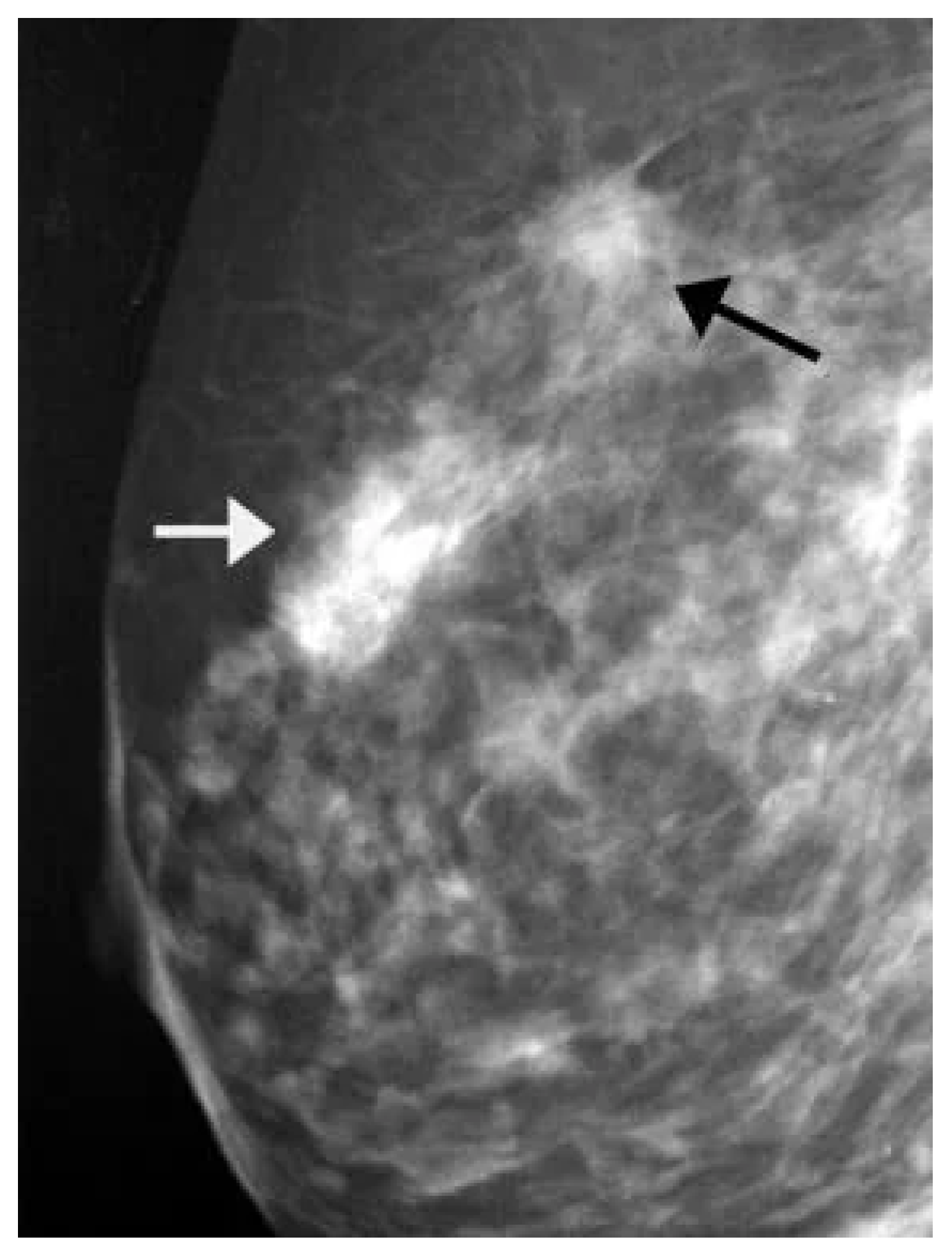

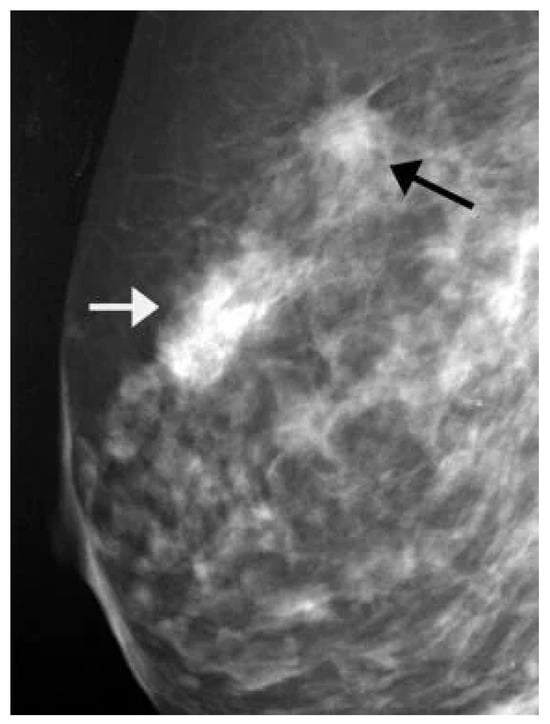

Ultrasound imaging visualizes internal structures using high-frequency sound waves. Several publicly available datasets exist for ultrasound image-based BC detection, including the Breast Ultrasound Images (BUSI) dataset [34], BUS-BRA [89], BUS-UCLM [90], and Breast-Lesions-USG [91]. Figure 15 presents a sample ultrasound image with an arrow-marked region highlighting a potential abnormality for further examination.

Figure 15.

A sample ultrasound image [92].

The BUSI dataset was created in 2018 and includes 780 images from 600 female patients aged 25–75 years, categorized as normal, benign, or malignant. The images are PNG files with an average size of 500 × 500 pixels and are accompanied by ground truth masks. The BUS-BRA dataset features 1875 anonymized images from 1064 patients in Brazil, including biopsy-proven cases (722 benign and 342 malignant), BI-RADS assessments, manual segmentations, and cross-validation partitions that standardize the evaluation of CAD systems. The BUS-UCLM dataset, collected between 2022 and 2023, contains 683 images with RGB segmentation masks for normal, benign, and malignant cases, supporting the development of machine learning models for detecting and diagnosing breast lesions. Lastly, the Breast-Lesions-USG dataset offers 256 scans with annotated tumors, BI-RADS classifications, and histopathological diagnoses. It can serve as an external test set and supports AI development in BC research.
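Because BUS-BRA contains more images (1875) than patients (1064), images from the same patient should stay on the same side of any train/test split. Otherwise, near-duplicate views leak between partitions and inflate accuracy. The sketch below assigns whole patients to k folds. It assumes each image carries a patient identifier, and the IDs in the example are hypothetical. In practice, scikit-learn's `GroupKFold` provides the same guarantee.

```python
import random
from collections import defaultdict

def patient_grouped_folds(patient_ids, n_folds=5, seed=0):
    """Assign each image to a fold so that all images of one patient share a fold.

    patient_ids: list where patient_ids[i] is the patient of image i.
    Returns a list of fold indices, one per image.
    """
    by_patient = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        by_patient[pid].append(idx)
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)
    fold_of_image = [0] * len(patient_ids)
    for k, pid in enumerate(patients):
        for idx in by_patient[pid]:
            fold_of_image[idx] = k % n_folds   # round-robin over shuffled patients
    return fold_of_image

# Hypothetical image-to-patient mapping.
ids = ["P1", "P1", "P2", "P3", "P3", "P3", "P4", "P5"]
folds = patient_grouped_folds(ids, n_folds=2)
for k in range(2):
    test = [i for i, f in enumerate(folds) if f == k]
    train = [i for i, f in enumerate(folds) if f != k]
    assert not {ids[i] for i in test} & {ids[i] for i in train}   # no patient in both
```

Round-robin assignment balances the number of patients per fold, not the number of images. Stratifying by diagnosis would be a natural refinement.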

Mehedi et al. introduced a DL strategy for BC detection from ultrasound images using a publicly available breast ultrasound dataset from Rodrigues [93] that includes 250 images (100 benign and 150 malignant cases). They used Grad-CAM and occlusion mapping to evaluate feature extraction and developed a custom CNN model with fewer parameters for improved efficiency. For classification, they applied DenseNet201, ResNet50, and VGG16, achieving 100% accuracy after fine-tuning on this dataset. DenseNet201 and ResNet50 performed best with the Adam and RMSprop optimizers, while VGG16 reached 100% accuracy with stochastic gradient descent. The original images, which range in size from 57 × 75 to 161 × 199 pixels, were resized to 224 × 224, 227 × 227, and 299 × 299 for the different pre-trained models [94].
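Several of the ultrasound studies reviewed here use Grad-CAM for interpretability. Grad-CAM weights each channel of a convolutional feature map by the spatially averaged gradient of the target-class score, then keeps the positive part of the weighted sum. The NumPy sketch below covers only that computation. It assumes the activations (channels × height × width) and the gradients of the class score with respect to them have already been extracted from a DL framework. The arrays in the example are synthetic.

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    activations, gradients: arrays of shape (C, H, W), where gradients are
    d(class score) / d(activations). Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))              # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)   # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                         # ReLU keeps positively contributing regions
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Synthetic example: channel 0 responds to a small "lesion", channel 1 to background.
acts = np.zeros((2, 4, 4))
acts[0, 1, 2] = 4.0
acts[1] = 1.0
grads = np.stack([np.full((4, 4), 1.0), np.full((4, 4), -0.5)])
heatmap = grad_cam(acts, grads)
```

In a full pipeline, the low-resolution map would then be upsampled to the input size (e.g., 224 × 224) and overlaid on the ultrasound image. That step is omitted here.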

The DeepBreastCancerNet model consists of 24 layers, including six convolutional layers, nine inception modules, and a fully connected layer. It achieved 99.35% accuracy on the first ultrasound dataset [34] and 99.63% on a second publicly available dataset [93], demonstrating its robustness. The researchers applied transfer learning, which reuses weights learned by pre-trained models and thereby reduces overfitting on small datasets. This improved detection accuracy and allowed the model to surpass state-of-the-art models in BC classification. The model uses clipped ReLU and leaky ReLU activation functions, along with batch and cross-channel normalization, which improved its performance on both datasets [95].
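Clipped ReLU, leaky ReLU, and cross-channel normalization are standard layer types. The NumPy definitions below follow their usual formulations. The ceiling, leak scale, and normalization constants are illustrative assumptions, not values taken from the cited paper. The normalization uses window 5, k = 2, alpha = 1e-4, and beta = 0.75, the AlexNet-style form in which the sum of squares is not divided by the window size. Some toolboxes divide alpha by the window size, so the constants are not interchangeable across implementations.

```python
import numpy as np

def clipped_relu(x, ceiling=6.0):
    """max(0, x), capped at `ceiling`."""
    return np.clip(x, 0.0, ceiling)

def leaky_relu(x, scale=0.01):
    """x for x > 0, scale * x otherwise."""
    return np.where(x > 0, x, scale * x)

def cross_channel_norm(x, window=5, k=2.0, alpha=1e-4, beta=0.75):
    """Local response normalization across channels for x of shape (C, H, W).

    Each activation is divided by (k + alpha * sum of squares over `window`
    neighbouring channels, centred on its own channel) ** beta.
    """
    c = x.shape[0]
    half = window // 2
    sq = x.astype(np.float64) ** 2
    out = np.empty(x.shape, dtype=np.float64)
    for i in range(c):
        lo, hi = max(0, i - half), min(c, i + half + 1)
        out[i] = x[i] / (k + alpha * sq[lo:hi].sum(axis=0)) ** beta
    return out
```

Clipping bounds activations, and the leaky slope keeps gradients flowing for negative inputs. Cross-channel normalization damps locations where many neighbouring channels fire strongly at once.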

A study of 603 patients from three institutions (2018–2021) trained and validated four deep convolutional neural networks (DCNNs) on ultrasound images (420 training and 183 validation cases) to predict response to neoadjuvant chemotherapy (NAC). ResNet50, the best image-only model, achieved an AUC of 0.879 and an accuracy of 82.5%. When combined with clinical–pathologic variables, the integrated deep learning radiomics (DLR) model outperformed both the image-only and clinical models, achieving AUCs of 0.962 and 0.939 in the training and validation cohorts, respectively. It also surpassed radiologists' predictions and improved their accuracy when used as a support tool [96].

Another study introduced a novel ROI-free system for BC diagnosis from ultrasound images. The system incorporates prior anatomical knowledge of the spatial relationships between malignant and benign tumors, captured through the HoVer-Transformer. This model extracts inter- and intra-layer spatial information both horizontally and vertically. It was evaluated on the GDPH&SYSUCC dataset and compared with four CNN-based and three vision transformer models. The proposed model achieved state-of-the-art results, with an AUC of 0.924, an accuracy of 0.893, a specificity of 0.836, and a sensitivity of 0.926, outperforming two senior sonographers in BC diagnosis [97].
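Most studies in this section report the area under the ROC curve (AUC). The AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, with ties counted as one half (the Mann–Whitney formulation). The dependency-free sketch below computes it directly from that definition. The labels and scores in the example are made up.

```python
def roc_auc(labels, scores):
    """AUC = P(score_pos > score_neg) + 0.5 * P(tie), by pairwise comparison.

    O(n_pos * n_neg); fine for illustration, not for very large test sets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Made-up scores: 1 = responder/malignant, 0 = otherwise.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.2]
auc = roc_auc(labels, scores)   # 8 of the 9 positive/negative pairs are ranked correctly
```

Because AUC depends only on the ranking of scores, it is threshold-free. This is why it is often reported alongside threshold-dependent metrics such as accuracy and sensitivity.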

Madhusudan et al. presented a method for BC detection from ultrasound images (USIs) [98]. The method combines transfer learning (TL) models (MobileNetV2, ResNet50, and VGG16) with an LSTM for feature extraction and uses SMOTETomek for class balancing. With VGG16, it achieved an F1-score of 99.0%, MCC and kappa coefficients of 98.9%, and an AUC of 1.0. Under K-fold cross-validation, the same method achieved an average F1-score of 96%. Grad-CAM and LIME were applied for visualization and interpretability. Confidence intervals were calculated via NAI (LCI 96.50%, UCI 99.75%, MCI 98.13%) and bootstrapping (LCI 93.81%, UCI 96.00%, MCI 94.90%) [99]. Rao et al. proposed Inception V3 + Stacking, a model that combines transfer learning with ensemble stacking of ML models (including an MLP and SVMs with RBF and polynomial kernels), and applied it to the ultrasound breast cancer (USBC) image dataset. The model achieved an AUC of 0.947 and an accuracy of 0.858, outperforming existing diagnostic systems [100].
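A bootstrapped confidence interval, like the one reported by Madhusudan et al., can be obtained with a percentile bootstrap. This involves resampling the test cases with replacement, recomputing the metric each time, and taking empirical quantiles. The sketch below uses accuracy, 2,000 resamples, and a 95% level as illustrative choices. It does not reproduce the cited study's exact procedure or its "NAI" interval, and the predictions are synthetic.

```python
import numpy as np

def bootstrap_ci(y_true, y_pred, metric, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate and percentile-bootstrap CI for metric(y_true, y_pred)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)   # resample n test cases with replacement
        stats[b] = metric(y_true[idx], y_pred[idx])
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2])
    return metric(y_true, y_pred), lower, upper

def accuracy(y_true, y_pred):
    return float(np.mean(y_true == y_pred))

# Synthetic test set: 100 cases, 10 misclassified -> 90% accuracy.
y_true = np.array([0] * 50 + [1] * 50)
y_pred = y_true.copy()
y_pred[:10] = 1 - y_pred[:10]
point, lo, hi = bootstrap_ci(y_true, y_pred, accuracy)
```

On a test set of about 100 images, even a 90% accuracy carries an interval several points wide. This is one reason single-dataset results near 100% should be read with caution.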

Appiah et al. explored the use of DL for classifying BC from ultrasound images, focusing on low- and middle-income countries (LMIC) with limited healthcare access. Their aim was to deploy a simple classifier on a mobile device using affordable handheld ultrasound systems to identify cases needing medical attention. Their model achieved 64% accuracy when trained from scratch and 78% accuracy after pre-training, highlighting DL’s potential to aid early BC detection with minimal image data [101]. Table 11 provides a summary of recent research on ultrasound image-based studies.

Table 11.

Comparison of recent ultrasound-based breast cancer detection techniques.

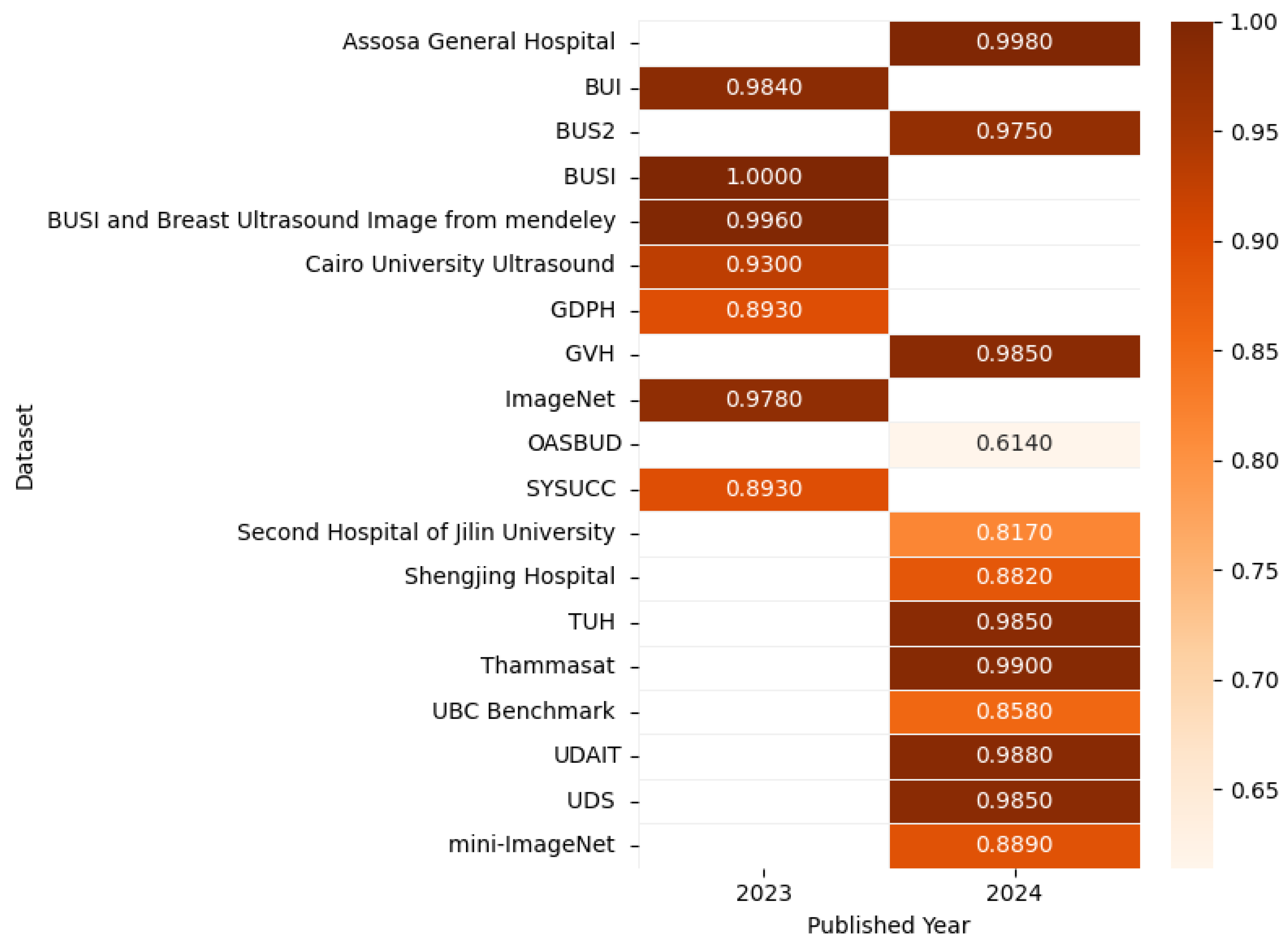

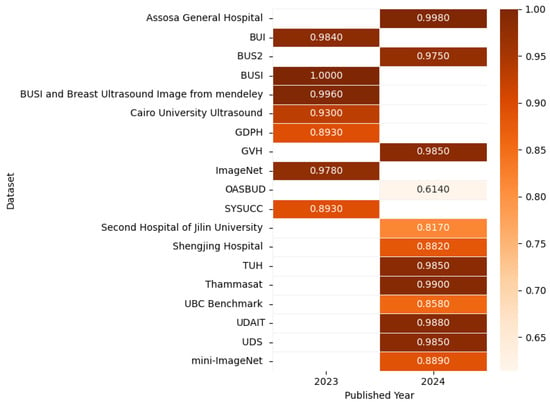

Figure 16 presents the highest accuracy achieved on each ultrasound dataset in studies published in 2023 and 2024, including UBC Benchmark [100], BUSI [102], BUS2 [103], UDAIT [104], multiple hospital datasets, and others [95,104,105,106,107,108,109,110,111,112,113,114], as reported in the referenced studies. In the accompanying correlation figure, color intensity encodes the accuracy level.

Figure 16.

Highest detection accuracy across multiple ultrasound datasets for breast cancer.

8.2.1. Model Performance on Ultrasound Images: Pros, Cons, and Future Directions

Table 12 summarizes the advantages, disadvantages, and future work recommendations for top-performing models in ultrasound-based breast cancer detection, facilitating direct comparison of their strengths and development needs.

Table 12.

Comprehensive overview of top-performing models on ultrasound breast cancer datasets.

8.2.2. Summary

Ultrasound imaging is a widely used modality for breast cancer (BC) detection, supported by publicly available datasets such as BUSI, BUS-BRA, BUS-UCLM, and Breast-Lesions-USG. Recent studies have employed advanced deep learning (DL) models, including CNNs, transfer learning, and hybrid approaches, to achieve high accuracy and AUC scores. Notable models like DeepBreastCancerNet, HoVer-Transformer, and VGG16-LSTM have demonstrated exceptional performance. Additionally, interpretability techniques such as Grad-CAM and LIME have been integrated to enhance clinical applicability. Despite these advancements, challenges remain in standardizing image preprocessing, improving dataset diversity, and enabling real-world deployment. Table 13 presents a summary of key findings in ultrasound-based breast cancer detection.

Table 13.

Summary of key findings in ultrasound-based BC detection.

8.2.3. Analysis

The studies reviewed demonstrate the effectiveness of DL models, particularly those leveraging transfer learning and ensemble techniques, in classifying BC from ultrasound images. Models like ResNet50, DenseNet201, and VGG16 achieve near-perfect accuracy when fine-tuned, with some studies reporting 100% accuracy. The integration of clinical–pathologic variables further enhances predictive performance, as seen in the integrated DLR model, which achieved an AUC of 0.962. However, dataset limitations, such as small sample sizes and inconsistent imaging protocols, hinder model generalizability. Additionally, the focus on real-time deployment in resource-limited settings highlights the need for computationally efficient models. Table 14 provides an analysis of techniques and their impact based on ultrasound-based breast cancer detection.

Table 14.

Analysis of techniques and their impact in ultrasound-based BC detection.

8.2.4. Critique

While the reported accuracies are impressive, several limitations warrant attention. First, the lack of cross-dataset validation raises concerns about model overfitting and generalizability. Many studies rely on a single dataset, which may not represent diverse populations or imaging conditions. Second, although interpretability techniques like Grad-CAM provide insights into model decisions, they are often insufficient for full clinical trust. Third, models achieving near-100% accuracy may indicate potential biases or dataset-specific overfitting, necessitating independent validation. Finally, the absence of standardized imaging protocols and the limited diversity of datasets hinder the development of robust models. Table 15 presents a critique of current limitations in ultrasound-based breast cancer detection.

Table 15.

Critique of current limitations in ultrasound-based breast cancer detection.

8.2.5. Comparison

The reviewed studies demonstrate varying approaches and outcomes, as summarized in Table 16. DeepBreastCancerNet [95] reached 99.63% accuracy on a second public dataset, HoVer-Transformer [97] achieved an AUC of 0.924, and the VGG16-LSTM method [99] reported an AUC of 1.0 and an F1-score of 99%. Because these studies report different metrics on different datasets, their figures are not directly comparable. The integrated DLR model [96] outperformed image-only approaches by incorporating clinical features, achieving an AUC of 0.962. In contrast, simpler models designed for mobile devices, such as the one proposed by Appiah et al. [101], achieved lower accuracy (78%), highlighting the trade-off between computational efficiency and predictive performance. These comparisons underscore the importance of dataset quality, preprocessing, and algorithm choice in achieving high detection accuracy.

Table 16.

Comparison of key studies in ultrasound-based breast cancer detection.

Ultrasound-based BC detection has seen significant advancements through the application of deep learning models, transfer learning, and hybrid approaches. While models like DeepBreastCancerNet and HoVer-Transformer demonstrate exceptional performance, challenges related to dataset diversity, interpretability, and real-world deployment remain. Future research should focus on cross-dataset validation, standardized imaging protocols, and the development of computationally efficient models for resource-limited settings. Addressing these challenges will enhance the clinical applicability and robustness of ultrasound-based BC detection systems.

Current ultrasound DL models exhibit two key limitations: (1) overreliance on small, single-center datasets (Table 11) leading to poor generalization, (2) lack of standardized evaluation metrics for clinically crucial tasks like tumor boundary delineation.

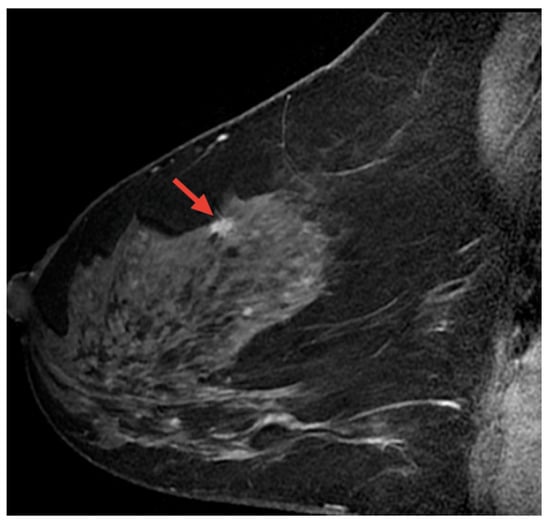

8.3. Breast Cancer Detection Based on Magnetic Resonance Imaging (MRI) Images

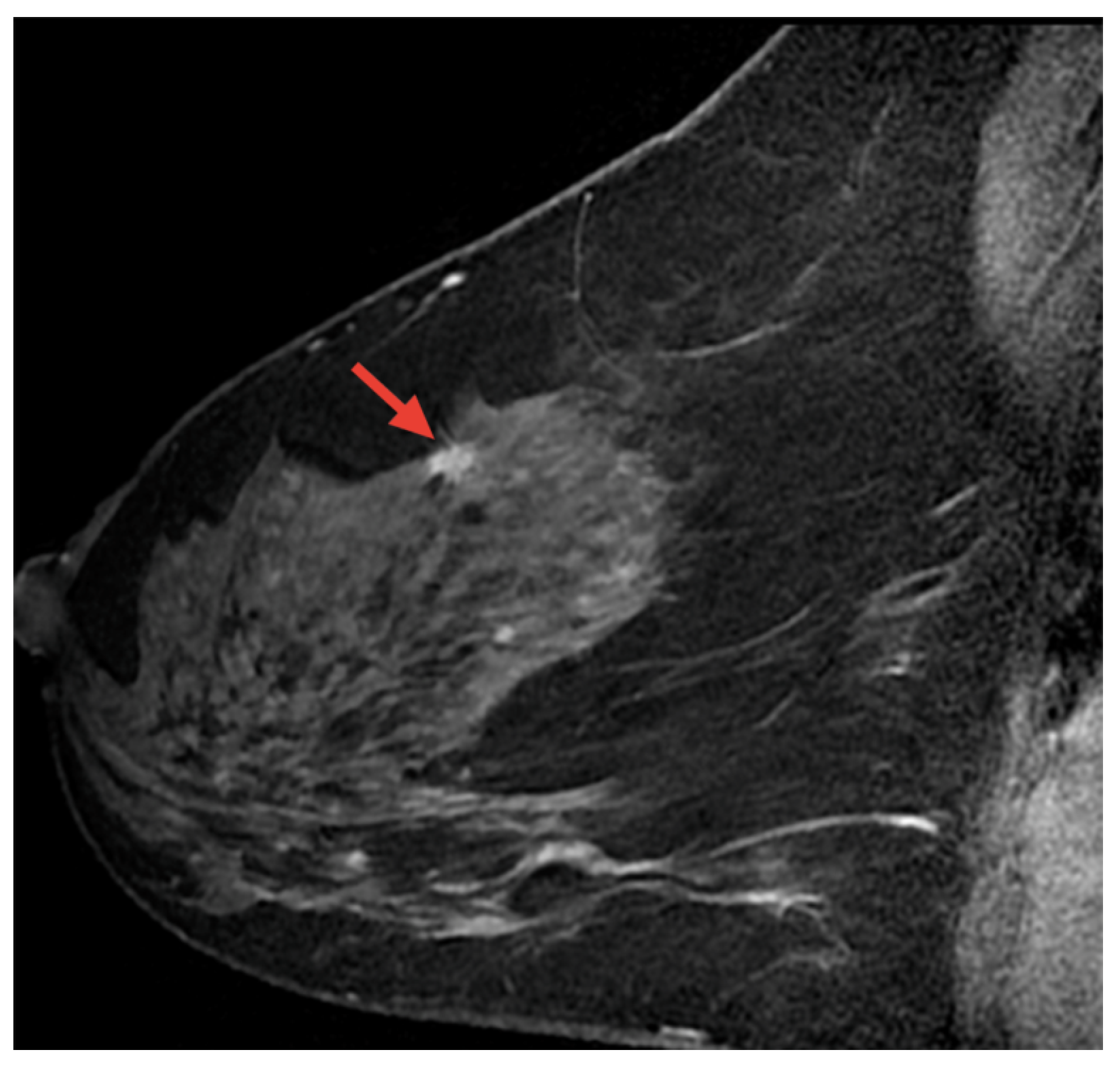

Magnetic resonance imaging (MRI) is a safe, non-invasive technique that uses magnetic fields and radio waves to produce detailed internal body images. MRI is employed in the diagnosis and monitoring of conditions such as soft tissue abnormalities, tumors, and brain and spinal disorders. This section highlights AI-based methods for BC detection using MRI images [115]. Figure 17 displays a sample MRI image with a highlighted area, marked by the red arrow, indicating a potential abnormality for further examination.

Figure 17.

A sample MRI image [92,116].

Zhang et al. developed radiomics models for diagnosing BC using several MRI modalities (T1WI, T2WI, DWI, ADC, and DCE). Radiomic features were extracted from plain, contrast-enhanced, and diffusion-weighted scans and analyzed with DL for automatic feature extraction and classification. Combining these modalities significantly improved diagnostic performance, with the highest AUC (0.927) obtained from the combination of plain-scan, contrast-enhanced, and diffusion sequences [117]. Liu et al. conducted an IRB-approved study using a weakly supervised ResNet-101-based network to classify malignant and benign images from a dataset of 278,685 image slices from 438 patients. The slices were grouped into 92,895 three-channel images, 85% of which were used for training and validation. The model was trained with the Adam optimizer, and a softmax score threshold of 0.5 was used for classification. It achieved an AUC of 0.92 (±0.03), an accuracy of 94.2% (±3.4), a sensitivity of 74.4% (±8.5), and a specificity of 95.3% (±3.3). These results highlight the model's ability to distinguish malignant from benign images [118].
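Liu et al. grouped 278,685 slices into 92,895 three-channel images, i.e., three slices per image (278,685 / 3 = 92,895). This lets 2D networks pre-trained on RGB images consume slice stacks directly. The sketch below shows one way to do this. It assumes that consecutive slices of a volume are stacked along the channel axis, a detail not specified above. The toy volume stands in for real MRI data.

```python
import numpy as np

def slices_to_three_channel(volume: np.ndarray) -> np.ndarray:
    """Group consecutive slices of a (num_slices, H, W) volume into (num_slices // 3, H, W, 3).

    Trailing slices that do not fill a complete group of three are dropped.
    """
    n = (volume.shape[0] // 3) * 3
    groups = volume[:n].reshape(-1, 3, *volume.shape[1:])   # (groups, 3, H, W)
    return np.moveaxis(groups, 1, -1)                        # channels last: (groups, H, W, 3)

# Toy 7-slice volume of 2 x 2 "images"; the 7th slice is dropped.
vol = np.arange(7 * 2 * 2).reshape(7, 2, 2)
rgb_like = slices_to_three_channel(vol)   # shape (2, 2, 2, 3)
```

Stacking neighbouring slices also gives the 2D network a small amount of through-plane context that a single slice lacks.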

Yunan et al. developed a CNN-based computer-aided diagnostic tool for BC using DCE-MRI images, incorporating tumor geometric and pharmacokinetic features for classification. In a study of 130 patients (71 malignant and 59 benign cases), the model achieved an accuracy of 87.7%, a precision of 91.2%, a sensitivity of 86.1%, and an AUC of 91.2% (±4.0%) across five-fold testing. Prediction probabilities, feature heatmaps, and dynamic scan time points were used to support confidence in each classification and to minimize misclassification [119]. Yue et al. analyzed 516 BC patients using a 3D UNet-based automatic segmentation model to extract 1316 radiomics features from regions of interest. The segmentation achieved an average Dice similarity coefficient of 0.89, and eighteen radiomics pipelines combining feature selection methods and classifiers were compared for model selection. The radiomics models effectively predicted four molecular subtypes, with the best model achieving an AUC of 0.8623, an accuracy of 0.6596, a sensitivity of 0.6383, and a specificity of 0.8775. For individual subtypes, AUC values ranged from 0.8676 to 0.9335, with specificity reaching 0.9865 [120]. Qian et al. used CIBERSORT to analyze immune cell infiltration in patients with breast invasive carcinoma (BRCA) from the TCGA database. They applied univariate and multivariate Cox regression to identify M2 macrophages as an independent prognostic factor (HR = 32.288). They also obtained imaging data from the TCIA database and constructed MRI-based radiomics models from key features selected via LASSO. Of the intratumoral, peritumoral, and combined models, the peritumoral model performed best, achieving an accuracy of 0.773, a sensitivity of 0.727, and a specificity of 0.818 [121].
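Accuracy, precision, sensitivity, and specificity recur throughout these MRI studies, and they can diverge sharply under class imbalance. Liu et al., for example, report 94.2% accuracy alongside 74.4% sensitivity. The helper below computes the standard definitions, plus F1, MCC, and Cohen's kappa, from the four confusion-matrix counts. The counts in the example are invented to show the effect of imbalance.

```python
import math

def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard metrics from a 2 x 2 confusion matrix (positive = malignant)."""
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0   # recall, true-positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0   # true-negative rate
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    # Chance agreement: P(pred pos) * P(true pos) + P(pred neg) * P(true neg).
    p_e = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (accuracy - p_e) / (1 - p_e) if p_e != 1 else 0.0
    return {"accuracy": accuracy, "precision": precision, "sensitivity": sensitivity,
            "specificity": specificity, "f1": f1, "mcc": mcc, "kappa": kappa}

# Invented counts: 20 malignant and 180 benign cases.
m = binary_metrics(tp=15, fp=10, fn=5, tn=170)
```

Here accuracy is 92.5% even though a quarter of the malignant cases are missed. Kappa and MCC, which correct for the dominant benign class, are noticeably lower.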

Guo et al. proposed an MRI-based deep learning radiomics (DLR) model to predict HER2-low-positive status in BC patients and evaluate its prognostic value. Using features from traditional radiomics and deep semantic segmentation, the model achieved AUCs of 0.868 and 0.763 for distinguishing HER2-negative from HER2-overexpressing patients. For differentiating HER2-low-positive from HER2-zero patients, it achieved AUCs of 0.855 and 0.750. These figures refer to the training and validation cohorts, respectively [122]. Chao et al. used 569 local cases and 125 external cases for lesion classification, employing T1-weighted imaging, DCE-MRI, T2WI, and diffusion-weighted imaging. Their cascaded CNN-LSTM network achieved AUCs of 0.98 and 0.91 and sensitivities of 0.96 and 0.83 in the internal and external cohorts, respectively. The DL model outperformed radiologists without DCE-MRI (AUC 0.96 vs. 0.90), and lesion localization achieved sensitivities of 0.97 and 0.93 with DCE-MRI and T2WI, respectively [123]. Liang et al. proposed a DL model (MLP-radiomic) using MRI radiomics and clinical radiological features. It achieved an AUC of 0.896 in predicting lymphovascular invasion in BC patients, outperforming conventional ML models [124]. Table 17 provides an overview of studies conducted using MRI image-based BC detection.

Table 17.

Comparison of recent MRI image-based techniques for breast cancer detection.

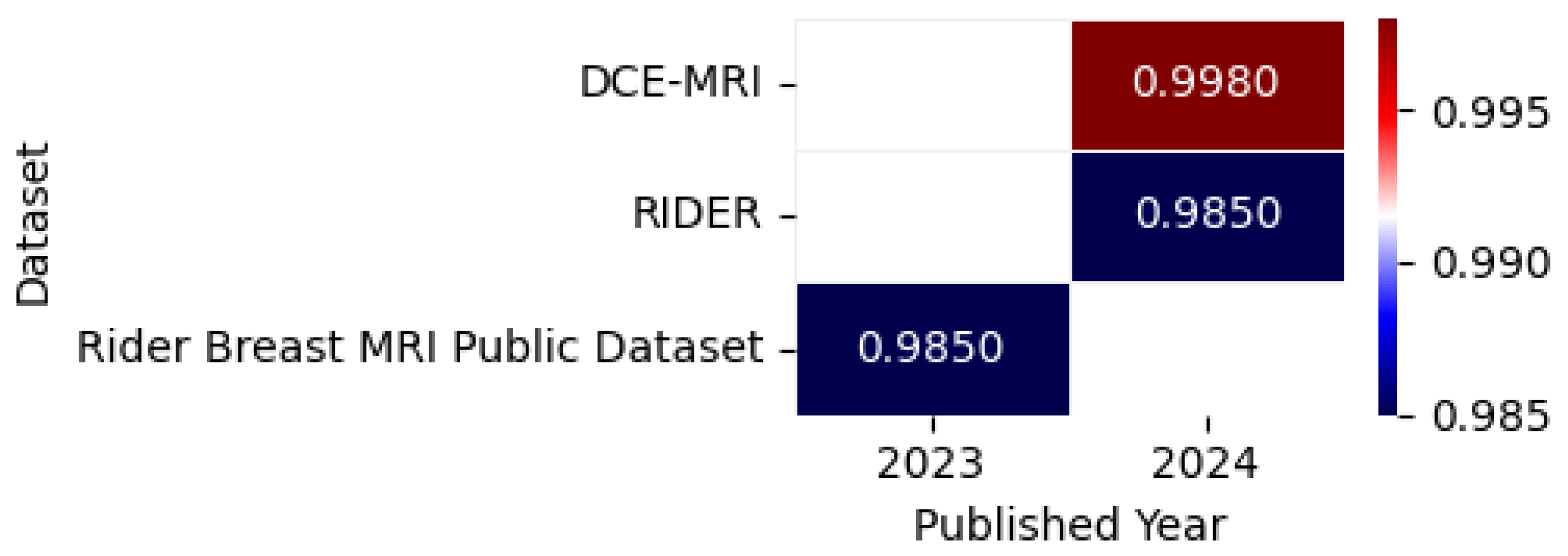

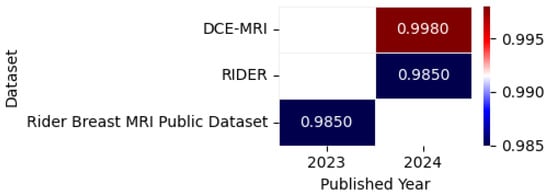

Figure 18 illustrates the highest accuracy rates obtained by various models on MRI datasets in 2023 and 2024, including datasets such as RIDER [125], DCE-MRI [126], and Rider Breast MRI Public Dataset [127], as detailed in the referenced studies, accompanied by a correlation figure that represents accuracy levels through color intensity.

Figure 18.

Highest detection accuracy across multiple MRI datasets for breast cancer.

8.3.1. Model Performance on MRI Images: Pros, Cons, and Future Directions

Table 18 provides an overview of the key benefits, limitations, and future research directions for leading MRI-based breast cancer detection models, enabling easy comparison of their capabilities and areas for improvement.

Table 18.

Comprehensive overview of top-performing models on MRI breast cancer datasets.

8.3.2. Summary

Magnetic resonance imaging (MRI) has emerged as a powerful tool for breast cancer (BC) detection, particularly in cases involving dense breast tissue where other imaging modalities may be less effective. Recent advancements in machine learning (ML) and deep learning (DL) have significantly enhanced the accuracy of MRI-based BC detection. Studies have utilized various MRI modalities, including T1-weighted, T2-weighted, diffusion-weighted imaging (DWI), apparent diffusion coefficient (ADC), and dynamic contrast-enhanced (DCE) MRI, to extract relevant features and detect cancerous lesions. These approaches have achieved impressive results, with models evaluated based on metrics such as accuracy, area under the curve (AUC), and Dice similarity coefficient. However, challenges related to dataset variability, model generalizability, and computational complexity remain. Table 19 presents a summary of key findings in MRI-based breast cancer detection.

Table 19.

Summary of key findings in MRI-based breast cancer detection.
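The Dice similarity coefficient mentioned in the summary (e.g., the average of 0.89 reported by Yue et al.) scores a predicted segmentation mask against a reference mask. It is computed as twice the overlap divided by the total size of the two masks. A NumPy sketch on toy binary masks:

```python
import numpy as np

def dice(pred_mask: np.ndarray, true_mask: np.ndarray) -> float:
    """Dice = 2|P ∩ T| / (|P| + |T|) for binary masks; defined as 1.0 when both are empty."""
    p = pred_mask.astype(bool)
    t = true_mask.astype(bool)
    total = p.sum() + t.sum()
    if total == 0:
        return 1.0
    return float(2.0 * np.logical_and(p, t).sum() / total)

truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True      # 4 lesion pixels
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True       # 6 predicted pixels, 4 of them overlapping
score = dice(pred, truth)   # 2 * 4 / (6 + 4)
```

Unlike pixel accuracy, Dice ignores the large true-negative background. It therefore stays informative when the lesion occupies only a small fraction of the volume.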

8.3.3. Analysis

The integration of multimodal MRI approaches with deep learning techniques has significantly improved BC detection accuracy. Studies like Zhang et al. (2024) [117] demonstrate the advantage of combining multiple MRI modalities (T1WI, T2WI, DWI, ADC, and DCE) to capture complementary features, achieving an AUC of 0.927. Weakly supervised learning, as employed by Liu et al. (2022) [118], has shown promise in scenarios with limited annotated data, achieving an AUC of 0.92. CNN-based architectures, such as ResNet-101 and 3D UNet, have been widely used for classification and segmentation tasks, with notable performance improvements. Additionally, models incorporating immune composition data, such as that of Qian et al. (2024) [121], have opened new avenues for predicting patient prognosis and cancer status. Table 20 provides an analysis of techniques and their impact on MRI-based breast cancer detection.

Table 20.

Analysis of techniques and their impact in MRI-based breast cancer detection.

8.3.4. Critique

Despite the impressive results, several limitations need to be addressed. First, the variability in performance across different datasets and cohorts raises concerns about model generalizability. Many models perform well on internal datasets but struggle with external validation, as seen in Chao et al. (2024) [123]. Second, the reliance on large annotated datasets remains a significant challenge, as acquiring such data is costly and time-consuming. Third, the computational complexity of advanced models, such as CNN-LSTM cascaded networks, may limit their feasibility in real-world clinical settings. Finally, although models achieve strong performance metrics, their lack of interpretability hinders clinical trust and adoption. Table 21 presents a critique of current limitations in MRI-based breast cancer detection.

Table 21.

Critique of current limitations in MRI-based breast cancer detection.

8.3.5. Comparison

The reviewed studies demonstrate varying approaches and outcomes, as summarized in Table 22. Chao et al. (2024) [123] achieved the highest AUC (0.98 on the internal cohort, 0.91 on the external cohort) using a CNN-LSTM cascaded network, outperforming radiologists in lesion classification. Liu et al. (2022) [118] and Yue et al. (2023) [120] also reported strong performance, with AUC values of 0.92 and 0.86, respectively. Combining multiple MRI sequences, as in Zhang et al. [117], yielded an AUC of 0.927, while Qian et al. (2024) [121] integrated immune composition data with radiomics features to support prognosis prediction. However, performance discrepancies in external validation datasets highlight the need for further research on model generalization. The table also highlights variations in model complexity, with simpler models like MLP-radiomics offering a balance between performance and computational efficiency.

Table 22.

Comparison of key studies in MRI-based breast cancer detection.

MRI-based breast cancer detection has seen significant advancements through the integration of deep learning and radiomics techniques. While models like CNN-LSTM and ResNet-101 demonstrate exceptional performance, challenges related to generalizability, dataset dependency, and computational complexity remain. Future research should focus on improving model interpretability, expanding dataset diversity, and developing computationally efficient models for real-world clinical deployment. Addressing these challenges will enhance the clinical applicability and robustness of MRI-based BC detection systems.

MRI-based models face unresolved challenges: (1) multi-institutional data variability degrades performance (Table 17), (2) most studies ignore computational costs prohibitive for clinical deployment, (3) temporal dynamics in DCE-MRI remain underutilized.

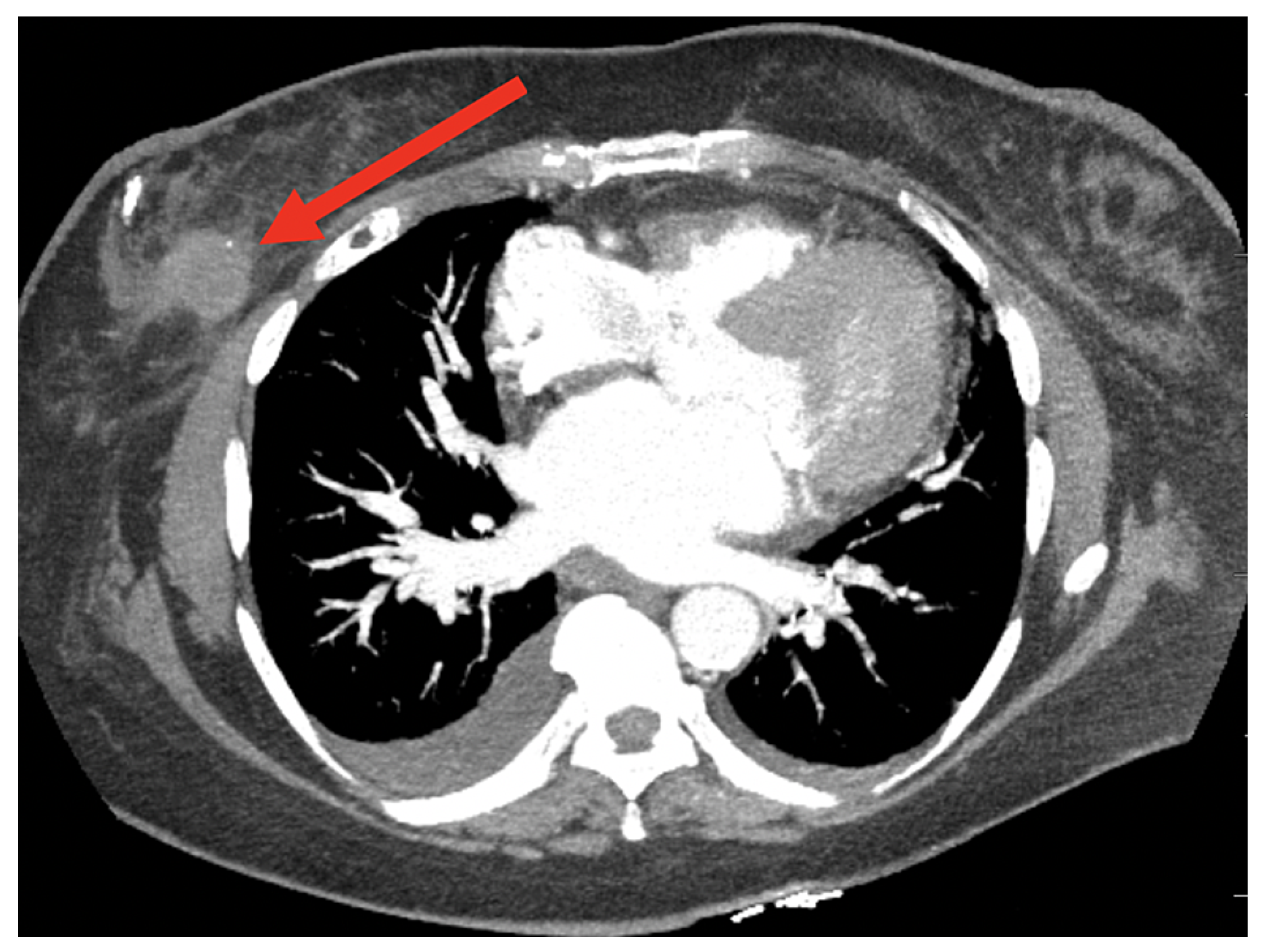

8.4. Breast Cancer Detection Using Computed Tomography (CT) Scan Images

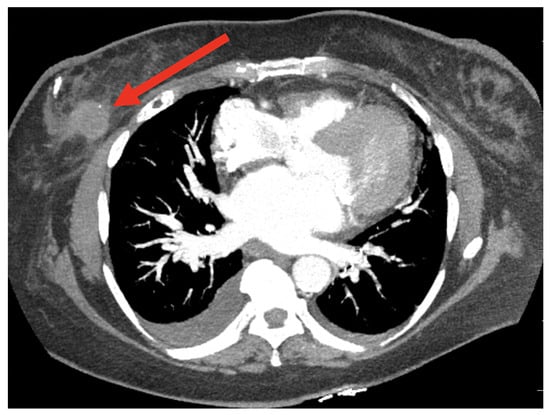

Computed tomography (CT) is a diagnostic method that uses X-ray technology to produce detailed cross-sectional images of the body, facilitating the detection and monitoring of abnormalities such as tumors. This section examines AI-driven techniques for BC detection using CT images. Figure 19 shows a sample CT image with a region of interest highlighted for further analysis.

Figure 19.

A sample CT image [116].

Bargalló et al. [128] proposed a comprehensive CT imaging pipeline for BC in which a trained CNN-Unet model auto-contours standard tissue structures. This enables patients to be classified by laterality and by surgical procedure, such as lumpectomy or mastectomy. A Random Forest classifier achieved 93.75% accuracy and an AUC of 0.993 on a secondary sample of 16 patients. Although this method reduced target delineation errors and highlighted the need to account for anatomical and procedural differences, there remains scope for improving workflow efficiency. Koh et al. developed a DL model for detecting BC on chest CT scans using the RetinaNet algorithm trained on augmented axial images with bounding boxes. The model achieved 96.5% sensitivity on the internal test set and 96.1% on the external test set, with sensitivities ranging from 88.5% to 93.0% depending on the candidate probability threshold [129].
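RetinaNet, the detector used by Koh et al., is a one-stage detector trained with the focal loss. This loss down-weights easy, well-classified candidates so that the many background locations on a CT slice do not dominate training. The NumPy sketch below implements the per-candidate binary focal loss, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t). It uses alpha = 0.25 and gamma = 2, the defaults of the original RetinaNet formulation. These are assumptions; the values used in the cited CT study are not stated here.

```python
import numpy as np

def focal_loss(p: np.ndarray, y: np.ndarray, alpha: float = 0.25, gamma: float = 2.0,
               eps: float = 1e-7) -> np.ndarray:
    """Per-candidate binary focal loss: -alpha_t * (1 - p_t) ** gamma * log(p_t).

    p: predicted probability of the positive (lesion) class; y: 0/1 labels.
    """
    p = np.clip(p, eps, 1 - eps)                    # avoid log(0)
    p_t = np.where(y == 1, p, 1 - p)                # probability assigned to the true class
    alpha_t = np.where(y == 1, alpha, 1 - alpha)    # class-balancing weight
    return -alpha_t * (1 - p_t) ** gamma * np.log(p_t)

# Three candidates: an easy positive, an easy background negative, a hard positive.
p = np.array([0.9, 0.01, 0.1])
y = np.array([1, 0, 1])
losses = focal_loss(p, y)
```

With gamma = 0 and alpha = 0.5, the expression reduces to half the ordinary cross-entropy. The (1 - p_t)^gamma factor is what shifts the training signal toward hard candidates.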

Yasak et al. applied DL models to contrast-enhanced chest CT images for BC detection. Using a dataset of 201 training, 26 validation, and 30 test cases, they achieved an AUC of 0.976 and showed that model assistance improved radiologists' performance [130]. Table 23 presents a summary of research focused on BC detection using computed tomography (CT) images.

Table 23.

A comparison of recent techniques for breast cancer detection using computed tomography.

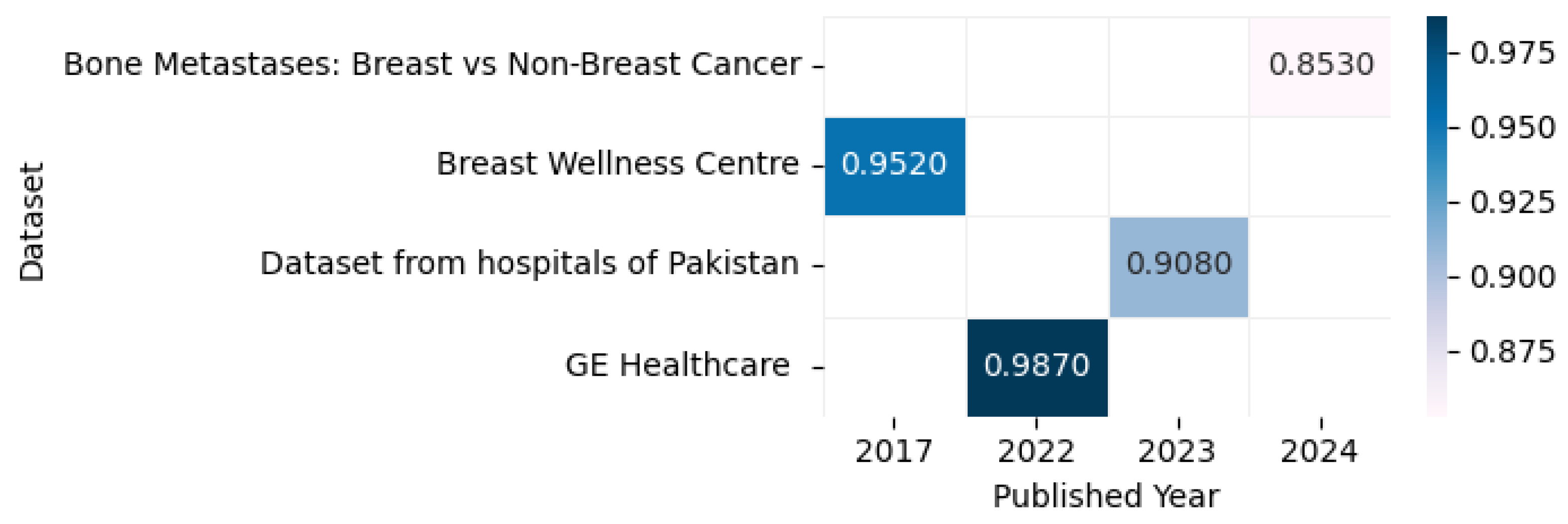

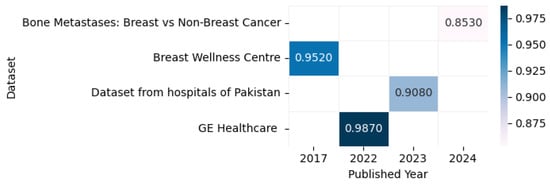

Figure 20 displays the highest accuracy rates achieved by various models on computed tomography datasets in 2023 and 2024, including the Breast Wellness Centre [131], Bone Metastases: Breast vs. Non-Breast Cancer [132], GE Healthcare [133], and datasets from hospitals in Pakistan [134], as outlined in the referenced studies, along with a correlation figure illustrating accuracy levels using varying color intensities.

Figure 20.

Highest detection accuracy across multiple computed tomography datasets for breast cancer.

8.4.1. Model Performance on CT Images: Pros, Cons, and Future Directions

The comparative analysis in Table 24 highlights the main strengths, weaknesses, and research opportunities for current CT imaging approaches in breast cancer detection.

Table 24.

Comprehensive overview of top-performing models on CT breast cancer datasets.

8.4.2. Summary

Computed tomography (CT) imaging is a widely used diagnostic tool for breast cancer (BC) detection, offering detailed cross-sectional images of the body. Recent advancements in artificial intelligence (AI) and deep learning (DL) have significantly enhanced the accuracy of BC detection using CT images. Studies have employed various DL models, such as CNN-Unet and RetinaNet, to segment and classify breast cancer lesions with high accuracy. For example, Bargalló et al. (2023) [128] achieved 93.75% accuracy using a CNN-Unet model combined with a Random Forest classifier, while Koh et al. (2022) [129] reported 96.5% sensitivity using RetinaNet on augmented CT images. Despite these advancements, challenges such as dataset dependency, computational complexity, and generalizability remain. Table 25 presents a summary of key findings in CT-based breast cancer detection.

Table 25.

Summary of key findings in CT-based breast cancer detection.

8.4.3. Analysis

The integration of deep learning models with CT imaging has significantly improved BC detection accuracy. Bargalló et al. (2023) [128] proposed a comprehensive CT imaging pipeline using CNN-Unet for automatic segmentation and Random Forest for classification, achieving 93.75% accuracy and an AUC of 0.993. This approach reduces target delineation errors and highlights the importance of considering anatomical and procedural differences. Koh et al. (2022) [129] employed RetinaNet with augmented axial images, achieving 96.5% sensitivity in internal test sets and 96.1% in external test sets, demonstrating robust performance across different datasets. Yasak et al. (2024) [130] utilized deep learning on contrast-enhanced CT images, achieving an AUC of 0.976, which improved radiologists’ performance. These studies underscore the effectiveness of combining advanced CT imaging techniques with deep learning models for accurate BC detection. Table 26 provides an analysis of techniques and their impact in CT-based breast cancer detection.

Table 26.

Analysis of techniques and their impact in CT-based breast cancer detection.

8.4.4. Critique

Despite the promising results, several limitations need to be addressed. First, the reliance on high-quality, annotated CT image datasets limits scalability, as such datasets are not always available in clinical settings. Second, the performance of deep learning models can vary when applied to real-world data, as seen in Koh et al. (2022) [129], where sensitivity varied across test sets. Third, the computational complexity of advanced models, such as CNN-Unet and RetinaNet, may hinder their practical implementation in resource-limited environments. Finally, while automatic segmentation and procedural classification reduce human error, they require further refinement to improve workflow efficiency. The lack of generalizability across diverse datasets and clinical practices remains a significant challenge. Table 27 presents a critique of current limitations in CT-based breast cancer detection.

Table 27.

Critique of current limitations in CT-based breast cancer detection.

8.4.5. Comparison

The reviewed studies demonstrate varying approaches and outcomes, as summarized in Table 28. Bargalló et al. (2023) [128] achieved 93.75% accuracy using CNN-Unet and Random Forest, while Koh et al. (2022) [129] reported 96.5% sensitivity using RetinaNet. Yasak et al. (2024) [130] utilized deep learning on contrast-enhanced CT images, achieving an AUC of 0.976. These studies highlight the diversity of approaches in CT-based BC detection, with each method offering unique advantages. For instance, CNN-Unet excels in automatic segmentation, while RetinaNet performs well in lesion detection. However, performance discrepancies across datasets underscore the need for further research in model generalization.

Table 28.

Comparison of key studies in CT-based breast cancer detection.

CT-based breast cancer detection has seen significant advancements through the integration of deep learning models and advanced imaging techniques. While models like CNN-Unet and RetinaNet demonstrate exceptional performance, challenges related to dataset dependency, computational complexity, and generalizability remain. Future research should focus on improving model interpretability, expanding dataset diversity, and developing computationally efficient models for real-world clinical deployment. Addressing these challenges will enhance the clinical applicability and robustness of CT-based BC detection systems.

While CT-based models show promise (Table 28), two fundamental gaps limit clinical translation: (1) radiation concerns, since most studies use standard-dose CT [133] and ignore the trade-off between detection accuracy and patient safety in low-dose protocols; and (2) anatomical specificity, since models such as CNN-Unet [128] focus on lesion detection but do not incorporate breast density patterns, which critically affect diagnostic interpretation.

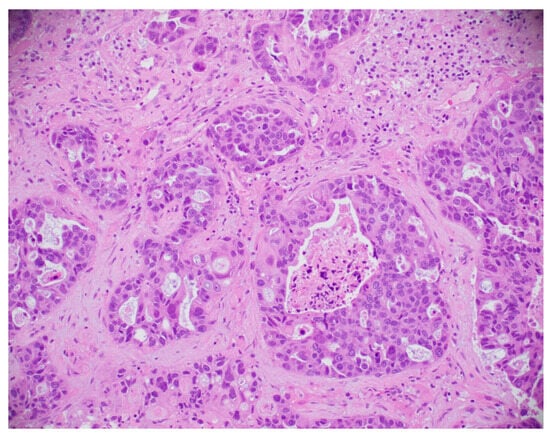

8.5. Histopathological Image-Based Breast Cancer Detection

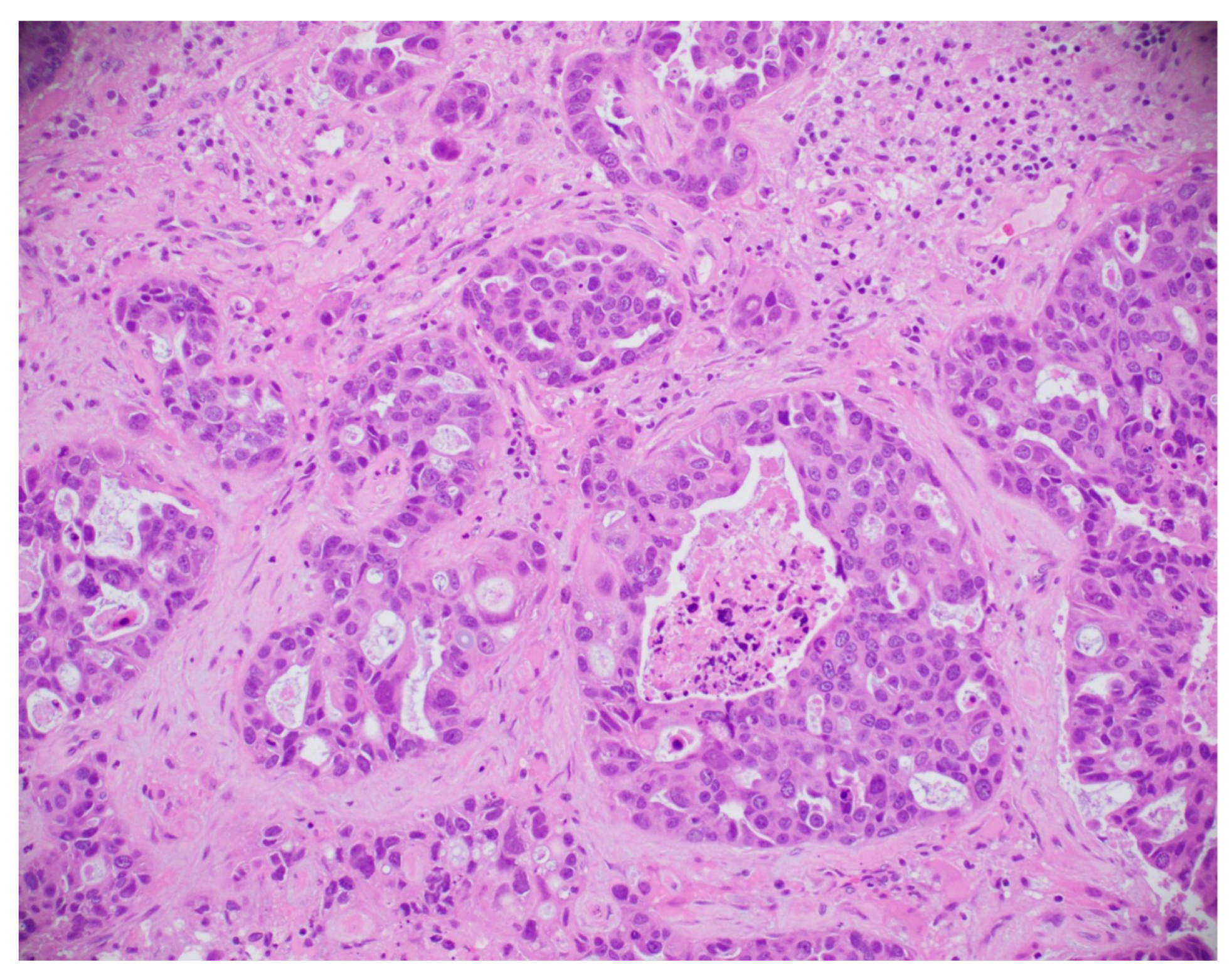

This subsection covers histopathological and microscopic imaging, which examine tissue samples at the cellular level to identify malignancies. These modalities are key diagnostic tools for BC and support accurate diagnosis and treatment planning. A sample histopathological image is shown in Figure 21.

Figure 21.

A sample histopathological image [135].

Muntean and Chowkkar compared CNN and DenseNet121 models for BC detection using 7909 histopathological images. The CNN achieved 90.9% accuracy at 100× magnification, while DenseNet121 reached 86.6% accuracy, with transfer learning contributing a 16.4% accuracy improvement; both models performed effectively [136]. Bhowal et al. [137] proposed a BC histology classification method that combined five DL models (VGG16, VGG19, Xception, Inception V3, and InceptionResNet V2) through a fusion scheme based on the Choquet integral, coalition game theory, and information theory. The pipeline comprised image preprocessing, feature extraction, and classification, and it improved accuracy, precision, and recall over traditional techniques. Evaluated on the BACH dataset, the method achieved 96% accuracy on the two-class problem and 95% on the four-class problem, outperforming state-of-the-art methods.
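Bhowal et al. [137] fuse the outputs of their base CNNs with the Choquet integral. The minimal sketch below implements the discrete Choquet integral in standard-library Python and applies it class by class. The fuzzy measure values in `measure_table` are hypothetical placeholders: in [137] the measure is derived with coalition game theory and information theory, which this sketch does not reproduce.

```python
from typing import Callable, FrozenSet, List, Sequence

Measure = Callable[[FrozenSet[int]], float]


def choquet_integral(scores: Sequence[float], measure: Measure) -> float:
    """Discrete Choquet integral of non-negative per-model scores.

    `measure` must be monotone with measure(empty set) = 0 and measure(all models) = 1.
    Scores are visited in descending order; each increment is weighted by the
    measure of the coalition of models scoring at least that high.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    total, coalition = 0.0, set()
    for rank, i in enumerate(order):
        coalition.add(i)
        next_score = scores[order[rank + 1]] if rank + 1 < len(order) else 0.0
        total += (scores[i] - next_score) * measure(frozenset(coalition))
    return total


def fuse(per_model_probs: Sequence[Sequence[float]], measure: Measure) -> List[float]:
    """per_model_probs[m][c] is model m's probability for class c; returns fused class scores."""
    n_classes = len(per_model_probs[0])
    return [choquet_integral([p[c] for p in per_model_probs], measure) for c in range(n_classes)]


# Hypothetical fuzzy measure over three base models (0, 1, 2).
measure_table = {
    frozenset(): 0.0,
    frozenset({0}): 0.4, frozenset({1}): 0.3, frozenset({2}): 0.3,
    frozenset({0, 1}): 0.8, frozenset({0, 2}): 0.6, frozenset({1, 2}): 0.5,
    frozenset({0, 1, 2}): 1.0,
}
probs = [[0.7, 0.3], [0.4, 0.6], [0.8, 0.2]]  # [benign, malignant] per model
fused = fuse(probs, lambda a: measure_table[a])
print(fused, "-> predicted class", fused.index(max(fused)))
```

With an additive measure the Choquet integral reduces to a weighted average; a non-additive measure lets the fusion reward or discount agreement between particular subsets of models, which is the motivation for using it over simple averaging.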

Yang et al. proposed a multimodal DL model to predict the prognosis of HER2-positive BC patients by integrating whole-slide H&E images with clinical data. A deep CNN extracted features from 512 × 512-pixel H&E images, and these features were combined with clinical information to assess the risk of relapse and metastasis. The model achieved an AUC of 0.76 in two-fold cross-validation and 0.72 on an independent TCGA test set, indicating that its prognostic performance largely carries over to an external cohort of HER2-positive patients [138].
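The fusion architecture of Yang et al. [138] is not detailed here, so the sketch below assumes the simplest option, early (feature-level) fusion: clinical variables are z-score standardized, concatenated with the CNN image embedding, and passed to a logistic risk score. All feature values, normalization statistics, and weights are hypothetical.

```python
import math
from typing import List, Sequence


def standardize(values: Sequence[float], means: Sequence[float],
                stds: Sequence[float]) -> List[float]:
    """z-score each clinical variable so it is on a scale comparable to the image features."""
    return [(v - m) / s for v, m, s in zip(values, means, stds)]


def fused_risk(image_embedding: Sequence[float], clinical: Sequence[float],
               clin_means: Sequence[float], clin_stds: Sequence[float],
               weights: Sequence[float], bias: float) -> float:
    """Early fusion: concatenate both feature sets, then apply a logistic model."""
    x = list(image_embedding) + standardize(clinical, clin_means, clin_stds)
    if len(weights) != len(x):
        raise ValueError("expected one weight per fused feature")
    logit = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical example: a 3-dimensional CNN embedding plus two clinical variables
# (age in years, tumor size in cm) standardized with made-up cohort statistics.
risk = fused_risk(
    image_embedding=[0.2, -0.1, 0.5],
    clinical=[52.0, 2.1],
    clin_means=[55.0, 2.0],
    clin_stds=[10.0, 1.0],
    weights=[0.8, -0.4, 1.2, 0.01, 0.6],
    bias=-0.5,
)
print(f"relapse/metastasis risk score: {risk:.3f}")
```

In a trained system the weights would be learned from outcome data; late fusion, which combines separately computed image and clinical risk scores, is an equally plausible design that this sketch does not show.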

Maleki et al. proposed a histopathological image classification method combining DL and transfer learning: a pre-trained DenseNet201 network extracted features, and an XGBoost classifier performed the final classification. Evaluated on the BreakHis dataset, the method achieved accuracies of 93.6%, 91.3%, 93.8%, and 89.1% at 40×, 100×, 200×, and 400× magnification, respectively [139]. Majumdar et al. proposed a rank-based ensemble for BC detection from histopathological images using three CNN models: GoogleNet, VGG11, and MobileNetV3_Small. The models' decision scores were combined using the Gamma function for a two-class classification problem. Evaluated on the BreakHis and ICIAR-2018 datasets, the approach achieved accuracies of 99.16%, 98.24%, 98.67%, and 96.16% at 40×, 100×, 200×, and 400× magnification on BreakHis and 96.95% on ICIAR-2018 [140]. Ray et al. applied pre-trained deep transfer learning models, including ResNet50, ResNet101, VGG16, and VGG19, to detect BC in histopathological images. The study used a dataset of 2453 images divided into invasive ductal carcinoma (IDC) and non-IDC categories. ResNet50 performed best, with an accuracy of 92.2%, an AUC of 91.0%, a recall of 95.7%, and a loss of 3.5% [141].
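Majumdar et al. [140] fuse their CNNs by rank rather than by averaging probabilities. Their Gamma-function scoring is not reproduced here; as a simpler stand-in, the sketch below uses a Borda count, in which each model awards points to classes according to their position in its own ranking. The probabilities are hypothetical, and a four-class example is used because with only two classes a Borda count reduces to majority voting.

```python
from typing import List, Sequence, Tuple


def borda_fusion(per_model_probs: Sequence[Sequence[float]]) -> Tuple[int, List[int]]:
    """Rank-based fusion by Borda count.

    Each model ranks the classes by its own probabilities; the top class earns
    n_classes - 1 points, the next n_classes - 2, and so on down to 0.
    Returns the winning class index and the point totals.
    """
    n_classes = len(per_model_probs[0])
    points = [0] * n_classes
    for probs in per_model_probs:
        ranked = sorted(range(n_classes), key=lambda c: probs[c], reverse=True)
        for position, c in enumerate(ranked):
            points[c] += n_classes - 1 - position
    winner = max(range(n_classes), key=lambda c: points[c])
    return winner, points


# Hypothetical outputs of three CNNs for one image over four classes
# (e.g. normal, benign, in situ, invasive).
outputs = [
    [0.10, 0.20, 0.30, 0.40],
    [0.05, 0.15, 0.50, 0.30],
    [0.05, 0.15, 0.20, 0.60],
]
print(borda_fusion(outputs))  # (3, [0, 3, 7, 8])
```

A plain Borda count discards how confident each model is; confidence-aware rank schemes such as the one in [140] exist precisely to retain that information while keeping the robustness of rank-based voting.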

Rajkumar et al. proposed a BC detection method based on the Darknet-53 CNN to improve image classification accuracy. They used contrast-limited adaptive histogram equalization (CLAHE) for image preprocessing and the Haralick grey-level co-occurrence matrix (HGLCM) for feature extraction, achieving an accuracy of 95.6% [142]. Alhassan et al. proposed a comprehensive framework for classifying types of lung, colon, and breast cancer from histopathological images using AI, machine learning, and digital image processing techniques. Using the BreakHis and LC25000 datasets, they applied quadruple clipped adaptive histogram equalization for noise reduction and stain color adaptive normalization (SCAN) to enhance image contrast. A VGG16 model extracted features, and an ensemble max-voting classifier performed the classification. The framework achieved 89.03% accuracy, 88.56% precision, 88.09% sensitivity, and an F1-score of 88.7%, supporting faster and more accurate cancer diagnosis [143]. Addo et al. [144] used the BreakHis and BACH datasets for histopathological BC image classification, evaluating BreakHis images at 40×, 100×, 200×, and 400× magnification. Their model, BCHI-CovNet, is a lightweight architecture that combines multiscale depth-wise separable convolution, an additional pooling module, and a multi-head self-attention mechanism to reduce computational complexity while maintaining high accuracy. It achieved accuracies of 99.15% at 40×, 99.08% at 100×, 99.22% at 200×, and 98.87% at 400× on BreakHis, and 99.38% on BACH [144]. A summary of BC classification using histopathological images is provided in Table 29.
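Rajkumar et al. [142] extract Haralick features from a grey-level co-occurrence matrix (GLCM). The pure-Python sketch below builds a normalized, symmetric GLCM for a single pixel offset and computes three of Haralick's texture features: contrast, angular second moment (ASM), and inverse difference moment (IDM). It assumes the image has already been quantized to `levels` grey levels; practical pipelines usually average over several offsets and angles, and the exact feature set used in [142] is not specified here.

```python
from collections import Counter
from typing import Dict, List, Sequence


def glcm(image: Sequence[Sequence[int]], dx: int = 1, dy: int = 0,
         levels: int = 8, symmetric: bool = True) -> List[List[float]]:
    """Normalized grey-level co-occurrence matrix for the offset (dx, dy).

    `image` holds integer grey levels in [0, levels).
    """
    rows, cols = len(image), len(image[0])
    counts: Counter = Counter()
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[(i, j)] += 1
                if symmetric:  # count the pair in both directions
                    counts[(j, i)] += 1
    total = sum(counts.values())
    if total == 0:
        raise ValueError("offset leaves no pixel pairs inside the image")
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def haralick_features(p: List[List[float]]) -> Dict[str, float]:
    """Three of Haralick's texture features computed from a normalized GLCM."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "asm": sum(p[i][j] ** 2 for i, j in cells),  # angular second moment
        "idm": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in cells),  # inverse difference moment
    }


# Toy 4 x 4 patch already quantized to 4 grey levels (hypothetical values).
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
print(haralick_features(glcm(patch, levels=4)))
```

High contrast and low IDM indicate abrupt grey-level changes between neighboring pixels, the kind of irregular texture these handcrafted features are meant to capture alongside CNN features.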

Table 29.

A review of recent approaches for detecting breast cancer through histopathological images.

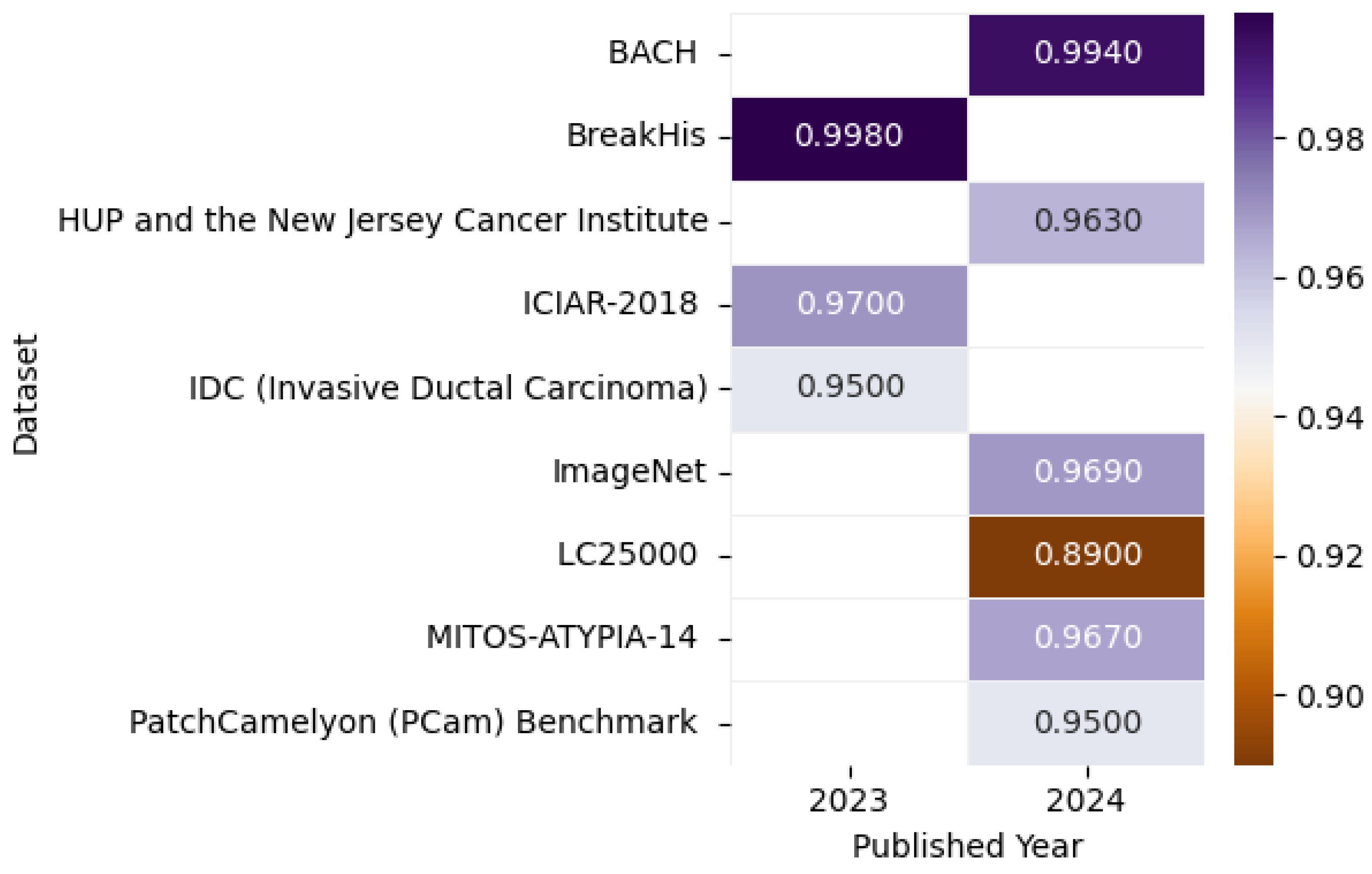

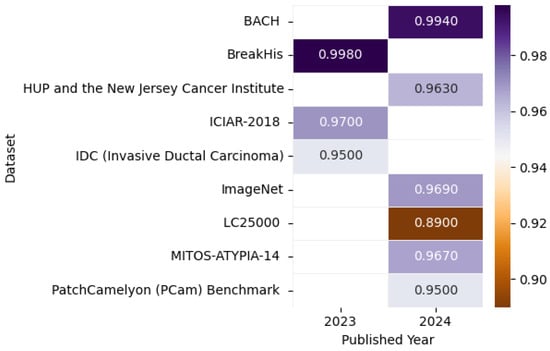

Figure 22 presents the highest accuracy rates achieved by various models in 2023 and 2024 histopathology studies on the following datasets: ImageNet [145], BreakHis [146], the Hospital of the University of Pennsylvania (HUP) and Cancer Institute of New Jersey dataset [147], BACH [144], LC25000 [143], MITOS-ATYPIA-14 [148], the PatchCamelyon (PCam) benchmark [149], IDC (invasive ductal carcinoma) [150], and ICIAR-2018 [140]. The figure pairs these results with a correlation plot in which color intensity encodes the accuracy level.

Figure 22.

Highest detection accuracy across multiple histopathological datasets for breast cancer.

8.5.1. Model Performance on Histopathological Images: Pros, Cons, and Future Directions

The comparative analysis in Table 30 highlights the main strengths, weaknesses, and research opportunities for current histopathological imaging approaches in breast cancer detection.

Table 30.

Comprehensive overview of top-performing models on histopathological breast cancer datasets.

8.5.2. Summary

Histopathological imaging is a critical tool for breast cancer (BC) detection, enabling the examination of tissue samples at the cellular level to identify malignancies. Recent advancements in deep learning (DL) have significantly improved the accuracy of BC detection using histopathological images. Studies have employed various DL models, such as CNNs, DenseNet, ResNet, and ensemble methods, to achieve high accuracy and AUC scores. For example, Muntean and Chowkkar (2022) [136] achieved 90.9% accuracy using a CNN, while Bhowal et al. (2022) [137] reported 96.0% accuracy using a fusion model. Despite these advancements, challenges such as dataset dependency, computational complexity, and generalizability remain. Table 31 presents a summary of key findings in histopathology-based breast cancer detection.

Table 31.

Summary of key findings in histopathological-based breast cancer detection.

8.5.3. Analysis

The integration of deep learning models with histopathological imaging has significantly improved BC detection accuracy. Muntean and Chowkkar (2022) [136] demonstrated the effectiveness of CNNs and DenseNet121, achieving 90.9% and 86.6% accuracy, respectively, with transfer learning further improving performance. Bhowal et al. [137] proposed a Choquet-integral fusion of several CNNs, including VGG16, Xception, and InceptionResNet V2, achieving 96.0% two-class accuracy on the ICIAR 2018 (BACH) dataset. Yang et al. (2022) [138] integrated whole-slide H&E images with clinical data, achieving an AUC of 0.76 and showcasing the potential of multimodal approaches. Ensemble methods, such as that of Majumdar et al. (2023) [140], further enhance robustness, achieving 99.16% accuracy on the BreakHis dataset at 40× magnification. Table 32 provides an analysis of techniques and their impact on histopathological-based breast cancer detection.

Table 32.

Analysis of techniques and their impact in histopathological-based breast cancer detection.

8.5.4. Critique

Despite the promising results, several limitations need to be addressed. First, the reliance on high-quality, annotated histopathological image datasets limits scalability, as such datasets are not always available in clinical settings. Second, the performance of deep learning models can degrade on independent data, as seen in Yang et al. (2022) [138], where the AUC fell from 0.76 in cross-validation to 0.72 on the independent TCGA test set. Third, the computational complexity of advanced models, such as ensemble methods and multimodal approaches, may hinder their practical implementation in resource-limited environments. Finally, although these models achieve high performance metrics, their limited interpretability hinders clinical trust and adoption. Table 33 presents a critique of current limitations in histopathological-based breast cancer detection.

Table 33.

Critique of current limitations in histopathological-based breast cancer detection.

8.5.5. Comparison

The reviewed studies demonstrate varying approaches and outcomes, as summarized in Table 34. Muntean and Chowkkar (2022) [136] achieved 90.9% accuracy using a CNN, while Bhowal et al. (2022) [137] reported 96.0% accuracy using a fusion model. Yang et al. (2022) [138] integrated clinical data with imaging, achieving an AUC of 0.76. Majumdar et al. (2023) [140] employed an ensemble method, achieving 99.16% accuracy on the BreakHis dataset. Addo et al. (2024) [144] proposed BCHI-CovNet, achieving 99.38% accuracy on the BACH dataset and showcasing the effectiveness of lightweight models. These comparisons highlight the diversity of approaches in histopathology-based BC detection, with each method offering distinct advantages.
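The savings that BCHI-CovNet [144] obtains from depth-wise separable convolutions can be seen from a simple parameter count. The sketch below compares a standard k × k convolution with a depth-wise k × k convolution followed by a 1 × 1 point-wise convolution, ignoring bias terms. The layer sizes are hypothetical, and the sketch does not model BCHI-CovNet's multiscale branches, pooling module, or attention layers.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """k x k depth-wise convolution (one filter per input channel) + 1 x 1 point-wise convolution."""
    return k * k * c_in + c_in * c_out


# Hypothetical layer: 3 x 3 kernels, 128 input and 128 output channels.
std = standard_conv_params(3, 128, 128)        # 147456
sep = depthwise_separable_params(3, 128, 128)  # 17536
print(std, sep, round(sep / std, 4))           # ratio = 1/c_out + 1/k**2, about 0.1189
```

Because both layer types slide over the same spatial positions, the multiply-accumulate count per output location shrinks by the same ratio, roughly a ninefold reduction for 3 × 3 kernels at this width.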

Table 34.

Comparison of key studies in histopathological-based breast cancer detection.

Histopathological imaging combined with deep learning models has significantly advanced breast cancer detection, achieving high accuracy and AUC scores. While models like CNNs, DenseNet, and ensemble methods demonstrate exceptional performance, challenges related to dataset dependency, computational complexity, and generalizability remain. Future research should focus on improving model interpretability, expanding dataset diversity, and developing computationally efficient models for real-world clinical deployment. Addressing these challenges will enhance the clinical applicability and robustness of histopathological-based BC detection systems.

Four fundamental gaps emerge in histopathology analysis: (1) robustness to stain variation is rarely tested, (2) whole-slide analysis remains computationally expensive, (3) few models incorporate pathologist feedback loops, and (4) the clinical significance of extracted features is often unverified.
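Stain variation, the first gap, is commonly mitigated with stain or color normalization before training and testing. The sketch below is a simplified, Reinhard-style color transfer that shifts and rescales each channel of a tile to match a reference site's mean and standard deviation. Reinhard et al. perform this in the lαβ color space; working directly in RGB, as here, is a simplification for brevity, and the target statistics are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def match_channel_stats(pixels: Sequence[Pixel], target_means: Sequence[float],
                        target_stds: Sequence[float]) -> List[Pixel]:
    """Per-channel mean/std matching in RGB, clipped to [0, 255]."""
    channels = []
    for ch in range(3):
        values = [p[ch] for p in pixels]
        mu, sigma = mean(values), pstdev(values)
        scale = target_stds[ch] / sigma if sigma > 0 else 1.0  # flat channel: only shift
        channels.append([min(255.0, max(0.0, (v - mu) * scale + target_means[ch]))
                         for v in values])
    return [tuple(px) for px in zip(*channels)]


# Hypothetical tile from one site, normalized to a hypothetical reference site's statistics.
tile = [(100, 50, 200), (120, 70, 220), (140, 90, 240)]
normalized = match_channel_stats(tile, target_means=(180, 120, 160), target_stds=(20, 20, 20))
print([tuple(round(v, 1) for v in px) for px in normalized])
```

Reporting accuracy with and without such normalization on images from different sites is one straightforward way to test the stain robustness that most studies leave unexamined.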

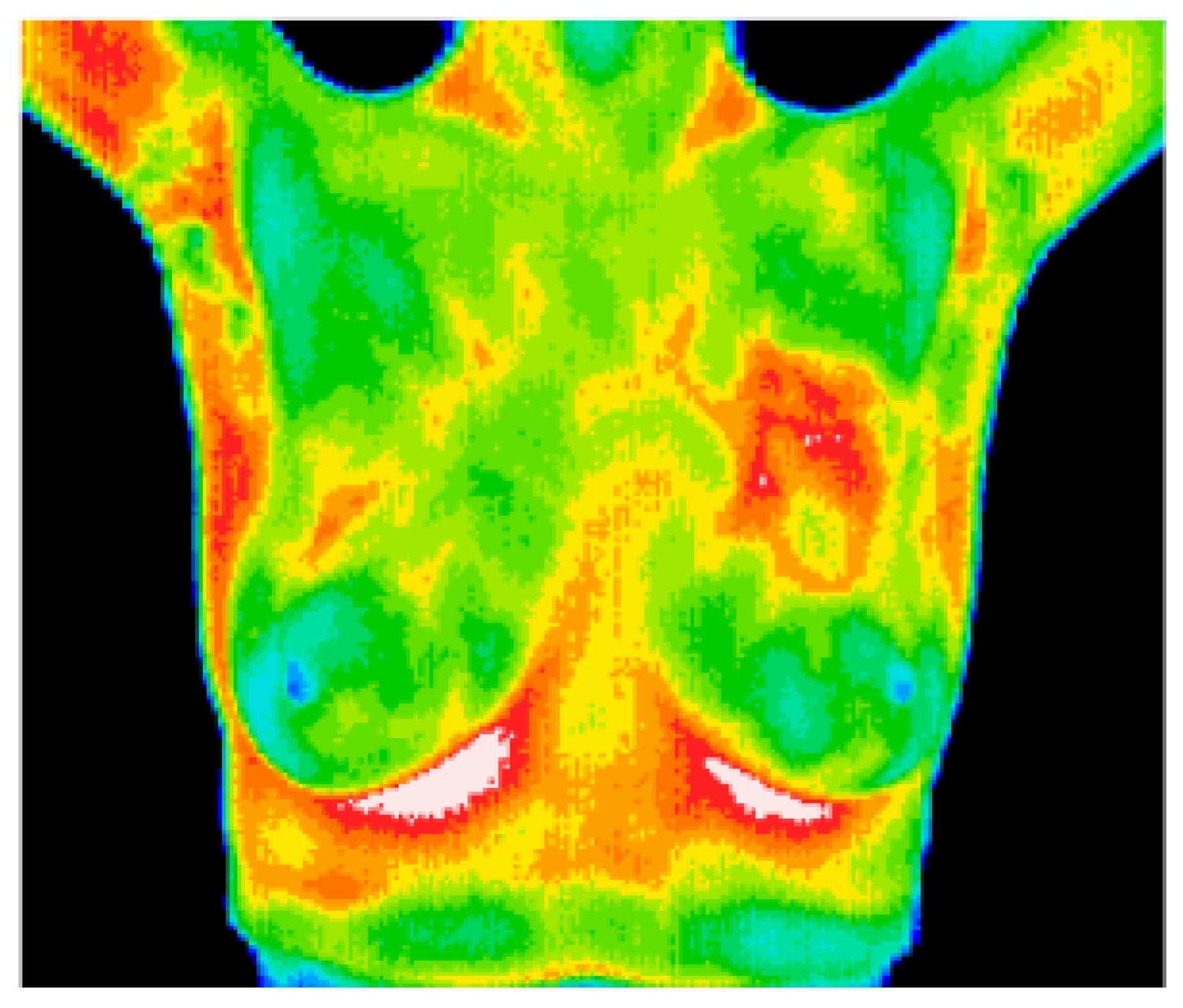

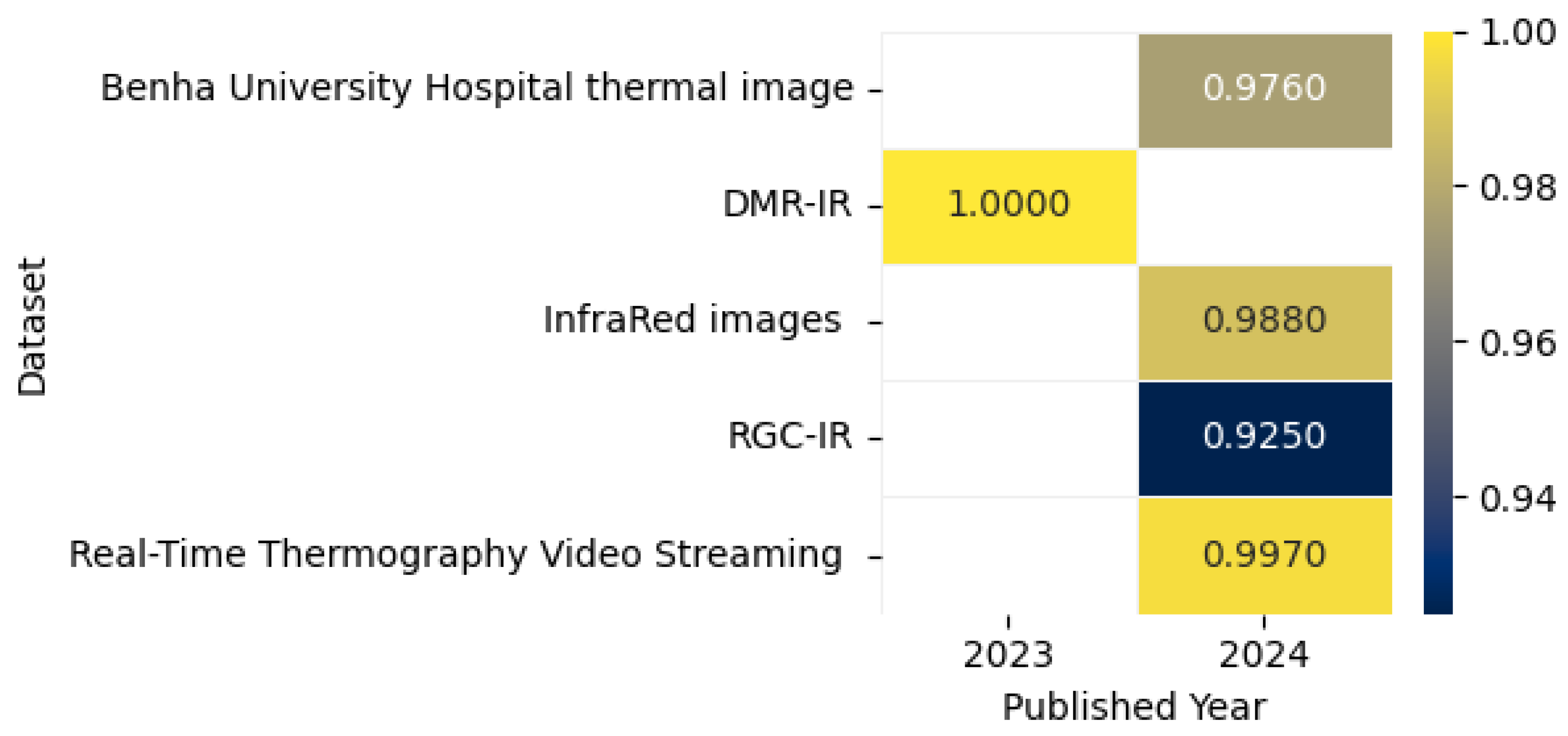

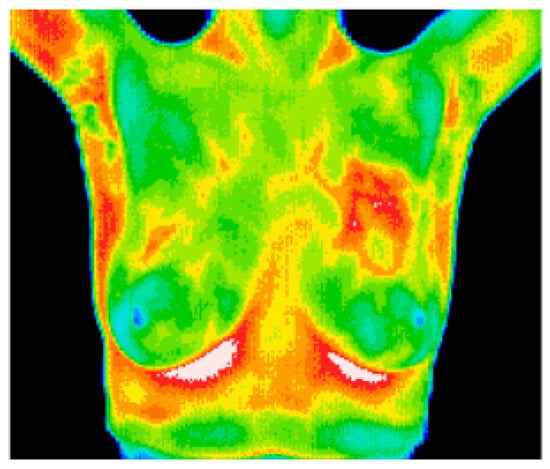

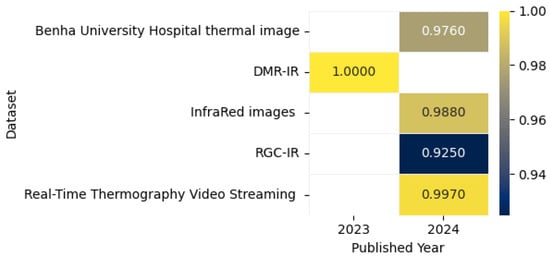

8.6. Breast Cancer Detection Using Thermal Imaging

A thermal image, such as the one in Figure 23, captures the heat emitted by an object and visualizes patterns of temperature variation. One study [151] presents a CADx system for BC detection using DL that integrates multi-view thermograms (frontal and lateral) with patient clinical data. The system uses transfer learning with pre-trained models and focuses on regions of interest (ROIs) for targeted analysis. By addressing the limitations of single-view thermograms and incorporating critical clinical data, the approach achieved 90.48% accuracy, 93.33% sensitivity, and an AUROC of 0.94, offering a cost-effective, less hazardous screening option [151]. Chatterjee et al. proposed a two-stage model for BC detection using thermographic images. The model uses VGG16 for feature extraction and a memory-based version of the Dragonfly Algorithm (DA), enhanced by the Grunwald–Letnikov (GL) method, for optimal feature selection. Evaluated on the DMR-IR dataset, the framework achieved 100% diagnostic accuracy while reducing the number of features by 82%, demonstrating its efficiency and potential for accurate early detection [152].
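Chatterjee et al. [152] select features with a memory-based Dragonfly Algorithm. That optimizer is not reproduced here; the sketch below swaps in a plain bit-flip hill climber so that the structure of wrapper feature selection is visible. It assumes the weighted fitness commonly used in metaheuristic feature-selection studies, alpha * error + (1 - alpha) * selected / total with alpha = 0.99; whether [152] uses exactly this objective is an assumption, and `toy_error` is a hypothetical stand-in for a classifier's validation error.

```python
import random
from typing import Callable, List, Tuple

Mask = List[int]


def fitness(mask: Mask, error_fn: Callable[[Mask], float], alpha: float = 0.99) -> float:
    """Weighted wrapper objective (lower is better): classification error plus subset-size penalty."""
    n_selected = sum(mask)
    if n_selected == 0:
        return float("inf")  # an empty feature subset cannot be classified
    return alpha * error_fn(mask) + (1 - alpha) * n_selected / len(mask)


def hill_climb(n_features: int, error_fn: Callable[[Mask], float],
               iters: int = 500, seed: int = 0, alpha: float = 0.99) -> Tuple[Mask, float]:
    """Bit-flip hill climbing: a simple stand-in for a metaheuristic such as the Dragonfly Algorithm."""
    rng = random.Random(seed)
    mask = [1] * n_features  # start from the full feature set
    best = fitness(mask, error_fn, alpha)
    for _ in range(iters):
        candidate = mask.copy()
        candidate[rng.randrange(n_features)] ^= 1  # toggle one feature
        score = fitness(candidate, error_fn, alpha)
        if score <= best:
            mask, best = candidate, score
    return mask, best


# Hypothetical validation error: features 0-4 are informative, the other 15 are noise.
def toy_error(mask: Mask) -> float:
    missing_informative = 5 - sum(mask[:5])
    return 0.05 + 0.1 * missing_informative


selected, score = hill_climb(20, toy_error)
print(selected, f"feature reduction: {1 - sum(selected) / len(selected):.0%}")
```

The small weight on subset size means a feature is dropped only when doing so does not raise the error, which is how wrapper methods can cut most features while keeping accuracy intact.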

Figure 23.

A sample thermal image [153].

Civilibal et al. proposed a Mask R-CNN model with ResNet-50 and ResNet-101 backbones for breast tumor detection, segmentation, and classification using thermal images. The ResNet-50 model pre-trained on COCO performed best, with 97.1% accuracy, an mAP of 0.921, and an overlap score of 0.868, outperforming models used in prior studies. This single DL model effectively delineates tumors and adjacent tissues for accurate diagnosis [154]. Another study used the DMR database for thermal breast image classification, training a custom CNN on augmented, standardized, and enhanced images with histogram of oriented gradients (HOG) feature extraction. The model achieved accuracies ranging from 95.7% to 98.5%, outperforming other classifiers and showing significant potential for real-time BC diagnosis [155]. Mahoro et al. proposed a BC detection system using DL techniques, employing TransUNet for breast region segmentation and four models (ResNet-50, EfficientNet-B7, VGG-16, and DenseNet-201) to classify images as healthy, sick, or unknown. ResNet-50 performed best, with an accuracy of 97.26%, a sensitivity of 97.26%, and specificities of 100%, 96.94%, and 99.72% for the healthy, sick, and unknown classes, respectively [156].
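Civilibal et al. [154] report an overlap score of 0.868, but the measure behind it is not named in this review. The two most common overlap measures for binary segmentation masks are intersection over union (IoU, the Jaccard index) and the Dice coefficient, related by Dice = 2 * IoU / (1 + IoU); COCO-style mAP also relies on IoU thresholds to decide whether a predicted object matches a ground-truth one. The sketch below computes both measures for hypothetical toy masks.

```python
from typing import Sequence, Tuple


def overlap_scores(pred: Sequence[Sequence[int]],
                   truth: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """IoU (Jaccard) and Dice coefficient between two binary masks of equal shape."""
    flat_p = [int(v) for row in pred for v in row]
    flat_t = [int(v) for row in truth for v in row]
    if len(flat_p) != len(flat_t):
        raise ValueError("masks must have the same shape")
    inter = sum(p & t for p, t in zip(flat_p, flat_t))
    union = sum(p | t for p, t in zip(flat_p, flat_t))
    size_sum = sum(flat_p) + sum(flat_t)
    iou = inter / union if union else 1.0  # two empty masks agree perfectly
    dice = 2 * inter / size_sum if size_sum else 1.0
    return iou, dice


# Toy 2 x 3 masks (1 = tumor pixel); values are hypothetical.
predicted = [[1, 1, 0], [1, 0, 0]]
ground_truth = [[1, 0, 0], [1, 1, 0]]
print(overlap_scores(predicted, ground_truth))  # IoU 0.5, Dice about 0.667
```

Because Dice is always at least as large as IoU for the same masks, an overlap score should be compared across studies only when both use the same measure.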