Application of Machine Learning and Deep Learning Models in Prostate Cancer Diagnosis Using Medical Images: A Systematic Review

Abstract

1. Introduction

1.1. Related Works

1.2. Scope of Review

| ID | Research Question |
|---|---|
| RQ1 | What are the trends and evolution of research in this area? |
| RQ2 | Which ML and DL models have been used in this area? |
| RQ3 | Which datasets are publicly available? |
| RQ4 | What are the necessary considerations for the application of these artificial intelligence (AI) techniques in PCa diagnosis? |
| RQ5 | What limitations have the authors identified so far? |
| RQ6 | What are the future directions for this research? |

1.3. High-Level Structure of This Study

2. Methods

2.1. Database Search and Eligibility Criteria

2.2. Review Strategy

| Search | Terms |
|---|---|
| Results for (a) | Deep Learning Machine Learning Significant Prostate Cancer Artificial Intelligence Prediction Diagnosis |
| Results for (b) | Prediction/Diagnosis/Classification Machine/Deep Prostate Cancer/PCa/csPCa |
| Results for (c) | Review, systematic review, preprint, risk factor, treatment, biopsy, Gleason grading, DRE |
| Results for (d) | a, b and c combined using the Boolean operators AND and OR |
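The search strings list the keyword groups but not the exact query syntax submitted to each database. A minimal Python sketch of one common way to assemble such a query follows, assuming that terms within a group are joined with OR and that groups are joined with AND. The function names `or_block` and `build_query` are hypothetical, the split of the keyword strings into individual terms is an assumption (the original groups are not delimited), and database-specific syntax such as field tags, truncation or NOT clauses is not modeled.

```python
def or_block(terms: list[str]) -> str:
    """Join one keyword group with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*groups: list[str]) -> str:
    """Join groups with AND, so a record must match one term from every group."""
    return " AND ".join(or_block(g) for g in groups)


# Example with an assumed split of terms from searches (a) and (b).
query = build_query(
    ["Deep Learning", "Machine Learning", "Artificial Intelligence"],
    ["Prostate Cancer", "PCa", "csPCa"],
)
print(query)
```

This prints `("Deep Learning" OR "Machine Learning" OR "Artificial Intelligence") AND ("Prostate Cancer" OR PCa OR csPCa)`. Quoting multi-word terms keeps most search engines from matching the words independently.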

2.3. Characteristics of Studies

2.4. Quality Assessment

2.5. Data Sources and Search Strategy

2.6. Inclusion and Exclusion Criteria

2.7. Data Extraction

2.8. Data Synthesis

2.9. Risk of Bias Assessment

3. Preliminary Discussions

3.1. Imaging Modalities

3.2. Risks of PCa

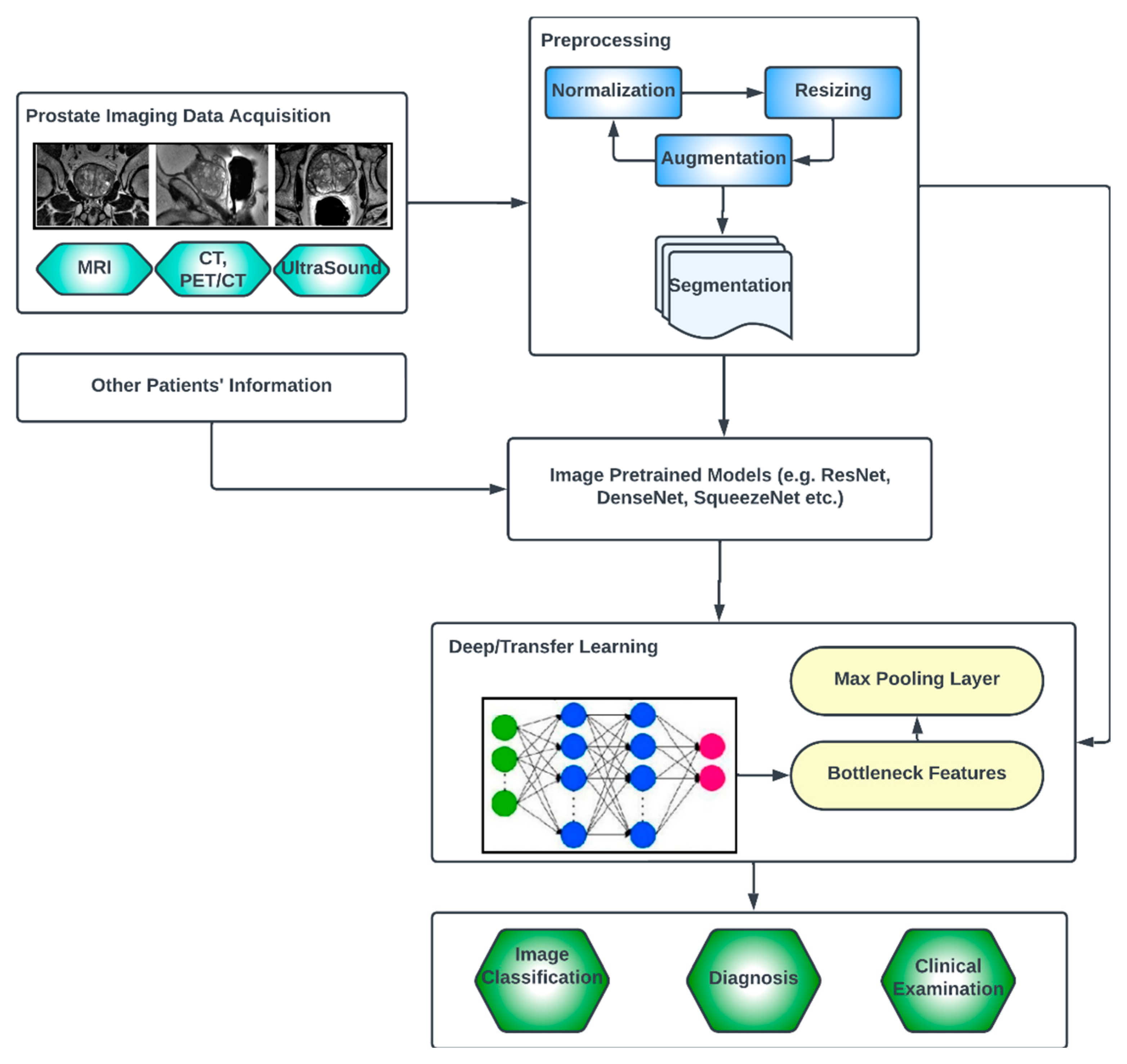

3.3. Generic Overview of Deep Learning Architecture for PCa Diagnosis

4. Results

Review Summary of Relevant Papers

5. Discussion

5.1. Considerations for Choice of Deep Learning for PCa Image Data Analysis

5.2. Considerations for Choice of Loss Functions for PCa Image Data Analysis

5.3. Prostate Cancer Datasets

5.4. Some Important Limitations Discussed in the Literature

5.5. Lessons Learned and Recommendations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Springer Papers on Prostate Cancer Detection Using Machine Learning, Deep Learning or Artificial Intelligence Methods

| Ref. | Problem Addressed | Imaging Modality: MRI | Imaging Modality: US | Imaging Modality: Others | ML Type: Transfer | ML Type: SL | ML Type: UL | Data Collection: Primary | Data Collection: Secondary | Medic-Verified: Yes | Medic-Verified: No | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [15] | Performance comparison of deep learning and non-deep learning classifiers for PCa classification | ✓ | ✓ | ✓ | ✓ | Convolutional features learned from morphologic images (axial 2D T2-weighted imaging) of the prostate were used to classify PCa | One image from each patient was used, assuming independence among them | ||||||

| [69] | Classifying PCa tissue with a weakly semi-supervised technique | ✓ | ✓ | ✓ | ✓ | Use of pseudo-labeled regions for prostate cancer classification | Increased time required to label the training data | ||||||

| [75] | Predicting csPCa with a deep learning approach | ✓ | ✓ | ✓ | ✓ | Significantly reduce unnecessary biopsies and aid in the precise diagnosis of csPCa | It was difficult to achieve a complete balance between the training and external validation cohorts | ||||||

| [66] | ML classification of a patient’s overall PCa risk as high or low based on lesions | ✓ | ✓ | ✓ | ✓ | Lesion characterization and risk prediction in PCa | Model built on a single-center cohort and included only patients with confirmed PCa | ||||||

| [81] | Localization of PCa lesions using multiparametric ML on transrectal US | ✓ | ✓ | ✓ | ✓ | Ability of a multiparametric classifier to improve on single US modalities for the localization of PCa | Single-center data collection and use of 2D imaging only | ||||||

| [67] | Clinically significant PCa detection using CNN | ✓ | ✓ | ✓ | ✓ | Automated deep learning pipeline for slice-level and patient-level PCa diagnosis with DWI | Data are inherently biased | ||||||

| [76] | ML model that classifies PI-RADS score 3 lesions as non-csPCa or csPCa | ✓ | ✓ | ✓ | ✓ | Robust feature extraction techniques were used | Relatively small dataset for training the model | ||||||

| [68] | PCa risk classification using ML techniques | ✓ | ✓ | ✓ | ✓ | PCa risk estimated from PSA, free PSA and patient age | Dataset was collected retrospectively; thus, patient management was not consistent and oncological outcomes were not available | ||||||

| [8] | Prostate detection, segmentation and localization in MRI | ✓ | ✓ | ✓ | ✓ | Ability to segment and diagnose prostate images | Lack of availability of manually annotated data | ||||||

| [70] | Impact of scanning systems and cycle-GAN-based normalization on the performance of DL algorithms in detecting PCa | ✓ | ✓ | ✓ | ✓ | Model was developed on a multi-center cohort | The data showed significant class imbalance | ||||||

| [83] | Transfer learning approach using breast histopathological images for detection of PCa | ✓ | ✓ | ✓ | ✓ | Transfer learning across cancer domains was demonstrated | No extensive pre-training of the models | ||||||

| [82] | Feature extraction framework for prostate tissue in US | ✓ | ✓ | ✓ | ✓ | High-dimensional temporal ultrasound features were used to detect PCa | All originally labeled data were treated as suspicious for PCa | ||||||

| [77] | Multimodality imaging to improve detection of PCa foci during biopsy | ✓ | ✓ | ✓ | ✓ | Improved targeting of PCa biopsies through generation of cancer likelihood maps | Transfer learning network was not used | ||||||

| [85] | Image-based PCa staging support system | ✓ | ✓ | ✓ | ✓ | Expert assessment for identification and anatomical location classification of suspicious uptake sites in whole-body images of PCa patients | A limited number of subjects with advanced prostate cancer were included | ||||||

| [78] | Risk assessment of csPCa using mpMRI | ✓ | ✓ | ✓ | ✓ | Established that using risk estimates from the developed 3D CNN is a better strategy | Single-center study on a heterogeneous cohort of limited size | ||||||

| [79] | Improved segmentation technique for csPCa | ✓ | ✓ | ✓ | ✓ | Automatic segmentation of csPCa combined with radiomics modeling | Small number of patients | ||||||

| [80] | Lesion detection and novel segmentation method for both local and global image features | ✓ | ✓ | ✓ | ✓ | Novel panoptic model for PCa lesion detection | Method was used for a single lesion only | ||||||

| [86] | Incidental detection of csPCa on CT scans | ✓ | ✓ | ✓ | ✓ | Deep learning pipeline for detection of prostate cancer on CT scans | Only CT data were used | ||||||

| [71] | Gleason grading of whole-slide images of prostatectomies | ✓ | ✓ | ✓ | ✓ | Gleason scoring of whole-slide images with millions of images | Grade group informs postoperative treatment decision only | ||||||

| [72] | Detection of PCa tissue in whole-slide images | ✓ | ✓ | ✓ | ✓ | Robust analysis of histological images in patients with PCa | More data are needed to train the model for better accuracy | ||||||

| [73] | Segmentation and grading of epithelial tissue for PCa region detection | ✓ | ✓ | ✓ | ✓ | High performance characteristics of a multi-task algorithm for PCa interpretation | Misclassifications were occasionally discovered in the output | ||||||

| [74] | Image analysis AI support for PCa and tissue region detection | ✓ | ✓ | ✓ | ✓ | High accuracy in image examination | Increased time required to label the dataset | ||||||

| [84] | Gleason grading for PCa in biopsy tissues | ✓ | ✓ | ✓ | ✓ | Strength in determining the stage of PCa | Relatively small dataset | ||||||
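Several studies in this table ([8], [73], [79], [80]) address segmentation, but the table does not state how agreement with the reference annotation was scored. As an illustration only, a common choice is the Dice similarity coefficient, 2|P ∩ R| / (|P| + |R|), where P and R are the predicted and reference foreground voxels. A minimal pure-Python sketch over flattened binary masks, using the hypothetical function name `dice_coefficient`:

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], ref: Sequence[int]) -> float:
    """Dice = 2|P & R| / (|P| + |R|) for binary masks flattened to 0/1 values."""
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, r in zip(pred, ref) if p and r)
    # Foreground voxel counts of each mask, summed.
    total = sum(1 for p in pred if p) + sum(1 for r in ref if r)
    if total == 0:
        # Both masks empty: scored as perfect agreement by convention here.
        return 1.0
    return 2.0 * intersection / total
```

For example, `dice_coefficient([1, 1, 0, 0], [1, 0, 1, 0])` gives 0.5. Returning 1.0 when both masks are empty is a convention chosen for this sketch; other toolkits may return 0 or NaN instead.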

Appendix B. ScienceDirect Papers on Prostate Cancer Detection Using Machine Learning, Deep Learning or Artificial Intelligence Methods

| Ref. | Problem Addressed | Imaging Modality: MRI | Imaging Modality: US | Imaging Modality: Others | ML Type: Transfer | ML Type: SL | ML Type: UL | Data Collection: Primary | Data Collection: Secondary | Medic-Verified: Yes | Medic-Verified: No | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [87] | Effect of labeling strategies on performance of PCa detection | ✓ | ✓ | ✓ | ✓ | Identification of aggressive and indolent prostate cancer on MRI | Number of samples used is relatively small and they were obtained from a single institution | ||||||

| [88] | Detection of PCa with an explainable early detection classification model | ✓ | ✓ | ✓ | ✓ | Improved the classification accuracy of prostate cancer from MRI and US images with fusion algorithm models | Difficulty in selecting which MRI images to feed into the fusion model | ||||||

| [89] | Radiomics and machine learning techniques to detect PCa aggressiveness at biopsy | ✓ | ✓ | ✓ | ✓ | Integrating image-derived radiomics features with automatic machine learning approaches gave high accuracy in PCa detection | Relatively small sample size | ||||||

| [92] | Segmentation of prostate glands with an ensemble of deep and classical learning methods | ✓ | ✓ | ✓ | ✓ | Accurate detection of prostate glands to assist pathologists in making an accurate diagnosis | Study was based on stroma segmentation only | ||||||

| [93] | An automated grading PCa detection model with YOLO | ✓ | ✓ | ✓ | ✓ | Grading of prostate biopsies with high performance | Relatively small amount of data used | ||||||

| [90] | Texture analysis (TA) and machine learning models to detect extraprostatic extension (EPE) | ✓ | ✓ | ✓ | ✓ | Combined TA and ML approaches for predicting the presence of EPE in PCa patients | Small number of patients | ||||||

| [94] | Diagnosis of PCa by integrating multiple deep learning approaches | ✓ | ✓ | ✓ | ✓ | Improved detection of PCa without significantly increasing model complexity | Limited dataset and use of bilinear interpolation only | ||||||

| [91] | Detection of PCa with an improved feature extraction method and ensemble machine learning | ✓ | ✓ | ✓ | ✓ | Combined machine learning techniques to improve the GrowCut algorithm and the Zernike feature selection algorithm | Limited dataset | ||||||

| [95] | Prostate biopsy calculator using an automated machine learning technique | ✓ | ✓ | ✓ | ✓ | First report of an ML approach to formulating the PBCG risk calculator (RC) | No external validation was performed | ||||||

| [96] | Upgrading a patient from MRI-targeted biopsy to active surveillance with machine learning model | ✓ | ✓ | ✓ | ✓ | Developed an ML model that provides diagnostic assessments for PCa patients | Many missing values in the dataset and a small dataset | ||||||

| [97] | Pathological grading of PCa from a single US image | ✓ | ✓ | ✓ | ✓ | High accuracy in grading of PCa from single ultrasound images without puncture biopsy | Low detection rate of PCa lesion regions and class imbalance in the data | ||||||

| [99] | A radiomics deeply supervised segmentation method for prostate gland and lesion | ✓ | ✓ | ✓ | ✓ | Prostate lesion detection and prostate gland delineation with the inclusion of local and global features | Small sample size | ||||||

| [100] | Performance comparison of promising machine learning models on typical PCa radiomics | ✓ | ✓ | ✓ | ✓ | GBDT model implemented with CatBoost gave consistently high performance | Only radiomic features of the whole prostate on T2-w MRI were used | ||||||

| [101] | SVM-based Gleason grading of PCa from mpMRI image features | ✓ | ✓ | ✓ | ✓ | Accurate and automatic discrimination of low-grade and high-grade prostate cancer in the central gland | The number of study patients was relatively small and the classes were highly unbalanced | ||||||

| [102] | Deep learning model to simplify PCa image registration in order to map regions of interest | ✓ | ✓ | ✓ | ✓ | Image alignment in developing radiomic and deep learning approaches for early detection of PCa | Segmentation on MRI, histopathology images and gross rotation were not captured | ||||||

| [98] | An interpretable PCa ensemble deep learning model to enhance decision making for clinicians | ✓ | ✓ | ✓ | ✓ | Stacking-based tree ensemble method used | Relatively small sample size was used | ||||||

| [103] | Ensemble feature extraction methods for detection of aggressive and indolent PCa | ✓ | ✓ | ✓ | ✓ | Radiology–pathology fusion-based algorithm for detection of indolent and aggressive PCa | Training cohort was relatively small and was taken from a single institution | ||||||

| [104] | Detection of PCa using 3D CAD in bpMR images | ✓ | ✓ | ✓ | ✓ | Demonstration of a deep learning-based 3D detection and diagnosis system for csPCa | Prostate scans were acquired using MRI scanners from a single vendor | ||||||

| [106] | PCa localization and classification with ML | ✓ | ✓ | ✓ | ✓ | Automatic classification of 3D PCa | A larger dataset is needed | ||||||

| [105] | DL methods tested for segmentation of MR images | ✓ | ✓ | ✓ | ✓ | Automatic classification of PCa in MRI | 3D images are relatively small | ||||||

| [108] | Segmenting MRI of PCa using deep learning techniques | ✓ | ✓ | ✓ | ✓ | Established that ensemble DCNNs initialized with pre-trained weights substantially improve segmentation accuracy | Approach is time-consuming | ||||||

| [109] | Detection of PCa leveraging multiple MRI modalities | ✓ | ✓ | ✓ | ✓ | Novel model that detects PCa using different MRI modalities while maintaining its robustness | A dual-attention model was not explored in depth | ||||||

| [110] | GANs investigated for detection of PCa on MRI | ✓ | ✓ | ✓ | ✓ | GAN models in an end-to-end pipeline for automated PCa detection on T2W MRI | Only T2-weighted scans were used in this study | ||||||

| [111] | Gleason grading for PCa detection with deep learning techniques | ✓ | ✓ | ✓ | ✓ | Classification of PCa into different grade groups | More data are needed for higher accuracy, and diagnostic accuracy also needs further improvement | ||||||

| [112] | Hierarchical clustering (HC) for early diagnosis of PCa | ✓ | ✓ | ✓ | ✓ | Detection of PCa with unsupervised HC on mpMRI | Relatively small number of patients, and other quantitative parameters and clinical information were not included | ||||||

| [107] | Ensemble method of mpMRI and PHI for diagnosis of early PCa | ✓ | ✓ | ✓ | ✓ | The presence of PCa is identified automatically | Only the design of co-trained CNNs for fusing ADC and T2w images is presented, and performance is based on two image modalities | ||||||

| [113] | Ensemble method of mpMRI and PHI for diagnosis of early PCa | ✓ | ✓ | ✓ | ✓ | Combined PHI and mpMRI to obtain higher csPCa detection | Relatively small amount of data for training | ||||||

| [114] | An improved MRI CAD system for significant PCa detection | ✓ | ✓ | ✓ | ✓ | Improved inter-reader agreement and diagnostic performance for PCa detection | Lack of reproducibility of prostate MRI interpretations | ||||||

| [115] | Comparison of deep learning models for PCa classification by grade group (GG) | ✓ | ✓ | ✓ | ✓ | Combination of strongly and weakly supervised models | Data labeling is time-consuming | ||||||
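Class imbalance is listed as a weakness in [97] and [101]. One widely used mitigation, shown here as an illustration rather than as the method of either study, weights each class in the training loss by n_samples / (n_classes × n_class_samples), which is the heuristic scikit-learn applies for `class_weight="balanced"`. A minimal sketch with the hypothetical function name `inverse_frequency_weights`:

```python
from collections import Counter


def inverse_frequency_weights(labels: list[str]) -> dict[str, float]:
    """Weight each class by n_samples / (n_classes * n_class_samples).

    A class that is k times rarer than another receives a weight k times
    larger, and the per-sample weights average to 1 over the dataset.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical cohort: 8 low-grade and 2 high-grade cases.
weights = inverse_frequency_weights(["low"] * 8 + ["high"] * 2)
print(weights)
```

With eight low-grade and two high-grade cases, the weights are 0.625 and 2.5, so each high-grade sample contributes four times as much to the loss as a low-grade one.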

Appendix C. IEEE Xplore Papers on Prostate Cancer Detection Using Machine Learning, Deep Learning or Artificial Intelligence Methods

| Ref. | Problem Addressed | Imaging Modality: MRI | Imaging Modality: US | Imaging Modality: Others | ML Type: Transfer | ML Type: SL | ML Type: UL | Data Collection: Primary | Data Collection: Secondary | Medic-Verified: Yes | Medic-Verified: No | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [14] | Classification of MRI for diagnosis of PCa. | ✓ | ✓ | ✓ | ✓ | Stable model training resulted in high accuracy. | Only diffusion-weighted images were used. | ||||||

| [116] | Prediction of PCa using machine learning classifiers. | ✓ | ✓ | ✓ | ✓ | Improved LR for better prediction. | mpMRI was not considered. | ||||||

| [120] | PCa detection in CEUS images through a deep learning framework. | ✓ | ✓ | ✓ | ✓ | Captured dynamic information through 3D convolution operations. | Limited dataset. | ||||||

| [117] | Deep learning regression analysis for PCa detection and Gleason scoring. | ✓ | ✓ | ✓ | ✓ | Improvement of PCa grading and detection with soft-label ordinal regression. | A fixed-size box in the middle of the image was used for segmentation. | ||||||

| [118] | PCa detection with classical and deep learning models. | ✓ | ✓ | ✓ | ✓ | Feature extraction through hand-crafted and non-hand-crafted methods, with a comparison of their performance. | Only LSTM with possible bit parity was used. | ||||||

| [122] | PCa detection with WSI using CNN. | ✓ | ✓ | ✓ | ✓ | Developed an excellent patch-scoring model. | Model was limited with heatmap. | ||||||

| [124] | An improved Gleason score and PCa detection with a better feature extraction technique. | ✓ | ✓ | ✓ | ✓ | Enhancing radiomics with deep entropy feature generation through pre-trained CNN. | Only one feature extraction technique was utilized. | ||||||

| [125] | csPCa detection using a deep neural network. | ✓ | ✓ | ✓ | ✓ | The neural network was optimized with different loss functions, resulting in high accuracy in detecting PCa. | Only a 2D network was used. | ||||||

| [123] | Epithelial cell detection and Gleason grading in histological images. | ✓ | ✓ | ✓ | ✓ | Developed a model with the ability to perform multi-task prediction. | Experiment was not based on patient-wise validation. | ||||||

| [119] | Detection of PCa lesions with transfer learning. | ✓ | ✓ | ✓ | ✓ | Compared three CNN models and identified the best-performing one. | Limited dataset used for testing the developed model. | ||||||

| [127] | Early diagnosis of PCa using CNN-CAD. | ✓ | ✓ | ✓ | ✓ | PCa segmentation, feature extraction and classification were performed with an improved CNN-CAD. | Classification was based on only one b-value. | ||||||

| [126] | Prediction of PCa lesions and their aggressiveness through Gleason grading. | ✓ | ✓ | ✓ | ✓ | A multi-class CNN (FocalNet) was developed to predict PCa. | MRI-invisible lesions were not included. | ||||||

| [128] | Detection of PCa with CNN. | ✓ | ✓ | ✓ | ✓ | Transfer learning with reduced MRI size to lower complexity gave high accuracy in PCa detection. | Small dataset available. | ||||||

| [129] | Classification of PCa lesions into high-grade and low-grade through evaluation of radiomics. | ✓ | ✓ | ✓ | ✓ | Established that radiomics can effectively distinguish between high-grade and low-grade PCa tumors. | Some cases in the ground-truth data may be incorrect. | ||||||

| [130] | PCa MRI segmentation improvement. | ✓ | ✓ | ✓ | ✓ | Developed an improved 2D PCa segmentation network. | Focused only on MRI segmentation of PCa. | ||||||

| [121] | Improved TRUS for csPCa detection. | ✓ | ✓ | ✓ | ✓ | Combined acoustic radiation force impulse (ARFI) imaging and shear wave elasticity imaging (SWEI) to give an improved csPCa detection. | A limited number of patients was used in the experiment. | ||||||
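[123] notes that its experiment was not based on patient-wise validation, and [15] assumes independence among images. When several slices or patches come from the same patient, a random split at image level can place one patient in both the training and test sets and inflate test scores. A minimal sketch of a patient-level split follows; the names `patient_wise_split` and `patient_of` are hypothetical, and the fixed seed is only there for reproducibility.

```python
import random


def patient_wise_split(
    slice_ids: list[str],
    patient_of: dict[str, str],
    test_fraction: float = 0.2,
    seed: int = 0,
) -> tuple[list[str], list[str]]:
    """Split slice IDs into train/test sets so that no patient appears in both."""
    # Collect unique patients; sort first so the shuffle is reproducible.
    patients = sorted({patient_of[s] for s in slice_ids})
    rng = random.Random(seed)
    rng.shuffle(patients)
    # Hold out at least one patient for testing.
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])
    train = [s for s in slice_ids if patient_of[s] not in test_patients]
    test = [s for s in slice_ids if patient_of[s] in test_patients]
    return train, test


# Hypothetical data: 5 patients with 3 slices each.
patient_of = {f"p{p}_s{s}": f"p{p}" for p in range(5) for s in range(3)}
train, test = patient_wise_split(list(patient_of), patient_of)
print(len(train), len(test))
```

This prints `12 3`: one of the five patients, with all three of their slices, is held out. scikit-learn's `GroupShuffleSplit` and `GroupKFold` offer the same grouping guarantee when patient IDs are passed as `groups`.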

Appendix D. PubMed Papers on Prostate Cancer Detection Using Machine Learning, Deep Learning or Artificial Intelligence Methods

| Ref. | Problem Addressed | Imaging Modality: MRI | Imaging Modality: US | Imaging Modality: Others | ML Type: Transfer | ML Type: SL | ML Type: UL | Data Collection: Primary | Data Collection: Secondary | Medic-Verified: Yes | Medic-Verified: No | Strengths | Weaknesses |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| [131] | Aggressiveness of PCa was predicted using ML and DL frameworks | ✓ | ✓ | ✓ | ✓ | Characterization of PCa according to its aggressiveness level | Sample size was relatively small and study was monocentric | ||||||

| [178] | Survival analysis of localized PCa | ✓ | ✓ | ✓ | ✓ | A large cohort of localized prostate cancer patients was used | Lack of independent external validation | ||||||

| [132] | Transfer learning approach with CNN framework for detecting PCa | ✓ | ✓ | ✓ | ✓ | Compared the performances of machine learning and deep learning in detecting PCa with multimodal feature extraction | Better results could be achieved with more datasets | ||||||

| [135] | Detection of csPCa with deep learning-based imaging prediction using PI-RADS scoring and clinical variables | ✓ | ✓ | ✓ | ✓ | Models built were validated on different external sites | Manual delineations of the prostate gland were used with possibility of inter-reader variability | ||||||

| [134] | PCa detection using UNet | ✓ | ✓ | ✓ | ✓ | DL-based AI approach can predict prostate cancer lesions | Only one highly experienced genitourinary radiologist was involved in annotation, and histopathology verification was based on targeted biopsies rather than surgical specimens | ||||||

| [133] | UNet architecture for PCa detection with minimal dataset | ✓ | ✓ | ✓ | ✓ | Detection of csPCa with prior knowledge on DL-based zonal segmentation | All data came from one MRI vendor (Siemens) | ||||||

| [136] | Bi-modal deep learning model fusion of pathology–radiology data for PCa diagnostic classification | ✓ | ✓ | ✓ | ✓ | Complementary information from biopsy report and MRI used to improve prediction of PCa | Only axial T2w MRI was used in this study, and MRI was labeled using pathology labels, which may include inaccurate histological findings | ||||||

| [137] | ANN was used to accurately predict PCa | ✓ | ✓ | ✓ | ✓ | Accurately predicted PCa on prostate biopsy | The sample size was limited | ||||||
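Inter-reader variability of manual delineations is raised in [135], and [114] reports inter-reader agreement as an outcome. Agreement between two readers' categorical scores is often summarized with Cohen's kappa, κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from each reader's marginal frequencies. A minimal sketch of the unweighted form follows; ordinal scales such as PI-RADS are often assessed with a weighted variant instead, and the function name `cohens_kappa` is hypothetical.

```python
from collections import Counter


def cohens_kappa(reader_a: list[int], reader_b: list[int]) -> float:
    """Unweighted Cohen's kappa for two readers scoring the same cases."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("need two non-empty score lists of equal length")
    n = len(reader_a)
    # Observed agreement: fraction of cases with identical scores.
    p_o = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    # Chance agreement from each reader's marginal category frequencies.
    count_a, count_b = Counter(reader_a), Counter(reader_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / n**2
    if p_e == 1.0:
        # Both readers used a single, identical category for every case.
        return 1.0
    return (p_o - p_e) / (1 - p_e)
```

For instance, two readers who agree on three of four binary calls, with marginal counts (2, 2) and (1, 3), give p_o = 0.75, p_e = 0.5 and κ = 0.5.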

References

- Litwin, M.S.; Tan, H.-J. The diagnosis and treatment of prostate cancer: A review. JAMA 2017, 317, 2532–2542.

- Akinnuwesi, B.A.; Olayanju, K.A.; Aribisala, B.S.; Fashoto, S.G.; Mbunge, E.; Okpeku, M.; Owate, P. Application of support vector machine algorithm for early differential diagnosis of prostate cancer. Data Sci. Manag. 2023, 6, 1–12.

- Ayenigbara, I.O. Risk-Reducing Measures for Cancer Prevention. Korean J. Fam. Med. 2023, 44, 76.

- Musekiwa, A.; Moyo, M.; Mohammed, M.; Matsena-Zingoni, Z.; Twabi, H.S.; Batidzirai, J.M.; Singini, G.C.; Kgarosi, K.; Mchunu, N.; Nevhungoni, P. Mapping evidence on the burden of breast, cervical, and prostate cancers in Sub-Saharan Africa: A scoping review. Front. Public Health 2022, 10, 908302.

- Walsh, P.C.; Worthington, J.F. Dr. Patrick Walsh’s Guide to Surviving Prostate Cancer; Grand Central Life & Style: New York, NY, USA, 2010.

- Hayes, R.; Pottern, L.; Strickler, H.; Rabkin, C.; Pope, V.; Swanson, G.; Greenberg, R.; Schoenberg, J.; Liff, J.; Schwartz, A. Sexual behaviour, STDs and risks for prostate cancer. Br. J. Cancer 2000, 82, 718–725.

- Plym, A.; Zhang, Y.; Stopsack, K.H.; Delcoigne, B.; Wiklund, F.; Haiman, C.; Kenfield, S.A.; Kibel, A.S.; Giovannucci, E.; Penney, K.L. A healthy lifestyle in men at increased genetic risk for prostate cancer. Eur. Urol. 2023, 83, 343–351.

- Alkadi, R.; Taher, F.; El-Baz, A.; Werghi, N. A deep learning-based approach for the detection and localization of prostate cancer in T2 magnetic resonance images. J. Digit. Imaging 2019, 32, 793–807.

- Ishioka, J.; Matsuoka, Y.; Uehara, S.; Yasuda, Y.; Kijima, T.; Yoshida, S.; Yokoyama, M.; Saito, K.; Kihara, K.; Numao, N. Computer-aided diagnosis of prostate cancer on magnetic resonance imaging using a convolutional neural network algorithm. BJU Int. 2018, 122, 411–417.

- Reda, I.; Shalaby, A.; Abou El-Ghar, M.; Khalifa, F.; Elmogy, M.; Aboulfotouh, A.; Hosseini-Asl, E.; El-Baz, A.; Keynton, R. A new NMF-autoencoder based CAD system for early diagnosis of prostate cancer. In Proceedings of the 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016.

- Wildeboer, R.R.; van Sloun, R.J.; Wijkstra, H.; Mischi, M. Artificial intelligence in multiparametric prostate cancer imaging with focus on deep-learning methods. Comput. Methods Programs Biomed. 2020, 189, 105316.

- Aribisala, B.; Olabanjo, O. Medical image processor and repository–mipar. Inform. Med. Unlocked 2018, 12, 75–80.

- Shen, D.; Wu, G.; Suk, H.-I. Deep learning in medical image analysis. Annu. Rev. Biomed. Eng. 2017, 19, 221–248.

- Liu, Y.; An, X. A classification model for the prostate cancer based on deep learning. In Proceedings of the 2017 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Shanghai, China, 14–16 October 2017.

- Wang, X.; Yang, W.; Weinreb, J.; Han, J.; Li, Q.; Kong, X.; Yan, Y.; Ke, Z.; Luo, B.; Liu, T. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 2017, 7, 15415.

- Suarez-Ibarrola, R.; Hein, S.; Reis, G.; Gratzke, C.; Miernik, A. Current and future applications of machine and deep learning in urology: A review of the literature on urolithiasis, renal cell carcinoma, and bladder and prostate cancer. World J. Urol. 2020, 38, 2329–2347.

- Almeida, G.; Tavares, J.M.R. Deep learning in radiation oncology treatment planning for prostate cancer: A systematic review. J. Med. Syst. 2020, 44, 179.

- Khan, Z.; Yahya, N.; Alsaih, K.; Al-Hiyali, M.I.; Meriaudeau, F. Recent automatic segmentation algorithms of MRI prostate regions: A review. IEEE Access 2021, 9, 97878–97905.

- Roest, C.; Fransen, S.J.; Kwee, T.C.; Yakar, D. Comparative Performance of Deep Learning and Radiologists for the Diagnosis and Localization of Clinically Significant Prostate Cancer at MRI: A Systematic Review. Life 2022, 12, 1490.

- Castillo, T.J.M.; Arif, M.; Niessen, W.J.; Schoots, I.G.; Veenland, J.F. Automated classification of significant prostate cancer on MRI: A systematic review on the performance of machine learning applications. Cancers 2020, 12, 1606.

- Michaely, H.J.; Aringhieri, G.; Cioni, D.; Neri, E. Current value of biparametric prostate MRI with machine-learning or deep-learning in the detection, grading, and characterization of prostate cancer: A systematic review. Diagnostics 2022, 12, 799.

- Naik, N.; Tokas, T.; Shetty, D.K.; Hameed, B.Z.; Shastri, S.; Shah, M.J.; Ibrahim, S.; Rai, B.P.; Chłosta, P.; Somani, B.K. Role of Deep Learning in Prostate Cancer Management: Past, Present and Future Based on a Comprehensive Literature Review. J. Clin. Med. 2022, 11, 3575.

- Sarkis-Onofre, R.; Catalá-López, F.; Aromataris, E.; Lockwood, C. How to properly use the PRISMA Statement. Syst. Rev. 2021, 10, 117.

- Hricak, H.; Choyke, P.L.; Eberhardt, S.C.; Leibel, S.A.; Scardino, P.T. Imaging prostate cancer: A multidisciplinary perspective. Radiology 2007, 243, 28–53.

- Yu, K.K.; Hricak, H. Imaging prostate cancer. Radiol. Clin. North Am. 2000, 38, 59–85.

- Cornud, F.; Brolis, L.; Delongchamps, N.B.; Portalez, D.; Malavaud, B.; Renard-Penna, R.; Mozer, P. TRUS–MRI image registration: A paradigm shift in the diagnosis of significant prostate cancer. Abdom. Imaging 2013, 38, 1447–1463. [Google Scholar] [CrossRef]

- Reynier, C.; Troccaz, J.; Fourneret, P.; Dusserre, A.; Gay-Jeune, C.; Descotes, J.L.; Bolla, M.; Giraud, J.Y. MRI/TRUS data fusion for prostate brachytherapy. Preliminary results. Med. Phys. 2004, 31, 1568–1575. [Google Scholar] [CrossRef] [PubMed]

- Rasch, C.; Barillot, I.; Remeijer, P.; Touw, A.; van Herk, M.; Lebesque, J.V. Definition of the prostate in CT and MRI: A multi-observer study. Int. J. Radiat. Oncol. 1999, 43, 57–66. [Google Scholar] [CrossRef]

- Pezaro, C.; Woo, H.H.; Davis, I.D. Prostate cancer: Measuring PSA. Intern. Med. J. 2014, 44, 433–440. [Google Scholar] [CrossRef]

- Takahashi, N.; Inoue, T.; Lee, J.; Yamaguchi, T.; Shizukuishi, K. The roles of PET and PET/CT in the diagnosis and management of prostate cancer. Oncology 2008, 72, 226–233. [Google Scholar] [CrossRef]

- Sturge, J.; Caley, M.P.; Waxman, J. Bone metastasis in prostate cancer: Emerging therapeutic strategies. Nat. Rev. Clin. Oncol. 2011, 8, 357. [Google Scholar] [CrossRef]

- Raja, J.; Ramachandran, N.; Munneke, G.; Patel, U. Current status of transrectal ultrasound-guided prostate biopsy in the diagnosis of prostate cancer. Clin. Radiol. 2006, 61, 142–153. [Google Scholar] [CrossRef]

- Bai, H.; Xia, W.; Ji, X.; He, D.; Zhao, X.; Bao, J.; Zhou, J.; Wei, X.; Huang, Y.; Li, Q. Multiparametric magnetic resonance imaging-based peritumoral radiomics for preoperative prediction of the presence of extracapsular extension with prostate cancer. J. Magn. Reson. Imaging 2021, 54, 1222–1230. [Google Scholar] [CrossRef]

- Jansen, B.H.; Nieuwenhuijzen, J.A.; Oprea-Lager, D.E.; Yska, M.J.; Lont, A.P.; van Moorselaar, R.J.; Vis, A.N. Adding multiparametric MRI to the MSKCC and Partin nomograms for primary prostate cancer: Improving local tumor staging? In Urologic Oncology: Seminars and Original Investigations; Elsevier: Amsterdam, The Netherlands, 2019. [Google Scholar]

- Maurer, T.; Eiber, M.; Schwaiger, M.; Gschwend, J.E. Current use of PSMA–PET in prostate cancer management. Nat. Rev. Urol. 2016, 13, 226–235. [Google Scholar] [CrossRef] [PubMed]

- Stavrinides, V.; Papageorgiou, G.; Danks, D.; Giganti, F.; Pashayan, N.; Trock, B.; Freeman, A.; Hu, Y.; Whitaker, H.; Allen, C. Mapping PSA density to outcome of MRI-based active surveillance for prostate cancer through joint longitudinal-survival models. Prostate Cancer Prostatic Dis. 2021, 24, 1028–1031. [Google Scholar] [CrossRef] [PubMed]

- Fuchsjäger, M.; Shukla-Dave, A.; Akin, O.; Barentsz, J.; Hricak, H. Prostate cancer imaging. Acta Radiol. 2008, 49, 107–120. [Google Scholar] [CrossRef]

- Ghafoor, S.; Burger, I.A.; Vargas, A.H. Multimodality imaging of prostate cancer. J. Nucl. Med. 2019, 60, 1350–1358.

- Rohrmann, S.; Roberts, W.W.; Walsh, P.C.; Platz, E.A. Family history of prostate cancer and obesity in relation to high-grade disease and extraprostatic extension in young men with prostate cancer. Prostate 2003, 55, 140–146.

- Porter, M.P.; Stanford, J.L. Obesity and the risk of prostate cancer. Prostate 2005, 62, 316–321.

- Gann, P.H. Risk factors for prostate cancer. Rev. Urol. 2002, 4 (Suppl. 5), S3.

- Tian, W.; Osawa, M. Prevalent latent adenocarcinoma of the prostate in forensic autopsies. J. Clin. Pathol. Forensic Med. 2015, 6, 11–13.

- Marley, A.R.; Nan, H. Epidemiology of colorectal cancer. Int. J. Mol. Epidemiol. Genet. 2016, 7, 105.

- Kumagai, H.; Zempo-Miyaki, A.; Yoshikawa, T.; Tsujimoto, T.; Tanaka, K.; Maeda, S. Lifestyle modification increases serum testosterone level and decrease central blood pressure in overweight and obese men. Endocr. J. 2015, 62, 423–430.

- Moyad, M.A. Is obesity a risk factor for prostate cancer, and does it even matter? A hypothesis and different perspective. Urology 2002, 59, 41–50.

- Parikesit, D.; Mochtar, C.A.; Umbas, R.; Hamid, A.R.A.H. The impact of obesity towards prostate diseases. Prostate Int. 2016, 4, 1–6.

- Tse, L.A.; Lee, P.M.Y.; Ho, W.M.; Lam, A.T.; Lee, M.K.; Ng, S.S.M.; He, Y.; Leung, K.-S.; Hartle, J.C.; Hu, H. Bisphenol A and other environmental risk factors for prostate cancer in Hong Kong. Environ. Int. 2017, 107, 1–7.

- Vaidyanathan, V.; Naidu, V.; Kao, C.H.-J.; Karunasinghe, N.; Bishop, K.S.; Wang, A.; Pallati, R.; Shepherd, P.; Masters, J.; Zhu, S. Environmental factors and risk of aggressive prostate cancer among a population of New Zealand men–a genotypic approach. Mol. BioSystems 2017, 13, 681–698.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image segmentation using deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3523–3542.

- Zhang, X.; Li, H.; Wang, C.; Cheng, W.; Zhu, Y.; Li, D.; Jing, H.; Li, S.; Hou, J.; Li, J. Evaluating the accuracy of breast cancer and molecular subtype diagnosis by ultrasound image deep learning model. Front. Oncol. 2021, 11, 623506.

- Tammina, S. Transfer learning using VGG-16 with deep convolutional neural network for classifying images. Int. J. Sci. Res. Publ. (IJSRP) 2019, 9, 143–150.

- Abbas, A.; Abdelsamea, M.M.; Gaber, M.M. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl. Intell. 2021, 51, 854–864.

- Christlein, V.; Spranger, L.; Seuret, M.; Nicolaou, A.; Král, P.; Maier, A. Deep generalized max pooling. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019.

- Sharma, S.; Sharma, S.; Athaiya, A. Activation functions in neural networks. Towards Data Sci. 2017, 6, 310–316.

- Sibi, P.; Jones, S.A.; Siddarth, P. Analysis of different activation functions using back propagation neural networks. J. Theor. Appl. Inf. Technol. 2013, 47, 1264–1268.

- Fu, J.; Zheng, H.; Mei, T. Look closer to see better: Recurrent attention convolutional neural network for fine-grained image recognition. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Yin, W.; Schütze, H.; Xiang, B.; Zhou, B. ABCNN: Attention-based convolutional neural network for modeling sentence pairs. Trans. Assoc. Comput. Linguist. 2016, 4, 259–272.

- Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision transformers for dense prediction. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

- Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

- Ikromjanov, K.; Bhattacharjee, S.; Hwang, Y.-B.; Sumon, R.I.; Kim, H.-C.; Choi, H.-K. Whole slide image analysis and detection of prostate cancer using vision transformers. In Proceedings of the 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Republic of Korea, 21–24 February 2022.

- Singla, D.; Cimen, F.; Narasimhulu, C.A. Novel artificial intelligent transformer U-NET for better identification and management of prostate cancer. Mol. Cell. Biochem. 2023, 478, 1439–1445.

- Pachetti, E.; Colantonio, S. 3D-Vision-Transformer Stacking Ensemble for Assessing Prostate Cancer Aggressiveness from T2w Images. Bioengineering 2023, 10, 1015.

- Pachetti, E.; Colantonio, S.; Pascali, M.A. On the effectiveness of 3D vision transformers for the prediction of prostate cancer aggressiveness. In Image Analysis and Processing; Springer: Cham, Switzerland, 2022.

- Li, C.; Deng, M.; Zhong, X.; Ren, J.; Chen, X.; Chen, J.; Xiao, F.; Xu, H. Multi-view radiomics and deep learning modeling for prostate cancer detection based on multi-parametric MRI. Front. Oncol. 2023, 13, 1198899.

- Papp, L.; Spielvogel, C.; Grubmüller, B.; Grahovac, M.; Krajnc, D.; Ecsedi, B.; Sareshgi, R.A.; Mohamad, D.; Hamboeck, M.; Rausch, I. Supervised machine learning enables non-invasive lesion characterization in primary prostate cancer with [68Ga]Ga-PSMA-11 PET/MRI. Eur. J. Nucl. Med. Mol. Imaging 2021, 48, 1795–1805.

- Yoo, S.; Gujrathi, I.; Haider, M.A.; Khalvati, F. Prostate cancer detection using deep convolutional neural networks. Sci. Rep. 2019, 9, 19518.

- Perera, M.; Mirchandani, R.; Papa, N.; Breemer, G.; Effeindzourou, A.; Smith, L.; Swindle, P.; Smith, E. PSA-based machine learning model improves prostate cancer risk stratification in a screening population. World J. Urol. 2021, 39, 1897–1902.

- Otálora, S.; Marini, N.; Müller, H.; Atzori, M. Semi-weakly supervised learning for prostate cancer image classification with teacher-student deep convolutional networks. In Interpretable and Annotation-Efficient Learning for Medical Image Computing, Proceedings of the Third International Workshop, iMIMIC 2020, Second International Workshop, MIL3ID 2020, and 5th International Workshop, LABELS 2020, Held in Conjunction with MICCAI 2020, Lima, Peru, 4–8 October 2020; Proceedings 3; Springer: Berlin/Heidelberg, Germany, 2020.

- Swiderska-Chadaj, Z.; de Bel, T.; Blanchet, L.; Baidoshvili, A.; Vossen, D.; van der Laak, J.; Litjens, G. Impact of rescanning and normalization on convolutional neural network performance in multi-center, whole-slide classification of prostate cancer. Sci. Rep. 2020, 10, 14398.

- Nagpal, K.; Foote, D.; Liu, Y.; Chen, P.-H.C.; Wulczyn, E.; Tan, F.; Olson, N.; Smith, J.L.; Mohtashamian, A.; Wren, J.H. Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. NPJ Digit. Med. 2019, 2, 48.

- Tolkach, Y.; Dohmgörgen, T.; Toma, M.; Kristiansen, G. High-accuracy prostate cancer pathology using deep learning. Nat. Mach. Intell. 2020, 2, 411–418.

- Singhal, N.; Soni, S.; Bonthu, S.; Chattopadhyay, N.; Samanta, P.; Joshi, U.; Jojera, A.; Chharchhodawala, T.; Agarwal, A.; Desai, M. A deep learning system for prostate cancer diagnosis and grading in whole slide images of core needle biopsies. Sci. Rep. 2022, 12, 3383.

- Del Rio, M.; Lianas, L.; Aspegren, O.; Busonera, G.; Versaci, F.; Zelic, R.; Vincent, P.H.; Leo, S.; Pettersson, A.; Akre, O. AI support for accelerating histopathological slide examinations of prostate cancer in clinical studies. In Image Analysis and Processing. ICIAP 2022 Workshops, Proceedings of the ICIAP International Workshops, Lecce, Italy, 23–27 May 2022; Revised Selected Papers, Part I; Springer: Berlin/Heidelberg, Germany, 2022.

- Zhao, L.; Bao, J.; Qiao, X.; Jin, P.; Ji, Y.; Li, Z.; Zhang, J.; Su, Y.; Ji, L.; Shen, J. Predicting clinically significant prostate cancer with a deep learning approach: A multicentre retrospective study. Eur. J. Nucl. Med. Mol. Imaging 2023, 50, 727–741.

- Hou, Y.; Bao, M.-L.; Wu, C.-J.; Zhang, J.; Zhang, Y.-D.; Shi, H.-B. A radiomics machine learning-based redefining score robustly identifies clinically significant prostate cancer in equivocal PI-RADS score 3 lesions. Abdom. Radiol. 2020, 45, 4223–4234.

- Sedghi, A.; Mehrtash, A.; Jamzad, A.; Amalou, A.; Wells, W.M.; Kapur, T.; Kwak, J.T.; Turkbey, B.; Choyke, P.; Pinto, P. Improving detection of prostate cancer foci via information fusion of MRI and temporal enhanced ultrasound. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1215–1223.

- Deniffel, D.; Abraham, N.; Namdar, K.; Dong, X.; Salinas, E.; Milot, L.; Khalvati, F.; Haider, M.A. Using decision curve analysis to benchmark performance of a magnetic resonance imaging–based deep learning model for prostate cancer risk assessment. Eur. Radiol. 2020, 30, 6867–6876.

- Bleker, J.; Kwee, T.C.; Rouw, D.; Roest, C.; Borstlap, J.; de Jong, I.J.; Dierckx, R.A.; Huisman, H.; Yakar, D. A deep learning masked segmentation alternative to manual segmentation in biparametric MRI prostate cancer radiomics. Eur. Radiol. 2022, 32, 6526–6535.

- Yu, X.; Lou, B.; Zhang, D.; Winkel, D.; Arrahmane, N.; Diallo, M.; Meng, T.; von Busch, H.; Grimm, R.; Kiefer, B. Deep attentive panoptic model for prostate cancer detection using biparametric MRI scans. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2020, Proceedings of the 23rd International Conference, Lima, Peru, 4–8 October 2020; Proceedings, Part IV 23; Springer: Berlin/Heidelberg, Germany, 2020.

- Wildeboer, R.R.; Mannaerts, C.K.; van Sloun, R.J.; Budäus, L.; Tilki, D.; Wijkstra, H.; Salomon, G.; Mischi, M. Automated multiparametric localization of prostate cancer based on B-mode, shear-wave elastography, and contrast-enhanced ultrasound radiomics. Eur. Radiol. 2020, 30, 806–815.

- Azizi, S.; Imani, F.; Zhuang, B.; Tahmasebi, A.; Kwak, J.T.; Xu, S.; Uniyal, N.; Turkbey, B.; Choyke, P.; Pinto, P. Ultrasound-based detection of prostate cancer using automatic feature selection with deep belief networks. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part II 18; Springer: Berlin/Heidelberg, Germany, 2015.

- Khan, U.A.H.; Stürenberg, C.; Gencoglu, O.; Sandeman, K.; Heikkinen, T.; Rannikko, A.; Mirtti, T. Improving prostate cancer detection with breast histopathology images. In Digital Pathology, Proceedings of the 15th European Congress, ECDP 2019, Warwick, UK, 10–13 April 2019; Proceedings 15; Springer: Berlin/Heidelberg, Germany, 2019.

- Gour, M.; Jain, S.; Shankar, U. Application of Deep Learning Techniques for Prostate Cancer Grading Using Histopathological Images. In Computer Vision and Image Processing, Proceedings of the 6th International Conference, CVIP 2021, Rupnagar, India, 3–5 December 2021; Revised Selected Papers, Part I; Springer: Berlin/Heidelberg, Germany, 2022.

- Capobianco, N.; Sibille, L.; Chantadisai, M.; Gafita, A.; Langbein, T.; Platsch, G.; Solari, E.L.; Shah, V.; Spottiswoode, B.; Eiber, M. Whole-body uptake classification and prostate cancer staging in 68Ga-PSMA-11 PET/CT using dual-tracer learning. Eur. J. Nucl. Med. Mol. Imaging 2022, 49, 517–526.

- Korevaar, S.; Tennakoon, R.; Page, M.; Brotchie, P.; Thangarajah, J.; Florescu, C.; Sutherland, T.; Kam, N.M.; Bab-Hadiashar, A. Incidental detection of prostate cancer with computed tomography scans. Sci. Rep. 2021, 11, 7956.

- Bhattacharya, I.; Lim, D.S.; Aung, H.L.; Liu, X.; Seetharaman, A.; Kunder, C.A.; Shao, W.; Soerensen, S.J.; Fan, R.E.; Ghanouni, P. Bridging the gap between prostate radiology and pathology through machine learning. Med. Phys. 2022, 49, 5160–5181.

- Hassan, M.R.; Islam, M.F.; Uddin, M.Z.; Ghoshal, G.; Hassan, M.M.; Huda, S.; Fortino, G. Prostate cancer classification from ultrasound and MRI images using deep learning based Explainable Artificial Intelligence. Future Gener. Comput. Syst. 2022, 127, 462–472.

- Liu, B.; Cheng, J.; Guo, D.; He, X.; Luo, Y.; Zeng, Y.; Li, C. Prediction of prostate cancer aggressiveness with a combination of radiomics and machine learning-based analysis of dynamic contrast-enhanced MRI. Clin. Radiol. 2019, 74, 896.e1–896.e8.

- Stanzione, A.; Cuocolo, R.; Cocozza, S.; Romeo, V.; Persico, F.; Fusco, F.; Longo, N.; Brunetti, A.; Imbriaco, M. Detection of extraprostatic extension of cancer on biparametric MRI combining texture analysis and machine learning: Preliminary results. Acad. Radiol. 2019, 26, 1338–1344.

- Zhang, L.; Li, L.; Tang, M.; Huan, Y.; Zhang, X.; Zhe, X. A new approach to diagnosing prostate cancer through magnetic resonance imaging. Alex. Eng. J. 2021, 60, 897–904.

- Salvi, M.; Bosco, M.; Molinaro, L.; Gambella, A.; Papotti, M.; Acharya, U.R.; Molinari, F. A hybrid deep learning approach for gland segmentation in prostate histopathological images. Artif. Intell. Med. 2021, 115, 102076.

- Salman, M.E.; Çakar, G.Ç.; Azimjonov, J.; Kösem, M.; Cedimoğlu, İ.H. Automated prostate cancer grading and diagnosis system using deep learning-based Yolo object detection algorithm. Expert Syst. Appl. 2022, 201, 117148.

- Liu, Z.; Yang, C.; Huang, J.; Liu, S.; Zhuo, Y.; Lu, X. Deep learning framework based on integration of S-Mask R-CNN and Inception-v3 for ultrasound image-aided diagnosis of prostate cancer. Future Gener. Comput. Syst. 2021, 114, 358–367.

- Stojadinovic, M.; Milicevic, B.; Jankovic, S. Improved predictive performance of prostate biopsy collaborative group risk calculator when based on automated machine learning. Comput. Biol. Med. 2021, 138, 104903.

- ElKarami, B.; Deebajah, M.; Polk, S.; Peabody, J.; Shahrrava, B.; Menon, M.; Alkhateeb, A.; Alanee, S. Machine learning-based prediction of upgrading on magnetic resonance imaging targeted biopsy in patients eligible for active surveillance. In Urologic Oncology: Seminars and Original Investigations; Elsevier: Amsterdam, The Netherlands, 2022.

- Lu, X.; Zhang, S.; Liu, Z.; Liu, S.; Huang, J.; Kong, G.; Li, M.; Liang, Y.; Cui, Y.; Yang, C. Ultrasonographic pathological grading of prostate cancer using automatic region-based Gleason grading network. Comput. Med. Imaging Graph. 2022, 102, 102125.

- Wang, Y.; Wang, D.; Geng, N.; Wang, Y.; Yin, Y.; Jin, Y. Stacking-based ensemble learning of decision trees for interpretable prostate cancer detection. Appl. Soft Comput. 2019, 77, 188–204.

- Hambarde, P.; Talbar, S.; Mahajan, A.; Chavan, S.; Thakur, M.; Sable, N. Prostate lesion segmentation in MR images using radiomics based deeply supervised U-Net. Biocybern. Biomed. Eng. 2020, 40, 1421–1435.

- Isaksson, L.J.; Repetto, M.; Summers, P.E.; Pepa, M.; Zaffaroni, M.; Vincini, M.G.; Corrao, G.; Mazzola, G.C.; Rotondi, M.; Bellerba, F. High-performance prediction models for prostate cancer radiomics. Inform. Med. Unlocked 2023, 37, 101161.

- Li, J.; Weng, Z.; Xu, H.; Zhang, Z.; Miao, H.; Chen, W.; Liu, Z.; Zhang, X.; Wang, M.; Xu, X. Support Vector Machines (SVM) classification of prostate cancer Gleason score in central gland using multiparametric magnetic resonance images: A cross-validated study. Eur. J. Radiol. 2018, 98, 61–67.

- Shao, W.; Banh, L.; Kunder, C.A.; Fan, R.E.; Soerensen, S.J.; Wang, J.B.; Teslovich, N.C.; Madhuripan, N.; Jawahar, A.; Ghanouni, P. ProsRegNet: A deep learning framework for registration of MRI and histopathology images of the prostate. Med. Image Anal. 2021, 68, 101919.

- Bhattacharya, I.; Seetharaman, A.; Kunder, C.; Shao, W.; Chen, L.C.; Soerensen, S.J.; Wang, J.B.; Teslovich, N.C.; Fan, R.E.; Ghanouni, P. Selective identification and localization of indolent and aggressive prostate cancers via CorrSigNIA: An MRI-pathology correlation and deep learning framework. Med. Image Anal. 2022, 75, 102288.

- Saha, A.; Hosseinzadeh, M.; Huisman, H. End-to-end prostate cancer detection in bpMRI via 3D CNNs: Effects of attention mechanisms, clinical priori and decoupled false positive reduction. Med. Image Anal. 2021, 73, 102155.

- Chen, J.; Wan, Z.; Zhang, J.; Li, W.; Chen, Y.; Li, Y.; Duan, Y. Medical image segmentation and reconstruction of prostate tumor based on 3D AlexNet. Comput. Methods Programs Biomed. 2021, 200, 105878.

- Trigui, R.; Mitéran, J.; Walker, P.M.; Sellami, L.; Hamida, A.B. Automatic classification and localization of prostate cancer using multi-parametric MRI/MRS. Biomed. Signal Process. Control 2017, 31, 189–198.

- Yang, X.; Liu, C.; Wang, Z.; Yang, J.; Le Min, H.; Wang, L.; Cheng, K.-T.T. Co-trained convolutional neural networks for automated detection of prostate cancer in multi-parametric MRI. Med. Image Anal. 2017, 42, 212–227.

- Jia, H.; Xia, Y.; Song, Y.; Cai, W.; Fulham, M.; Feng, D.D. Atlas registration and ensemble deep convolutional neural network-based prostate segmentation using magnetic resonance imaging. Neurocomputing 2018, 275, 1358–1369.

- Li, B.; Oka, R.; Xuan, P.; Yoshimura, Y.; Nakaguchi, T. Robust multi-modal prostate cancer classification via feature autoencoder and dual attention. Inform. Med. Unlocked 2022, 30, 100923.

- Patsanis, A.; Sunoqrot, M.R.; Langørgen, S.; Wang, H.; Selnæs, K.M.; Bertilsson, H.; Bathen, T.F.; Elschot, M. A comparison of Generative Adversarial Networks for automated prostate cancer detection on T2-weighted MRI. Inform. Med. Unlocked 2023, 39, 101234.

- Abraham, B.; Nair, M.S. Automated grading of prostate cancer using convolutional neural network and ordinal class classifier. Inform. Med. Unlocked 2019, 17, 100256.

- Akamine, Y.; Ueda, Y.; Ueno, Y.; Sofue, K.; Murakami, T.; Yoneyama, M.; Obara, M.; Van Cauteren, M. Application of hierarchical clustering to multi-parametric MR in prostate: Differentiation of tumor and normal tissue with high accuracy. Magn. Reson. Imaging 2020, 74, 90–95.

- Gentile, F.; La Civita, E.; Della Ventura, B.; Ferro, M.; Cennamo, M.; Bruzzese, D.; Crocetto, F.; Velotta, R.; Terracciano, D. A combinatorial neural network analysis reveals a synergistic behaviour of multiparametric magnetic resonance and prostate health index in the identification of clinically significant prostate cancer. Clin. Genitourin. Cancer 2022, 20, e406–e410.

- Anderson, M.A.; Mercaldo, S.; Chung, R.; Ulrich, E.; Jones, R.W.; Harisinghani, M. Improving Prostate Cancer Detection With MRI: A Multi-Reader, Multi-Case Study Using Computer-Aided Detection (CAD). Acad. Radiol. 2022, 30, 1340–1349.

- Otálora, S.; Atzori, M.; Khan, A.; Jimenez-del-Toro, O.; Andrearczyk, V.; Müller, H. Systematic comparison of deep learning strategies for weakly supervised Gleason grading. In Medical Imaging 2020: Digital Pathology; SPIE: Bellingham, WA, USA, 2020.

- Alam, M.; Tahernezhadi, M.; Vege, H.K.; Rajesh, P. A machine learning classification technique for predicting prostate cancer. In Proceedings of the 2020 IEEE International Conference on Electro Information Technology (EIT), Chicago, IL, USA, 31 July–1 August 2020.

- De Vente, C.; Vos, P.; Hosseinzadeh, M.; Pluim, J.; Veta, M. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Trans. Biomed. Eng. 2020, 68, 374–383.

- Iqbal, S.; Siddiqui, G.F.; Rehman, A.; Hussain, L.; Saba, T.; Tariq, U.; Abbasi, A.A. Prostate cancer detection using deep learning and traditional techniques. IEEE Access 2021, 9, 27085–27100.

- Wiratchawa, K.; Wanna, Y.; Cha-in, S.; Aphinives, C.; Aphinives, P.; Intharah, T. Training Deep CNN’s to Detect Prostate Cancer Lesion with Small Training Data. In Proceedings of the 2022 37th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Phuket, Thailand, 5–8 July 2022.

- Feng, Y.; Yang, F.; Zhou, X.; Guo, Y.; Tang, F.; Ren, F.; Guo, J.; Ji, S. A deep learning approach for targeted contrast-enhanced ultrasound based prostate cancer detection. IEEE/ACM Trans. Comput. Biol. Bioinform. 2018, 16, 1794–1801.

- Morris, D.C.; Chan, D.Y.; Chen, H.; Palmeri, M.L.; Polascik, T.J.; Foo, W.-C.; Huang, J.; Mamou, J.; Nightingale, K.R. Multiparametric Ultrasound for the Targeting of Prostate Cancer using ARFI, SWEI, B-mode, and QUS. In Proceedings of the 2019 IEEE International Ultrasonics Symposium (IUS), Glasgow, UK, 6–9 October 2019.

- Duran-Lopez, L.; Dominguez-Morales, J.P.; Conde-Martin, A.F.; Vicente-Diaz, S.; Linares-Barranco, A. PROMETEO: A CNN-based computer-aided diagnosis system for WSI prostate cancer detection. IEEE Access 2020, 8, 128613–128628.

- Li, W.; Li, J.; Sarma, K.V.; Ho, K.C.; Shen, S.; Knudsen, B.S.; Gertych, A.; Arnold, C.W. Path R-CNN for prostate cancer diagnosis and Gleason grading of histological images. IEEE Trans. Med. Imaging 2018, 38, 945–954.

- Chaddad, A.; Kucharczyk, M.J.; Desrosiers, C.; Okuwobi, I.P.; Katib, Y.; Zhang, M.; Rathore, S.; Sargos, P.; Niazi, T. Deep radiomic analysis to predict Gleason score in prostate cancer. IEEE Access 2020, 8, 167767–167778.

- Wang, Z.; Liu, C.; Cheng, D.; Wang, L.; Yang, X.; Cheng, K.-T. Automated detection of clinically significant prostate cancer in mp-MRI images based on an end-to-end deep neural network. IEEE Trans. Med. Imaging 2018, 37, 1127–1139.

- Cao, R.; Bajgiran, A.M.; Mirak, S.A.; Shakeri, S.; Zhong, X.; Enzmann, D.; Raman, S.; Sung, K. Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet. IEEE Trans. Med. Imaging 2019, 38, 2496–2506.

- Reda, I.; Ayinde, B.O.; Elmogy, M.; Shalaby, A.; El-Melegy, M.; Abou El-Ghar, M.; Abou El-fetouh, A.; Ghazal, M.; El-Baz, A. A new CNN-based system for early diagnosis of prostate cancer. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018.

- Mosleh, M.A.; Hamoud, M.H.; Alsabri, A.A. Detection of Prostate Cancer Using MRI Images Classification with Deep Learning Techniques. In Proceedings of the 2022 2nd International Conference on Emerging Smart Technologies and Applications (eSmarTA), Ibb, Yemen, 25–26 October 2022.

- Starmans, M.P.; Niessen, W.J.; Schoots, I.; Klein, S.; Veenland, J.F. Classification of prostate cancer: High grade versus low grade using a radiomics approach. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019.

- Hassanzadeh, T.; Hamey, L.G.; Ho-Shon, K. Convolutional neural networks for prostate magnetic resonance image segmentation. IEEE Access 2019, 7, 36748–36760.

- Bertelli, E.; Mercatelli, L.; Marzi, C.; Pachetti, E.; Baccini, M.; Barucci, A.; Colantonio, S.; Gherardini, L.; Lattavo, L.; Pascali, M.A. Machine and deep learning prediction of prostate cancer aggressiveness using multiparametric MRI. Front. Oncol. 2022, 11, 802964.

- Abbasi, A.A.; Hussain, L.; Awan, I.A.; Abbasi, I.; Majid, A.; Nadeem, M.S.A.; Chaudhary, Q.-A. Detecting prostate cancer using deep learning convolution neural network with transfer learning approach. Cogn. Neurodynamics 2020, 14, 523–533.

- Hosseinzadeh, M.; Saha, A.; Brand, P.; Slootweg, I.; de Rooij, M.; Huisman, H. Deep learning–assisted prostate cancer detection on bi-parametric MRI: Minimum training data size requirements and effect of prior knowledge. Eur. Radiol. 2021, 32, 2224–2234.

- Mehralivand, S.; Yang, D.; Harmon, S.A.; Xu, D.; Xu, Z.; Roth, H.; Masoudi, S.; Kesani, D.; Lay, N.; Merino, M.J. Deep learning-based artificial intelligence for prostate cancer detection at biparametric MRI. Abdom. Radiol. 2022, 47, 1425–1434.

- Hiremath, A.; Shiradkar, R.; Fu, P.; Mahran, A.; Rastinehad, A.R.; Tewari, A.; Tirumani, S.H.; Purysko, A.; Ponsky, L.; Madabhushi, A. An integrated nomogram combining deep learning, Prostate Imaging–Reporting and Data System (PI-RADS) scoring, and clinical variables for identification of clinically significant prostate cancer on biparametric MRI: A retrospective multicentre study. Lancet Digit. Health 2021, 3, e445–e454.

- Khosravi, P.; Lysandrou, M.; Eljalby, M.; Li, Q.; Kazemi, E.; Zisimopoulos, P.; Sigaras, A.; Brendel, M.; Barnes, J.; Ricketts, C. A deep learning approach to diagnostic classification of prostate cancer using pathology–radiology fusion. J. Magn. Reson. Imaging 2021, 54, 462–471.

- Takeuchi, T.; Hattori-Kato, M.; Okuno, Y.; Iwai, S.; Mikami, K. Prediction of prostate cancer by deep learning with multilayer artificial neural network. Can. Urol. Assoc. J. 2019, 13, E145.

- Soni, M.; Khan, I.R.; Babu, K.S.; Nasrullah, S.; Madduri, A.; Rahin, S.A. Light weighted healthcare CNN model to detect prostate cancer on multiparametric MRI. Comput. Intell. Neurosci. 2022, 2022, 5497120.

- Azizi, S.; Bayat, S.; Yan, P.; Tahmasebi, A.; Kwak, J.T.; Xu, S.; Turkbey, B.; Choyke, P.; Pinto, P.; Wood, B. Deep recurrent neural networks for prostate cancer detection: Analysis of temporal enhanced ultrasound. IEEE Trans. Med. Imaging 2018, 37, 2695–2703.

- Laabidi, A.; Aissaoui, M. Performance analysis of Machine learning classifiers for predicting diabetes and prostate cancer. In Proceedings of the 2020 1st International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Meknes, Morocco, 16–19 April 2020.

- Murakami, Y.; Magome, T.; Matsumoto, K.; Sato, T.; Yoshioka, Y.; Oguchi, M. Fully automated dose prediction using generative adversarial networks in prostate cancer patients. PLoS ONE 2020, 15, e0232697.

- Kohl, S.; Bonekamp, D.; Schlemmer, H.-P.; Yaqubi, K.; Hohenfellner, M.; Hadaschik, B.; Radtke, J.-P.; Maier-Hein, K. Adversarial networks for the detection of aggressive prostate cancer. arXiv 2017, arXiv:1702.08014.

- Yu, H.; Zhang, X. Synthesis of prostate MR images for classification using capsule network-based GAN Model. Sensors 2020, 20, 5736.

- Li, Y.; Wang, J.; Hu, M.; Patel, P.; Mao, H.; Liu, T.; Yang, X. Prostate Gleason score prediction via MRI using capsule network. In Medical Imaging 2023: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2023.

- Ding, M.; Lin, Z.; Lee, C.H.; Tan, C.H.; Huang, W. A multi-scale channel attention network for prostate segmentation. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 1754–1758.

- Xu, X.; Lian, C.; Wang, S.; Zhu, T.; Chen, R.C.; Wang, A.Z.; Royce, T.J.; Yap, P.-T.; Shen, D.; Lian, J. Asymmetric multi-task attention network for prostate bed segmentation in computed tomography images. Med. Image Anal. 2021, 72, 102116.

- Yuan, Y.; Qin, W.; Buyyounouski, M.; Ibragimov, B.; Hancock, S.; Han, B.; Xing, L. Prostate cancer classification with multiparametric MRI transfer learning model. Med. Phys. 2019, 46, 756–765.

- Janocha, K.; Czarnecki, W.M. On loss functions for deep neural networks in classification. arXiv 2017, arXiv:1702.05659.

- Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss functions for neural networks for image processing. arXiv 2015, arXiv:1511.08861.

- Ghosh, A.; Kumar, H.; Sastry, P.S. Robust loss functions under label noise for deep neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Xu, C.; Lu, C.; Liang, X.; Gao, J.; Zheng, W.; Wang, T.; Yan, S. Multi-loss regularized deep neural network. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 2273–2283.

- Kim, T.; Oh, J.; Kim, N.; Cho, S.; Yun, S.-Y. Comparing Kullback–Leibler divergence and mean squared error loss in knowledge distillation. arXiv 2021, arXiv:2105.08919.

- Qi, J.; Du, J.; Siniscalchi, S.M.; Ma, X.; Lee, C.-H. On mean absolute error for deep neural network based vector-to-vector regression. IEEE Signal Process. Lett. 2020, 27, 1485–1489.

- Ruby, U.; Yendapalli, V. Binary cross entropy with deep learning technique for image classification. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 5393–5397.

- Ho, Y.; Wookey, S. The real-world-weight cross-entropy loss function: Modeling the costs of mislabeling. IEEE Access 2019, 8, 4806–4813.

- Gordon-Rodriguez, E.; Loaiza-Ganem, G.; Pleiss, G.; Cunningham, J.P. Uses and abuses of the cross-entropy loss: Case studies in modern deep learning. arXiv 2020, arXiv:2011.05231.

- Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised Dice overlap as a deep learning loss function for highly unbalanced segmentations. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the Third International Workshop, DLMIA 2017, and 7th International Workshop, ML-CDS 2017, Held in Conjunction with MICCAI 2017, Québec City, QC, Canada, 14 September 2017; Proceedings 3; Springer: Berlin/Heidelberg, Germany, 2017.

- Zhang, Y.; Liu, S.; Li, C.; Wang, J. Rethinking the Dice loss for deep learning lesion segmentation in medical images. J. Shanghai Jiaotong Univ. 2021, 26, 93–102.

- Mukhoti, J.; Kulharia, V.; Sanyal, A.; Golodetz, S.; Torr, P.; Dokania, P. Calibrating deep neural networks using focal loss. Adv. Neural Inf. Process. Syst. 2020, 33, 15288–15299.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 42, 318–327.

- Asperti, A.; Trentin, M. Balancing reconstruction error and Kullback–Leibler divergence in variational autoencoders. IEEE Access 2020, 8, 199440–199448.

- Phan, H.; Mikkelsen, K.; Chén, O.Y.; Koch, P.; Mertins, A.; Kidmose, P.; De Vos, M. Personalized automatic sleep staging with single-night data: A pilot study with Kullback–Leibler divergence regularization. Physiol. Meas. 2020, 41, 064004.

- Tomczak, K.; Czerwińska, P.; Wiznerowicz, M. The Cancer Genome Atlas (TCGA): An immeasurable source of knowledge. Contemp. Oncol./Współczesna Onkol. 2015, 2015, 68–77.

- Wang, Z.; Jensen, M.A.; Zenklusen, J.C. A practical guide to the cancer genome atlas (TCGA). In Statistical Genomics; Humana Press: New York, NY, USA, 2016; Volume 1418, pp. 111–141. [Google Scholar]

- Hutter, C.; Zenklusen, J.C. The cancer genome atlas: Creating lasting value beyond its data. Cell 2018, 173, 283–285. [Google Scholar] [CrossRef] [PubMed]

- Way, G.P.; Sanchez-Vega, F.; La, K.; Armenia, J.; Chatila, W.K.; Luna, A.; Sander, C.; Cherniack, A.D.; Mina, M.; Ciriello, G. Machine learning detects pan-cancer ras pathway activation in the cancer genome atlas. Cell Rep. 2018, 23, 172–180.e3. [Google Scholar] [CrossRef] [PubMed]

- Ganini, C.; Amelio, I.; Bertolo, R.; Bove, P.; Buonomo, O.C.; Candi, E.; Cipriani, C.; Di Daniele, N.; Juhl, H.; Mauriello, A. Global mapping of cancers: The Cancer Genome Atlas and beyond. Mol. Oncol. 2021, 15, 2823–2840. [Google Scholar] [CrossRef]

- Rosenkrantz, A.B.; Oto, A.; Turkbey, B.; Westphalen, A.C. Prostate Imaging Reporting and Data System (PI-RADS), version 2: A critical look. Am. J. Roentgenol. 2016, 206, 1179–1183.

- Westphalen, A.C.; Rosenkrantz, A.B. Prostate imaging reporting and data system (PI-RADS): Reflections on early experience with a standardized interpretation scheme for multiparametric prostate MRI. Am. J. Roentgenol. 2014, 202, 121–123.

- Deng, K.; Li, H.; Guan, Y. Treatment stratification of patients with metastatic castration-resistant prostate cancer by machine learning. iScience 2020, 23, 100804.

- Abdallah, K.; Hugh-Jones, C.; Norman, T.; Friend, S.; Stolovitzky, G. The Prostate Cancer DREAM Challenge: A Community-Wide Effort to Use Open Clinical Trial Data for the Quantitative Prediction of Outcomes in Metastatic Prostate Cancer; Oxford University Press: Oxford, UK, 2015; Volume 20, pp. 459–460.

- Clark, K.; Vendt, B.; Smith, K.; Freymann, J.; Kirby, J.; Koppel, P.; Moore, S.; Phillips, S.; Maffitt, D.; Pringle, M. The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository. J. Digit. Imaging 2013, 26, 1045–1057.

- Prior, F.; Smith, K.; Sharma, A.; Kirby, J.; Tarbox, L.; Clark, K.; Bennett, W.; Nolan, T.; Freymann, J. The public cancer radiology imaging collections of The Cancer Imaging Archive. Sci. Data 2017, 4, 170124.

- Liu, Y.; Yang, G.; Mirak, S.A.; Hosseiny, M.; Azadikhah, A.; Zhong, X.; Reiter, R.E.; Lee, Y.; Raman, S.S.; Sung, K. Automatic prostate zonal segmentation using fully convolutional network with feature pyramid attention. IEEE Access 2019, 7, 163626–163632.

- Mehrtash, A.; Sedghi, A.; Ghafoorian, M.; Taghipour, M.; Tempany, C.M.; Wells, W.M., III; Kapur, T.; Mousavi, P.; Abolmaesumi, P.; Fedorov, A. Classification of clinical significance of MRI prostate findings using 3D convolutional neural networks. In Medical Imaging 2017: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2017.

- Hamm, C.A.; Baumgärtner, G.L.; Biessmann, F.; Beetz, N.L.; Hartenstein, A.; Savic, L.J.; Froböse, K.; Dräger, F.; Schallenberg, S.; Rudolph, M. Interactive Explainable Deep Learning Model Informs Prostate Cancer Diagnosis at MRI. Radiology 2023, 307, e222276.

- Kraaijveld, R.C.; Philippens, M.E.; Eppinga, W.S.; Jürgenliemk-Schulz, I.M.; Gilhuijs, K.G.; Kroon, P.S.; van der Velden, B.H. Multi-modal volumetric concept activation to explain detection and classification of metastatic prostate cancer on PSMA-PET/CT. In International Workshop on Interpretability of Machine Intelligence in Medical Image Computing; Springer: Berlin/Heidelberg, Germany, 2022.

- Dai, X.; Park, J.H.; Yoo, S.; D’Imperio, N.; McMahon, B.H.; Rentsch, C.T.; Tate, J.P.; Justice, A.C. Survival analysis of localized prostate cancer with deep learning. Sci. Rep. 2022, 12, 17821.

| Ref. | Year | Articles Included | Work Conducted |
|---|---|---|---|
| [16] | 2019 | 43 | The authors investigated current and future applications of ML and DL in urolithiasis, renal cell carcinoma, and bladder and prostate cancers, using only the PubMed database. The study concluded that machine learning techniques outperform classical statistical methods. |
| [17] | 2020 | 28 | The study investigated deep learning methods applied to CT and MRI images for PCa diagnosis and analysis. It concluded that most deep learning models are limited by the size of the datasets used for training. |
| [18] | 2021 | 100 | The study investigated 22 machine learning-based and 88 deep learning-based segmentation methods for MRI images only. The authors also presented popular loss functions for training these models and discussed public PCa-related datasets. |
| [19] | 2022 | 8 | The authors reviewed eight papers on deep learning diagnosis of clinically significant PCa using biparametric MRI (bpMRI). They found that, although deep learning achieves high accuracy, its sensitivity is lower than that of human radiologists. Dataset size was also identified as a major limitation of these deep learning experiments. |
| [20] | 2020 | 27 | The Embase and Ovid MEDLINE databases were searched for applications of ML and DL to the differential diagnosis of PCa using multiparametric MRI. |
| [21] | 2022 | 29 | The authors investigated the current value of ML and DL applied to bpMRI for the grading, detection, and characterization of PCa. |
| [22] | 2022 | 24 | The authors reviewed the role of deep learning in PCa management and recommended that future work focus on model improvement, so that these models become verifiable and clinically acceptable. |

| SN | Database | URL | Count | % Count |
|---|---|---|---|---|
| 1 | IEEE Xplore | https://ieeexplore.ieee.org | 16 | 20.78 |
| 2 | Springer | https://link.springer.com | 23 | 29.87 |
| 3 | ScienceDirect | https://sciencedirect.com | 29 | 37.66 |
| 4 | PubMed | https://pubmed.ncbi.nlm.nih.gov/ | 9 | 11.69 |
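
The % Count column gives each database's share of the 77 included articles (16 + 23 + 29 + 9). The following minimal Python sketch reproduces that arithmetic from the counts in the table. It assumes the percentages are rounded to two decimal places, which matches every listed value.

```python
# Per-database article counts as listed in the table above
counts = {
    "IEEE Xplore": 16,
    "Springer": 23,
    "ScienceDirect": 29,
    "PubMed": 9,
}


def percent_share(counts: dict[str, int]) -> dict[str, float]:
    """Return each database's share of the total count, in percent, rounded to 2 dp."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


shares = percent_share(counts)
for name, pct in shares.items():
    print(f"{name}: {pct}%")
```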

| Ref. | Problem Addressed | Imaging Modality | ML/DL Model | Metrics Reported | Hyperparameter Reported | Subjects | Similar Works |
|---|---|---|---|---|---|---|---|
| [15] | Comparison of a deep learning classifier and a non-deep-learning classifier for PCa classification | MRI | DCNN, SIFT-BoW, linear SVM | AUC = 0.84, sensitivity = 69.6%, specificity = 83.9%, PPV = 78.6%, NPV = 76.5% | Gamma = 0.1, momentum = 0.9, weight decay = 0.1, max training iterations = 1000, 10-fold CV | 172 | [8,66,67,68] |
| [69] | Classification of PCa tissue with a weakly semi-supervised technique | WSI | CNN, DenseNet121 | - | Batch size = 128, 32; learning rate = 10⁻³; decay rate = 10⁻⁶; Adam optimizer | 1368 | [70,71,72,73,74] |
| [75] | Prediction of clinically significant PCa with a deep learning approach in a multi-center study | Parametric MRI | PI-RADS, CNN (ResNet3D, DenseNet3D, ShuffleNet3D and MobileNet3D) | Sensitivity = 98.6%, specificity = 35.0%, p-value > 0.99 | Cross-entropy loss, Adam optimizer, learning rate = 0.01, epochs = 30, batch size = 32 | 1861 | [76,77,78,79,80] |
| [81] | Localization of PCa lesions using multiparametric ML on transrectal US | US | RF | ROC-AUC = 0.75 (PCa) and 0.90 (Gleason > 3 + 4) | Depth = 50 nodes | 50 | [82] |
| [83] | Transfer learning from breast histopathological images for PCa detection | Histopathological images | Transfer learning, deep CNN | AUC = 0.936 | Epochs = 50 | - | [84] |
| [85] | Image-based PCa staging support system | CT | CNN | AP = 80.4% (CI: 71.1–87.8), Acc = 77% (CI: 70.0–83.4) | 4-fold CV = 121 | 173 | [86] |
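
Several studies above, such as [15], report sensitivity, specificity, PPV, and NPV. The minimal Python sketch below shows how these four values (plus accuracy) derive from the four confusion-matrix counts. The class and function names are hypothetical, and the counts in the usage line are invented for illustration. They are not taken from any reviewed study.

```python
import math
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # cancer cases predicted positive
    fp: int  # non-cancer cases predicted positive
    tn: int  # non-cancer cases predicted negative
    fn: int  # cancer cases predicted negative


def _ratio(num: int, den: int) -> float:
    # Return NaN instead of raising when a metric is undefined (empty denominator)
    return num / den if den else math.nan


def diagnostic_metrics(c: ConfusionCounts) -> dict[str, float]:
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn),  # true positive rate
        "specificity": _ratio(c.tn, c.tn + c.fp),  # true negative rate
        "ppv": _ratio(c.tp, c.tp + c.fp),          # positive predictive value
        "npv": _ratio(c.tn, c.tn + c.fn),          # negative predictive value
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
    }


# Hypothetical counts for illustration only (not from any reviewed study)
m = diagnostic_metrics(ConfusionCounts(tp=40, fp=10, tn=90, fn=20))
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity and specificity depend only on the model and the case mix within each class. PPV and NPV, by contrast, also depend on disease prevalence in the tested cohort, so these values are harder to compare across studies with different populations.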

| Ref. | Problem Addressed | Imaging Modality | ML/DL Model | Metrics Reported | Hyperparameter Reported | Subjects | Similar Works |
|---|---|---|---|---|---|---|---|
| [87] | Effect of labeling strategies on PCa detection performance | MRI | SPCNet, U-Net, branched U-Net and DeepLabv3+ | ROC-AUC = 0.91–0.94 | Cross-entropy loss, Adam optimizer, batch size = 22, epochs = 30 | 390 | [88,89,90,91] |
| [92] | Segmentation of prostate glands with an ensemble of deep learning and classical learning methods | Histopathological images | RINGS, CNN | Dice = 90.16% | Batch size = 128, learning rate = 10⁻³, epochs = 30 | 18,851 | [93] |
| [94] | Diagnosis of PCa by integrating multiple deep learning approaches | US | S-Mask R-CNN, Inception-v3 | mAP = 88%, Dice = 87%, IoU = 79%, AP = 92% | Vector = 0.001, weight decay rate = 0.0001, iterations = 3000 | 704 | [88,95] |
| [96] | Upgrading patients from MRI-targeted biopsy to active surveillance with machine learning models | MRI, US | AdaBoost, RF | Acc = 94.3% and 88.1%, precision = 94.6% and 88.0%, recall = 94.3% and 88.1% (AdaBoost and RF, respectively) | - | 592 | - |
| [97] | Pathological grading of PCa on a single US image | US | Region labeling object detection (RLOD), Gleason grading network (GNet) | Precision = 0.830, mean Dice = 0.815 | - | - | [98] |
| [99] | Radiomics-based deeply supervised segmentation of the prostate gland and lesions | MRI | U-Net | Mean Dice similarity coefficient (DSC) = 0.8958 and 0.9176 | - | 50 | [100,101,102] |
| [103] | Ensemble feature extraction methods for detecting aggressive and indolent PCa | MRI | CorrSigNIA, CNN | Acc = 80%, ROC-AUC = 0.81 ± 0.31 | Epochs = 100, batch size = 8, Adam optimizer, learning rate = 10⁻³, weight decay = 0.1 | 98 | [104,105] |
| [106] | PCa localization and classification with ML | MRI | SVM, RF | Global error rate = 1%, sensitivity = 99.1%, specificity = 98.4% | - | 34 | [107] |
| [108] | Segmentation of PCa MR images using deep learning separation techniques | MRI | DNN | Dice = 0.910 ± 0.036, ABD = 1.583 ± 0, Hausdorff distance = 4414.579 ± 1.791 | - | 304 | [109] |
| [110] | Investigation of GANs for PCa detection with MRI | MRI | GANs | AUC = 0.73; average AUC (± SD) = 0.71 ± 0.01 and 0.71 ± 0.04 | GAN parameters were maintained | 1160 | - |
| [111] | Gleason grading for PCa detection with deep learning techniques | MRI-guided biopsy | VGG-16 CNN, J48 | Quadratic weighted kappa = 0.4727, positive predictive value = 0.9079 | - | - | [112] |
| [113] | Ensemble of mpMRI and PHI for early diagnosis of PCa | mpMRI | ANN | Sensitivity = 80%, specificity = 68% | - | 177 | [114] |
| [115] | Comparison of deep learning models for classification of PCa by Gleason grade (GG) | WSI | DLN, CNN | Kappa score = 0.44 | Layers = 121, learning rate = 0.0001, Adam optimizer | 341 | - |
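
The segmentation studies above, such as [92], [94], [99], and [108], report Dice and IoU. The minimal Python sketch below computes both overlap metrics. It assumes binary masks flattened into lists of 0/1 values, which is a simplification: real pipelines typically operate on 2D or 3D arrays. The helper name is hypothetical.

```python
def dice_and_iou(pred: list[int], truth: list[int]) -> tuple[float, float]:
    """Dice = 2|A∩B| / (|A| + |B|); IoU = |A∩B| / |A∪B| for binary masks A, B."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of pixels")
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    size_pred = sum(1 for p in pred if p)
    size_truth = sum(1 for t in truth if t)
    if size_pred + size_truth == 0:
        # Both masks empty: treated as perfect agreement (one common convention)
        return 1.0, 1.0
    dice = 2 * inter / (size_pred + size_truth)
    iou = inter / (size_pred + size_truth - inter)  # |A∪B| = |A| + |B| - |A∩B|
    return dice, iou


# Hypothetical 6-pixel masks (1 = prostate/lesion, 0 = background)
pred = [0, 1, 1, 1, 0, 0]
truth = [0, 1, 1, 0, 1, 0]
dice, iou = dice_and_iou(pred, truth)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

For a single mask pair, IoU = Dice / (2 − Dice), so both metrics rank predictions identically. Averages over many images need not satisfy this identity exactly.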

| Ref. | Problem Addressed | Imaging Modality | ML/DL Model | Metrics Reported | Hyperparameter Reported | Subjects | Similar Works |
|---|---|---|---|---|---|---|---|
| [14] | Classification of MRI images to facilitate PCa diagnosis | MRI | CNN, DL | Training accuracy = 0.80, testing accuracy = 0.78 | ReLU | 200 | [116,117,118,119] |
| [120] | Detection of PCa in sequential CEUS images | US | 3D CNN | Specificity = 91%, average accuracy = 0.90 | Layers = 6, kernels = 2–12 | 21,844 | [121] |
| [122] | CNN-based PCa detection on WSI | WSI | CNN | Accuracy = 0.99, F1 score = 0.99, AUC = 0.99 | Cross-validation = 3 | 97 | [123] |
| [124] | Prediction of the Gleason score (GS) of PCa lesions from deep entropy features (DEFs) extracted by CNNs from MRI images | mpMRI | DEF, CNN, RF, NASNet-mobile | AUC = 0.80, 0.86, 0.97, 0.98 and 0.86 | Number of trees = 500, maximum tree depth = 15, minimum number of samples per node = 4 | 99 | [125,126] |
| [127] | Early diagnosis of PCa using a CNN-based CAD system | Diffusion-weighted MRI | CNN | Accuracy = 0.96, sensitivity = 100%, specificity = 91.67% | ReLU, layers = 6 | 23 | - |
| [128] | Detection of PCa with CNNs | MRI | CNN, Inception-v3, Inception-v4, Inception-ResNet-v2, Xception, PolyNet | Accuracy = 0.99 | - | 1524 | [129,130] |
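
ROC-AUC is the most frequently reported metric in these tables, for example in [122] and [124]. One standard way to read it is as the probability that a randomly chosen cancer case receives a higher model score than a randomly chosen non-cancer case, with ties counting half. The minimal Python sketch below computes it this way. The scores and labels are hypothetical, and the pairwise loop favors clarity over speed.

```python
def roc_auc(scores: list[float], labels: list[int]) -> float:
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct ranking. This O(P * N) pairwise loop is for
    illustration; it equals the normalized Mann-Whitney U statistic.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    correct = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                correct += 1.0
            elif p == q:
                correct += 0.5
    return correct / (len(pos) * len(neg))


# Hypothetical model scores and ground-truth labels (1 = PCa, 0 = benign)
scores = [0.9, 0.8, 0.4, 0.35, 0.1]
labels = [1, 0, 1, 0, 0]
auc = roc_auc(scores, labels)
print(f"AUC = {auc:.3f}")
```

Here 5 of the 6 positive/negative pairs are ordered correctly. The only miss is the benign case scored 0.8 ranking above the cancer case scored 0.4, so the AUC is 5/6.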

| Ref. | Problem Addressed | Imaging Modality | ML/DL Model | Metrics Reported | Hyperparameter Reported | Subjects | Similar Works |
|---|---|---|---|---|---|---|---|
| [131] | Prediction of PCa aggressiveness using ML/DL frameworks | mpMRI | CNN | AUROC = 0.75, specificity = 78%, sensitivity = 60% | 5-fold CV, 87/13 train/test split | 112 patients | [132,133] |
| [134] | U-Net-based PCa detection system using MRI | bpMRI | CNN (U-Net) | Sensitivity = 72.8%, PPV = 35.5% | 70/30 split, Dice coefficient used | 525 patients | [117,135] |
| [136] | Bimodal deep learning fusion of pathology and radiology data for PCa diagnostic classification | MRI + histological data | CNN (GoogLeNet) | AUC = 0.89 | - | 1484 images | - |
| [137] | An ANN was used to predict PCa without biopsy and performed marginally better than LR | mpMRI | Multi-layer ANN | - | 5-fold CV, cross-entropy loss, learning rate = 0.0001, L2 regularization penalty = 0.0005 | 334 patients | - |
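
Several studies above, for example [131] and [137], report 5-fold cross-validation. The minimal Python sketch below shows k-fold splitting. It assumes each index identifies a patient rather than an image. Splitting at the patient level is a common precaution against leakage, because it keeps all images from one patient on the same side of every split. The helper name and seed handling are illustrative assumptions, not any study's actual protocol.

```python
import random


def k_fold_indices(n: int, k: int, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Split indices 0..n-1 into k folds and return (train, test) lists per fold.

    Hypothetical helper: each index stands for one patient, so all images of a
    patient stay together in either the training or the test portion.
    """
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    order = list(range(n))
    random.Random(seed).shuffle(order)  # reproducible shuffle
    # The first n % k folds receive one extra index, so sizes differ by at most one.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(order[start:start + size])
        start += size
    splits = []
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((sorted(train), sorted(test)))
    return splits


# Example: 5-fold CV over 112 patients (the cohort size listed for [131])
splits = k_fold_indices(112, 5, seed=42)
for fold, (train, test) in enumerate(splits, start=1):
    print(f"fold {fold}: {len(train)} train / {len(test)} test")
```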

| Ref. | Title | Journal | Publisher | Year | Citation | Impact Index |
|---|---|---|---|---|---|---|
| [71] | Development and validation of a deep learning algorithm for improving Gleason scoring of prostate cancer. | NPJ Digital Medicine | Nature | 2019 | 320 | 80 |
| [94] | Deep learning framework based on integration of S-Mask R-CNN and Inception-v3 for ultrasound-image-aided diagnosis of prostate cancer. | Future Generation Computer Systems | Elsevier | 2021 | 68 | 34 |
| [67] | Prostate cancer detection using deep Convolutional Neural Networks. | Scientific Reports | Springer | 2019 | 134 | 33.5 |
| [126] | Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet. | IEEE Transactions on Medical Imaging | IEEE | 2019 | 131 | 32.75 |
| [88] | Prostate cancer classification from ultrasound and MRI images using deep learning-based explainable artificial intelligence. | Future Generation Computer Systems | Elsevier | 2022 | 31 | 31 |
| [15] | Searching for prostate cancer via fully automated magnetic resonance imaging classification: deep learning versus non-deep learning. | Scientific Reports | Springer | 2017 | 175 | 29.17 |
| [66] | Supervised machine learning enables non-invasive lesion characterization in primary prostate cancer with [⁶⁸Ga]Ga-PSMA-11 PET/MRI. | European Journal of Nuclear Medicine and Molecular Imaging | Springer | 2021 | 58 | 29 |
| [104] | End-to-end prostate cancer detection in bpMRI via 3D CNNs: effects of attention mechanisms, clinical priori and decoupled false positive reduction. | Medical Image Analysis | Elsevier | 2021 | 58 | 29 |
| [98] | Stacking-based ensemble learning of decision trees for interpretable prostate cancer detection. | Applied Soft Computing | Elsevier | 2019 | 114 | 28.5 |
| [72] | High-accuracy prostate cancer pathology using deep learning. | Nature Machine Intelligence | Nature | 2020 | 81 | 27 |
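
The table does not define Impact Index. However, every listed value equals the citation count divided by the number of years between publication and 2023. For example, 320 / (2023 − 2019) = 80 for [71]. The minimal Python sketch below reproduces the column under that assumption. The reference year 2023 is inferred from the values and is not stated in the table.

```python
# Reference year inferred from the table's values (assumption, not stated in the source)
REFERENCE_YEAR = 2023

# (reference, publication year, citations, Impact Index as listed, rounded to 2 dp)
papers = [
    ("[71]", 2019, 320, 80.0),
    ("[94]", 2021, 68, 34.0),
    ("[67]", 2019, 134, 33.5),
    ("[126]", 2019, 131, 32.75),
    ("[88]", 2022, 31, 31.0),
    ("[15]", 2017, 175, 29.17),
    ("[66]", 2021, 58, 29.0),
    ("[104]", 2021, 58, 29.0),
    ("[98]", 2019, 114, 28.5),
    ("[72]", 2020, 81, 27.0),
]


def impact_index(citations: int, year: int, ref_year: int = REFERENCE_YEAR) -> float:
    """Average citations per year elapsed between publication and ref_year."""
    years_elapsed = ref_year - year
    if years_elapsed <= 0:
        raise ValueError("publication year must precede the reference year")
    return citations / years_elapsed


for ref, year, cites, listed in papers:
    computed = impact_index(cites, year)
    print(f"{ref}: computed {computed:.2f}, listed {listed}")
```

A per-year normalization like this one is less biased toward older papers than raw citation counts. It still favors papers whose citations accumulate early.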

| Model | Considerations |

|---|---|