Artificial Intelligence-Based Detection of Pneumonia in Chest Radiographs

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Cohort

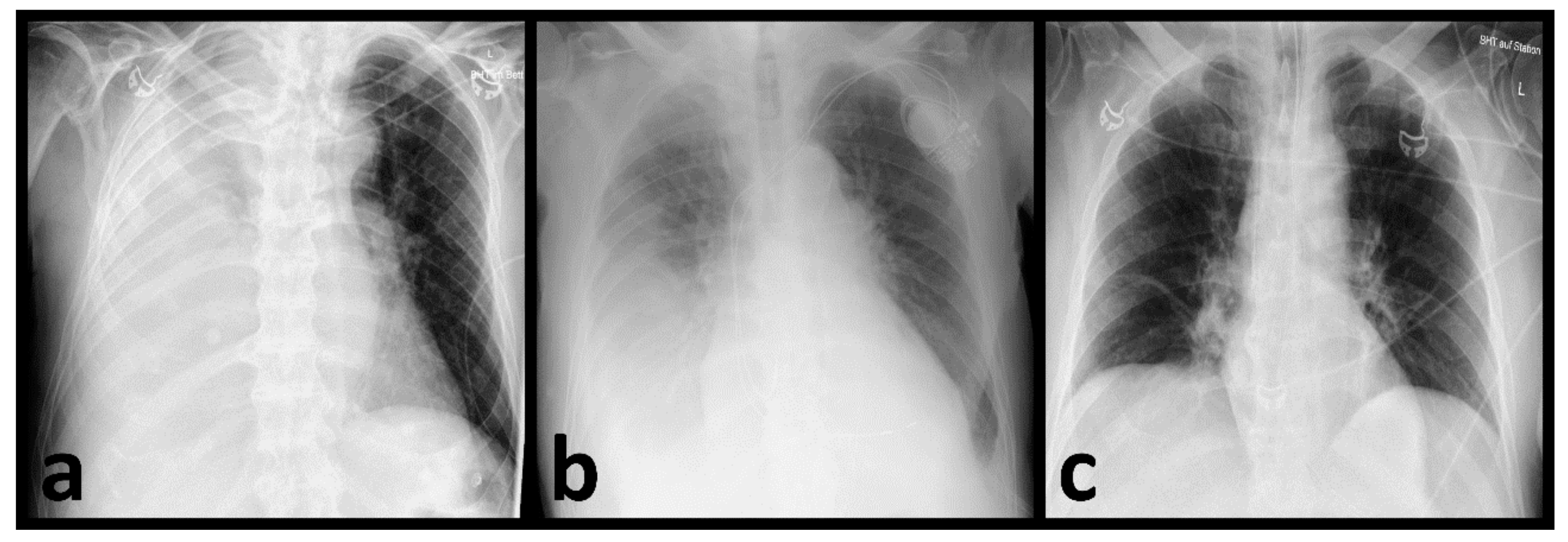

2.2. Imaging Evaluation

2.3. Laboratory Testing

2.4. Statistical Analysis

3. Results

3.1. Patient Cohort

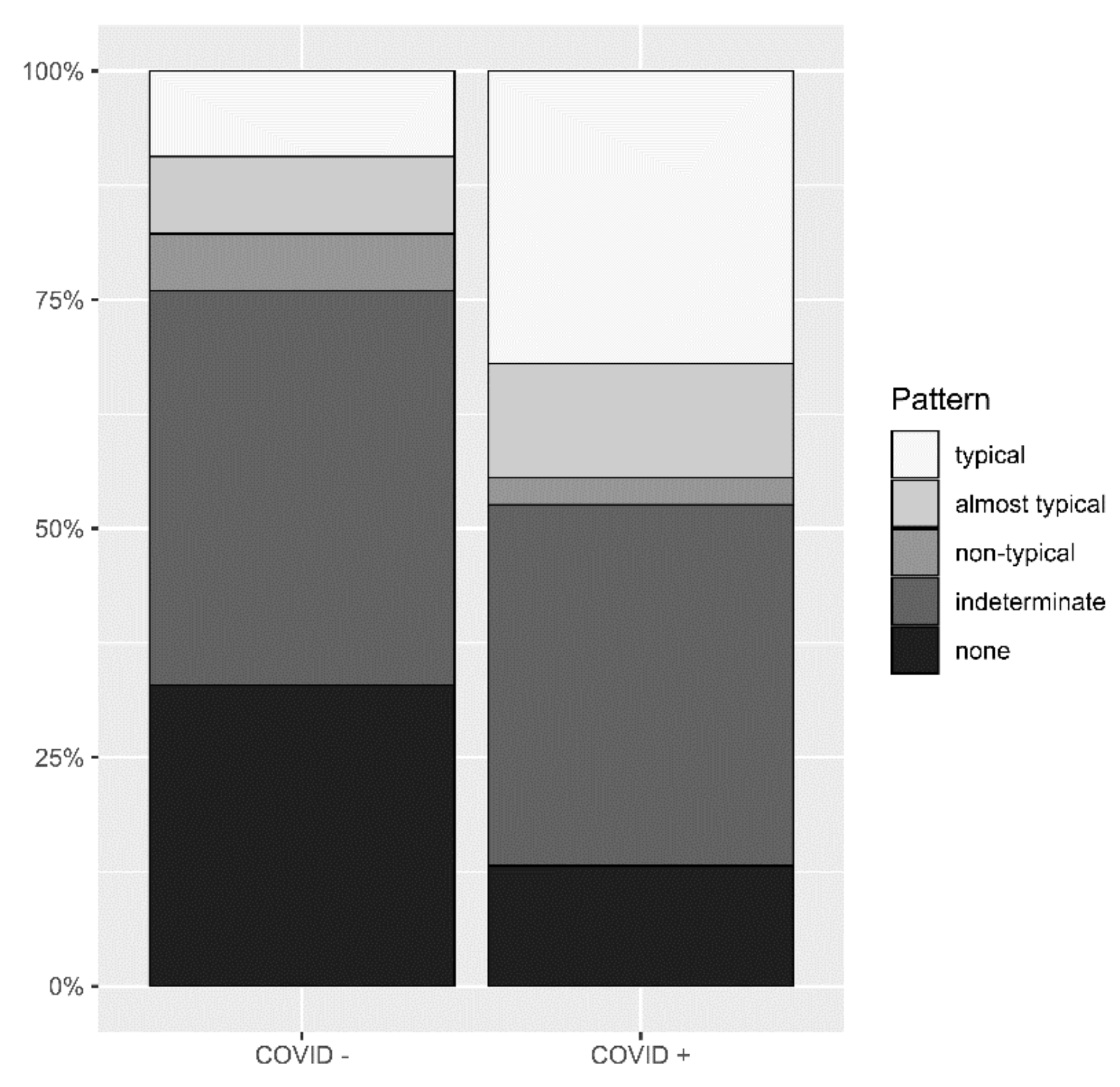

3.2. Identification of Pneumonia by Radiologists

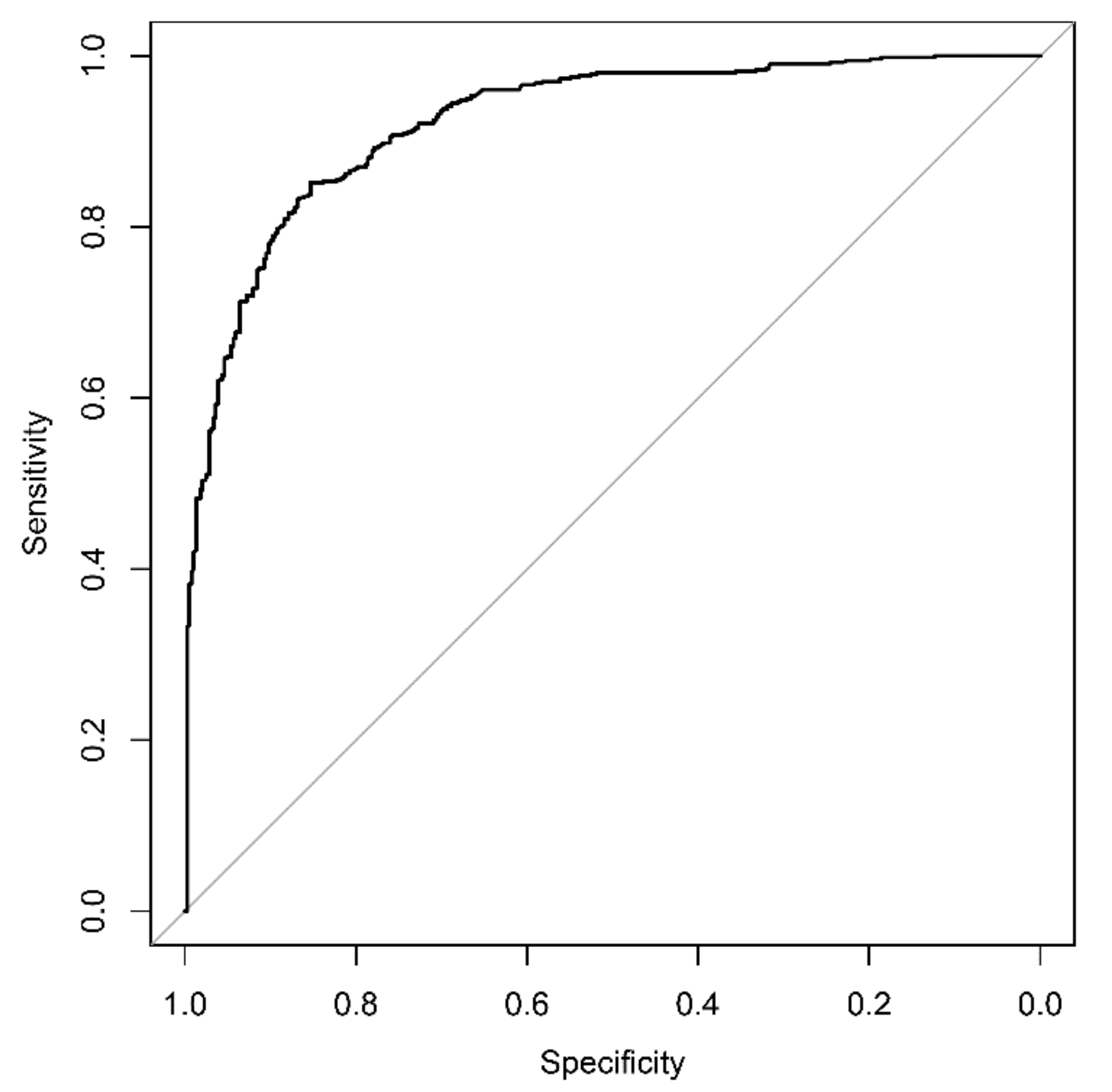

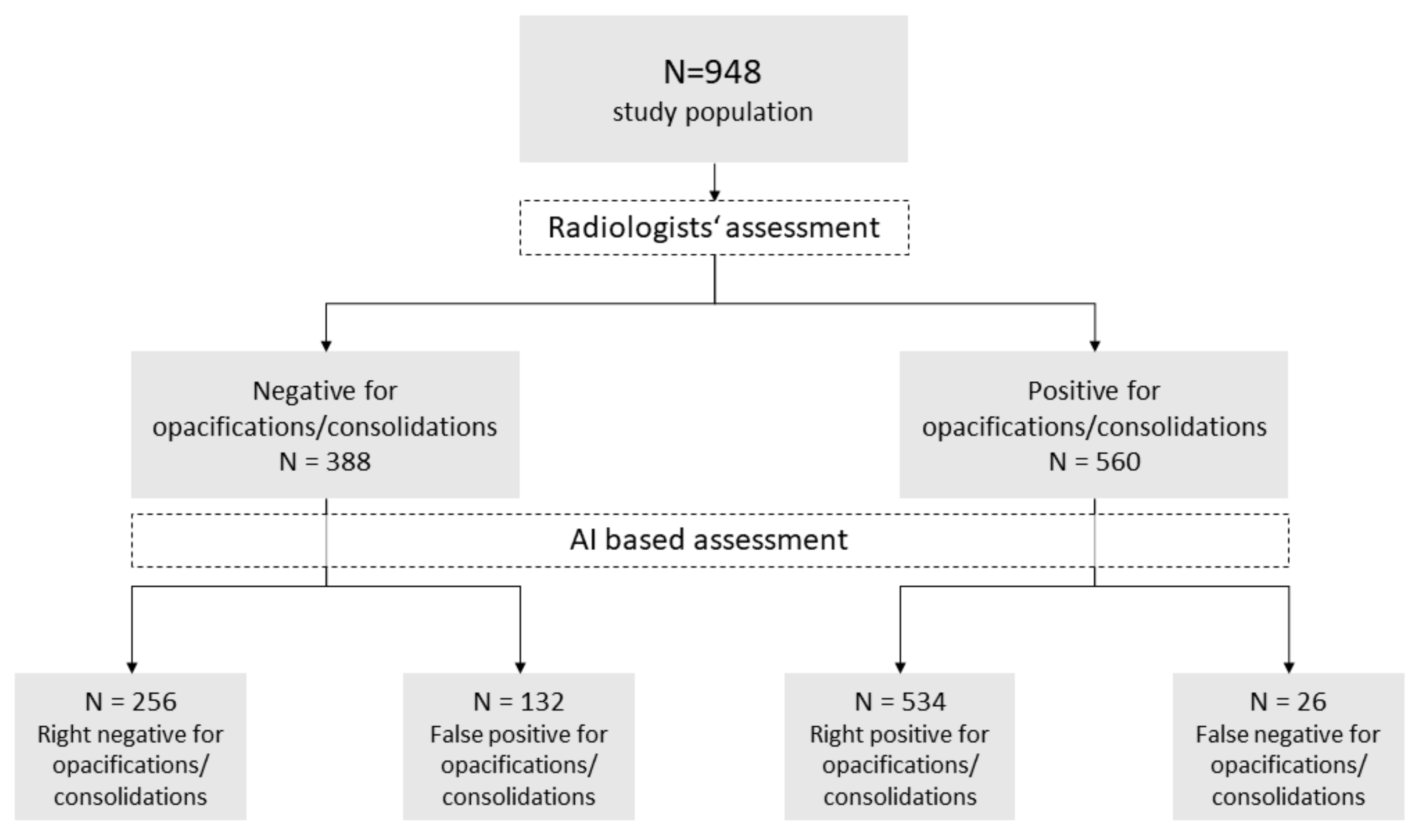

3.3. AI-Based Diagnostic Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Becker, J.; Decker, J.A.; Römmele, C.; Kahn, M.; Messmann, H.; Wehler, M.; Schwarz, F.; Kroencke, T.; Scheurig-Muenkler, C. Artificial Intelligence-Based Detection of Pneumonia in Chest Radiographs. Diagnostics 2022, 12, 1465. https://doi.org/10.3390/diagnostics12061465
