Mapping Maize Planting Densities Using Unmanned Aerial Vehicles, Multispectral Remote Sensing, and Deep Learning Technology

Abstract

1. Introduction

- (1) How can maize planting densities be estimated by combining ultrahigh-definition RGB digital cameras, UAVs, and object detection models?

- (2) How can multispectral remote sensing sensors, UAVs, and machine learning techniques be integrated to estimate maize planting densities?

- (3) What are the advantages, disadvantages, and applicable scenarios of (a) UHDI-OD and (b) Multi-ML for estimating maize planting densities?

2. Datasets

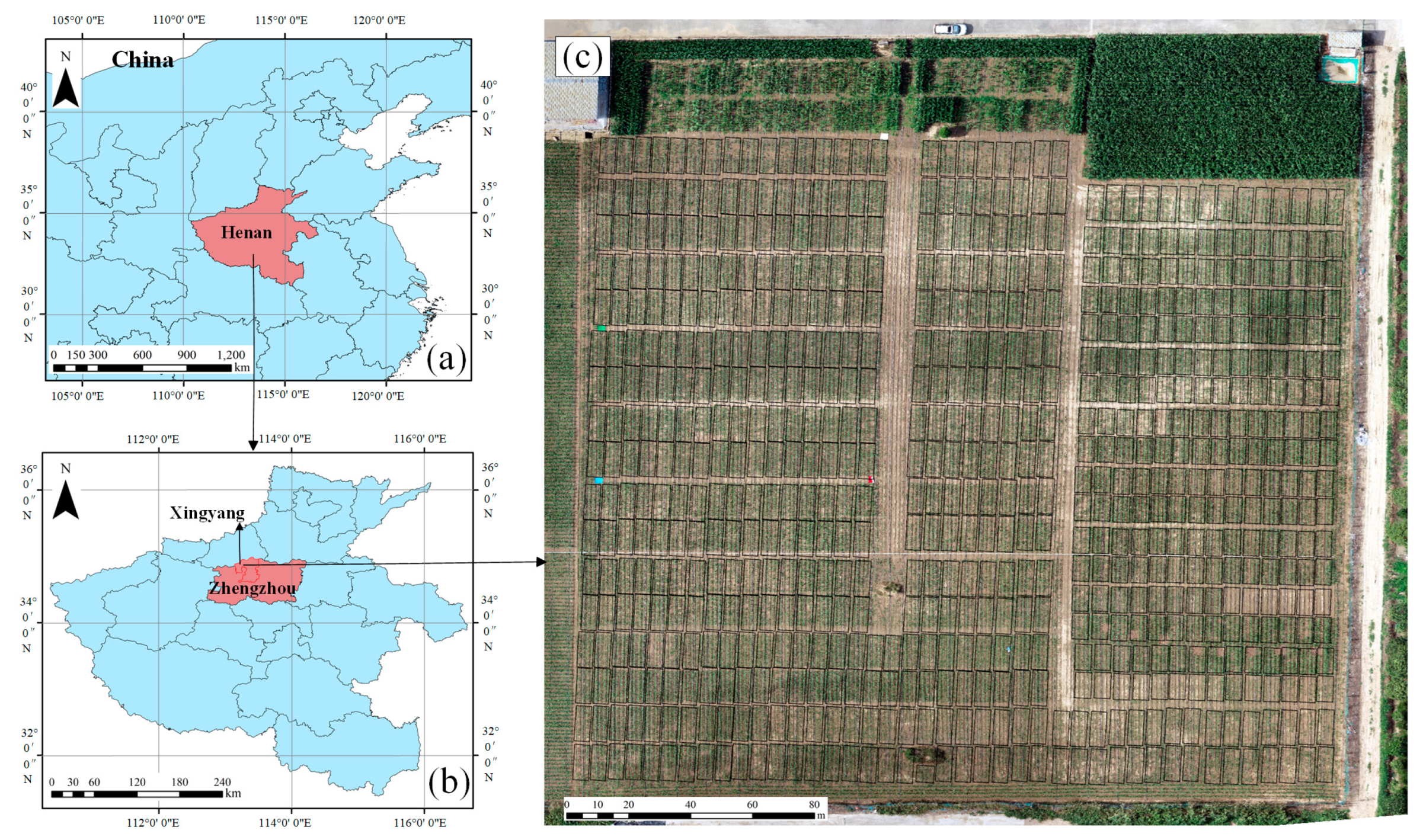

2.1. Study Area

2.2. UAV Data Collection

2.3. Maize Planting Density Measurements

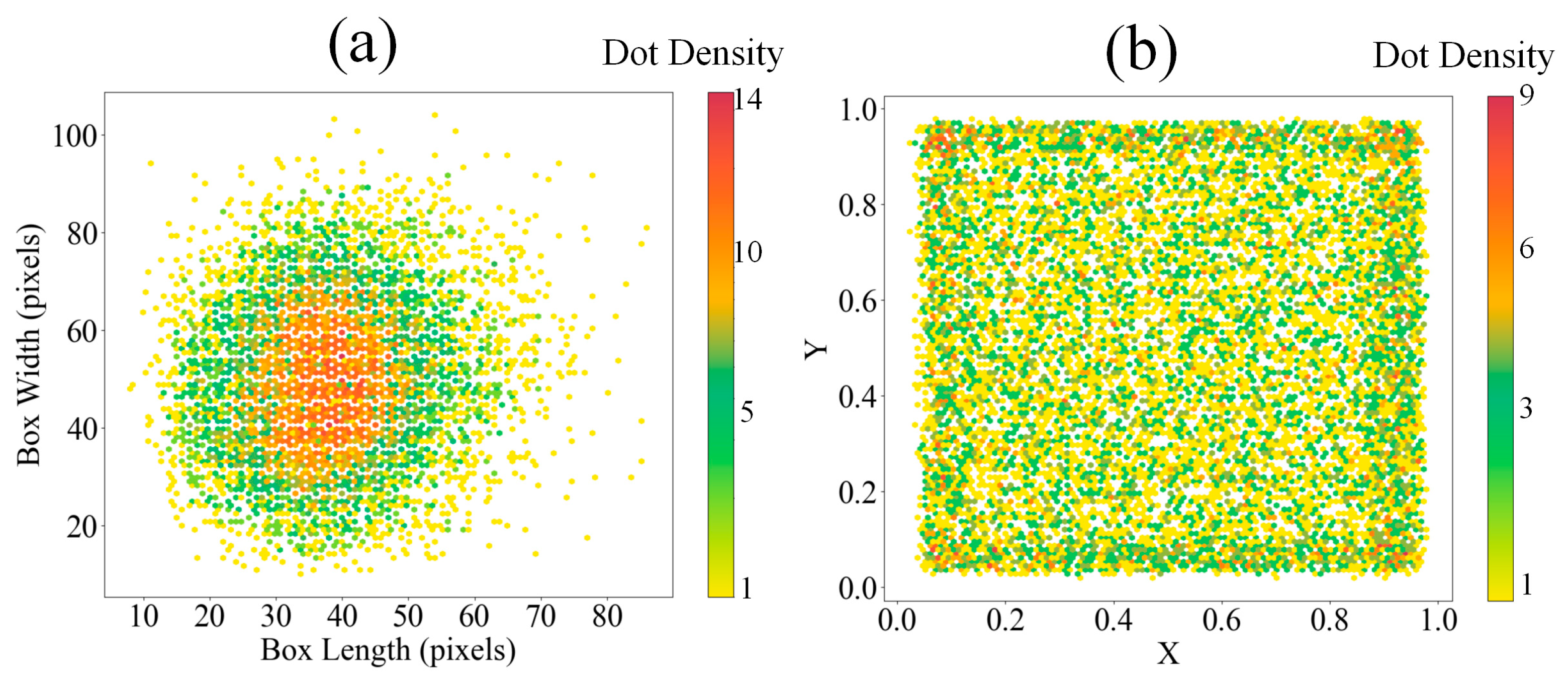

2.4. Maize Position Distribution

3. Methods

3.1. Methodology Framework

- (1) Data collection: This study used two UAVs, equipped with an RGB digital camera and a multispectral sensor, to capture maize images in the field. The images were stitched together to generate a digital orthophoto mosaic of the entire area. Field measurements of the number of maize plants and the size of the planting area were used to calculate the measured maize planting densities.

- (2) Digital image processing and density estimation: This study cropped and annotated the digital orthophoto mosaic and fed the annotated data into YOLO object detection models for training. The goal was to identify the most suitable model, obtain optimal maize detection results, and, consequently, estimate the planting densities.

- (3) Multispectral data processing and density estimation: This study extracted vegetation indices (VIs) and gray-level co-occurrence matrix (GLCM) texture features from the multispectral data. These features were combined and used as inputs to regression models (multiple linear stepwise regression (MLSR), random forest (RF), and partial least squares regression (PLS)) to estimate the planting densities.

- (4) Comparison and selection of methods: This study comprehensively compared the advantages and disadvantages of the two methods and selected the most suitable model for mapping maize planting densities.
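Steps (1) and (2) reduce density estimation to counting plants in a plot and dividing by the plot's ground area. The sketch below assumes exactly that definition (plants per square metre, the unit used in the results) and an arbitrary confidence threshold for keeping detections. The `Plot` record, `count_plants`, the 0.5 threshold, and the example numbers are hypothetical and are not the authors' settings.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Plot:
    """Hypothetical sampling plot: detection confidences plus ground area."""
    plot_id: str
    confidences: List[float]  # one score per detected maize plant (e.g., from a YOLO model)
    area_m2: float            # plot area on the ground, in square metres


def count_plants(confidences: Iterable[float], threshold: float = 0.5) -> int:
    """Count detections whose confidence reaches the (assumed) threshold."""
    return sum(1 for c in confidences if c >= threshold)


def planting_density(plot: Plot, threshold: float = 0.5) -> float:
    """Planting density in plants per square metre."""
    if plot.area_m2 <= 0:
        raise ValueError("plot area must be positive")
    return count_plants(plot.confidences, threshold) / plot.area_m2


def density_map(plots: Iterable[Plot], threshold: float = 0.5) -> Dict[str, float]:
    """Density for every plot, keyed by plot ID."""
    return {p.plot_id: planting_density(p, threshold) for p in plots}


if __name__ == "__main__":
    plots = [
        Plot("P1", [0.91, 0.88, 0.45, 0.97, 0.76, 0.62], area_m2=1.0),
        Plot("P2", [0.95, 0.30, 0.81, 0.70], area_m2=0.5),
    ]
    for pid, d in density_map(plots).items():
        print(f"{pid}: {d:.2f} plants/m^2")
```

The same `planting_density` function applies to field counts (step 1) if the confidences are replaced by a plain count; keeping the threshold explicit makes it easy to test how sensitive the density estimate is to the detector's operating point.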

3.2. Proposed UHDI-OD Method for Extracting Planting Densities

3.2.1. Object Detection

3.2.2. Visualization Methods

3.2.3. Planting Density Calculations Based on Object Detection

3.3. Proposed Multi-ML Method for Extracting Planting Densities

3.3.1. Vegetation Indices

3.3.2. Gray-Level Co-Occurrence Matrix

3.3.3. Statistical Regression

3.4. Accuracy Evaluation

3.4.1. Object Detection Evaluation

3.4.2. Planting Density Evaluation

4. Results

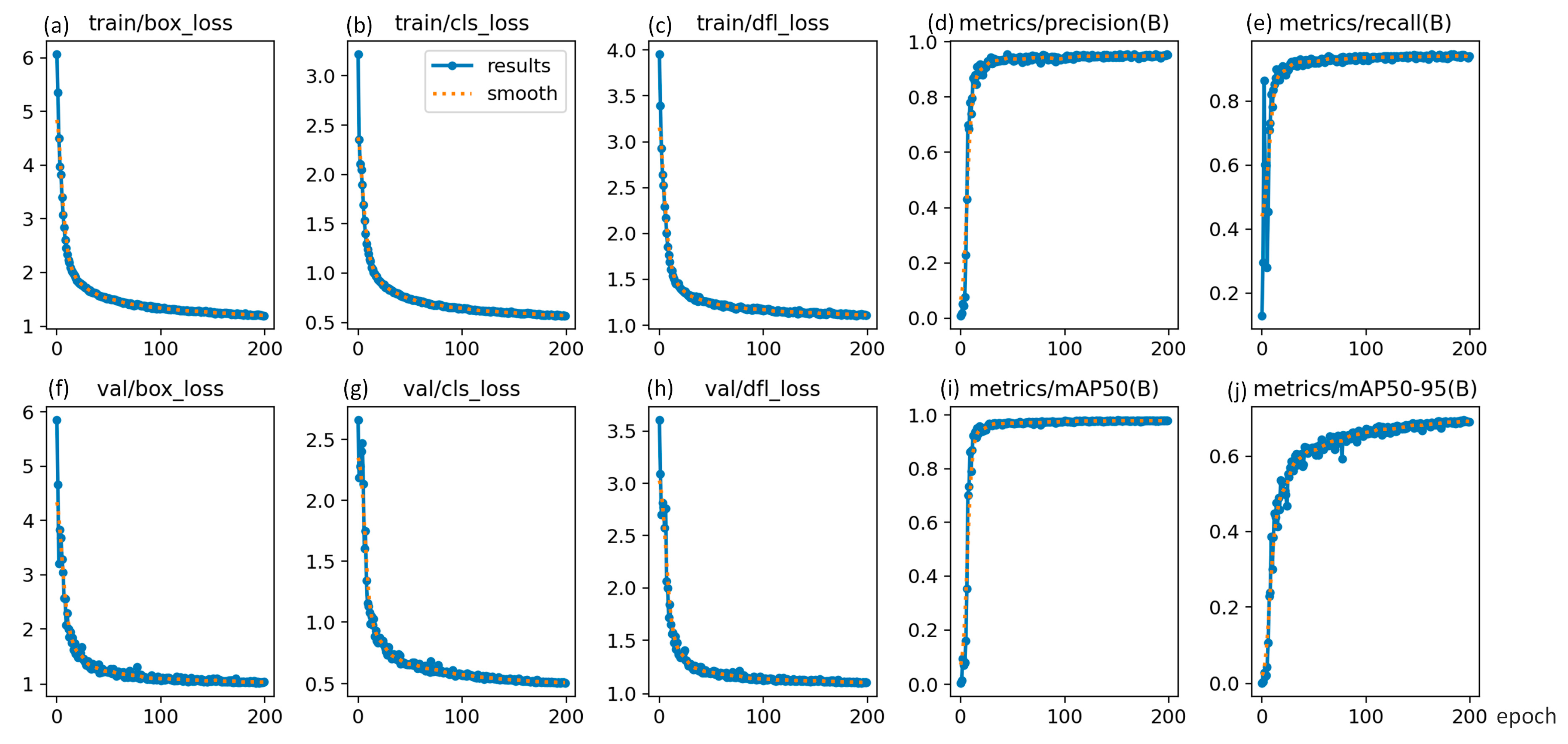

4.1. Maize Detection Based on Ultrahigh-Definition Digital Images

4.1.1. Object Detection Training and Recognition Results

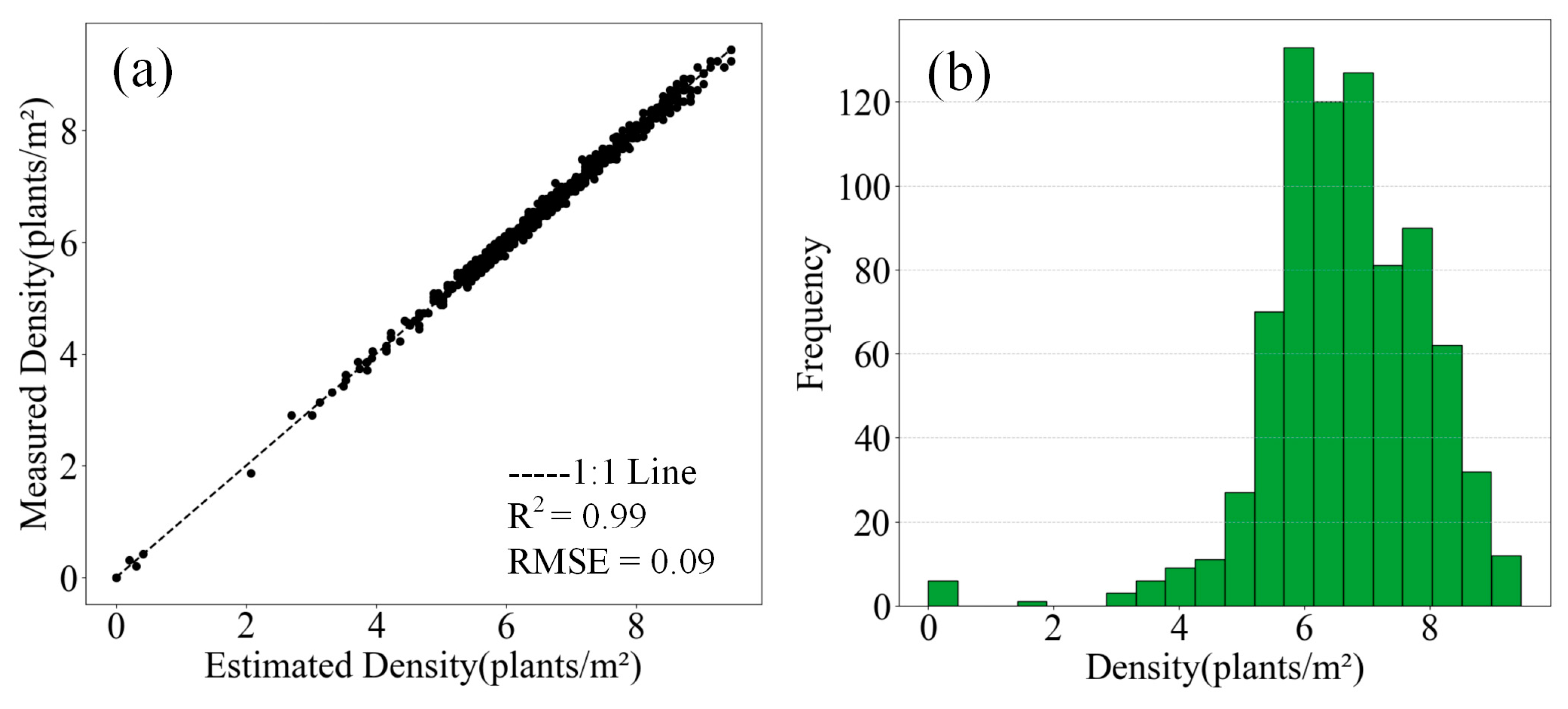

4.1.2. UHDI-OD Estimation Results for Planting Densities

4.2. Maize Density Estimations Based on Multispectral Data

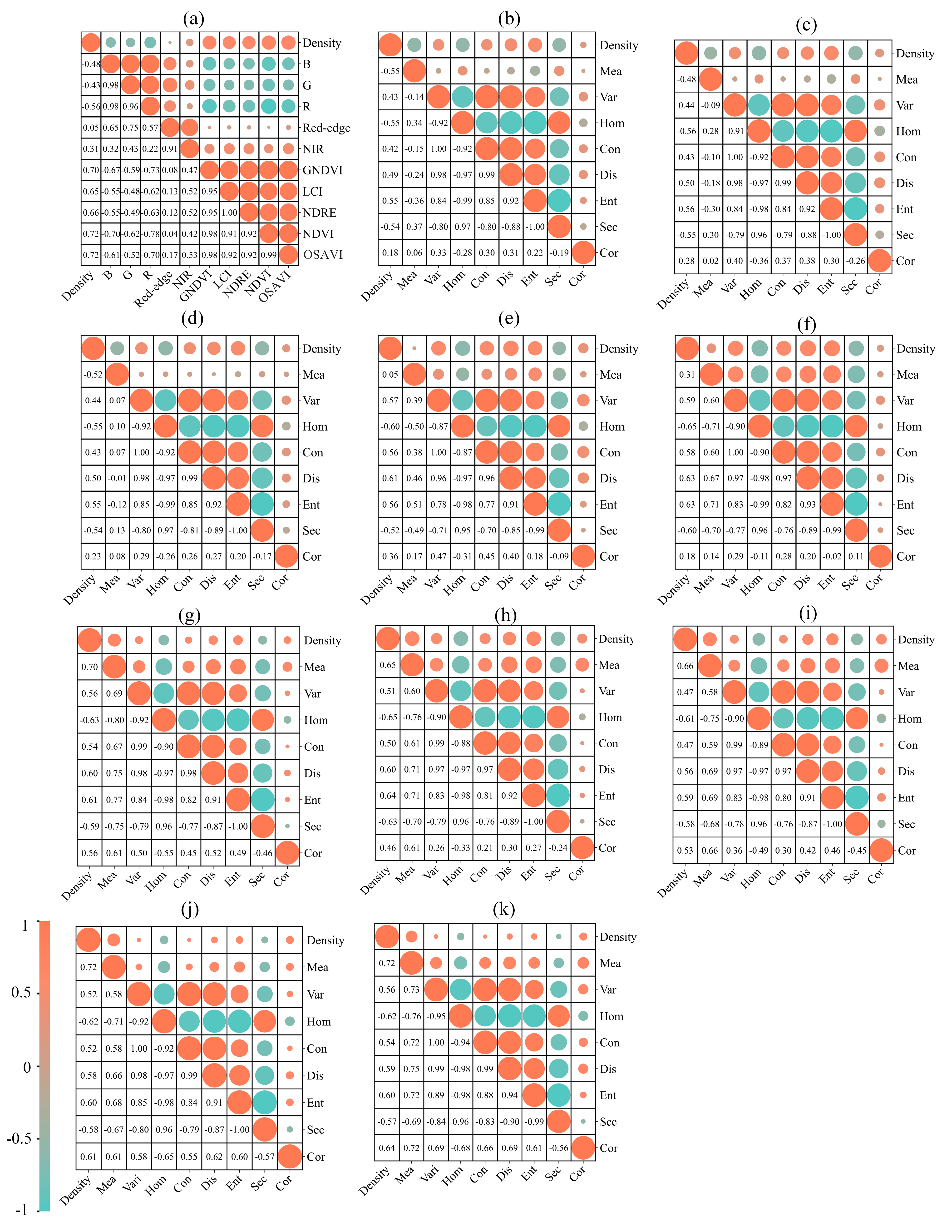

4.2.1. Correlation Analysis

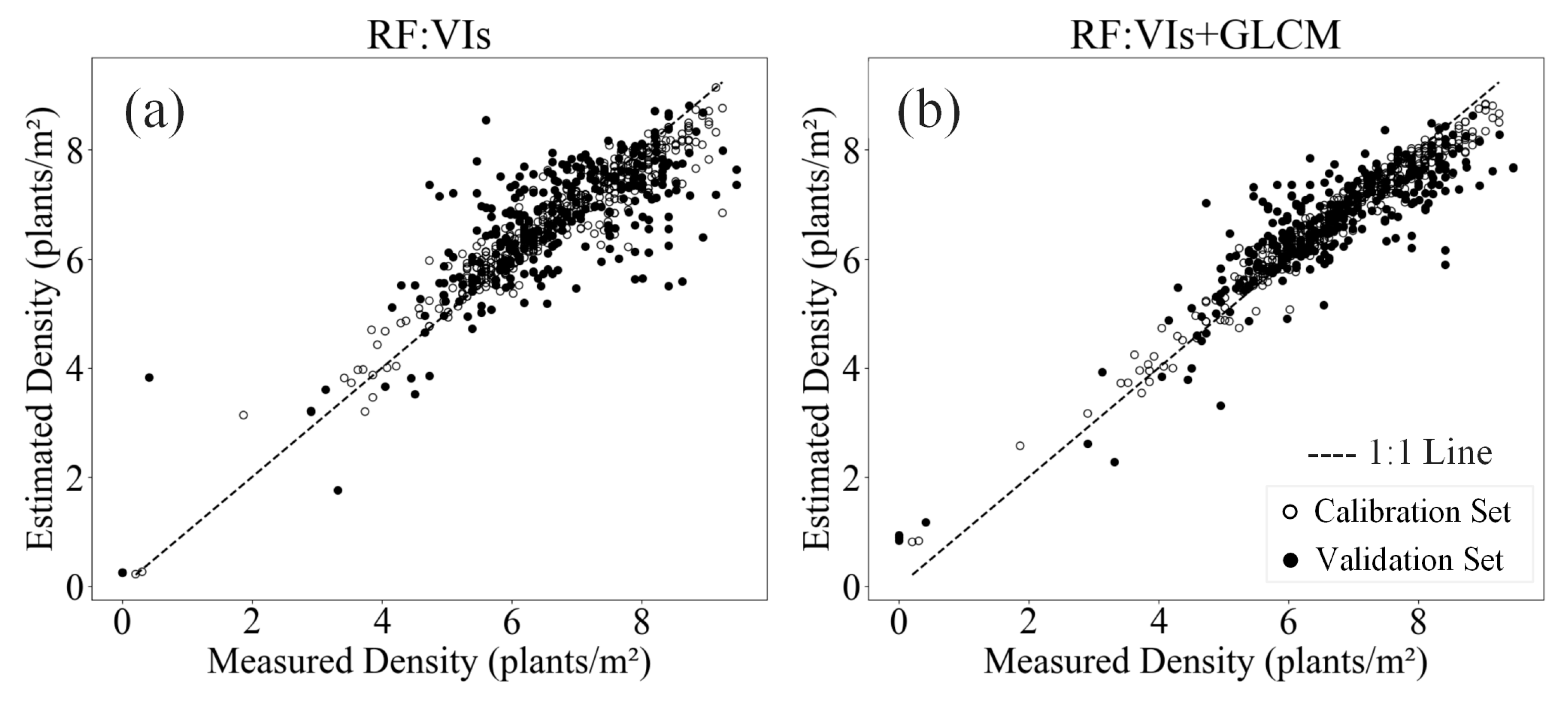

4.2.2. Multi-ML Estimations of Planting Densities

4.3. Scenario Comparison and Advantage Analysis

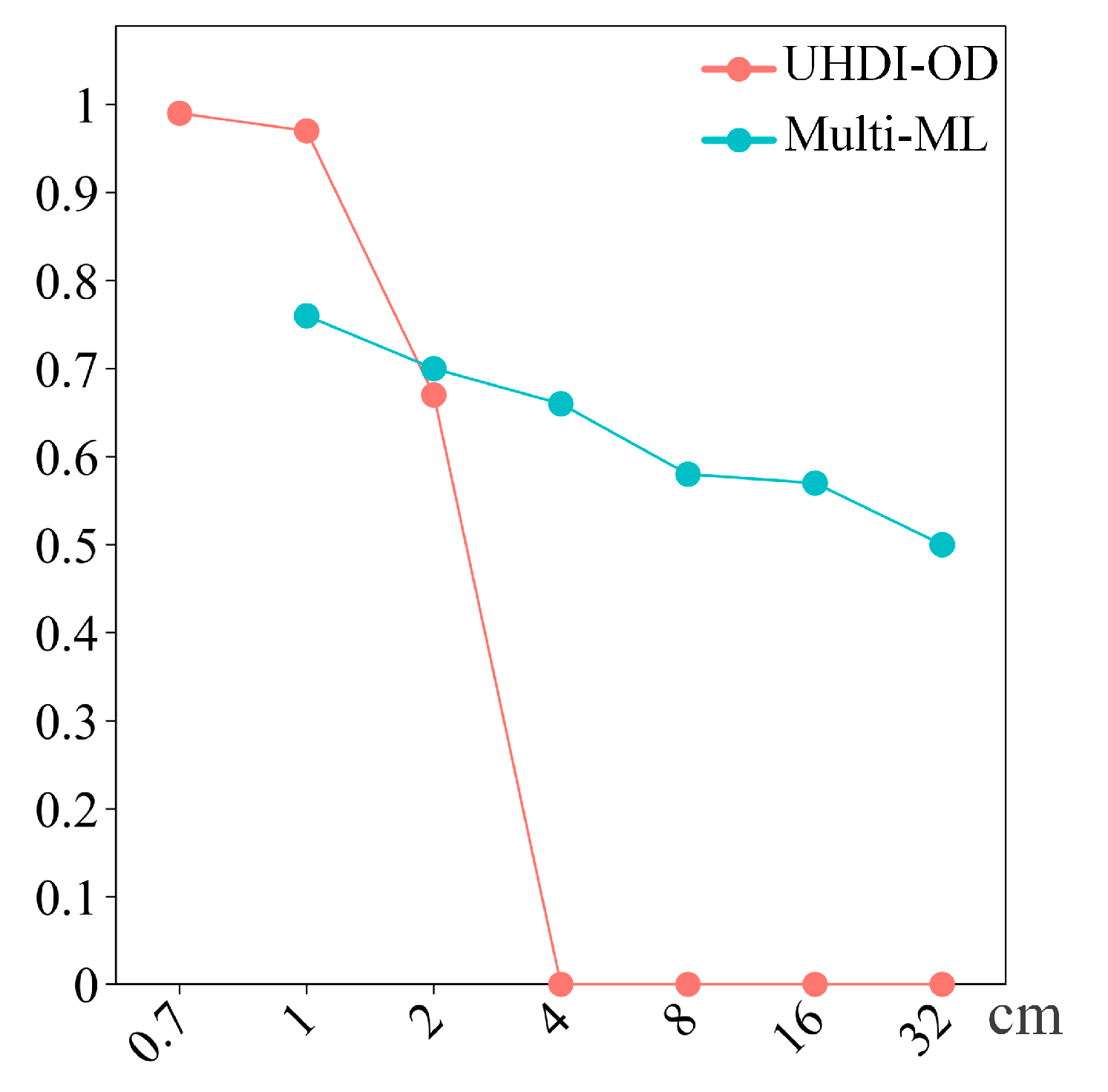

4.3.1. Comparison of the Maize Planting Density Estimation Results at Different Resolutions

4.3.2. Analysis of Model Advantages in Different Application Scenarios

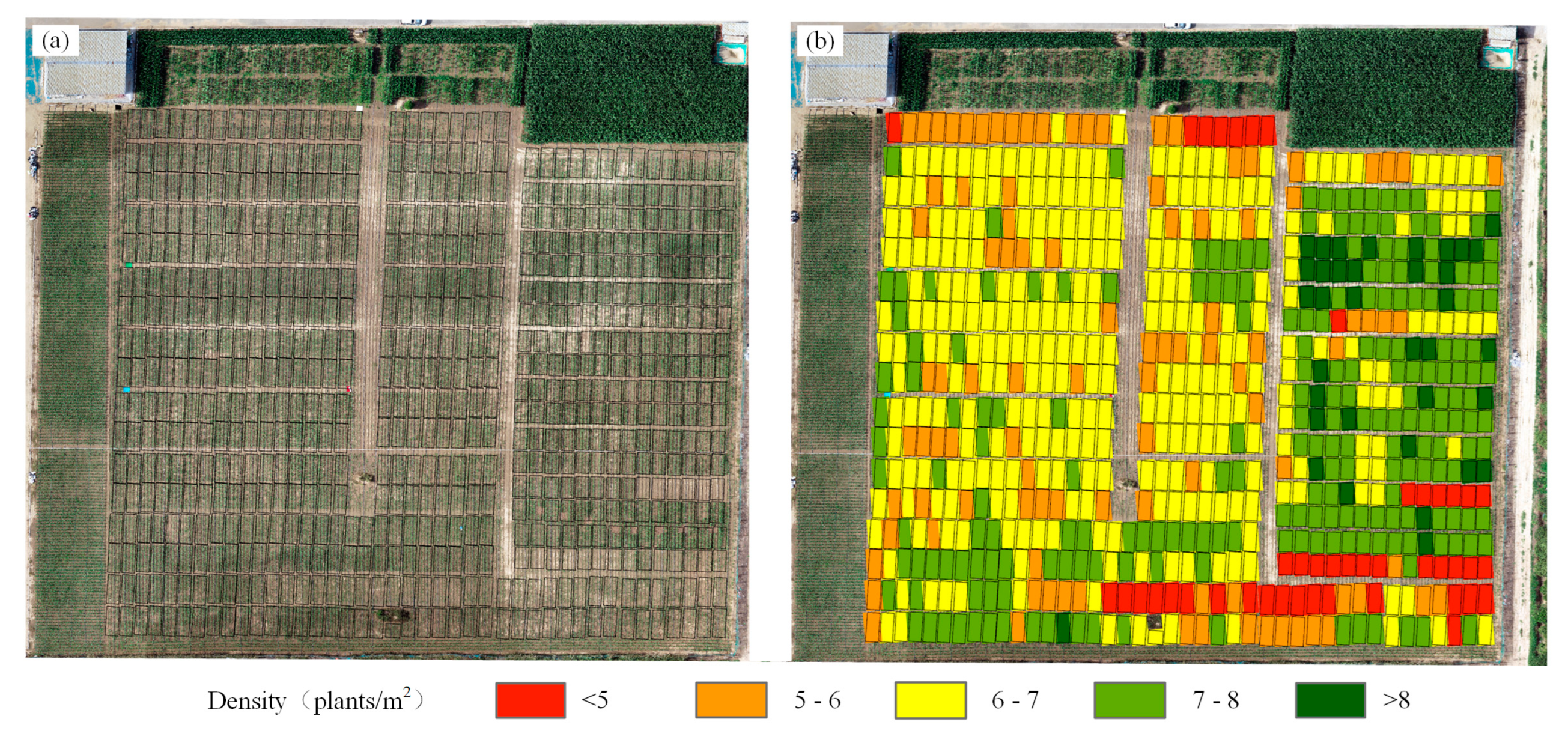

4.4. Multi-ML for Mapping Maize Planting Densities

5. Discussion

5.1. Advantages of the Proposed Methods

5.2. Applicable Scenarios of the Proposed Methods

5.3. Disadvantages of the Proposed Methods

- (1) This study tested the two methods on simulated low-resolution images, which may not fully capture how real changes in image resolution affect model performance. Future research could collect genuinely low-resolution images to assess model performance in practical applications more accurately.

- (2) This study did not fully examine monitoring results for maize at different time points, nor did it account for cases in which maize plants were small or partially occluded. Future studies could improve the applicability of the methods by analyzing maize monitoring across growth stages and addressing detection challenges in complex scenes.

- (3) This study conducted tests only in specific regions and on a single crop (maize). Future research should extend the evaluation to other geographical regions and crop types to comprehensively assess the strengths, weaknesses, and feasibility of both methods.

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ranum, P.; Peña-Rosas, J.P.; Garcia-Casal, M.N. Global Maize Production, Utilization, and Consumption. Ann. N. Y. Acad. Sci. 2014, 1312, 105–112.

2. Shu, G.; Cao, G.; Li, N.; Wang, A.; Wei, F.; Li, T.; Yi, L.; Xu, Y.; Wang, Y. Genetic Variation and Population Structure in China Summer Maize Germplasm. Sci. Rep. 2021, 11, 8012.

3. Ghasemi, A.; Azarfar, A.; Omidi-Mirzaei, H.; Fadayifar, A.; Hashemzadeh, F.; Ghaffari, M.H. Effects of Corn Processing Index and Forage Source on Performance, Blood Parameters, and Ruminal Fermentation of Dairy Calves. Sci. Rep. 2023, 13, 17914.

4. Shirzadifar, A.; Maharlooei, M.; Bajwa, S.G.; Oduor, P.G.; Nowatzki, J.F. Mapping Crop Stand Count and Planting Uniformity Using High Resolution Imagery in a Maize Crop. Biosyst. Eng. 2020, 200, 377–390.

5. Van Roekel, R.J.; Coulter, J.A. Agronomic Responses of Corn to Planting Date and Plant Density. Agron. J. 2011, 103, 1414–1422.

6. Gnädinger, F.; Schmidhalter, U. Digital Counts of Maize Plants by Unmanned Aerial Vehicles (UAVs). Remote Sens. 2017, 9, 544.

7. Yue, J.; Zhou, C.; Guo, W.; Feng, H.; Xu, K. Estimation of Winter-Wheat above-Ground Biomass Using the Wavelet Analysis of Unmanned Aerial Vehicle-Based Digital Images and Hyperspectral Crop Canopy Images. Int. J. Remote Sens. 2021, 42, 1602–1622.

8. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Fan, Y.; Chen, R.; Bian, M.; Ma, Y.; Song, X.; Yang, G. Improved Potato AGB Estimates Based on UAV RGB and Hyperspectral Images. Comput. Electron. Agric. 2023, 214, 108260.

9. Maes, W.H.; Steppe, K. Perspectives for Remote Sensing with Unmanned Aerial Vehicles in Precision Agriculture. Trends Plant Sci. 2019, 24, 152–164.

10. Colomina, I.; Molina, P. Unmanned Aerial Systems for Photogrammetry and Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

11. Sassu, A.; Motta, J.; Deidda, A.; Ghiani, L.; Carlevaro, A.; Garibotto, G.; Gambella, F. Artichoke Deep Learning Detection Network for Site-Specific Agrochemicals UAS Spraying. Comput. Electron. Agric. 2023, 213, 108185.

12. Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

13. Liu, Y.; Feng, H.; Yue, J.; Fan, Y.; Bian, M.; Ma, Y.; Jin, X.; Song, X.; Yang, G. Estimating Potato Above-Ground Biomass by Using Integrated Unmanned Aerial System-Based Optical, Structural, and Textural Canopy Measurements. Comput. Electron. Agric. 2023, 213, 108229.

14. Yue, J.; Feng, H.; Li, Z.; Zhou, C.; Xu, K. Mapping Winter-Wheat Biomass and Grain Yield Based on a Crop Model and UAV Remote Sensing. Int. J. Remote Sens. 2021, 42, 1577–1601.

15. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

16. Zhu, L.; Li, X.; Sun, H.; Han, Y. Research on CBF-YOLO Detection Model for Common Soybean Pests in Complex Environment. Comput. Electron. Agric. 2024, 216, 108515.

17. Zhang, Z.; Khanal, S.; Raudenbush, A.; Tilmon, K.; Stewart, C. Assessing the Efficacy of Machine Learning Techniques to Characterize Soybean Defoliation from Unmanned Aerial Vehicles. Comput. Electron. Agric. 2022, 193, 106682.

18. Guo, Y.; Fu, Y.H.; Chen, S.; Robin Bryant, C.; Li, X.; Senthilnath, J.; Sun, H.; Wang, S.; Wu, Z.; de Beurs, K. Integrating Spectral and Textural Information for Identifying the Tasseling Date of Summer Maize Using UAV Based RGB Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102435.

19. Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of Plant Density of Wheat Crops at Emergence from Very Low Altitude UAV Imagery. Remote Sens. Environ. 2017, 198, 105–114.

20. Yu, N.; Li, L.; Schmitz, N.; Tian, L.F.; Greenberg, J.A.; Diers, B.W. Development of Methods to Improve Soybean Yield Estimation and Predict Plant Maturity with an Unmanned Aerial Vehicle Based Platform. Remote Sens. Environ. 2016, 187, 91–101.

21. Etienne, A.; Ahmad, A.; Aggarwal, V.; Saraswat, D. Deep Learning-Based Object Detection System for Identifying Weeds Using UAS Imagery. Remote Sens. 2021, 13, 5182.

22. Xiao, J.; Suab, S.A.; Chen, X.; Singh, C.K.; Singh, D.; Aggarwal, A.K.; Korom, A.; Widyatmanti, W.; Mollah, T.H.; Minh, H.V.T.; et al. Enhancing Assessment of Corn Growth Performance Using Unmanned Aerial Vehicles (UAVs) and Deep Learning. Measurement 2023, 214, 112764.

23. Liu, S.; Baret, F.; Andrieu, B.; Burger, P.; Hemmerlé, M. Estimation of Wheat Plant Density at Early Stages Using High Resolution Imagery. Front. Plant Sci. 2017, 8, 739.

24. Yang, Q.; Shi, L.; Han, J.; Yu, J.; Huang, K. A near Real-Time Deep Learning Approach for Detecting Rice Phenology Based on UAV Images. Agric. For. Meteorol. 2020, 287, 107938.

25. Lin, Y.; Chen, T.; Liu, S.; Cai, Y.; Shi, H.; Zheng, D.; Lan, Y.; Yue, X.; Zhang, L. Quick and Accurate Monitoring Peanut Seedlings Emergence Rate through UAV Video and Deep Learning. Comput. Electron. Agric. 2022, 197, 106938.

26. Li, B.; Xu, X.; Han, J.; Zhang, L.; Bian, C.; Jin, L.; Liu, J. The Estimation of Crop Emergence in Potatoes by UAV RGB Imagery. Plant Methods 2019, 15, 15.

27. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

28. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

30. Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic Tobacco Plant Detection in UAV Images via Deep Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887.

31. Guo, W.; Zheng, B.; Potgieter, A.B.; Diot, J.; Watanabe, K.; Noshita, K.; Jordan, D.R.; Wang, X.; Watson, J.; Ninomiya, S.; et al. Aerial Imagery Analysis—Quantifying Appearance and Number of Sorghum Heads for Applications in Breeding and Agronomy. Front. Plant Sci. 2018, 9, 1544.

32. Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A Robust Deep-Learning-Based Detector for Real-Time Tomato Plant Diseases and Pests Recognition. Sensors 2017, 17, 2022.

33. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A Fruit Detection System Using Deep Neural Networks. Sensors 2016, 16, 1222.

34. Liu, M.; Su, W.-H.; Wang, X.-Q. Quantitative Evaluation of Maize Emergence Using UAV Imagery and Deep Learning. Remote Sens. 2023, 15, 1979.

35. Gao, X.; Zan, X.; Yang, S.; Zhang, R.; Chen, S.; Zhang, X.; Liu, Z.; Ma, Y.; Zhao, Y.; Li, S. Maize Seedling Information Extraction from UAV Images Based on Semi-Automatic Sample Generation and Mask R-CNN Model. Eur. J. Agron. 2023, 147, 126845.

36. Xu, X.; Wang, L.; Liang, X.; Zhou, L.; Chen, Y.; Feng, P.; Yu, H.; Ma, Y. Maize Seedling Leave Counting Based on Semi-Supervised Learning and UAV RGB Images. Sustainability 2023, 15, 9583.

37. Osco, L.P.; dos Santos de Arruda, M.; Gonçalves, D.N.; Dias, A.; Batistoti, J.; de Souza, M.; Gomes, F.D.G.; Ramos, A.P.M.; de Castro Jorge, L.A.; Liesenberg, V.; et al. A CNN Approach to Simultaneously Count Plants and Detect Plantation-Rows from UAV Imagery. ISPRS J. Photogramm. Remote Sens. 2021, 174, 1–17.

38. Vong, C.N.; Conway, L.S.; Zhou, J.; Kitchen, N.R.; Sudduth, K.A. Early Corn Stand Count of Different Cropping Systems Using UAV-Imagery and Deep Learning. Comput. Electron. Agric. 2021, 186, 106214.

39. Springenberg, J.T.; Dosovitskiy, A.; Brox, T.; Riedmiller, M. Striving for Simplicity: The All Convolutional Net. arXiv 2014, arXiv:1412.6806.

40. Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S. Improving Weeds Identification with a Repository of Agricultural Pre-Trained Deep Neural Networks. Comput. Electron. Agric. 2020, 175, 105593.

41. Feng, A.; Zhou, J.; Vories, E.; Sudduth, K.A. Evaluation of Cotton Emergence Using UAV-Based Imagery and Deep Learning. Comput. Electron. Agric. 2020, 177, 105711.

42. Zhou, C.; Hu, J.; Xu, Z.; Yue, J.; Ye, H.; Yang, G. A Monitoring System for the Segmentation and Grading of Broccoli Head Based on Deep Learning and Neural Networks. Front. Plant Sci. 2020, 11, 402.

43. Hu, J.; Yue, J.; Xu, X.; Han, S.; Sun, T.; Liu, Y.; Feng, H.; Qiao, H. UAV-Based Remote Sensing for Soybean FVC, LCC, and Maturity Monitoring. Agriculture 2023, 13, 692.

44. Yue, J.; Guo, W.; Yang, G.; Zhou, C.; Feng, H.; Qiao, H. Method for Accurate Multi-Growth-Stage Estimation of Fractional Vegetation Cover Using Unmanned Aerial Vehicle Remote Sensing. Plant Methods 2021, 17, 51.

45. Yue, J.; Yang, H.; Yang, G.; Fu, Y.; Wang, H.; Zhou, C. Estimating Vertically Growing Crop Above-Ground Biomass Based on UAV Remote Sensing. Comput. Electron. Agric. 2023, 205, 107627.

46. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-Based Plant Height from Crop Surface Models, Visible, and near Infrared Vegetation Indices for Biomass Monitoring in Barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

47. Liu, Y.; Feng, H.; Yue, J.; Jin, X.; Li, Z.; Yang, G. Estimation of Potato Above-Ground Biomass Based on Unmanned Aerial Vehicle Red-Green-Blue Images with Different Texture Features and Crop Height. Front. Plant Sci. 2022, 13, 938216.

48. Qiao, L.; Zhao, R.; Tang, W.; An, L.; Sun, H.; Li, M.; Wang, N.; Liu, Y.; Liu, G. Estimating Maize LAI by Exploring Deep Features of Vegetation Index Map from UAV Multispectral Images. Field Crops Res. 2022, 289, 108739.

49. Fan, Y.; Feng, H.; Jin, X.; Yue, J.; Liu, Y.; Li, Z.; Feng, Z.; Song, X.; Yang, G. Estimation of the Nitrogen Content of Potato Plants Based on Morphological Parameters and Visible Light Vegetation Indices. Front. Plant Sci. 2022, 13, 1012070.

50. Yue, J.; Tian, J.; Philpot, W.; Tian, Q.; Feng, H.; Fu, Y. VNAI-NDVI-Space and Polar Coordinate Method for Assessing Crop Leaf Chlorophyll Content and Fractional Cover. Comput. Electron. Agric. 2023, 207, 107758.

51. Sankaran, S.; Khot, L.R.; Carter, A.H. Field-Based Crop Phenotyping: Multispectral Aerial Imaging for Evaluation of Winter Wheat Emergence and Spring Stand. Comput. Electron. Agric. 2015, 118, 372–379.

52. Banerjee, B.P.; Sharma, V.; Spangenberg, G.; Kant, S. Machine Learning Regression Analysis for Estimation of Crop Emergence Using Multispectral UAV Imagery. Remote Sens. 2021, 13, 2918.

53. Wilke, N.; Siegmann, B.; Postma, J.A.; Muller, O.; Krieger, V.; Pude, R.; Rascher, U. Assessment of Plant Density for Barley and Wheat Using UAV Multispectral Imagery for High-Throughput Field Phenotyping. Comput. Electron. Agric. 2021, 189, 106380.

54. Lee, H.; Wang, J.; Leblon, B. Using Linear Regression, Random Forests, and Support Vector Machine with Unmanned Aerial Vehicle Multispectral Images to Predict Canopy Nitrogen Weight in Corn. Remote Sens. 2020, 12, 2071.

55. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

56. Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, QC, Canada, 10–17 October 2021.

57. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

58. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

59. Chattopadhyay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-CAM++: Improved Visual Explanations for Deep Convolutional Networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

60. Fu, R.; Hu, Q.; Dong, X.; Guo, Y.; Gao, Y.; Li, B. Axiom-Based Grad-CAM: Towards Accurate Visualization and Explanation of CNNs. arXiv 2020, arXiv:2008.02312.

61. Freden, S.C.; Mercanti, E.P.; Becker, M.A. (Eds.) Third Earth Resources Technology Satellite-1 Symposium: Section A-B; Technical Presentations; Scientific and Technical Information Office, National Aeronautics and Space Administration: Washington, DC, USA, 1973.

62. Rondeaux, G.; Steven, M.; Baret, F. Optimization of Soil-Adjusted Vegetation Indices. Remote Sens. Environ. 1996, 55, 95–107.

63. Datt, B. A New Reflectance Index for Remote Sensing of Chlorophyll Content in Higher Plants: Tests Using Eucalyptus Leaves. J. Plant Physiol. 1999, 154, 30–36.

64. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a Green Channel in Remote Sensing of Global Vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

65. Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292.

66. Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man. Cybern. 1973, SMC-3, 610–621.

67. Cardellicchio, A.; Solimani, F.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Detection of Tomato Plant Phenotyping Traits Using YOLOv5-Based Single Stage Detectors. Comput. Electron. Agric. 2023, 207, 107757.

68. Yang, T.; Jay, S.; Gao, Y.; Liu, S.; Baret, F. The Balance between Spectral and Spatial Information to Estimate Straw Cereal Plant Density at Early Growth Stages from Optical Sensors. Comput. Electron. Agric. 2023, 215, 108458.

69. Habibi, L.N.; Watanabe, T.; Matsui, T.; Tanaka, T.S.T. Machine Learning Techniques to Predict Soybean Plant Density Using UAV and Satellite-Based Remote Sensing. Remote Sens. 2021, 13, 2548.

70. Vong, C.N.; Conway, L.S.; Feng, A.; Zhou, J.; Kitchen, N.R.; Sudduth, K.A. Corn Emergence Uniformity Estimation and Mapping Using UAV Imagery and Deep Learning. Comput. Electron. Agric. 2022, 198, 107008.

| Name | Calculation | Reference |
|---|---|---|
| R | DN values of the R band | |
| G | DN values of the G band | |
| B | DN values of the B band | |
| Red-Edge | DN values of the Red-Edge band | |
| NIR | DN values of the NIR band | |
| NDVI | (NIR − R)/(NIR + R) | [61] |
| OSAVI | 1.16 × (NIR − R)/(NIR + R + 0.16) | [62] |
| LCI | (NIR − Red-Edge)/(NIR + R) | [63] |
| GNDVI | (NIR − G)/(NIR + G) | [64] |
| NDRE | (NIR − Red-Edge)/(NIR + Red-Edge) | [65] |
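The index formulas above map directly onto per-pixel band arithmetic. The sketch below assumes the bands are co-registered NumPy arrays already converted to surface reflectance in the 0–1 range; OSAVI's 0.16 soil-adjustment constant is defined for reflectance, so applying it to raw DN values would change its effect. The function names are illustrative, not from the study's code.

```python
import numpy as np


def _safe_ratio(num, den):
    """Element-wise num / den, with NaN wherever the denominator is zero."""
    num = np.asarray(num, dtype=float)
    den = np.asarray(den, dtype=float)
    out = np.full(np.broadcast(num, den).shape, np.nan)
    np.divide(num, den, out=out, where=(den != 0))
    return out


def vegetation_indices(r, g, red_edge, nir):
    """The five VIs from the table, computed from reflectance bands (assumed 0-1)."""
    r, g, red_edge, nir = (np.asarray(b, dtype=float) for b in (r, g, red_edge, nir))
    return {
        "NDVI": _safe_ratio(nir - r, nir + r),
        "OSAVI": 1.16 * _safe_ratio(nir - r, nir + r + 0.16),
        "LCI": _safe_ratio(nir - red_edge, nir + r),
        "GNDVI": _safe_ratio(nir - g, nir + g),
        "NDRE": _safe_ratio(nir - red_edge, nir + red_edge),
    }


if __name__ == "__main__":
    # Made-up reflectances for a single vegetated pixel.
    for name, value in vegetation_indices(0.05, 0.08, 0.20, 0.50).items():
        print(f"{name}: {float(value):.3f}")
```

Because the inputs are broadcast NumPy arrays, the same call works on whole image bands, and plot-level features can then be taken as, for example, the mean index value within each plot polygon.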

| Abbreviation | Name | Formula |
|---|---|---|
| Mea | Mean | |
| Var | Variance | |
| Hom | Homogeneity | |
| Con | Contrast | |
| Dis | Dissimilarity | |
| Ent | Entropy | |
| ASM | Angular Second Moment | |
| Cor | Correlation | |
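The table lists the eight GLCM texture statistics without their formulas. As a hedged illustration, the sketch below builds a symmetric, normalized GLCM for one pixel offset and derives the eight statistics using common Haralick-style definitions [66]. The quantization to 8 gray levels, the single horizontal offset, the natural-log entropy, and the value assigned to correlation on a constant window are all assumptions; the software used in the study may define some statistics (notably Mea and Var) differently. In practice, these statistics are usually computed in a sliding window over each band to produce texture images.

```python
import numpy as np


def glcm(image, levels=8, dx=1, dy=0):
    """Symmetric, normalized gray-level co-occurrence matrix for one offset.

    (dx, dy) is the non-negative column/row offset to the neighbor pixel.
    """
    img = np.asarray(image, dtype=float)
    lo, hi = img.min(), img.max()
    if hi > lo:
        # Linearly quantize to integer gray levels 0 .. levels-1.
        q = np.floor((img - lo) / (hi - lo) * levels).astype(int)
        q = np.clip(q, 0, levels - 1)
    else:
        q = np.zeros(img.shape, dtype=int)
    rows, cols = q.shape
    ref = q[: rows - dy, : cols - dx]  # reference pixels
    nbr = q[dy:, dx:]                  # neighbors at offset (dy, dx)
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts += counts.T                 # count each pair in both directions
    total = counts.sum()
    return counts / total if total > 0 else counts


def glcm_features(p):
    """Eight texture statistics from a normalized, symmetric GLCM `p`."""
    n = p.shape[0]
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    mean = float(np.sum(i * p))               # mu_i (equal to mu_j for a symmetric GLCM)
    var = float(np.sum(p * (i - mean) ** 2))  # sigma_i^2 (equal to sigma_j^2)
    nz = p[p > 0]                             # skip zeros so log() is defined
    if var > 0:
        cor = float(np.sum(p * (i - mean) * (j - mean)) / var)
    else:
        cor = 1.0  # assumed convention for a constant window
    return {
        "Mea": mean,
        "Var": var,
        "Hom": float(np.sum(p / (1.0 + (i - j) ** 2))),
        "Con": float(np.sum(p * (i - j) ** 2)),
        "Dis": float(np.sum(p * np.abs(i - j))),
        "Ent": float(-np.sum(nz * np.log(nz))),
        "ASM": float(np.sum(p ** 2)),
        "Cor": cor,
    }


if __name__ == "__main__":
    window = np.random.default_rng(1).random((9, 9))  # stand-in for one NIR window
    for name, value in glcm_features(glcm(window)).items():
        print(f"{name}: {value:.4f}")
```

A two-column stripe pattern such as `[[0, 1], [0, 1]]` with `levels=2` gives contrast 1, ASM 0.5, and correlation −1, which is a quick sanity check that the offset direction and symmetry are handled as intended.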

| Model | Precision (%) | Recall (%) | mAP@50 (%) | mAP@50-95 (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| YOLOv8n | 94.8 | 94.5 | 98.3 | 73.4 | 3.0 | 8.1 |
| YOLOv8s | 95.2 | 94.1 | 98.2 | 73.9 | 11.1 | 28.4 |
| YOLOv8m | 95.1 | 94.5 | 98.2 | 73.7 | 25.8 | 78.7 |
| YOLOv8l | 94.9 | 94.8 | 98.3 | 74.0 | 43.6 | 164.8 |
| YOLOv8x | 95.0 | 94.8 | 98.3 | 73.5 | 68.1 | 257.4 |
| YOLOv3-tiny | 93.9 | 94.4 | 97.9 | 72.4 | 12.1 | 18.9 |
| YOLOv5n | 94.9 | 94.3 | 98.2 | 73.0 | 1.7 | 4.1 |
| YOLOv6n | 94.5 | 93.8 | 98.2 | 73.3 | 4.2 | 11.8 |
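Precision and recall in the detection table count a predicted box as correct when it overlaps an annotated plant sufficiently. The sketch below is a simplified illustration, assuming axis-aligned boxes in pixel coordinates, greedy one-to-one matching in order of confidence, and a single IoU threshold of 0.5. mAP@50 summarizes the whole precision-recall curve at IoU 0.5, and mAP@50-95 averages that over IoU thresholds from 0.50 to 0.95, so the evaluator the authors used will differ in detail. Function names are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching of (box, confidence) predictions to ground truth."""
    matched = set()
    tp = 0
    for box, _conf in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # detections that match no annotated plant
    fn = len(gts) - tp    # annotated plants that were missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall


if __name__ == "__main__":
    plants = [(0, 0, 10, 10), (20, 0, 30, 10)]
    detections = [((1, 0, 11, 10), 0.9), ((50, 50, 60, 60), 0.8)]
    print(precision_recall(detections, plants))
```

In the example, one detection overlaps a plant (IoU of about 0.82) and one is spurious, while the second plant is missed, so both precision and recall are 0.5.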

| Dataset | Method | Calibration R² | Calibration RMSE (plants/m²) | Validation R² | Validation RMSE (plants/m²) |
|---|---|---|---|---|---|
| VIs | MLSR | 0.50 | 0.86 | 0.55 | 0.92 |
| | RF | 0.89 | 0.39 | 0.59 | 0.87 |
| | PLS | 0.52 | 0.84 | 0.58 | 0.89 |
| GLCM | MLSR | 0.56 | 0.80 | 0.62 | 0.84 |
| | RF | 0.94 | 0.29 | 0.72 | 0.72 |
| | PLS | 0.69 | 0.67 | 0.74 | 0.70 |
| VIs + GLCM | MLSR | 0.67 | 0.69 | 0.70 | 0.75 |
| | RF | 0.95 | 0.27 | 0.76 | 0.67 |
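The calibration and validation statistics above are computed from paired measured and estimated plot densities. The sketch below assumes R² is the coefficient of determination (1 − SS_res/SS_tot); some studies instead report the squared Pearson correlation, and the exact definition from Section 3.4.2 is not reproduced in this text, so treat that choice as an assumption. The example densities are made up.

```python
import numpy as np


def r2_score(measured, estimated):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y = np.asarray(measured, dtype=float)
    y_hat = np.asarray(estimated, dtype=float)
    ss_res = np.sum((y - y_hat) ** 2)       # residual sum of squares
    ss_tot = np.sum((y - y.mean()) ** 2)    # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all measured values are equal")
    return float(1.0 - ss_res / ss_tot)


def rmse(measured, estimated):
    """Root-mean-square error, in the units of the inputs (here plants/m^2)."""
    y = np.asarray(measured, dtype=float)
    y_hat = np.asarray(estimated, dtype=float)
    return float(np.sqrt(np.mean((y - y_hat) ** 2)))


if __name__ == "__main__":
    measured = [4.0, 5.0, 6.0, 7.0]   # made-up plot densities (plants/m^2)
    estimated = [4.5, 5.0, 5.5, 7.0]
    print(f"R2 = {r2_score(measured, estimated):.2f}, "
          f"RMSE = {rmse(measured, estimated):.3f} plants/m^2")
```

Computing both statistics separately on the calibration and validation plots makes gaps such as RF's calibration R² of 0.95 versus validation R² of 0.76 directly comparable across models.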

| Aspect | UHDI-OD | Multi-ML |
|---|---|---|
| Training | Deep learning model; slow training (50 min); requires high-performance computing equipment | Relatively simple model; fast training (0.2 min) |
| Estimation accuracy | YOLOv8; higher accuracy (R² = 0.99) | RF regression model; lower accuracy (R² = 0.76) |
| Robustness to resolution | Sensitive to resolution degradation (R² drops to 0 when the pixel size is coarsened to 4 cm) | Adapts to different resolutions (R² remains above 0.50 even when the pixel size is coarsened to 32 cm) |
| Scope of application | Density monitoring over small areas | Density monitoring over large areas |
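The resolution comparison relies on simulated low-resolution images (Section 5.3). The text does not state which resampling was used, so the sketch below assumes simple block averaging, which aggregates each factor × factor group of pixels into one coarser pixel. The 1 cm native pixel size in the example and the helper names are hypothetical.

```python
import numpy as np


def degrade_resolution(band, factor):
    """Simulate a coarser ground sampling distance (GSD) by block averaging.

    Each output pixel is the mean of a `factor` x `factor` block of input pixels;
    rows/columns that do not fill a whole block are dropped.
    """
    if not isinstance(factor, (int, np.integer)) or factor < 1:
        raise ValueError("factor must be a positive integer")
    a = np.asarray(band, dtype=float)
    rows = (a.shape[0] // factor) * factor
    cols = (a.shape[1] // factor) * factor
    a = a[:rows, :cols]
    return a.reshape(rows // factor, factor, cols // factor, factor).mean(axis=(1, 3))


def factors_for_targets(native_gsd_cm, target_gsds_cm):
    """Integer aggregation factors that turn the native GSD into each target GSD."""
    return {t: int(round(t / native_gsd_cm)) for t in target_gsds_cm}


if __name__ == "__main__":
    native_gsd_cm = 1.0  # hypothetical native pixel size
    band = np.random.default_rng(0).random((64, 64))
    for target, f in factors_for_targets(native_gsd_cm, [2, 4, 8, 16, 32]).items():
        print(f"{target} cm -> factor {f}, shape {degrade_resolution(band, f).shape}")
```

Block averaging preserves the mean reflectance of each block, which suits index-based features, but it also blurs plant edges; other resampling choices (e.g., Gaussian filtering before decimation) would give somewhat different low-resolution images.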

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, J.; Wang, Q.; Zhao, M.; Hu, J.; Wang, J.; Shu, M.; Liu, Y.; Guo, W.; Qiao, H.; Niu, Q.; et al. Mapping Maize Planting Densities Using Unmanned Aerial Vehicles, Multispectral Remote Sensing, and Deep Learning Technology. Drones 2024, 8, 140. https://doi.org/10.3390/drones8040140
