Abstract

In recent years, the scientific community has increasingly recognized the complex multi-scale competency architecture (MCA) of biology, comprising nested layers of active homeostatic agents, each forming the self-orchestrated substrate for the layer above, and, in turn, relying on the structural and functional plasticity of the layer(s) below. The question of how natural selection could give rise to this MCA has been the focus of intense research. Here, we instead investigate the effects of such decision-making competencies of MCA agential components on the process of evolution itself, using in silico neuroevolution experiments of simulated, minimal developmental biology. We specifically model the process of morphogenesis with neural cellular automata (NCAs) and utilize an evolutionary algorithm to optimize the corresponding model parameters with the objective of collectively self-assembling a two-dimensional spatial target pattern (reliable morphogenesis). Furthermore, we systematically vary the accuracy with which the uni-cellular agents of an NCA can regulate their cell states (simulating stochastic processes and noise during development). This allows us to continuously scale the agents’ competency levels from a direct encoding scheme (no competency) to an MCA (with perfect reliability in cell decision executions). We demonstrate that an evolutionary process proceeds much more rapidly when evolving the functional parameters of an MCA compared to evolving the target pattern directly. Moreover, the evolved MCAs generalize well toward system parameter changes and even modified objective functions of the evolutionary process. Thus, the adaptive problem-solving competencies of the agential parts in our NCA-based in silico morphogenesis model strongly affect the evolutionary process, suggesting significant functional implications of the near-ubiquitous competency seen in living matter.

1. Introduction

Biological systems are organized in an exquisite architecture of layers, including molecular networks, organelles, cells, tissues, organs, organisms, swarms, and ecosystems. It is well recognized that life exhibits complexity at every scale. Increasingly realized, however, is the fact that those layers are not merely complex but actually active “agential matter”, which has agendas and competencies of its own [1,2]. Elsewhere, we have discussed examples of problem-solving in unconventional spaces, including transcriptional, physiological, metabolic, and anatomical space [3].

Especially interesting is the ability of these ubiquitous biological agents to deal with novel situations on the fly, which is not limited to brainy animals navigating 3D space but also occurs with respect to injury, mutations, and other kinds of external and internal perturbations (reviewed in [4]). One example of such problem-solving capabilities is the regenerative properties of some species that can regrow limbs, organs, or entire parts of their bodies when amputated, and—remarkably—stop when the precisely correct target morphology is complete [5,6,7]. This can be understood as cellular collectives navigating morphospace until the desired target shape—or the goal—is reached again. Other examples include the ability of scrambled tadpole faces to reorganize in novel ways to result in normal frog faces [8], and the normal shape and size of structures in amphibia despite drastic changes in cell number [9] and cell size [10], which are handled by exploiting different molecular mechanisms to reach correct target morphologies despite novel changes in internal components. Behavioral and morphological plasticity intersect in cases such as tadpoles made with eyes on their tails, which nevertheless can see and learn in visual assays without needing rounds of evolutionary adaptation [11].

The ability to navigate transcriptional and anatomical spaces, using perception–action loops and homeostatic setpoints, is now being increasingly targeted by biomedical and bioengineering efforts [12,13]. A fascinating body of work exists around the question of how neural and non-neural problem-solving capacities evolved, and how neuro-behavioral intelligence affects evolution [14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31]. However, we and others have previously suggested that somatic competency pre-dates neural intelligence [32,33,34], and has a bi-directional interaction with the evolutionary and developmental process [1,3,35]. Thus, here, we address the second half of the evolution–intelligence spiral: how are evolutionary processes affected by the competency of the material? Especially important is the inclusion of the middle layer between the genotype and phenotype. Mutation operates on genomes, and selection operates on phenotypic performance, but in most organisms, the connection between them is not linear or shallow—instead, developmental physiology provides a deep reservoir of dynamics that strongly alter the process. As a contribution to the study of evolvability and developmental mechanisms potentiating it [36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53], we established a virtual embryogeny [54] system focused on anatomical morphogenesis by cells. In this minimal model of morphogenesis, we were able to study the effects of different degrees of cellular competency on the evolutionary process.

The standard understanding of (Neo-Darwinian) evolution is schematized in Figure 1A: The genome of an organism encodes aspects of the organism’s cellular hardware, which together define the phenotypic traits. Given a competitive environment, natural selection then favors organisms with advantageous traits, and thus, on average, the corresponding genes tend to get passed on to the next generations more frequently. Random mutations may occur, consequently changing traits in the offspring phenotype. This affects the offspring’s reproductive success during the selection stage and, in that way, good traits prevail, and bad ones perish over time.

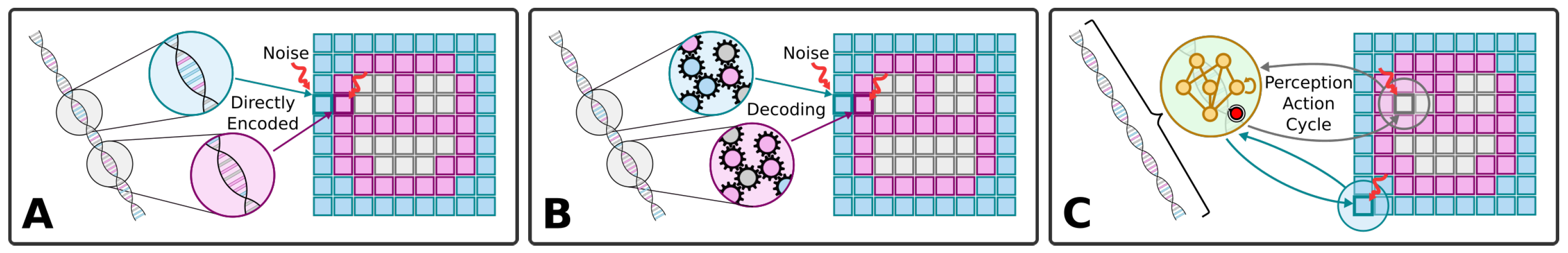

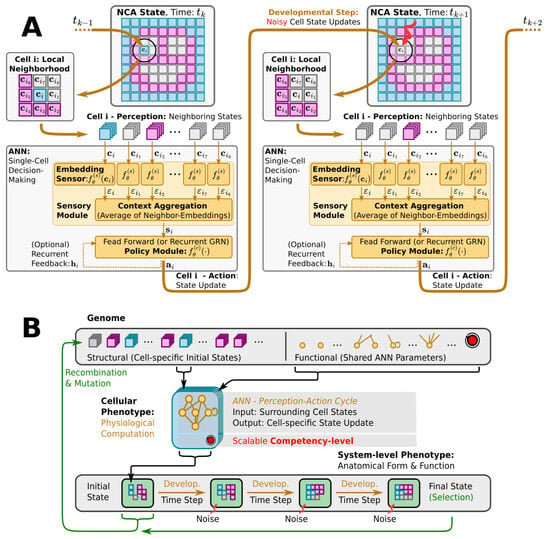

Figure 1.

(A–C) Illustration of different ways of genetically encoding a phenotype, here a two-dimensional smiley-face tissue composed of single cells. (A) Direct encoding: Each gene encodes a specific phenotypic trait, here, of each specific cell type of the tissue, colored blue, pink, and white. (B) Indirect encoding: A deterministic mapping between the genome and different phenotypic traits, here, again of each cell type (shown for completeness, but not investigated here due to reasons discussed in Section 5). (C) Multi-scale competency architecture: Encoding of functional parameters of the uni-cellular agents which self-assemble a target pattern via successive local perception–action cycles [1] (as detailed in Figure 2A). In all three panels, we schematically illustrate, from left to right, the genome, the respective encoding mechanism, and the corresponding phenotype; colors indicate cell types, and arrows indicate the flow of information and environmental noise, affecting each cell during the developmental process.

This view has been revised by Waddington [55,56], and more recent works [57,58,59,60,61,62,63,64,65,66], and has been the subject of vigorous debate [40,63,67,68,69,70,71,72] with respect to its capabilities for discovery, its optimal locus of control, and the degree to which various aspects are random (uncorrelated to the probability of future fitness improvements). Important open questions concern ways in which the properties of development—the layer between the mutated genotype and the selected phenotype—are evolved and in turn affect the evolutionary process [36,39,45,46,73,74,75,76,77,78]. Specifically, significant work has been conducted at the interface of evolution and learning—selectionist accounts of change and variational accounts of change respectively [30,61,62,66,79,80,81,82,83,84,85]. Significant progress has been made on the question of how evolution produces agents with behavioral competency in diverse problem spaces [17,86,87,88]. We have focused on a particular kind of competency—that of navigating anatomical morphospace [3,12,89,90]. More specifically, we here investigate in silico the evolutionary implications of the self-orchestrated process of morphogenesis, where local actions of single cells need to be aligned with a global policy of a multi-cellular collective to guide the formation of a large-scale tissue, in turn affecting the underlying evolutionary process. Work on developmental plasticity, chimeras, synthetic biobots, and the ability to overcome novel stressors has highlighted ways in which evolution seems to give rise to problem-solving machines, not fixed solutions to specific environments [91].

Thus, the problem-solving capacities of development, regeneration, and remodeling ensure that in many (perhaps most) kinds of organisms, the mapping from genotype to phenotype is not merely complex and indirect [92] (as schematized in Figure 1B) but actually enables evolution to search the space of behavior-shaping signals, not microstates, and exploit the modularity and triggers of complex downstream responses (cf. Figure 1C). We have previously argued that both evolution and human bioengineers face a range of unique problems and opportunities when dealing with the agential material of life—not passive or even just active matter but a substrate that has problem-solving competencies and agendas at many scales [93,94]. What selection sees is not the actual quality of the genome but the quality of the form and function of the flexible physiological “software” that runs on the genomically specified molecular hardware as schematically illustrated in Figure 2. This in turn suggests that the actual progress of evolution should be significantly impacted by the degree and kind of competency in the developmental architecture. Prior work has suggested a powerful feedback loop between the evolution of morphogenetic problem-solving and the effects of these competencies on the ability of evolutionary search to produce adaptive complexity [1,35,95]. Here, we construct and analyze a new model of evolving morphogenesis to study how different competency architectures within and among cells impact evolutionary metrics such as rate, robustness to noise, and transferability to new environmental challenges.

Figure 2.

(A) Detailed information flow-chart of the perception–action cycle of a particular single cell agent, labeled i, in a neural cellular automaton (NCA)-based multi-scale competency architecture (cf. Figure 1C and Section 3.1): Starting from a multi-cellular phenotype configuration at time $t_k$ (left smiley-face panel), and following the thick orange arrows, each cell i perceives cell state information about its respective local neighborhood of the surrounding tissue (respectively labeled). This input is passed through an artificial neural network (ANN), substituting the internal decision-making machinery of a single cell, until an action output is proposed that induces a (noisy) cell state update in the next developmental step at time $t_{k+1}$ (details on the labeled internal ANN operations and ANN architectures are introduced later in Section 3.1 and Appendix A). (B) Schematic illustration, following Ref. [1], of the evolution of a morphogenesis process with a multi-scale competency architecture acting as the developmental layer between genotypes and phenotypes (see Section 3.1 and Section 3.2 for details): The genotype (top) encodes the structural (initial cell states) and functional parts (decision-making machinery) of a uni-cellular phenotype (center). The cell’s decision-making machinery is represented as a potentially recurrent ANN (yellow/orange graph) with an adjustable competency level (red knob). Through repeated local interactions (perception–action cycles; detailed in panel (A)), the multi-cellular collective self-orchestrates the iterative process of morphogenesis and forms a final target pattern, i.e., a system-level phenotype after a fixed number of developmental steps (bottom left to right) while being subjected to noisy cell state updates at each step (red arrows). The evolutionary process solely selects at the level of the system-level phenotypes (labeled Final State at the bottom right). Based on a phenotypic fitness criterion, the corresponding genotypes, composed of the initial cell states (bottom left) and the functional ANN parameters (top right), are subject to evolutionary reproduction (recombination and mutation operations) to form the next generation of cellular phenotypes that successively “compute” the corresponding system-level phenotypes via morphogenesis, and so on.

To quantitatively study the effects that different levels of competency of the decision-making centers in a multi-scale competency architecture have on the process of evolution, we here rely on tools from the research field of Artificial Life [96], which furthers computational and cybernetic models that mimic life-like behavior based on ideas taken from biology; a simple example is cellular automata (CAs) [97]. In such CAs, the (numerical) states of localized cells, organized on a discrete spatial grid, change in time via local update rules. Although typically rather simple “hardcoded” update rules are employed, CAs often display complex dynamics (cf. Conway’s Game of Life [98] or Lenia [99]) but are not known to exhibit homeostatic (closed-loop) activity. An extension of CAs, termed neural cellular automata (NCAs) [100], utilizes artificial neural networks (ANNs) as more flexible trainable update rules, aiming to model the internal decision-making machinery of biological cells. Employing machine learning methods, such NCAs have been trained to perform self-orchestrated pattern formation (notably, of images from a single “seed” cell) [101], and even the co-evolution of a rigid robot’s morphology and its controller has been demonstrated with such NCAs [102].

NCAs exhibit a striking resemblance to the genome-based multi-scale competency architecture of biological life [102] as illustrated in Figure 2: an organism’s entire building plan is encoded in its genome (corresponding to the NCA parameters), while its cells collectively run the self-orchestrated developmental program of morphogenesis (realized by the NCA layout and ANN architecture) via perception–action cycles at the uni-cellular level (cell state updates in the NCA, cf. Figure 2A). Starting from an initial cell state configuration of the NCA, the details of a virtual organism are then, step by step, “refined” in a collective self-organizing growth phase on the cellular level, and maintained against cell state errors later on in the virtual organism’s lifetime. Thus, a single NCA, once trained, guides the growth and integrity of a virtual organism’s tissue via intracellular information processing and intercellular communication, imitating in silico the multi-scale competency-based process of morphogenesis and morphostasis. (Notably, although an NCA update function—the cells’ ANN—is trainable in principle, current approaches pre-train (or evolve, as in our case) the ANN parameters to subsequently study the NCA behavior (such as simulated morphogenesis). Thus, an NCA’s self-orchestrated (developmental) program is defined by a particular set of ANN parameters rather than being acquired during the lifetime of the NCA. Here, we investigate the efficiency at which an evolutionary process arrives at satisfying parameters under various conditions.)

Here, we deploy a swarm of virtual uni-cellular agents on the spatial grid of an NCA. As illustrated in Figure 2A, each uni-cellular agent’s internal decision-making machinery is modeled by an ANN that allows each agent to independently perceive the cell states of its adjacent neighbors on the grid and propose cell state update actions to regulate its own cell state over time. The collective of cells thereby forms a spatial pattern or tissue of cell states on the NCA via local communication rules.

We utilize evolutionary algorithms (EAs) [103] as a simulated evolutionary process to optimize the parameters of such NCAs, so the uni-cellular agents evolve to collectively self-assemble a predefined target pattern of cell states in a fixed number of developmental steps; see Figure 2B for a flow-chart of the evolutionary process. We explicitly separate the NCA parameters into a structural and a functional part. The structural parameters describe the initial cell state, and the functional parameters the weights and biases of the ANN of each agent as illustrated by the “Genome” in Figure 2B. Both the structural and functional parts of the genome are compiled into a swarm of uni-cellular phenotypes on the grid of the NCA. Thus, starting from an initial cell state configuration, given by the structural part of the genome, the uni-cellular agents of the NCA run the developmental program of morphogenesis via successive perception–action cycles (see Figure 2A) to self-assemble in successive developmental steps a system-level phenotype, i.e., a two-dimensional pattern of cell states on the NCA. The deviation of these final cell state configurations from a desired target pattern—here, a Czech flag pattern or smiley-face pattern reminiscent of that of the amphibian craniofacial pre-pattern [104]—defines the phenotypic fitness score of a particular NCA realization. Based on an entire population of NCAs, and on the corresponding fitness scores, the EA successively samples the genomes of the next generation of NCAs, which, over time, evolve to reliably self-assemble the target pattern.

The conceptually simple process of cell state updates of NCAs and the ANN-based modeling of the uni-cellular decision-making allow us to interfere with (I) the reliability of the cell state update executions and (II) the computational capacity of the ANNs that guide each cell’s decision-making. To vary the former (I), we introduce a decision-making probability $P_D$ that specifies the probability at which a proposed update of each individual cell is executed in the environment (or omitted otherwise). Thus, by tuning the decision-making probability from $P_D = 0$ to $P_D = 1$, we can continuously vary the behavior of the NCA from a direct-encoding scheme without competency to a multi-scale competency architecture with perfect reliability in cell state update executions.
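The effect of the decision-making probability can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the implementation used in the study: the function name `execute_updates`, the argument name `p_d`, scalar cell states, and the additive form of the update are all choices made for this example.

```python
import random


def execute_updates(states, proposals, p_d, rng):
    """Apply each cell's proposed update with probability p_d, otherwise skip it.

    states    -- list of current (scalar) cell states
    proposals -- list of proposed state changes, one per cell
    p_d       -- decision-making probability in [0, 1] (hypothetical name)
    rng       -- random.Random instance, so runs are reproducible
    """
    new_states = []
    for state, proposal in zip(states, proposals):
        if rng.random() < p_d:
            # The proposed update is executed in the environment.
            new_states.append(state + proposal)
        else:
            # The proposed update is omitted; the old state persists.
            new_states.append(state)
    return new_states
```

With `p_d = 0` the initial cell states pass through development unchanged, which corresponds to the direct-encoding limit; with `p_d = 1` every proposal is executed.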

To systematically vary the computational capacity of the involved ANNs (II), we introduce independent copies of a particular sub-module of the uni-cellular agents’ ANNs, i.e., of the policy module illustrated in Figure 2A (see Section 3.1 and Section 4.2 and Appendix A for details on the ANN architectures). This increases the number of evolvable parameters of the ANNs, which are responsible for performing the same operation, namely, interpreting the cell’s local environment and proposing a cell state update action. Thus, by taking the average output of all redundant policy modules of a single agent, a cell’s decision-making can be biased by the several redundant paths through which signals are transmitted in the ANN, inspired by error-correcting codes [105,106,107]. We explicitly define a redundancy number R that specifies how many redundant copies of the policy module are used in the ANNs of the cells of the NCA.
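As a rough sketch of the redundancy idea, the snippet below averages the proposals of R independent policy modules. Each module is reduced to a single linear map purely for brevity; the function name `averaged_action` and the `(weights, bias)` representation are assumptions of this example, and the actual policy modules are ANN sub-modules as described in Appendix A.

```python
def averaged_action(context, policy_modules):
    """Average the proposals of R redundant policy modules.

    context        -- list of floats describing the cell's local environment
    policy_modules -- list of R (weights, bias) pairs; each pair is one
                      independent linear policy (a stand-in for an ANN sub-module)
    """
    proposals = []
    for weights, bias in policy_modules:
        # Every module interprets the same input and proposes its own action.
        proposal = sum(w * x for w, x in zip(weights, context)) + bias
        proposals.append(proposal)
    # The cell acts on the mean proposal of all R modules.
    return sum(proposals) / len(proposals)
```

Here the redundancy number R is simply `len(policy_modules)`: adding modules adds evolvable parameters without changing the input or output dimensions of the cell's decision.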

The decision-making probability (I) and the redundancy number (II) represent two levels of competency in our system (schematically illustrated by the red arrow in Figure 1C and Figure 2B), which we can scale continuously (I) or discretely (II) to systematically tune the behavior of an NCA. Throughout the manuscript, we refer to these two parameters as “competency levels”, but we would like to stress that many more options would have been possible to vary the competency in our system. For instance, the particular ANN architecture can have large effects on the competency of the uni-cellular agents; a systematic investigation thereof is out of the scope of this work. Here, we utilize two particular ANN architectures, one based on a feedforward (FF) and one based on a recurrent ANN architecture [108] that is inspired by gene regulatory networks (GRNs) [109], which we thus term recurrent gene regulatory network (RGRN); see Appendix A for details.

To study the effects of different competency levels of the decision-making centers in a multi-scale competency architecture on the underlying evolutionary process of a morphogenesis task, we systematically vary in large-scale simulations the decision-making probability (I) and the redundancy number (II) of NCAs with FF and RGRN ANN architectures. Furthermore, we expose the corresponding NCAs to different noise conditions during cell state updates (III) and perform several statistically independent evolutionary searches at each parameter combination (I–III) to investigate the performance of the evolutionary process of finding solutions to such noisy pattern formation tasks.

The manuscript is organized as follows: In Section 3, we describe the numerical and computational methods applied herein. More specifically, we introduce NCAs in Section 3.1, and describe the neuroevolution approach used to optimize the NCAs’ ANN parameters based on ideas of evolution and natural selection via EAs in Section 3.2. We specify the particular morphogenetic problem we primarily focused on, the Czech flag task, in Section 4.1, and compare in Section 4.2 the efficiency of evolving the target pattern via a direct encoding scheme and a multi-scale competency architecture. In Section 4.3, we functionally define and systematically vary the different tunable competency levels in our system to illustrate the evolutionary implications of utilizing a multi-scale competency architecture rather than a direct encoding scheme for morphogenesis tasks. We then study the effects of allowing the evolutionary process to afford competency as a gene during optimization in Section 4.4, and eventually investigate our multi-scale competency approach for robustness and generalizability regarding system parameter changes in Section 4.5, and for transferability to modified target patterns in Section 4.6. We conclude in Section 5, and attach an appendix.

2. General Summary

Biological systems are composed of layers of organization, each level providing the foundation for the next higher level of abstraction: membranes, DNA, and proteins form cells, which then collectively organize into tissues and, in further hierarchical steps, into organs, bodies, swarms, ecosystems, etc. Each of these layers has a degree of ability to adapt in real-time to new conditions to establish and maintain specific outcomes in terms of physiological, metabolic, transcriptional, and anatomical spaces. In other words, evolution works with material that is not passive matter but rather has a degree of competency: an agential material that forms the layer between the genotype and the phenotype. Many scientific studies have been dedicated to investigating how evolution gives rise to such intriguing problem-solving machines we call organisms. In this study, we ask the reverse question: what is it like to evolve over such a material vs. one that passively maps genotypes into the form and function that selection operates over, and how does it affect the process of evolution itself? We test this in silico by utilizing evolutionary algorithms to adapt the behavior of a swarm of virtual uni-cellular agents in large-scale simulations of virtual embryos. In our minimal model, the cells collectively self-assemble a predefined target tissue on a neural cellular automaton. We find that competency at the cellular level of our multi-scale model system strongly affects the resulting evolutionary process, as well as the generalizability, evolvability, and transferability of the evolved solutions, suggesting the profound evolutionary implications of the highly intricate multi-scale competency architecture of biological life.

3. Methods

3.1. Neural Cellular Automaton: A Multi-Agent Model for Morphogenesis

Cellular automata (CAs) were introduced by von Neumann to study self-replicating machines [97] and are simple models for Artificial Life [96]. In CAs, a discrete spatial grid of cells is maintained over time, each cell i being attributed a binary, integer, real, or even vector-valued state $\mathbf{c}_i(t_k)$ at each step in time $t_k$. The cell states evolve over time via local update rules, $\mathbf{c}_i(t_{k+1}) = f(\mathbf{C}_{N_i}(t_k))$, as a function of a cell’s own state and the numerical states of its neighboring cells on the grid, which we collect in the matrix $\mathbf{C}_{N_i}(t_k)$, with $N_i$ denoting the neighborhood of cell i. Although typically rather simple “hardcoded” (i.e., predefined) update rules are employed, CAs often display complex dynamics and can even be utilized for universal computation (cf. Conway’s Game of Life [98] or Wolfram’s rule 110 [110,111]).
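To make the notion of a hardcoded local update rule concrete, the following sketch implements one synchronous step of an elementary (one-dimensional, binary) CA, using Wolfram's rule 110 as the default. The function name `ca_step` and the periodic boundary conditions are assumptions of this example.

```python
def ca_step(cells, rule=110):
    """One synchronous update of an elementary (1D, binary) cellular automaton.

    Each cell looks at (left, self, right) and reads its next state from the
    bits of `rule` (Wolfram numbering). Periodic boundaries are an assumption.
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = 4 * left + 2 * center + right   # neighborhood as a 3-bit number
        new_cells.append((rule >> index) & 1)   # look up the hardcoded rule
    return new_cells
```

Every cell applies the same fixed lookup table, which is exactly the part that an NCA replaces with a trainable function.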

Neural cellular automata (NCAs) [100] extend CAs by replacing the local update rule with more flexible [112] artificial neural networks (ANNs) $f_\theta$, where $\theta$ denotes the set of trainable parameters of the ANN (see Appendix A for details). Employing Machine Learning, such NCAs have been trained to perform self-orchestrated pattern formation [101] (notably, of RGB images from a single “seed” pixel), and even the co-evolution of a rigid robot’s morphology and its controller has been demonstrated recently with NCAs in silico [102]. Such self-orchestrated pattern formation is reminiscent of the self-regulated development of a biological organism, from a single fertilized egg cell to a complex anatomical form. Thus, NCAs have been proposed as toy models for morphogenesis [101].

An NCA basically represents a grid of cells that are equipped with identical ANNs, each perceiving the numerical cell states of its host’s local environment at time step $t_k$, $\mathbf{C}_{N_i}(t_k)$, and proposing actions, $\mathbf{a}_i(t_k) = f_\theta(\mathbf{C}_{N_i}(t_k))$, to regulate its own cell state

$$\mathbf{c}_i(t_{k+1}) = \mathbf{c}_i(t_k) + \mathbf{a}_i(t_k) + \boldsymbol{\xi}_i(t_k) \tag{1}$$

and, in turn, the cell states of its neighbors, where the term $\boldsymbol{\xi}_i(t_k)$ accounts for potential noise in the environment during the process of morphogenesis. Thus, each cellular agent can only perceive the numerical states of its direct neighbors at an instant of time $t_k$ and, in turn, communicate with these neighbors via cell state updates $\mathbf{a}_i(t_k)$, following a policy that is approximated by an ANN with parameters $\theta$. Through the lens of Reinforcement Learning [113], an NCA can thus be understood as a trainable, locally communicating multi-agent system that can be utilized such that the collective of cells achieves a target system-level outcome (see Appendix B for details).
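A minimal sketch of such a noisy, locally communicating update is given below. It assumes scalar cell states, a Moore neighborhood with non-periodic boundaries, and an additive update of the form "old state plus proposed action plus Gaussian noise"; none of these choices is confirmed by the text above. The helper names `neighborhood`, `nca_step`, and `relax_to_mean` are hypothetical, and `relax_to_mean` is only a toy stand-in for the trained ANN.

```python
import random


def neighborhood(grid, row, col):
    """Own state first, then the states of all adjacent cells (Moore
    neighborhood, non-periodic boundaries; both are assumptions)."""
    n_rows, n_cols = len(grid), len(grid[0])
    states = [grid[row][col]]
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            r, c = row + d_row, col + d_col
            if (d_row, d_col) != (0, 0) and 0 <= r < n_rows and 0 <= c < n_cols:
                states.append(grid[r][c])
    return states


def nca_step(grid, policy, noise_std, rng):
    """Synchronous update: new state = old state + proposed action + noise."""
    new_grid = []
    for row in range(len(grid)):
        new_row = []
        for col in range(len(grid[0])):
            # Every cell runs the same policy on its own local neighborhood.
            action = policy(neighborhood(grid, row, col))
            noise = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
            new_row.append(grid[row][col] + action + noise)
        new_grid.append(new_row)
    return new_grid


def relax_to_mean(states):
    """Toy stand-in for the ANN: move the own state toward the local mean."""
    return sum(states) / len(states) - states[0]
```

Because all cells read the old grid and write to a new one, the update is synchronous; an asynchronous variant would update cells in place.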

In contrast to previous contributions of in silico morphogenesis experiments in NCAs [101], we here do not use standard convolutional filters in our ANN architectures but utilize ANNs that are permutation-invariant with respect to a cell’s neighbors (see Figure 2A for an illustration). Inspired by Ref. [114], this is achieved by partitioning a cell’s ANN into (i) a sensory part, which preprocesses the cell’s own state and the state of each neighboring cell separately into a respective sensor embedding. These neighbor-wise sensor embeddings are (ii) averaged into a cell’s context vector of fixed size s, which is then used as the input of (iii) a controller ANN, potentially with recurrent feedback connections, that eventually outputs the cell’s action; for details, we refer to Appendix A.

Due to the mean aggregation of a cell’s sensory embedding, each cell completely loses its ability to spatially distinguish between neighboring (and even its own) state inputs and thus fully integrates into the tissue locally. We would like to stress the close relation of our approach to the concept of breaking down the computational boundaries of a cell’s “Self” via forgetting [93] and to the scaling of goals from a single agent’s to a system-level objective [95].
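The permutation invariance that results from mean aggregation can be illustrated with a deliberately tiny policy. The sketch assumes scalar cell states, a one-layer `tanh` sensory map, and a linear controller without recurrence; the names `sensor_embedding` and `cell_action` are hypothetical, and the actual architectures are those described in Appendix A.

```python
import math


def sensor_embedding(state, weights, biases):
    """Embed one scalar cell state into a vector (tiny one-layer 'sensory' net)."""
    return [math.tanh(w * state + b) for w, b in zip(weights, biases)]


def cell_action(states, sensor_params, controller_weights):
    """Permutation-invariant policy: embed each state, average, then decide.

    states             -- own state and neighbor states, in any order
    sensor_params      -- (weights, biases) shared by all inputs
    controller_weights -- linear read-out applied to the context vector
    """
    weights, biases = sensor_params
    embeddings = [sensor_embedding(s, weights, biases) for s in states]
    size = len(weights)
    # Mean over inputs; fsum is order-independent, so shuffling cannot change it.
    context = [math.fsum(e[k] for e in embeddings) / len(embeddings)
               for k in range(size)]
    return sum(w * c for w, c in zip(controller_weights, context))
```

Since the same sensory map is applied to every input and only the mean reaches the controller, reordering the inputs (including swapping the cell's own state with a neighbor's) leaves the action unchanged.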

To model the developmental process of morphogenesis, we here employ NCAs on a two-dimensional square grid with the objective that all cells of the grid assume their correct, predefined target cell type after a fixed number of $t_D$ developmental time steps, starting from an initial cell state configuration $\mathbf{c}_i(t_0)$. We attribute a number of $n_\tau$ elements of the $n_c$-dimensional cell state of an NCA as indicators for expressing one of $n_\tau$ discrete cell types, such that $\mathbf{c}_i = (\mathbf{q}_i, \mathbf{h}_i)$ with indicator vector $\mathbf{q}_i$; the remaining elements of the cell state, $\mathbf{h}_i$, represent hidden states of a cell that can be utilized by the NCA for intercellular communication. We explicitly define each cell’s type $\tau_i(t_k)$ as the argument (i.e., the index) of the maximum element of the $n_\tau$-dimensional indicator vector $\mathbf{q}_i(t_k)$:

$$\tau_i(t_k) = \underset{m \in \{1, \dots, n_\tau\}}{\arg\max}\; q_{i,m}(t_k). \tag{2}$$

Training an NCA to assemble a predefined target pattern (realized by a set of target cell types $\hat{\tau}_i$ for the entire grid) thus boils down to finding a suitable set of NCA parameters (cf. “Genotype” in Figure 2B) that minimizes the deviation of each cell i’s type $\tau_i(t_D)$ from $\hat{\tau}_i$ after $t_D$ developmental time steps, i.e., after the developmental stage of the virtual organism (cf. “System-level Phenotype” in Figure 2B, from left to right, and details below). Here, we are interested in the evolutionary implications of biologically inspired multi-scale competency architectures, the latter being modeled by our morphogenetic NCA implementation. We thus introduce in Section 3.2, and utilize in Section 4, evolutionary algorithms to evolve suitable sets of NCA parameters that maximize the fitness score based on comparing the “final” cell types of the NCA, $\tau_i(t_D)$, with the predefined target cell types $\hat{\tau}_i$.
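The cell-type readout and the comparison with a target pattern can be sketched as follows. The example assumes that the indicator elements occupy the first `n_types` entries of each cell state, which the text does not specify; the function names `cell_type` and `count_mismatches` are likewise hypothetical.

```python
def cell_type(cell_state, n_types):
    """Index of the largest indicator element; the first n_types entries are
    assumed to be the indicators, the rest are hidden channels."""
    indicators = cell_state[:n_types]
    return max(range(n_types), key=lambda k: indicators[k])


def count_mismatches(cell_states, target_types, n_types):
    """Number of cells whose expressed type differs from its target type."""
    return sum(cell_type(state, n_types) != target
               for state, target in zip(cell_states, target_types))
```

Hidden channels never enter the readout, so a cell can carry arbitrary values there for communication without changing its expressed type.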

3.2. Neuroevolution of NCAs: An Evolutionary Algorithm Approach to Morphogenesis

Evolutionary algorithms (EAs) are heuristic optimization algorithms that maintain and optimize a set, i.e., a population $\mathcal{X} = \{x_1, \dots, x_{N_P}\}$ of parameters $x_j$, also termed individuals, over successive generations to maximize an objective function, or a fitness score $r(x_j)$. Inspired by the ideas of natural selection and the DNA-based reproduction machinery of biological life, EAs (i) predominantly select the high-fitness individuals of a given population for reproduction, and utilize (ii) crossover and (iii) mutation operations to generate new offspring by (ii) merging the genomic material of two high-quality individuals from the current population, $x_o = x_a \oplus x_b$, and (iii) occasionally mutating the offspring genomes by adding (typically Gaussian) noise to the parameters; the $\oplus$ symbol indicates a genuine merging operation of two genomes, which may depend on the particular EA implementation. In that way, a population of individuals is guided towards high-fitness regions in the parameter space, typically over many generations of successive selection and reproduction cycles (i)–(iii).
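The selection, crossover, and mutation cycle (i)–(iii) can be sketched as a small generic EA. This is a plain elitist scheme with uniform crossover, written only to illustrate the three operations; it is not the CMA-ES used in this work, and the function name `evolve` and all default values are assumptions of the example.

```python
import random


def evolve(fitness, n_params, pop_size=20, n_parents=5, sigma=0.1,
           generations=50, seed=0):
    """Minimal evolutionary algorithm: (i) selection, (ii) crossover, (iii) mutation."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(n_params)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        # (i) keep the fittest individuals as parents
        parents = sorted(population, key=fitness, reverse=True)[:n_parents]
        offspring = []
        while len(offspring) < pop_size - n_parents:
            mother, father = rng.sample(parents, 2)
            # (ii) uniform crossover: each gene comes from one of the two parents
            child = [rng.choice(pair) for pair in zip(mother, father)]
            # (iii) Gaussian mutation of every gene
            child = [gene + rng.gauss(0.0, sigma) for gene in child]
            offspring.append(child)
        # Elitism: parents survive unchanged, so the best score never drops.
        population = parents + offspring
    return max(population, key=fitness)
```

For instance, `evolve(lambda x: -sum(v * v for v in x), n_params=3)` searches for a parameter vector close to the origin.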

In contrast to biological life, many use cases of EAs do not require a distinction between individuals in the parameter space, i.e., genotypes $g_j$, and the corresponding organisms in their natural environment, i.e., phenotypes $p_j$: while the genetic crossover and mutation operations of biological reproduction rely on bio-molecular mechanisms at the level of RNA and DNA, i.e., are performed in the genotype space, selection typically happens at the much more abstract level of an organism’s natural environment, i.e., in the phenotype space. Carrying this through computationally can be resource-demanding, depending on the complexity of a simulated environment. Nevertheless, to address the asymmetry between genotypes and phenotypes in multi-scale competency architectures, it is essential to evaluate the fitness score of the EA in the phenotype space instead of the genotype space.

We explicitly separate the genotype and phenotype representations of individuals by introducing a biologically inspired developmental layer [1] in between genotypes and phenotypes as illustrated in Figure 2. More precisely, we follow Section 3.1 and model the developmental process of morphogenesis in silico by utilizing NCAs: we treat an NCA j’s parameters, such as the set of initial cell states $\{\mathbf{c}_i(t_0)\}$ and the corresponding ANN parameters $\theta_j$, as the (virtual) organism’s genome,

$$g_j = \left(g_j^{(S)}, g_j^{(F)}\right) = \left(\{\mathbf{c}_i(t_0)\}, \theta_j\right), \tag{3}$$

explicitly partitioning the genome into a structural (S) and a functional (F) part, as indicated by the superscripts. We then perform a fixed number of $t_D$ developmental steps employing Equation (1) and interpret the corresponding set of “final” cell types of the entire NCA, cf. Equation (2), as the mature phenotype,

$$p_j = \{\tau_i(t_D)\}, \tag{4}$$

representing a two-dimensional tissue of cells.

In an effort to evolve the parameters of an NCA j to achieve the morphogenesis of a two-dimensional spatial pattern of cell types that resembles a pattern of predefined target cell types of a total of cells on an square grid (see Section 3.1), we define the phenotype-based fitness score as

where (i) is the number of correctly assumed cell types after developmental steps, (ii) is the number of time steps at which the entire target cell type pattern is correctly assumed, i.e., whenever for all i, and (iii) is the number of successive time steps and , where all cell types stagnate, i.e., where for all i. With Equation (5), we thus reward the entire NCA j by counting all correctly assumed cell types after developmental steps (while discounting all incorrect cell types ), we reward maintaining the target pattern over time with a factor of , and discount a stagnation of a suboptimal pattern over time by a factor of . We consider the problem solved if a final fitness score of is reached. Notably, there is no explicit fitness or reward feedback at the level of the uni-cellular agents in our system; the fitness score is solely used as the selection criterion for sampling the next evolutionary generations, so the cellular collective needs to evolve an intrinsic signaling mechanism to successfully perform the requested morphogenesis task.
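The verbal description of the fitness score can be made concrete with the following Python sketch. It is an assumed linear combination of the three counts (i) to (iii) and does not claim to reproduce Equation (5) exactly. The function name, the weights `w_target` and `w_stagnation`, their default values, and the choice to count stagnation only while the pattern is off-target are illustrative assumptions.

```python
def phenotype_fitness(types_over_time, target, w_target=0.25, w_stagnation=0.25):
    """Score one developmental run.

    types_over_time: list of cell-type lists, one per time step (last = final).
    target:          list of target cell types, same length as each step.
    """
    final = types_over_time[-1]
    # (i) Correct cell types at the end of development, minus incorrect ones.
    n_correct = sum(c == t for c, t in zip(final, target))
    n_incorrect = len(target) - n_correct
    # (ii) Number of time steps at which the whole target pattern is matched.
    on_target = [list(step) == list(target) for step in types_over_time]
    n_target_steps = sum(on_target)
    # (iii) Successive steps with no change in any cell type; counted here
    # only while the pattern is suboptimal (assumption of this sketch).
    n_stagnation = sum(
        1
        for k in range(len(types_over_time) - 1)
        if list(types_over_time[k]) == list(types_over_time[k + 1])
        and not on_target[k + 1]
    )
    return (n_correct - n_incorrect) + w_target * n_target_steps - w_stagnation * n_stagnation
```

In this form, a run that ends on the target pattern scores at least the number of cells, and holding the pattern for additional time steps raises the score further.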

The here proposed setting of genotypes , corresponding phenotypes , and associated fitness scores , given by Equations (3)–(5), respectively, can be used in combination with any black-box evolutionary or genetic algorithm. We rely on the well-established Covariance Matrix Adaptation Evolutionary Strategy (CMA-ES) [103] to simultaneously evolve the set of initial cell state configurations (i.e., structural genes, ) and the set of corresponding ANN parameters of an NCA (i.e., functional genes, ) with the objective of the purely self-orchestrated formation of two-dimensional spatial tissue as illustrated by Figure 2A and described by Equation (1).

4. Results

4.1. The System: An Agential Substrate Evolves to Self-Assemble the Czech Flag

Evolution works with an active rather than a passive substrate, i.e., with biological cells with agendas of their own [1]. Thus, at every stage of development during morphogenesis, collective decisions are made at vastly different length and time scales within an organism, guiding the formation of the mature phenotype. We aim to model exactly this process via the neural cellular automata (NCAs) described in Section 3.1 and employ evolutionary algorithms (EAs) to evolve the parameters of such NCAs so that the latter perform well on a target morphogenesis task (see Section 3.2). Without loss of generality, we consider an 8 × 8 Czech flag pattern (as a more complex version of the classic French flag problem of morphogenesis [115,116]) as the target pattern for our in silico morphogenesis experiments, with a fixed number of 64 cells in total, three distinct cell types (colored blue, white, and red, respectively) and hidden state, which renders the dimension of the NCA cell state . We use a square grid of cells with neighbors per cell and with fixed boundary conditions (see Appendix C for details).

Starting from a genotype defined in Equation (3), we perform a number of developmental steps per morphogenesis experiment to “grow” a phenotype , described by Equation (4), based on which the fitness score is evaluated following Equation (5) (see Figure 2B for an illustration of this process). During this entire process, we limit the magnitude of the numerical cell state values at all time steps to the interval , and, analogously, limit the magnitude of the proposed actions of each uni-cellular agent to the interval . This is achieved by clipping the numerical values of after a cell state update described by Equation (1), and the ANN outputs to the respective limits and . The noise level defined in Equation (1) is counted in units of the action limits and is thus sampled from a Gaussian distribution with zero mean and standard deviation independently for each of the cell state elements, thus affecting the cell state updates during development; the actual numerical values for the hyperparameters above turned out to be well suited for the problem at hand, especially to reasonably compare and discuss simulation results for the means of this contribution but are not crucial for the more general aspects of the evolutionary implications of multi-scale intelligence discussed here.
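The clipping and noise scheme described here can be sketched in Python as follows. The sketch assumes a simple additive update as a stand-in for Equation (1); the function names and the limit values of 1.0 are placeholders, not the values used in the experiments.

```python
import random

def clip(value, limit):
    """Restrict a value to the symmetric interval [-limit, limit]."""
    return max(-limit, min(limit, value))

def noisy_update(state, action, noise_level, rng, state_limit=1.0, action_limit=1.0):
    """One noisy cell state update with clipped actions and clipped states.

    state, action: lists with one entry per cell state element.
    noise_level:   noise strength in units of the action limit.
    """
    new_state = []
    for s, a in zip(state, action):
        a = clip(a, action_limit)  # limit the proposed action
        # Independent zero-mean Gaussian noise for each state element.
        xi = rng.gauss(0.0, noise_level * action_limit)
        new_state.append(clip(s + a + xi, state_limit))  # limit the state
    return new_state
```

With `noise_level=0.0` the update is deterministic, so `noisy_update([0.5], [3.0], 0.0, random.Random(0))` first clips the action to 1.0 and then clips the resulting state to the upper state limit.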

To study the effects of different types of decision-making machinery within a cell, we utilize two different architectures for the NCA artificial neural networks (ANNs): a feedforward (FF) ANN and a recurrent ANN inspired by gene regulatory networks [117,118,119,120] (RGRN). The terminology FF and RGRN stems from the respective agents’ Feedforward and Recurrent Gene Regulatory Network ANN controller layers (see Appendix A for details). Notably, the RGRN-agent architecture augments cells with an internal memory that is independent of their states in the NCA and thus cannot be accessed by the cells’ neighbors. To balance the length of the structural genome and functional genome defined in Equation (3), the two ANN architectures, FF and RGRN, are chosen such that the number of parameters and is roughly the same as the number of initial cell states . Thus, the ANNs utilized here (detailed in Table A1 of Appendix A) are tiny compared to Ref. [101].

For each experiment of evolving the parameters of an NCA, i.e., for each independent run of the EA, we typically utilize a population of individuals and a maximum number of generations. As the EA’s ultimate fitness criterion, we consider the average of statistically independent fitness scores of corresponding morphogenesis simulations starting from an individual j’s genotype and resulting in a corresponding phenotype after developmental steps; the developmental program described via Equation (1) is imperfect due to the developmental noise applied to the cell state updates and can thus lead to different, noise-induced phenotypic realizations. Typical values used here for the corresponding reward factors defined in Equation (5) are and . We consider the problem solved if a fitness of is reached, but since we reward individuals for maintaining the target pattern over time (via ), the maximum possible fitness score after developmental time steps is in this example. Further details about the hyperparameters of the EA and the computational resources used can be found in Appendix D.
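Averaging the fitness over several independent, noisy developmental runs can be sketched in Python as follows. The names `averaged_fitness` and `toy_simulation` are hypothetical, and the toy simulation merely stands in for a full morphogenesis run.

```python
import random

def averaged_fitness(genome, simulate_fitness, n_evaluations, rng):
    """Mean fitness over repeated, independently noisy developmental runs."""
    scores = [simulate_fitness(genome, rng) for _ in range(n_evaluations)]
    return sum(scores) / len(scores)

def toy_simulation(genome, rng):
    """Hypothetical stand-in: a deterministic score plus developmental noise."""
    return sum(genome) + rng.gauss(0.0, 1.0)
```

Calling `averaged_fitness(genome, toy_simulation, n_evaluations, random.Random(0))` with a larger `n_evaluations` reduces the variance of the selection criterion, at the cost of proportionally more simulations per individual.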

4.2. Direct vs. Multi-Scale Encoding: Cellular Competencies Affect System Level Evolvability

We aim in this contribution to investigate the evolutionary implications of biologically inspired multi-scale competency architectures [1,94]. Thus, we compare two qualitatively different evolutionary processes, both with the objective of morphogenetic pattern formation but whose genomes either (i) directly encode the phenotypic features of a two-dimensional target pattern (cf. Figure 1A), or (ii) encode the cellular competencies of a multi-scale architecture that gives rise to the same phenotypic features (cf. Figure 1C). Notably, different definitions of direct and indirect encodings in multi-agent systems have been used in the literature [54]. Here, we specifically distinguish between structural parameters in the search space that directly encode features of the phenotype, i.e., specific initial cell types and functional parameters that indirectly, or rather functionally, encode the target pattern by parametrizing the intercellular communication and intracellular information processing competencies of the NCA that facilitate the self-orchestrated pattern formation process.

If no ANN at all were present in our model, i.e., , and in the absence of noise , we would re-establish a direct mapping between the genotype and phenotype as , and thus a direct encoding of the target cell type pattern could be achieved . However, by default, we allow each cell to successively regulate its own cell state towards a target homeostatic value via an iterative perception–action cycle defined by Equation (1) and, moreover, to communicate in that way with neighboring cells. More specifically, each cell updates its cell state solely based on its own and the states of its adjacent neighbors, which, in turn, update their states based on their respective local environment. We explicitly avoid direct environmental feedback to the cells’ perception (such as an individual or collective reward signal) but fully restrict the NCA to intercellular communication (via cell state updates) and intracellular information processing. These uni-cellular agents thus exhibit a certain level of problem-solving competencies that can be utilized for the challenge at hand, in our case, for a collective system-level objective of forming a specific two-dimensional target pattern [95,101,102].

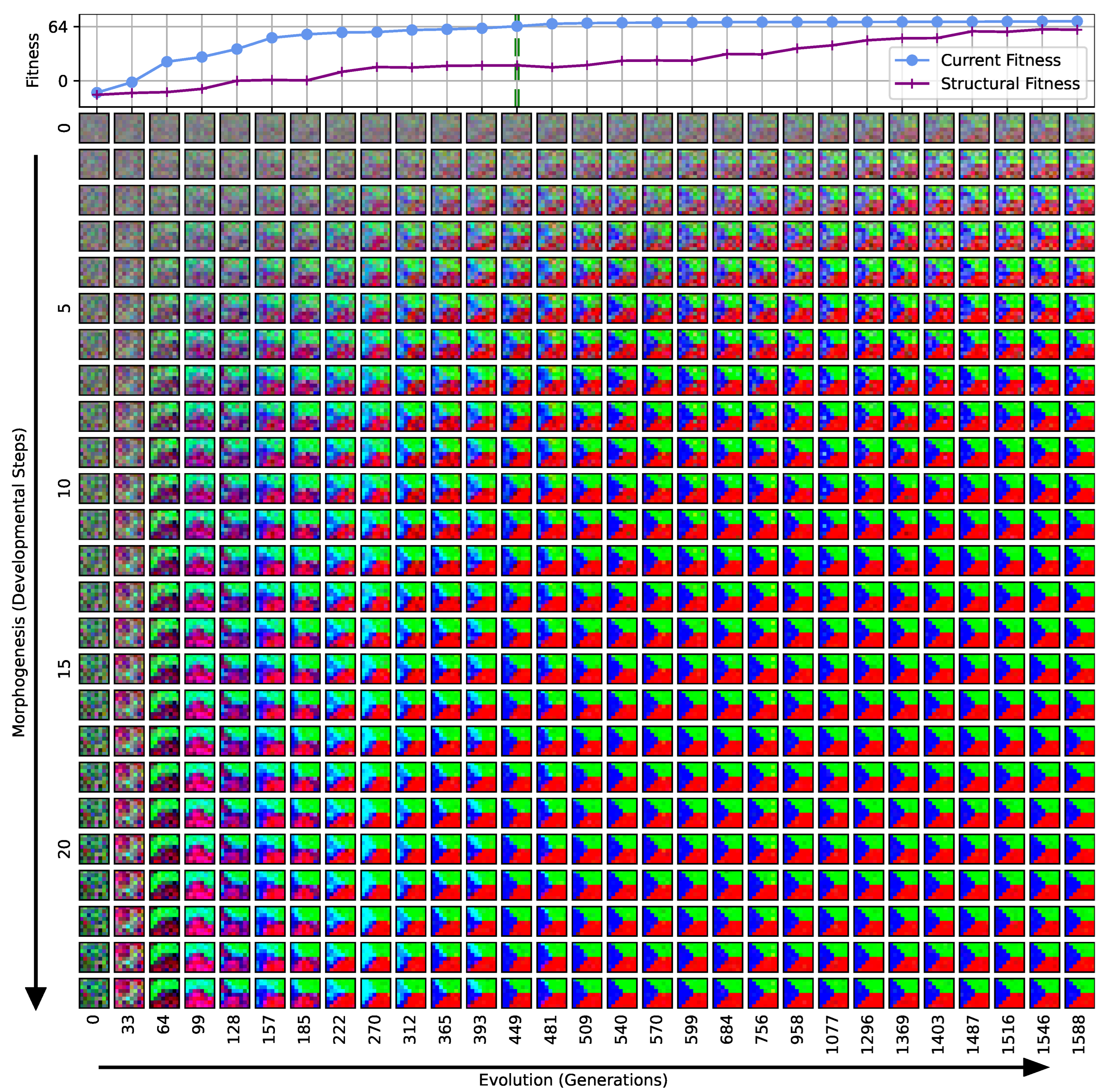

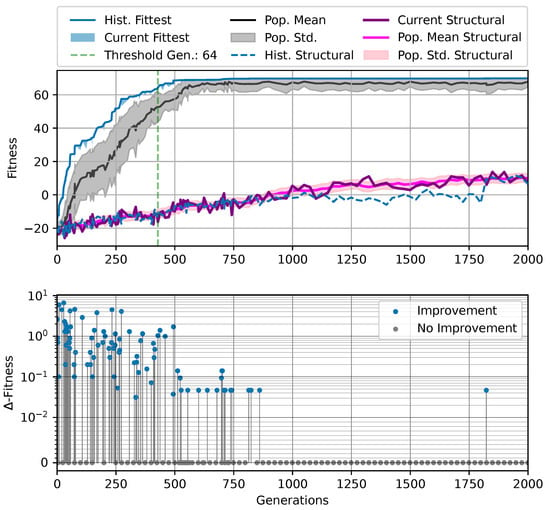

With the explicit partitioning of the genome into a structural part, i.e., , and a functional part, i.e., , we can study the effect of direct vs. multi-scale, or the competency-driven encoding of phenotypic traits in the process of evolution, and, moreover, quantitatively tackle the question of whether competent parts affect the process of evolution and evolvability. In any case, the initial cell state pattern is given by the structural part of the genome. Thus, in the absence of noise and without any active functional part in the genome, the set of initial cell states directly represents the final pattern, while otherwise, cell states can either be modified passively by noise in the system or actively through actions by the cells during the developmental stage. Thus, we employ CMA-ES [103] to either evolve the (i) structural, or both (ii) the structural and functional parts of the genome of an NCA simultaneously with the shared objective of self-assembling an Czech-flag pattern in developmental time steps in the presence of noise (cf. Section 3.2 and Section 4.1 for details). More explicitly, in case (i), we restrict the cell state update of the NCA by disabling all cell actions but formally keep the functional part of the genome in the evolutionary search. In turn, we allow the NCA in case (ii) to afford both the structural and functional parts of the genome, thus giving the evolutionary process the opportunity to prioritize one over the other. We thus bias the evolutionary process in case (i) to effectively search the space of direct phenotypic encodings, while keeping the search space dimensions balanced in both cases. The results of this experiment are presented in Figure 3.

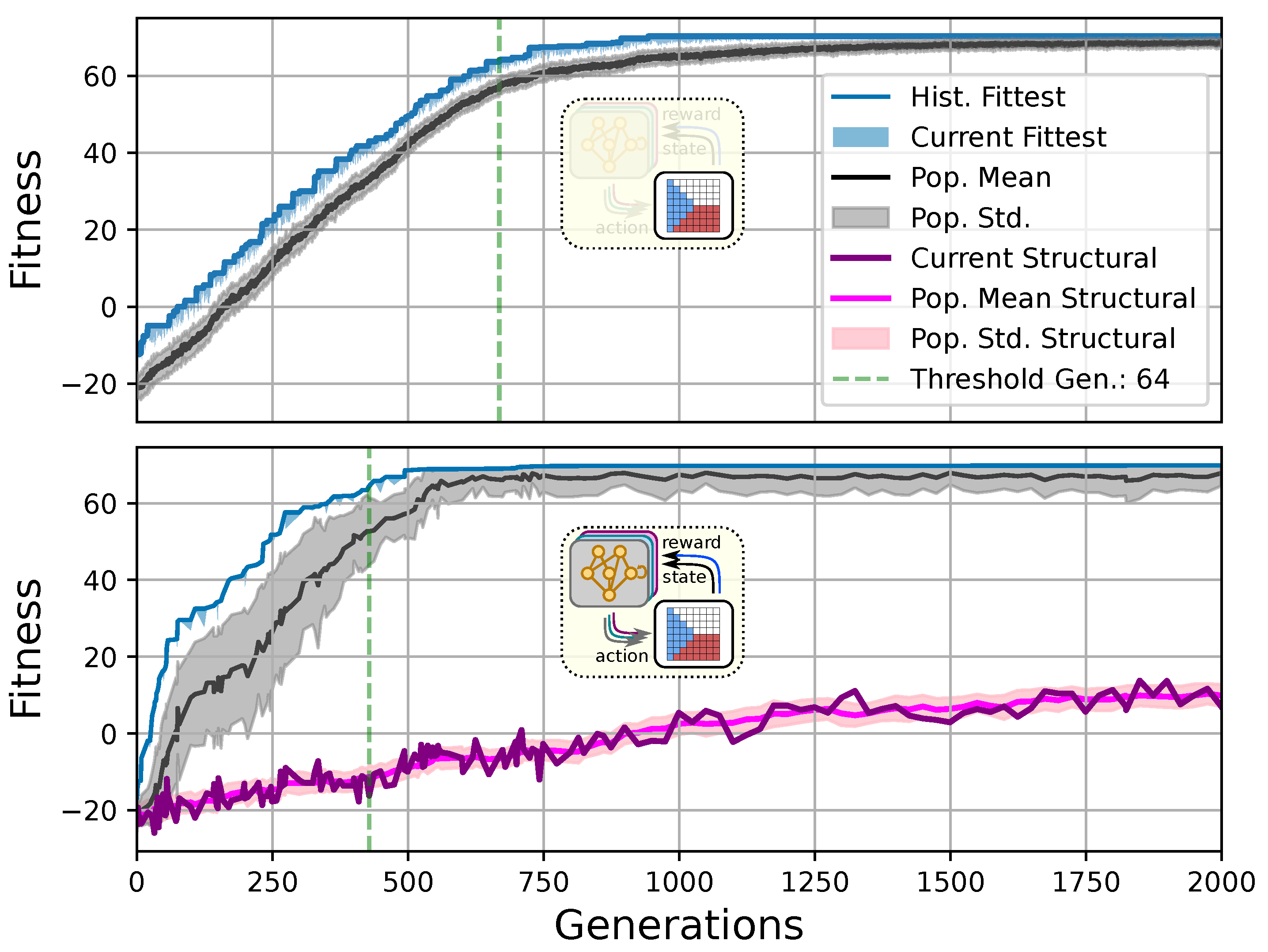

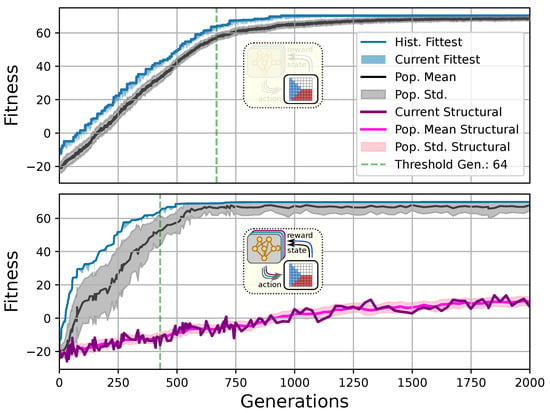

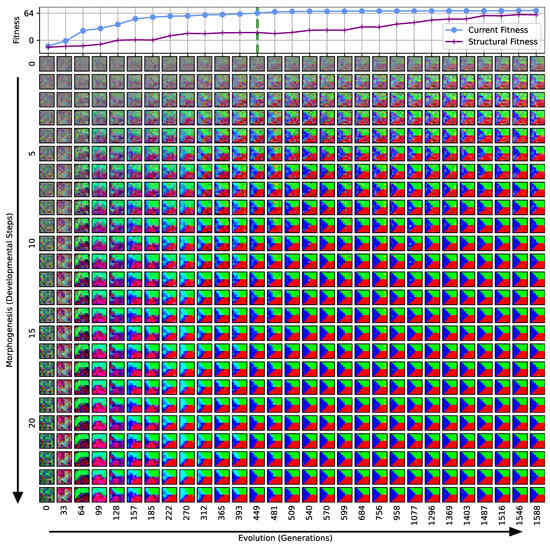

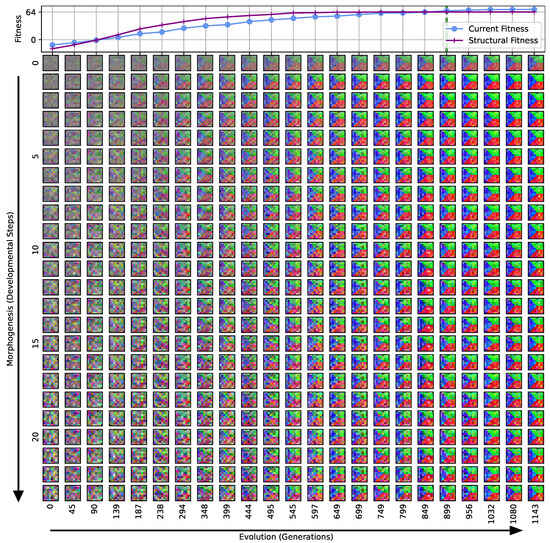

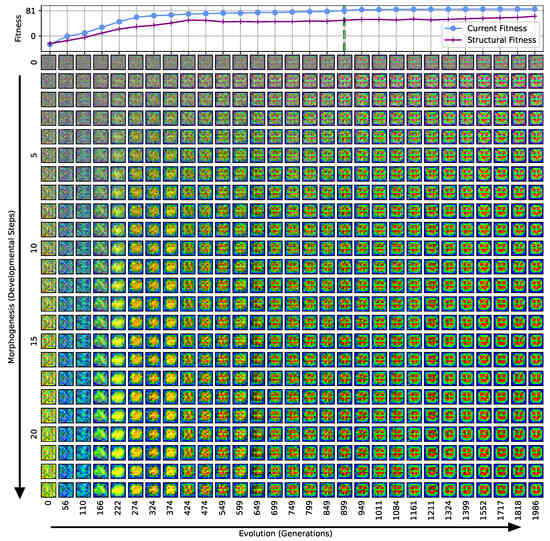

Figure 3.

Typical fitness trajectory over several generations of CMA-ES [103] of an NCA-based Czech-flag morphogenesis task without (top) and with competency (bottom), corresponding to (i) a direct and (ii) a multi-scale competency encoding of the target pattern as discussed in the text, representative of related experiments at similar system parameters (cf. Figure 4). We present the historically best (blue) and currently best (light blue) fitness value per generation, and the mean (black) and variance (gray) of the fitness of the entire population. Moreover, the current structural fitness (purple), the mean structural fitness of every generation (magenta), and the corresponding standard deviation (light-pink area) are presented; in the top panel, the structural and phenotypical fitness are equivalent, and thus only the latter is shown. The task is solved when a final fitness score of 64 is reached (marked by the green dashed line), i.e., when all 64 cell types are correctly assumed after developmental steps. The cartoon insets represent the perception–action cycle of the NCA, assembling an initial (random) arrangement of cell types into the target pattern; for the direct case (top panel), the NCA ANN is disabled, which is illustrated by masking the agential parts in the cartoon.

We can see in Figure 3 that both the evolution of the (i) direct and (ii) multi-scale encoding schemes of the target pattern can be achieved with the presented framework, and a fitness threshold of is reached after ≈300–600 generations, thus solving the problem. However, depending on the encoding scheme (i) or (ii), we can identify clear qualitative differences in the strategy and the “efficiency” of the evolutionary process, i.e., how many generations it takes to reach a certain fitness threshold and eventually converge (cf. top and bottom panel of Figure 3, respectively). The respective fitness score of the direct case (i) grows steadily and almost monotonically over successive generations until the threshold of is reached after 668 generations for that particular run, and the EA converges at a maximum fitness of after 942 generations (see Section 4.1 for details on the threshold fitness values). In contrast, the evolutionary process of the multi-scale case (ii) undergoes significant leaps as reflected by the corresponding fitness score, which can increase rapidly if a suitable innovation, i.e., a favorable crossover or mutation event in the functional parameters , occurs; the initial standard deviation of the fitness of the entire population is significantly larger compared to the direct case (i), yet the threshold fitness of is reached in 428 generations, and the EA converges after 679 generations (although at a lower maximum fitness of ).

The results presented in Section 4.2 are based on selected evolutionary optimization runs that are representative of related experiments with similar parameterizations. However, one should keep in mind that such results are always susceptible to chance in initial conditions or mutations in the EA but also to developmental noise; moreover, the hyperparameters of the evolutionary search or even the specific ANN architectures can influence the evolvability of such NCA systems. Thus, we present in Section 4.3 below a more statistically significant analysis of the evolutionary implications of direct and multi-scale encodings under various conditions of the cellular agents’ competency levels and the developmental noise.

Our separation of the genotype into a structural and into a functional part moreover allows us to extract the structural (or genotypic) fitness along an entire evolutionary history: we define the structural fitness as the fitness score of a phenotype with evolved structural genes but with disabled agency . Notably, in the direct case (i), we have , which is illustrated in Figure 1A and reflected in the top panel of Figure 3; the structural fitness of the multi-scale case (ii) is explicitly visualized in the bottom panel of Figure 3. In the latter case, the structural fitness remains essentially detached from the phenotypic fitness during the entire evolutionary history (which also explains the convergence to a suboptimal maximal fitness level of in this particular NCA solution, as the final Czech flag pattern first needs to be assembled from the corresponding imperfect initial cell configurations ). This all suggests that in contrast to (i), the EA in (ii) can make the most use of exploring the functional part of the genome, i.e., the space of behavior-shaping signaling and information processing [1], and, in turn, that the mere presence of competent parts drastically changes the search space accessible to evolution [3]; to show this explicitly, we present in Appendix E an illustration of the evolution of the morphogenesis process.

Interestingly, we still observe a slow but steady increase in the structural fitness in the long term in case (ii), owed to the small additional reward signal reinforcing the cellular agents to maintain the target pattern over time. This can most efficiently be achieved if the agent starts from a perfect set of initial cell types, representing a particular sub-space in the parameter space that might not necessarily be easily accessible to the EA at all stages during the evolutionary search. However, we would like to stress that such a slow transfer of problem-specific competencies from an agential, highly adaptive functional part to a rather rigid structural part of the genome could be a manifestation of the Baldwin effect [14].

While remaining neutral with respect to the system level fitness score, this competency transfer seems to affect the entire population presented in Figure 3 as reflected by the successively increasing population-averaged structural fitness score. Notably, and as detailed in Appendix F, we identified an associated decrease in the robustness against increasingly noisy cell state updates of the corresponding solutions with larger structural fitness. This suggests a reduction in uni-cellular competencies and might relate to the “paradox of robustness” discussed in Refs. [51,52,53,121,122,123,124,125,126,127]. Through a computational lens, such a competency transfer would also allow, as soon as the structural part of the genome is reliable enough, to repurpose the system’s competency to adapt to other independent tasks, and thus may facilitate the, in biology, ubiquitous effects of adaptability and polycomputing in related systems [128].

This all illustrates that an agential material [1,94], or more precisely, a substrate composed of competent parts, can have significant effects on the process of evolution and evolvability, especially for morphogenesis tasks. We thus conclude that, if competent parts are available, evolution prefers exploiting competency over direct encoding—if the environment requires competency at all (see discussion in Section 4.3). This leads to the conclusion that “competency at the lowest level greatly affects evolution and evolvability at the system level”.

4.3. Evolution Exploits Competency over Direct Encoding, if Necessary

Here, we investigate the effects of varying different levels of competency at the cellular level of a multi-scale competency architecture on the evolutionary process of morphogenesis. More specifically, we introduce the decision-making probability (I) , which constrains the ability of each cell individually to perform cell state updates in the environment: defines the probability at which a proposed cell state update of each individual cell in the NCA is executed (or otherwise omitted). Thus, varying the decision-making probability from to smoothly transitions the system’s behavior from a direct encoding scheme without competency to an increasingly reliable multi-scale competency architecture (cf. Figure 3).
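The role of the decision-making probability can be illustrated with a short Python sketch. It assumes an additive cell state update and one independent random draw per cell; the function and argument names are hypothetical.

```python
import random

def gated_update(states, proposed_updates, p_decision, rng):
    """Execute each cell's proposed update with probability p_decision.

    states, proposed_updates: one list of state elements per cell.
    """
    new_states = []
    for state, update in zip(states, proposed_updates):
        if rng.random() < p_decision:
            # The cell executes its proposed state update.
            new_states.append([s + u for s, u in zip(state, update)])
        else:
            # The update is omitted and the cell keeps its state.
            new_states.append(list(state))
    return new_states
```

At `p_decision=0.0` no update is ever executed, so the initial cell states are left untouched (the direct encoding limit), whereas at `p_decision=1.0` every proposed update is executed.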

Another, somewhat hidden, level of competency we already discussed in Section 3.1 is each cell’s ANN architecture: An RGRN agent with internal memory can acquire and execute tasks differently than a simpler FF agent without any feedback connections except for its cell state . Comparing the evolutionary implications of such functionally different ANN architectures is, however, not trivial, and is thus kept to a minimum here.

However, we parameterize both FF and RGRN agents such that the controller parts of their ANNs (cf. Figure 1C, Section 3.1, and Appendix A) are (II) stacks of R redundant copies of the same controller ANN, each copy with its own set of parameters, which all take the same pre-processed aggregated sensor embedding as input, and whose individual outputs are averaged into a single action output of a cell. Inspired by redundancy in error-correcting codes [105,106], we thus allow cells with higher values of this redundancy number R, i.e., with many alternative routes through the controller part of the ANN, to integrate environmental signals, in principle, more generally compared to , thus affecting the cells’ competency.
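The averaging over redundant controller copies can be sketched in Python as follows. A single tanh layer is used here as a simplified stand-in for the FF and RGRN controllers of Appendix A, and the function names are hypothetical.

```python
import math

def controller(embedding, weights, bias):
    """One controller copy: a single tanh layer (simplifying assumption)."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, embedding)) + b)
        for row, b in zip(weights, bias)
    ]

def redundant_action(embedding, copies):
    """Average the outputs of R controller copies into one action.

    copies: list of (weights, bias) pairs, one per redundant copy; every
            copy receives the same sensor embedding as input.
    """
    outputs = [controller(embedding, w, b) for w, b in copies]
    # Element-wise mean over the R copies.
    return [sum(values) / len(outputs) for values in zip(*outputs)]
```

With a single copy, `redundant_action` reduces to the plain controller output. Copies with different parameters contribute alternative routes whose outputs are blended into the cell's action.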

While scaling from to smoothly increases a cell’s competency to reliably regulate its cell state, increasing R enhances the computational capacities of the uni-cellular agents. Henceforward, we interpret (I) and (II) R as two competency levels in our system, which we can vary (I) continuously and (II) discretely.

Analogous to Section 4.1 and Section 4.2, we thus utilize CMA-ES to evolve the genotypic parameters of an NCA to self-assemble the Czech flag pattern under different conditions (I–II), and expose the cells to different noise-levels (III) during cell state updates defined in Equation (1).

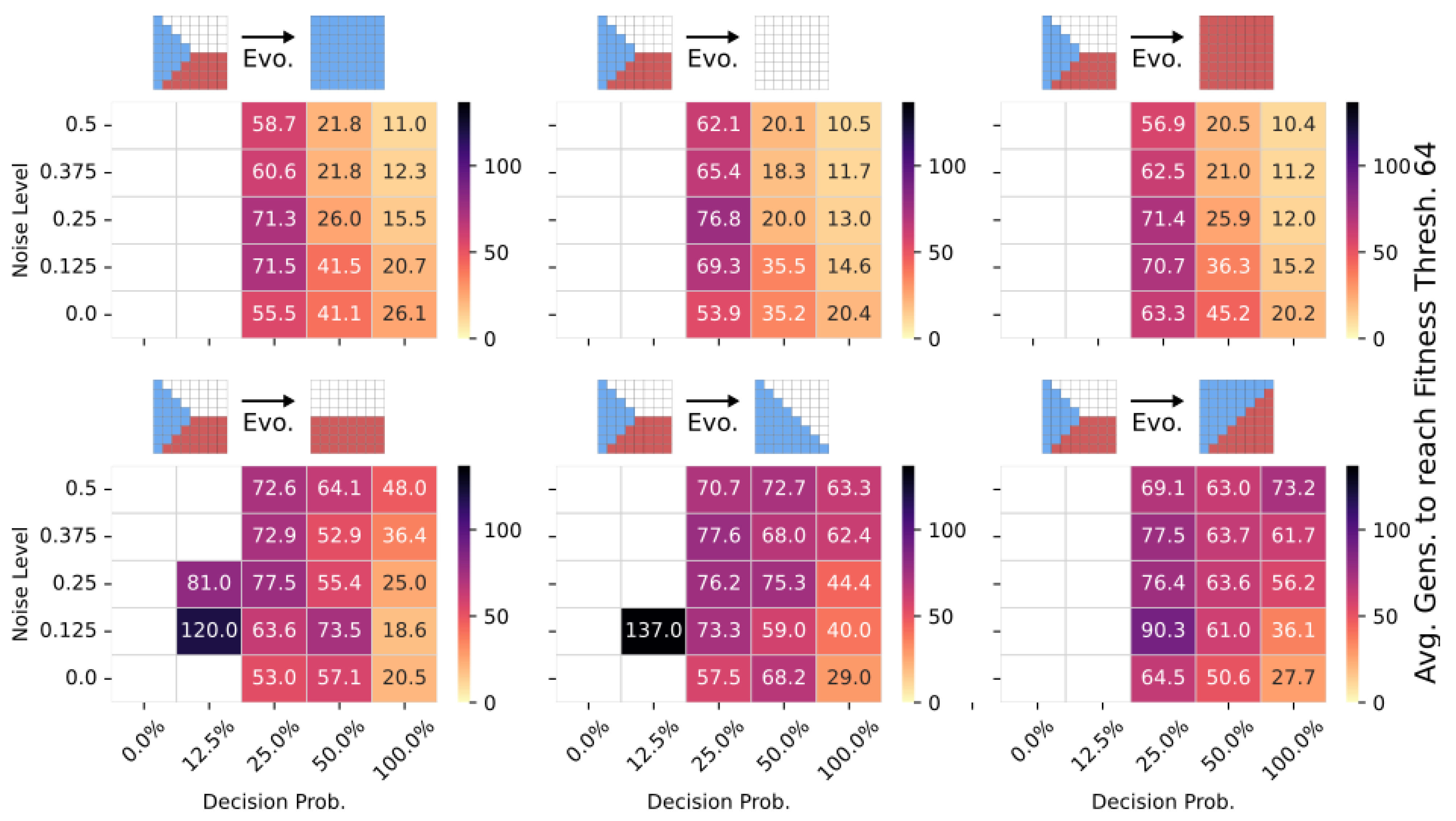

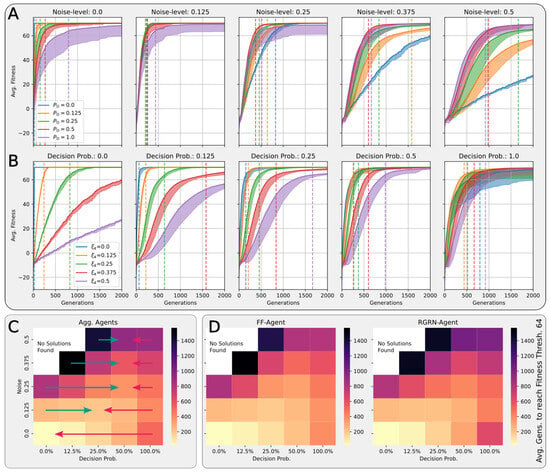

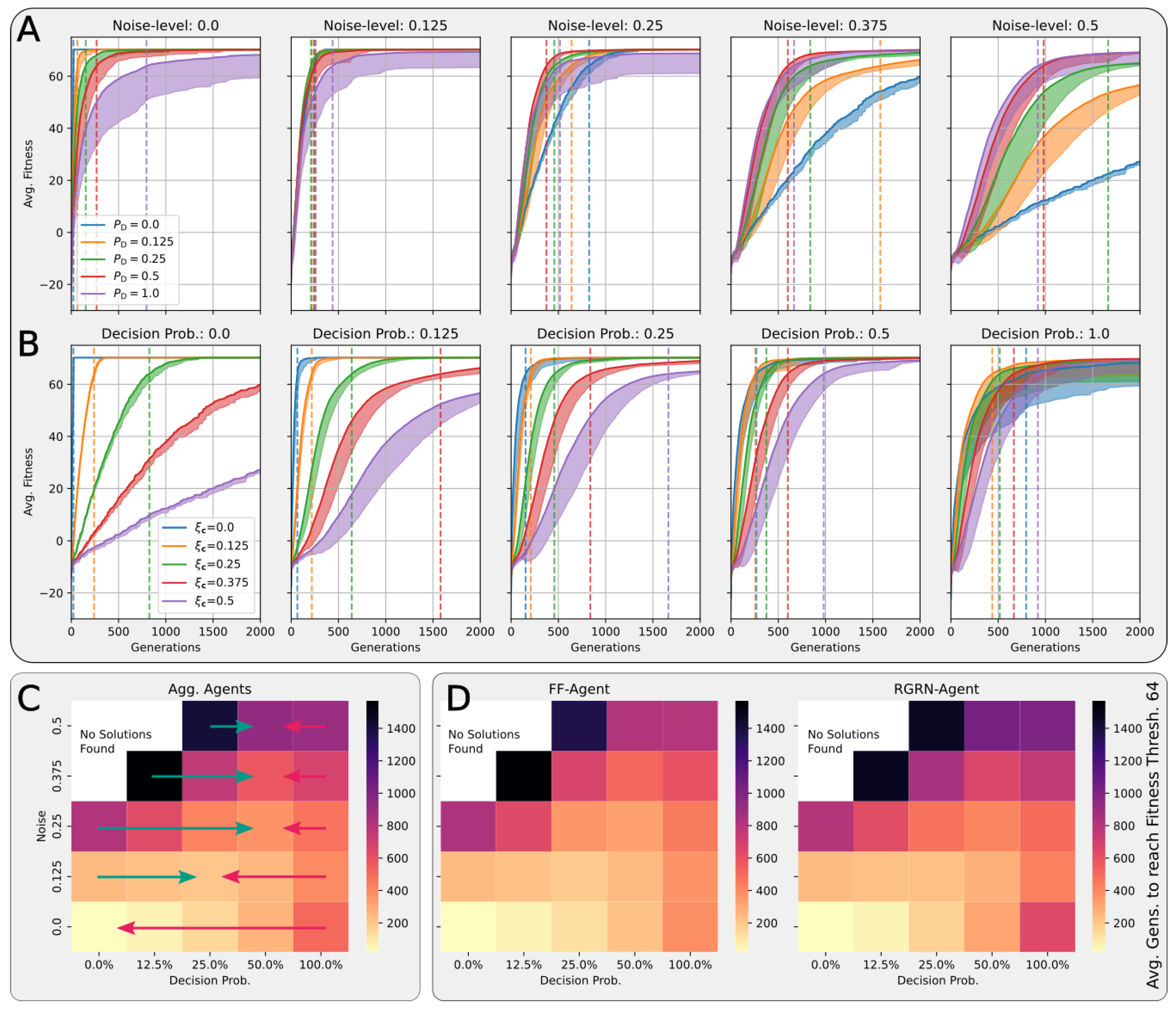

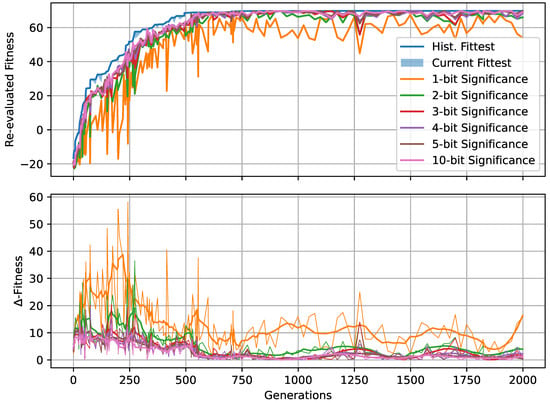

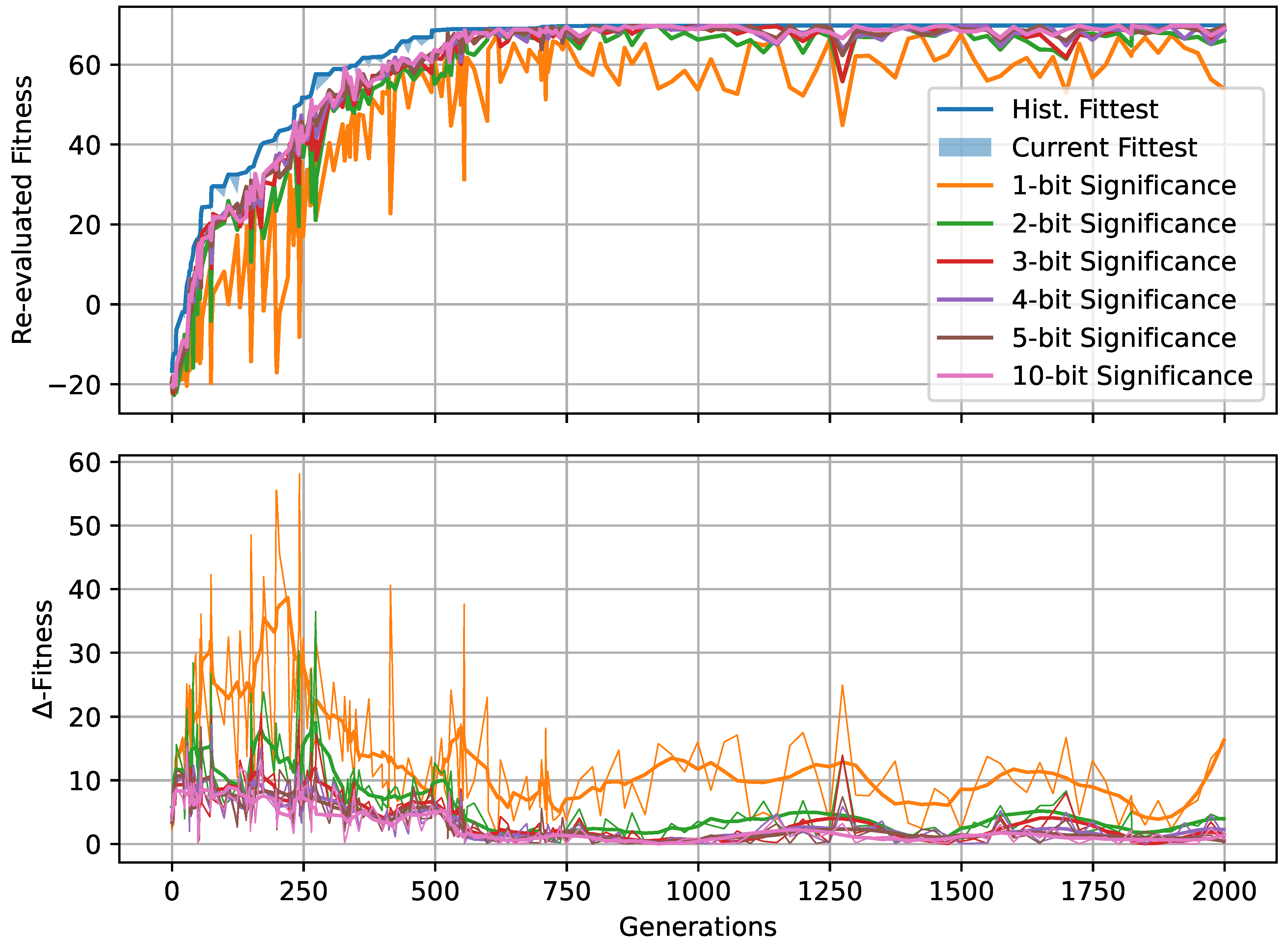

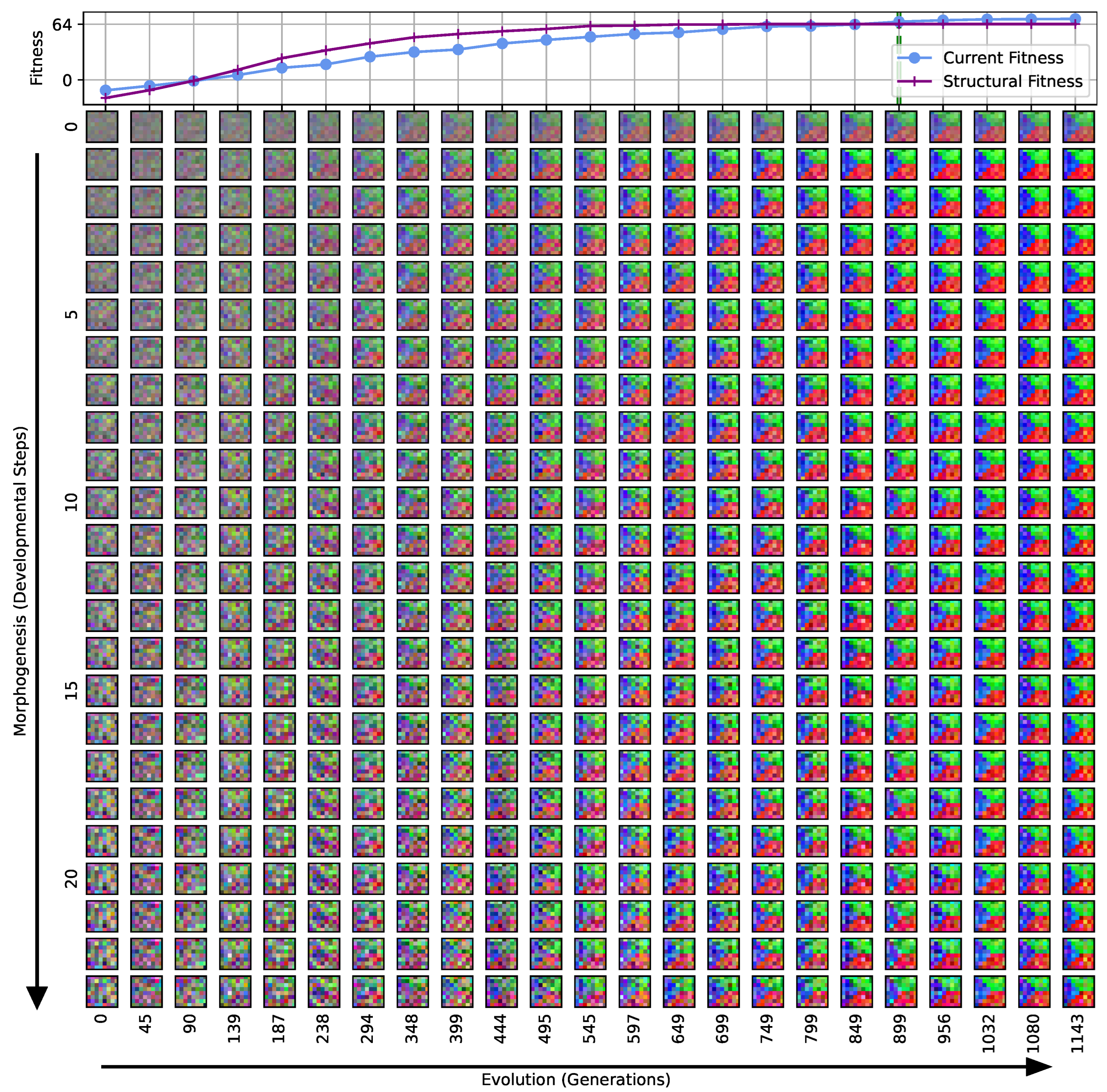

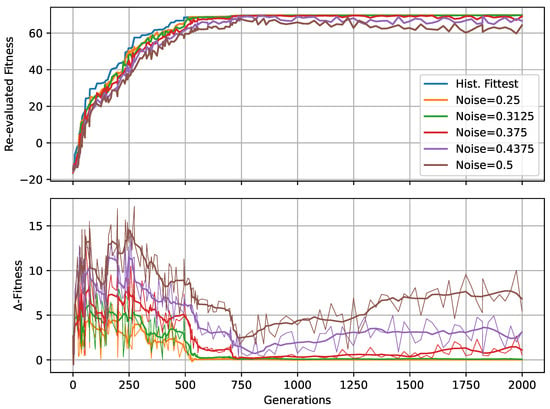

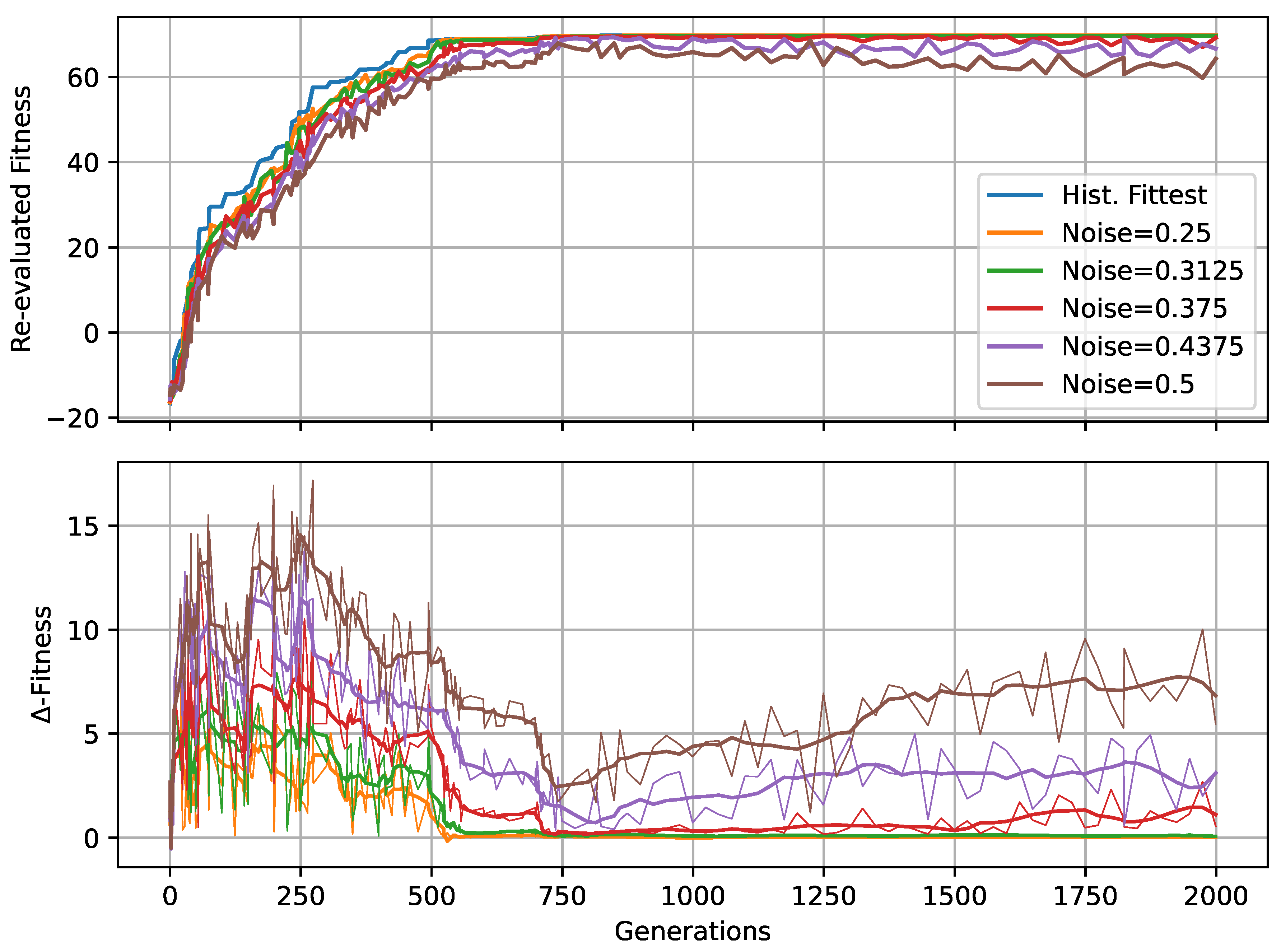

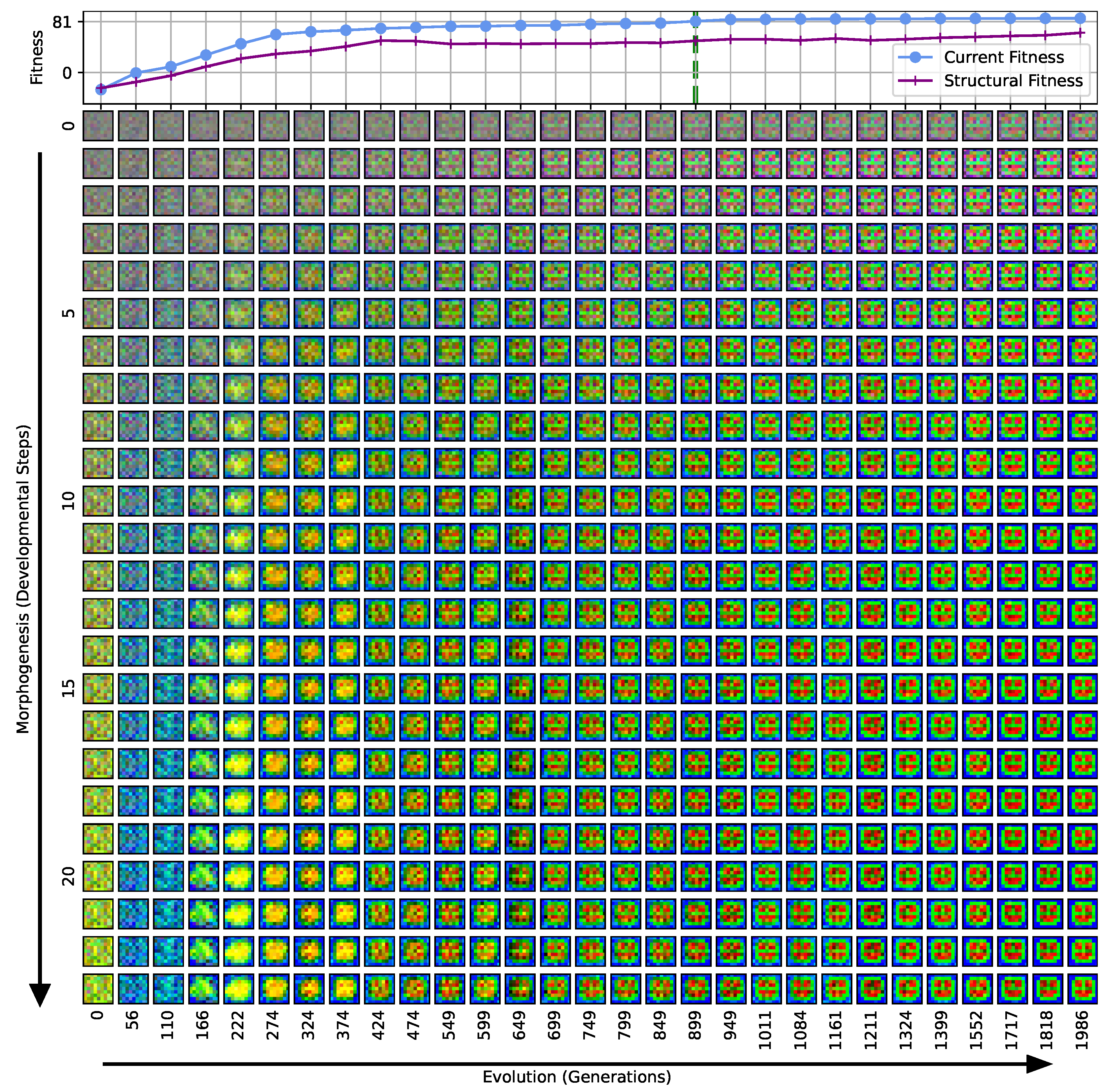

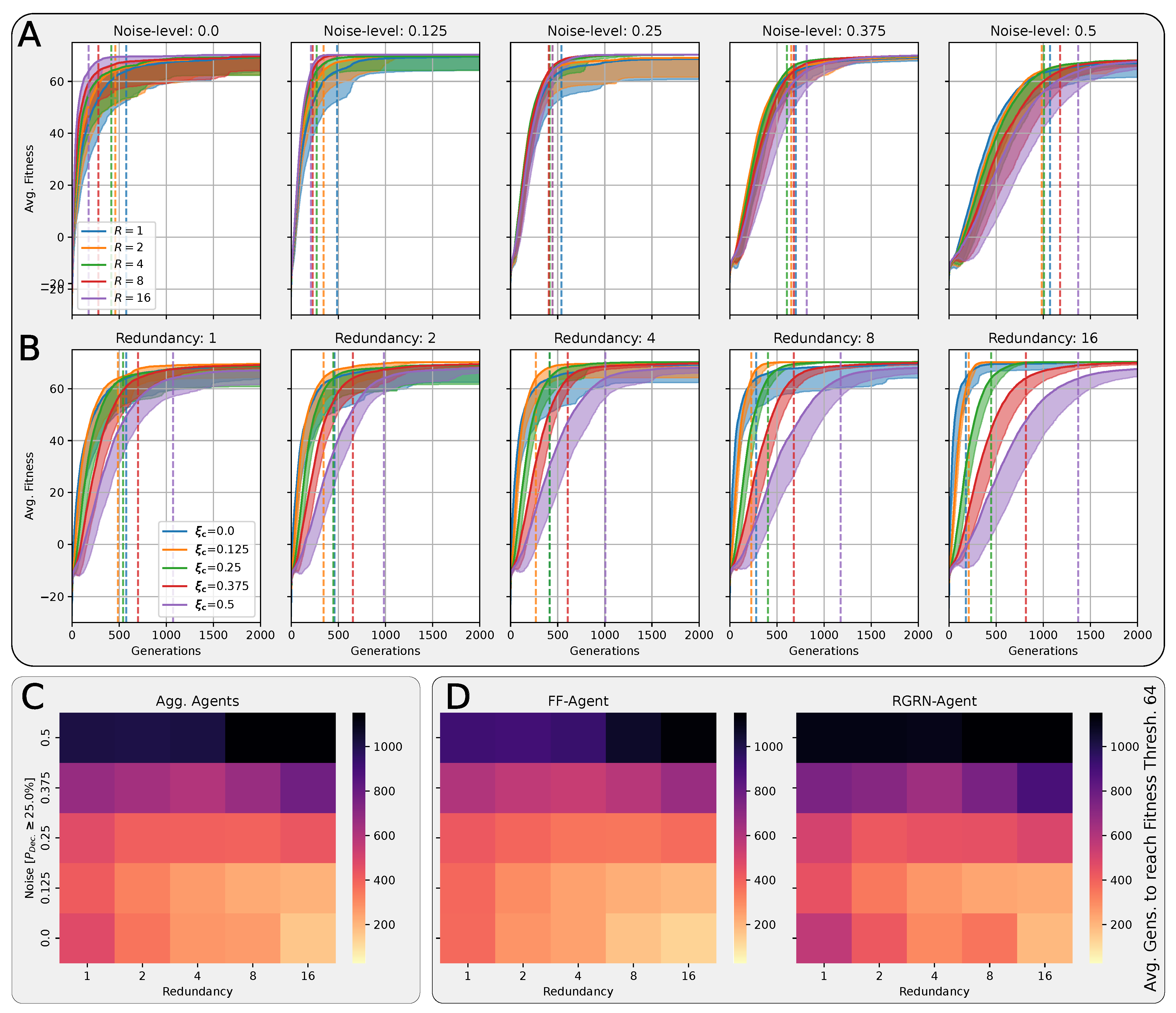

In Figure 4A,B, we present the corresponding fitness scores of a maximum of 2000 generations of CMA-ES for different noise levels , averaged over different values of the decision-making probability for both FF agents and RGRN agents. Moreover, for each realization of and , we utilize experiments with different redundancy numbers and employ 15 statistically independent EA runs for each parameter combination , , and R, and thus arrive at 75 statistically (and functionally, with respect to an agent’s ANN architecture) independent fitness trajectories per (, ) combination; see Section 4.1 and Appendix D for more details on the EA parameters. In Figure 4C, we present the average number of generations it takes to solve the problem (to reach a fitness threshold of ) for each combination of and , aggregated over the agents’ ANN architectures, FF or RGRN, and the respective redundancy numbers R for 15 statistically independent EA runs each; in Figure 4D, we present the data from Figure 4C but separately for both ANN architectures.

Figure 4.

(A,B) The average fitness per generation of the best-performing individual in a population of 65 independent evolutionary processes of the 8 × 8 Czech flag task, evaluated from left to right at different noise levels (decision-making probabilities) and color-coded by the decision-making probabilities (noise-levels), for panels (A,B) respectively; solid lines mark average fitness values, the shaded area marks the standard deviation (to lower values only), and dashed lines indicate when an average fitness threshold of 64 is crossed, solving the problem. (C) Heatmap of the average generation number when the fitness threshold of 64 is crossed at particular combinations of the decision-making probability and noise level as detailed in (A,B); green and red arrows respectively indicate directions along of increasing and decreasing values of the average fitness at fixed noise values. (D) Same as (C) but partitioned by the respective FF-agent or RGRN-agent architectures used in the respective CMA-ES runs.

We observe in Figure 4 that, depending on these two parameters and , for zero or very low noise levels , the evolutionary search is most efficient, i.e., finds the solution in the fewest generations, on average, for low values of the competency level . Thus, in these situations, direct encoding (achieved via ) seems to be preferable to competency-driven encodings with (as indicated by the bottom red arrow in Figure 4C); this is partly owing to the specific definition of the cell types given by Equation (2), making a noiseless search very simple for the EA. However, for more realistic, noisy conditions , the situation changes drastically. With increasing noise level, the evolutionary efficiency of NCAs with higher competency levels is significantly greater than that of NCAs with low competency levels, especially compared to the direct encoding scheme (as indicated by the green arrows in Figure 4C); for noise levels of and , the EA does not even find solutions for the direct encoding case with in 2000 generations, as cell state updates become increasingly necessary to counteract the noise in the system. There is a clear trend: in increasingly difficult environments with high noise levels, raising the competency level increases the evolutionary efficiency of our in silico morphogenesis experiments.

Thus, we conclude that scaling competency has a strong effect on the process of evolution, and in realistic situations (with moderate to high noise), competency may greatly improve the evolutionary efficiency and evolvability of collective self-regulative systems.

It is noteworthy that, for evolving the Czech flag pattern, essentially no qualitative difference in the evolutionary efficiency between FF agents and RGRN agents with the given number of parameters was observed. Also, the evolutionary implications of utilizing a number of redundant copies within the controller ANNs of the cells of an NCA are much less pronounced compared to the results depicted in Figure 4, as can be seen in Figure A8 of Appendix H. However, for more advanced problems such as assembling a smiley-face pattern (see Appendix G), RGRN agents seem to outperform simpler FF agents significantly in terms of evolutionary efficiency. Moreover, a larger redundancy number of is required by the evolutionary process to more efficiently evolve the functional parameters of an NCA compared to a direct encoding scheme, hinting at a capacity bottleneck of the deployed ANNs.

4.4. There Is a Trade-Off between Competency and Direct Encoding Depending on Developmental Noise

A careful analysis of the results shown in Figure 4 reveals that the largest competency level of does not result in the highest evolutionary efficiency for any presented noise level. On the contrary, populations with slightly lower competency levels of or even perform best at noise levels and , respectively (as indicated by the green and red arrow ends in Figure 4C). In fact, cells with an initially random genome (comprising the ANN and initial cell state parameters) that are forced to make “uninformed”, i.e., initially random, decisions at every time step can interfere with the performance of the EA, as even initially perfect cell state configurations will be destroyed during such a randomized developmental stage. We suspect that this leads to corresponding delays in the evolutionary search compared to situations where populations can better rely on the structural part of the genome. Indeed, populations with “overconfident” actions can be trapped in local optima for many generations at all stages of the EA, which, in our system, may only be resolved by very specific but random mutations of the functional part of the genome (as we show later through Figure 5 in Section 4.4). This is reflected in Figure 4A,B by the large deviations in the average fitness trajectories for large values.

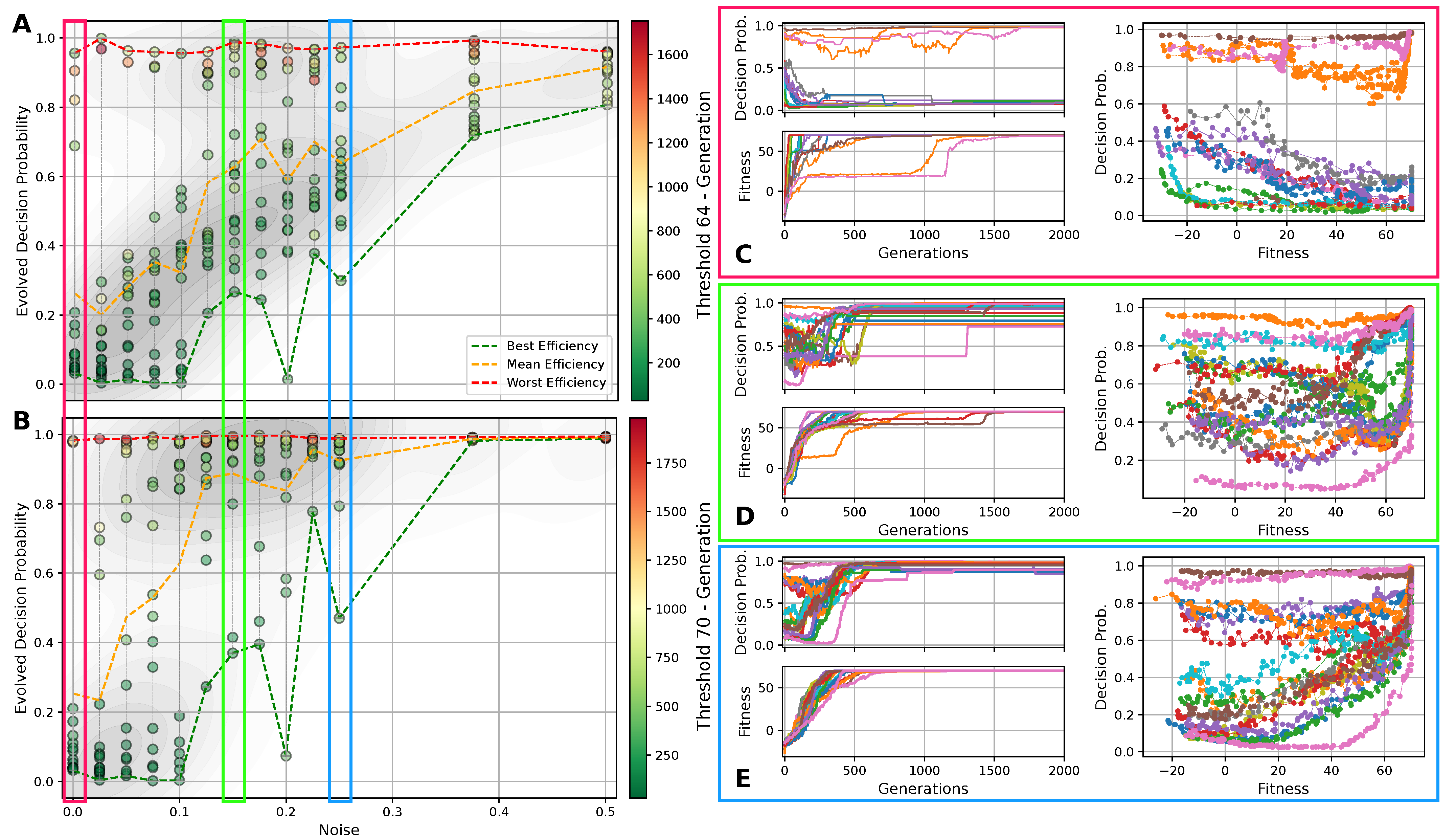

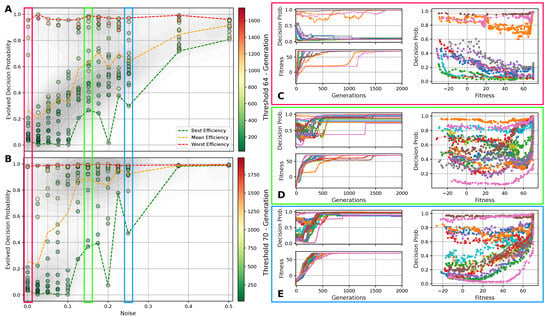

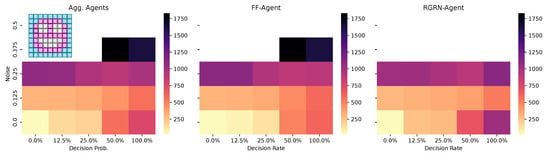

Figure 5.

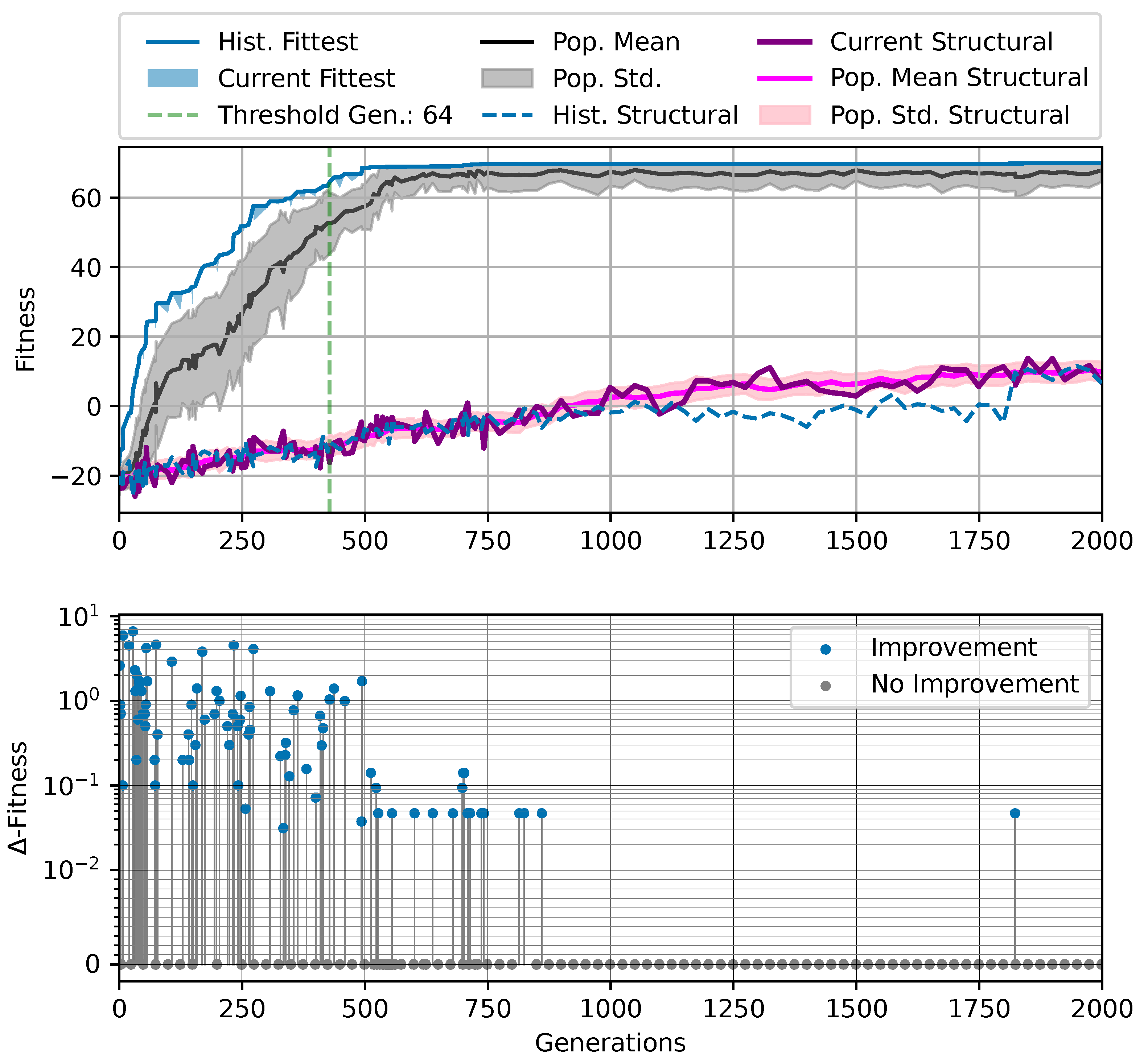

(A) The evolved decision-making probability for different noise levels when a fitness threshold of 64 for the Czech flag task is reached; each symbol represents an independent lineage with a color-coding that indicates the number of generations it took for that particular lineage to cross the specified fitness threshold. The green/orange/red dashed lines indicate at which value of the evolutionary process crossed the fitness threshold the fastest/on average/the slowest (i.e., in the least, average, or largest number of generations) for each noise level. (B) Same as (A) but with a fitness threshold of 70. For both (A,B), the red/green/blue frames emphasize the noise level and corresponding to panels (C–E), respectively: The latter show the evolution of the decision-making probability/fitness (top/bottom left panel) and the value of the decision-making probability as a function of the corresponding fitness during the evolutionary process of each lineage (right panel) for all lineages (indicated via color-coding) at the specified noise level. Results are shown for an RGRN-agent architecture with redundancy , and are qualitatively similar to those of an FF-agent architecture.

The insights from the above lead to the questions of whether there is a “natural” or optimal competency level, with respect to the decision-making probability , or whether a mutable competency level can be utilized by the evolutionary process to improve the efficiency of guiding a population towards high-fitness regions in the parameter space. Thus, we include the decision-making probability as an additional competency gene into the NCA genome , cf. Equation (3), and we perform in silico morphogenesis evolution experiments of the Czech flag pattern for different noise levels , analogous to Section 4.3. We analogously limit the numerical range of the competency gene to the interval , and extract the corresponding decision-making probability via . Notably, for the experiments shown in this sub-section, we use regularization on the genotypic parameters through subtracting from the fitness score defined in Equation (5), with (the regularization applied to does not introduce a bias between the minimal and maximal competency levels, as the regularization is applied to , not ; both and the regularization are symmetric with respect to the sign of ).

In Figure 5A,B, we present the evolved competency level for different noise levels after fitness thresholds of 64 and 70 are crossed, respectively, for 10 independent lineages per noise level for an RGRN architecture. The problem is considered solved at a fitness of 64, but since we reward the NCAs to maintain the target pattern over time via in Equation (5), a higher maximal fitness score of can be reached after developmental steps for a sufficiently long evolution. Thus, we here relate Figure 5A to the evolutionary stage of having achieved the process of morphogenesis, and Figure 5B of having achieved morphostasis. For both cases, we essentially see two strategies emerging (see also Figure 5C–E): (i) one, where competency is maximized very early during the evolutionary process that then remains near the maximally possible value of , and (ii) a hybrid strategy where a significantly lower competency level is assumed that still allows to solve the problem.

Notably, strategy (i) is predominantly pursued at high noise levels, where large cell state fluctuations in the environment favor informed actions by the cellular agents. In contrast, the second strategy (ii) emerges more frequently in lineages evolved at low noise levels where, especially at very low noise levels , most of the evolutionary processes result in solutions that avoid competency altogether, and a direct encoding scheme (a decision-making probability of 0) is evolved. Intermediate competency levels evolve in the corresponding intermediate noise regime. Following the trend from evolving morphogenesis (by crossing a fitness score of 64) to morphostasis (by converging to the maximal fitness value of ≈70) in Figure 5A through B, we see that the two strategies, (i) and (ii), “sharpen” during the course of the evolutionary process such that the decision-making probability predominantly converges to the minimally or maximally possible values of 0 and 1, depending on the environmental conditions.

We also illustrate the evolved competency level of the particular lineage at all noise levels in Figure 5A,B, at which the respective fitness threshold is crossed in the minimal and maximal number of generations (and on average) amongst all 10 independent lineages per noise level. This clearly reveals that evolutionary processes that follow a more direct encoding strategy (ii) can solve the problem at hand efficiently, provided that the developmental noise permits this. However, when increasing the noise level, the evolutionary process can afford to evolve (or, put differently, increasingly relies on evolving) the multi-cellular intelligence of the NCA to perform morphogenesis and morphostasis, thus following a third strategy (iii) that integrates both strategies (i) and (ii) in a non-trivial way. We observe in Figure 5A that the most efficient strategy for evolving morphogenesis indeed seems to be such a hybrid approach (iii), where a minimally necessary competency level is utilized at a specific noise level such that the corresponding evolutionary process can, again, be very efficient in solving the task.

Moreover, this also holds for the stage where morphostasis is reached, cf. Figure 5B: lineages that efficiently evolved to solve morphogenesis in our experiments also (typically) evolve to solve morphostasis efficiently. To emphasize this, we present in Figure 5C–E the “temporal dynamics” of the population-wise highest fitness and the corresponding competency level per generation for all lineages at selected noise levels ; we also present for all corresponding lineages that have been evolved at these selected noise levels the genotypic competency level against the corresponding phenotypic fitness scores , and we find an apparent yet non-trivial relation between these two quantities: typically, an initial rise in fitness in early generations is associated with a decline in which is more pronounced at lower noise levels. For intermediate noise levels , we find that often assumes a minimum (i.e., a minimally required yet finite competency level) when the evolutionary process reaches a fitness level of ≈64. We suspect that this allows the evolving morphogenetic process to establish good starting configurations based on changes in the structural genome, which can most efficiently be performed at a minimal(ly necessary) competency level given a certain developmental noise level in the environment. However, the competency is then quickly pulled towards a maximum level of when the EA converges at a maximum fitness score of ≈70, at the morphostasis stage. For large noise levels, e.g., as depicted in Figure 5E, the competency level rises with the corresponding fitness score in a much more monotonic way, emphasizing the necessity of the corresponding NCAs to utilize the cellular competency to solve the problem already at an early stage of the evolutionary process.

Curiously, we also see lineages that settle at the highest possible competency levels throughout their evolutionary history, even in conditions without noise as can be seen in Figure 5C: here, an initial “frozen accident” may cause an entire lineage to maintain high competency levels due to a lack of diversity in the corresponding gene, although this is not even necessary to solve the task. However, these high competency levels early on during the evolutionary process can cause the population to stagnate at sub-optimal regions in the parameter space for many generations if the corresponding policy of the cells is sub-optimal but rigid to strategy changes via small mutations in the genome. The population seems “trapped” until a favorable mutation or crossover event occurs in the functional part of the genome of an individual that guides the entire population towards higher fitness scores, eventually solving the problem. We suspect that this is also the reason for the lower evolutionary efficiency of the “most competent” configurations (with ) compared to the slightly less competent cases (with ) of the experiments depicted in Figure 4 [129].

Thus, we conclude that if the evolutionary process can afford to evolve its own competency level, there seems to be a trade-off, throughout the entire course of the evolutionary process, between “going direct” and “going competent”, depending on the developmental noise. Moreover, the randomly initialized starting conditions may favor either direct or multi-scale encoding strategies, which may not only affect the “final” competency level that the evolutionary process converges to but can also greatly influence the efficiency of the evolutionary process itself. In general, the most efficient strategy for evolving morphogenesis tasks seems to be a non-trivial combination of both: finding a suitable initial cell state configuration that then allows the competency-based self-assembly of the target pattern to “kick in” and solve the task efficiently.

4.5. Competency Can Lead to Generalization

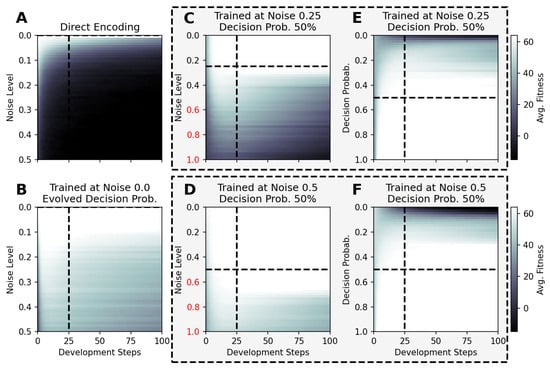

We are ultimately interested in the question of whether a substrate of competent parts shows the ability to generalize to environmental conditions that have never been experienced by its evolutionary predecessors, and hence would allow the evolutionary process to adapt an organism to changing environmental conditions more efficiently compared to a direct encoding scheme. Thus, we systematically vary in Figure 6 the system parameters, i.e., the noise level and the decision-making probability competency level, for selected NCA solutions of the Czech flag problem that have been trained with certain sets of the system parameters above.

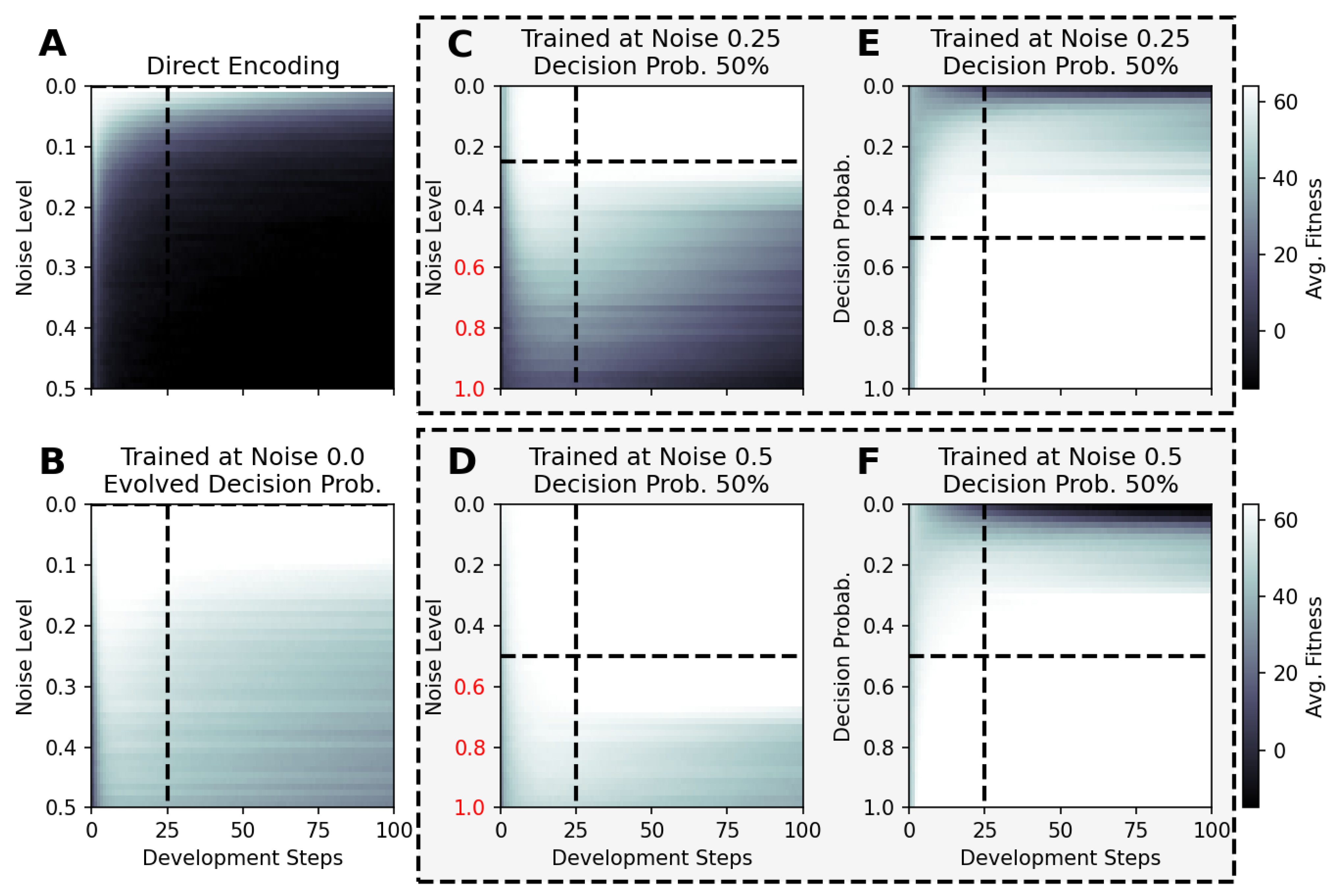

Figure 6.

The average fitness score of 100 independent evaluations of selected NCA results utilized at noise (A–D) and competency-level conditions (E,F), which have not been experienced during training for an increased total lifetime of 100 time steps. The respective NCAs have been evolved at zero-noise without competency (A), with evolvable competency (B), and under different noise conditions and decision-making probabilities (C–F), with a fixed number of developmental steps; the results of all panels except for (B) are based on RGRN-agent architectures with the training conditions given by titles and dashed lines. The data presented in panels (C,E) and (D,F) are respectively based on the same NCA solution (indicated by the dashed frames), while the noise level is varied in (C,D) at a fixed competency level of , and the competency level is varied in (E,F) at a fixed noise level of (, )], respectively.

For instance, we utilize NCA solutions that have been evolved to solve the Czech flag problem in developmental steps (see above) under zero-noise conditions without and with evolvable competency. Here, we utilize such solutions for larger noise levels of and for lifetimes of 100 time steps and present the average fitness values of 100 statistically independent simulations at each particular noise level in Figure 6A,B, respectively, without any further evolutionary optimization. Analogously, we expose NCA solutions that have evolved with a competency level of and noise levels of and , respectively, to vastly different noise levels of compared to the conditions during their respective evolutionary processes, and present the results in Figure 6C,D. Eventually, we again deploy the latter NCA solutions but vary the competency level instead, at respectively fixed noise levels of and , with the results depicted in Figure 6E,F. Notably, we only consider the “correctness” part of the fitness score, i.e., the first term in Equation (5) by setting and .

The results in Figure 6 demonstrate that the performance of the NCAs evolved here, optimized with evolutionary methods to assemble and maintain a target morphology over time at particular system parameters, differs greatly between NCA solutions that follow the direct and the multi-scale encoding paradigms when subjected to novel environmental conditions. The typical fitness over the lifetime of an NCA without competency that encodes the target phenotype pattern directly (cf. Figure 6A) is constantly affected by random fluctuations and thus decreases in fewer time steps with increasing noise levels in a diffusive process. How long the corresponding maximum fitness score of 64 can be maintained, and how quickly the fitness eventually decays during the lifetime of the Czech flag NCA discussed here, depend on the particular noise level and on the values of the initial cell states, which are limited numerically to the interval for each cell. In contrast, NCA solutions with larger competency levels that have been evolved at finite noise-level conditions still perform well, and can maintain the target pattern for exceptionally long times, even when the system parameters are changed dramatically (cf. Figure 6B–F); note the noise-level axis of to 1, compared to the maximum noise levels of during training.
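The contrast between diffusive decay under direct encoding and pattern maintenance by competent cells can be illustrated with a toy simulation. This is only a sketch under strong assumptions and not the evolved NCA: the 8×8 grid, the scalar cell state in [-1, 1], the three-level cell typing by rounding, the wedge-shaped flag layout, and the hand-written corrective policy with `gain` are all hypothetical stand-ins. What it keeps from the setup above is that each cell executes its action with a decision-making probability `p_decision`, that Gaussian noise of strength `noise` perturbs every cell at every step, and that fitness counts correctly typed cells up to a maximum of 64.

```python
import random

SIZE = 8  # assumed 8x8 grid, so a perfect pattern scores 64


def czech_flag(size=SIZE):
    """Toy target: wedge on the left (0), upper band (+1), lower band (-1)."""
    half = size // 2
    return [[0 if c <= min(r, size - 1 - r) else (1 if r < half else -1)
             for c in range(size)] for r in range(size)]


def fitness(states, target):
    """Count cells whose rounded state matches the target cell type."""
    return sum(round(s) == t
               for row_s, row_t in zip(states, target)
               for s, t in zip(row_s, row_t))


def simulate(noise, p_decision, steps=100, gain=0.5, rng=None):
    """Run one lifetime starting from the perfect pattern; return final fitness."""
    rng = rng or random.Random(0)
    target = czech_flag()
    states = [[float(t) for t in row] for row in target]
    for _ in range(steps):
        for r in range(SIZE):
            for c in range(SIZE):
                s = states[r][c]
                # The cell's action is executed only with probability p_decision.
                if rng.random() < p_decision:
                    # Hypothetical homeostatic policy standing in for an evolved one.
                    s += gain * (target[r][c] - s)
                s += rng.gauss(0.0, noise)             # developmental noise
                states[r][c] = max(-1.0, min(1.0, s))  # keep state in range
    return fitness(states, target)


def mean_fitness(noise, p_decision, runs=20, seed=0):
    """Average final fitness over several independent lifetimes."""
    rng = random.Random(seed)
    return sum(simulate(noise, p_decision, rng=rng) for _ in range(runs)) / runs
```

With `p_decision=0` the cell states perform a clipped random walk, so the pattern erodes once noise is present, whereas with `p_decision=1` the corrective action keeps each state close to its target. Sweeping `noise` for both settings with `mean_fitness` gives a qualitative analogue of the comparison between direct and competent encodings.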

The results in Figure 6B are especially curious, as the corresponding NCA has been trained to evolve its decision-making probability alongside the structural and functional parts of the genome at zero noise conditions. While no competency at all would have been required to solve this task, the presented NCA solution evolved to afford a maximum competency of (cf. Figure 5C). Strikingly, this particular NCA is capable of resisting much larger noise levels of while maintaining the pattern perfectly for at least steps, and the average fitness score of 100 independent solutions still does not drop below a threshold of ≈40–50 for even higher noise levels and for 100 time steps. Notably, there appears to be a bifurcation of the long-term behavior of these NCA solutions (not shown here) where the NCA, in some realizations, maintains the target pattern perfectly for long times, while in other independent runs, the fitness drops quickly.

In this sub-section, we thus show that NCAs that have evolved to assemble and maintain a target pattern within a relatively short developmental stage are capable of maintaining the corresponding target pattern over much longer time scales (without any further optimization) and thus show great signs of functional, morphostatic generalizability. Moreover, the in silico morphogenesis and morphostasis model systems discussed here are capable of handling, essentially on the fly, system–parameter combinations that neither they nor their evolutionary ancestors ever experienced before. Thus, we conclude that such multi-scale competency architectures [1], whose substrate is composed of competent rather than passive parts, can be more than capable of generalizing to changes in their environment, within reasonable boundaries, by allocating robust problem-solving competencies at many scales [93,94].

4.6. Competency Can Augment Transferability to New Problems

Deducing from the discussion in Section 4.5 about the generalizability of multi-scale competency architectures [1] towards changing environmental conditions, such systems should also exhibit increased evolvability and transferability properties to new problems: if such multi-scale competency architectures are capable of adapting their behavior towards changing environmental conditions on the fly during a single lifetime (cf. Figure 6), this has great consequences for the evolutionary process when environmental conditions change.

Thus, we utilized the NCA solution discussed in Figure 5C and Figure 6D and performed subsequent CMA-ES on the Czech flag problem at changed environmental conditions, i.e., at higher noise levels: only a single or, at most, a handful of generations are necessary for solving the task even at intermediate and high noise levels of and .

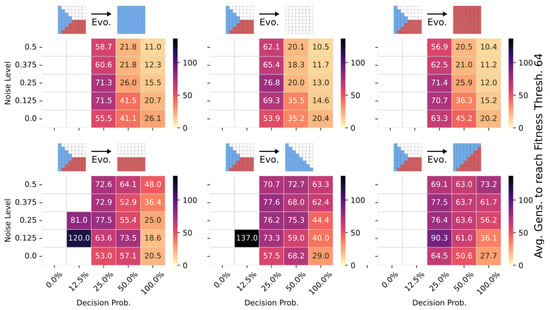

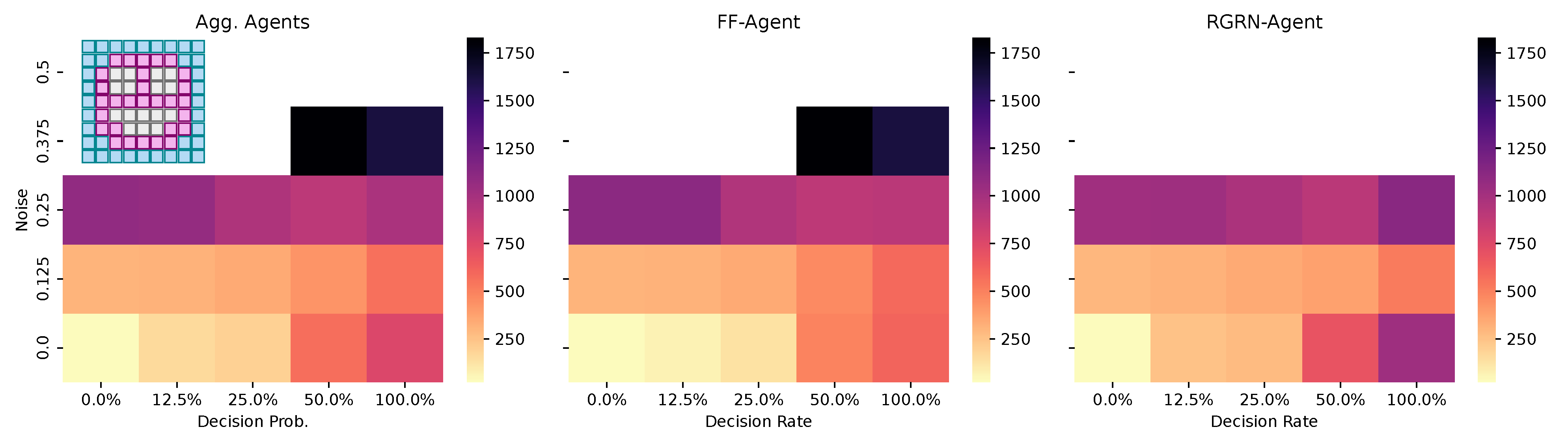

To emphasize the potential of the transferability of multi-scale competency architectures, we here investigate the adaptation capability of pre-evolved NCAs when their objective function is suddenly changed, i.e., when the environment starts selecting for different target patterns than the one for which they were originally evolved. More specifically, we utilize NCA solutions from Section 4.3, discussed in Figure 4, which successfully solve the Czech-flag task, and additionally perform 1000 evolutionary cycles of CMA-ES on related blue-, white-, red-, Viennese-, blue/white-, and blue/red-flag morphogenesis tasks for various noise and competency levels. We allow changes to both the structural and functional parts of the genomes of the pre-evolved NCA.

In Figure 7, we present the corresponding number of generations it takes, on average over 10–60 CMA-ES runs, to adapt a pre-evolved, i.e., “informed”, NCA solution that can solve the Czech-flag morphogenesis task so that it solves the respective new morphogenesis task under different environmental conditions. We see a clear advantage in terms of the evolvability and adaptability of pre-evolved individuals at high competency levels (in contrast to individuals with lower competency levels), so that adaptation can happen in as few as ≈10 generations. While the Czech→blue-, white-, and red-flag tasks are computationally rather trivial (see top panels in Figure 7), the Czech→Viennese-, blue/white-, and blue/red-flag adaptation tasks (bottom panels in Figure 7) are more complicated. Still, the latter can be solved in as few as ≈20 generations, compared to ≫100 generations for evolving a corresponding randomly initialized NCA to solve the Czech-flag problem from scratch, as shown in Section 4.2 and Section 4.3.

Figure 7.

The average number of generations it takes for the CMA-ES to adapt a pre-evolved NCA solution that can solve the Czech-flag morphogenesis task to, respectively, the blue-, white-, red-, Viennese-, blue/white-, and blue/red-flag morphogenesis tasks instead (cf. panel insets) and reach a correctness fitness score of 64. We specifically adapt Czech-flag NCA solutions that have been pre-evolved at a noise level of but with corresponding competency levels according to the horizontal axis in Figure 4, and deploy CMA-ES for 1000 generations at the corresponding noise/competency levels depicted here on the vertical/horizontal axis, and average over multiple CMA-ES runs and corresponding redundancy numbers .

Thus, we conclude that pre-evolved (or “informed”) competency at subordinate scales of a multi-scale competency architecture greatly enhances a collective system’s capability of adaptation. A competent and informed substrate therefore has great effects on a multi-scale competency architecture’s evolvability towards changing environmental conditions and on the transferability of already acquired (evolved) solutions to new problems.

5. Conclusions

We have investigated the evolutionary implications of multi-scale intelligence on an example of the in silico morphogenesis of two-dimensional tissue of locally interacting cells that are equipped with tunable decision-making machinery. More specifically, we have utilized evolutionary algorithms (EAs) [103] to evolve the parameters of neural cellular automata (NCAs) [100] on morphogenesis tasks under various conditions of the competency level of the uni-cellular agents and the developmental noise in the system.