Abstract

Background/Objectives: The growing use of artificial intelligence (AI) in musculoskeletal radiographs presents significant potential to improve diagnostic accuracy and optimize clinical workflow. However, assessing its performance in clinical environments is essential for successful implementation. We hypothesized that our AI applied to urgent bone X-rays could detect fractures, joint dislocations, and effusion with high sensitivity (Sens) and specificity (Spec). The specific objectives of our study were as follows: 1. To determine the Sens and Spec rates of AI in detecting bone fractures, dislocations, and elbow joint effusion compared to the gold standard (GS). 2. To evaluate the concordance rate between AI and radiology residents (RR). 3. To compare the proportion of doubtful results identified by AI and the RR, and the rates confirmed by GS. Methods: We conducted an observational, double-blind, retrospective study on adult bone X-rays (BXRs) referred from the emergency department at our center between October and November 2022, with a final sample of 792 BXRs, categorized into three groups: large joints, small joints, and long-flat bones. Our AI system detects fractures, dislocations, and elbow effusions, providing results as positive, negative, or doubtful. We compared the diagnostic performance of AI and the RR against a senior radiologist (GS). Results: The study population’s median age was 48 years; 48.6% were male. Statistical analysis showed Sens = 90.6% and Spec = 98% for fracture detection by the RR, and 95.8% and 97.6% by AI. The RR achieved higher Sens (77.8%) and Spec (100%) for dislocation detection compared to AI. The Kappa coefficient between RR and AI was 0.797 for fractures in large joints, and concordance was considered acceptable for all other variables. We also analyzed doubtful cases and their confirmation by GS. 
Additionally, we analyzed findings not detected by AI, such as chronic fractures, arthropathy, focal lesions, and anatomical variants. Conclusions: This study assessed the impact of AI in a real-world clinical setting, comparing its performance with that of radiologists (both in training and senior). AI achieved high Sens, Spec, and AUC in bone fracture detection and showed strong concordance with the RR. In conclusion, AI has the potential to be a valuable screening tool, helping reduce missed diagnoses in clinical practice.

1. Introduction

The plain radiograph is the most widely used imaging technique globally and also the most frequently requested first-line test from emergency departments for identifying the most common osteoarticular pathologies, especially traumatic conditions such as fractures and dislocations [1].

Interpreting plain radiographs is a complex task that requires adequate training. In routine clinical practice, emergency physicians interpret these radiographs themselves, without waiting for the radiologist's report. This can lead to a significant number of diagnostic errors [2], of which missed fractures are the most common. According to Guly, missed fractures account for up to 80% of errors in the interpretation of emergency bone radiographs [3].

In response to this issue, several commercial companies have developed artificial intelligence (AI) software that detects different findings on plain radiographs, with promising results. AI is becoming established in plain radiography as a useful tool for both screening and decision-making, acting as a second reader of the imaging test [4].

This study hypothesizes that AI, when applied to the interpretation of urgent osteoarticular radiographs, demonstrates high sensitivity and specificity, as well as good agreement with the interpretations made by radiology residents.

Therefore, the main objective of our study is to assess the sensitivity and specificity of the interpretations made by the AI software available in our department (Arterys MSK AI, Milvue, hereinafter Milvue) in comparison to the interpretations of senior radiologists (considered the gold standard) of the plain osteoarticular radiographs of patients attending the emergency department at Hospital Universitario QuironSalud Madrid. Additionally, this study aims to evaluate the level of agreement between the interpretation made by Milvue and those made by radiology residents in training.

2. Materials and Methods

2.1. Study Type and Subjects

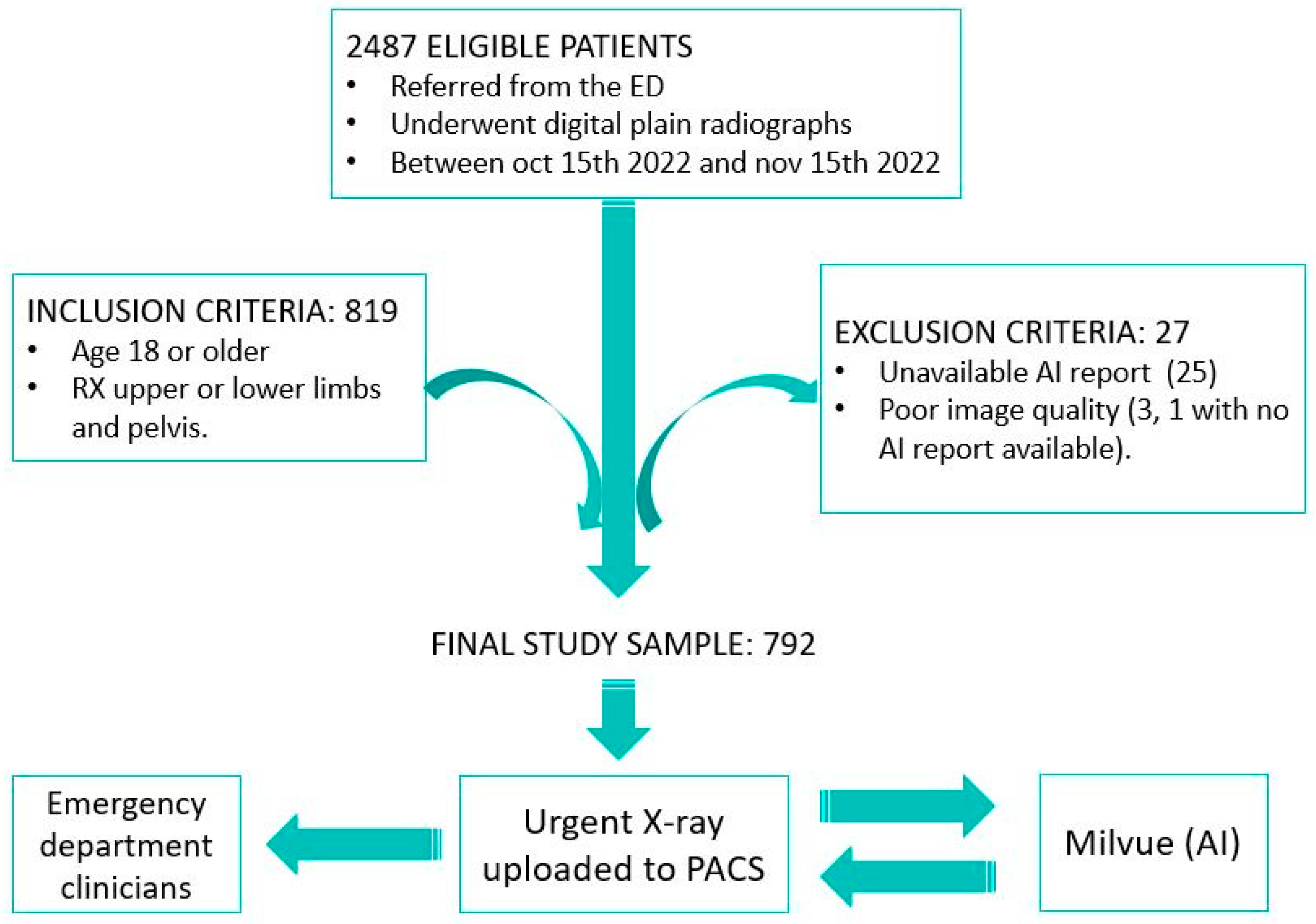

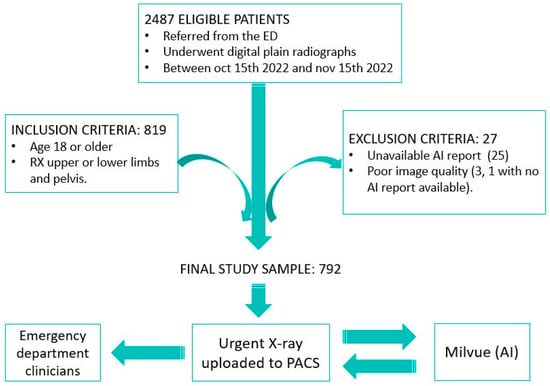

We designed an observational, retrospective, descriptive, and double-blind study. The sample included all osteoarticular radiographs (pelvis, upper limb, and lower limb; other anatomical locations, such as the spine or ribs, were excluded) performed on patients who visited the emergency department at Hospital Universitario QuironSalud Madrid between 15 October and 15 November 2022. The data were extracted from the hospital's Picture Archiving and Communication System (PACS) and analyzed retrospectively. The inclusion and exclusion criteria were applied to an initial sample of 2487 eligible patients. The only inclusion criterion was age over 18 years, to avoid potential interference of growth plate lines with fracture detection. The exclusion criteria were unavailability of the Milvue interpretation report (25 patients) and radiographs discarded for poor diagnostic quality (3 patients, one of whom also lacked the Milvue report). After applying these criteria, the final sample comprised 792 patients (Figure 1).

Figure 1.

Overview of the patient selection process for the study. After applying inclusion and exclusion criteria, 792 patients with X-rays of the appendicular skeleton and pelvis were included in the final sample. Musculoskeletal radiographs uploaded to the PACS were automatically analyzed by AI, which generates a separate report to be displayed alongside the original X-ray so that both radiologists and clinicians can review it.

2.2. Acquisition Protocol

The two conventional digital radiography units available at our facilities were used: Discovery XR656 HD (GE Healthcare) and YSIO X.pree (Siemens Healthineers).

2.3. Reading and Data Collection Protocol

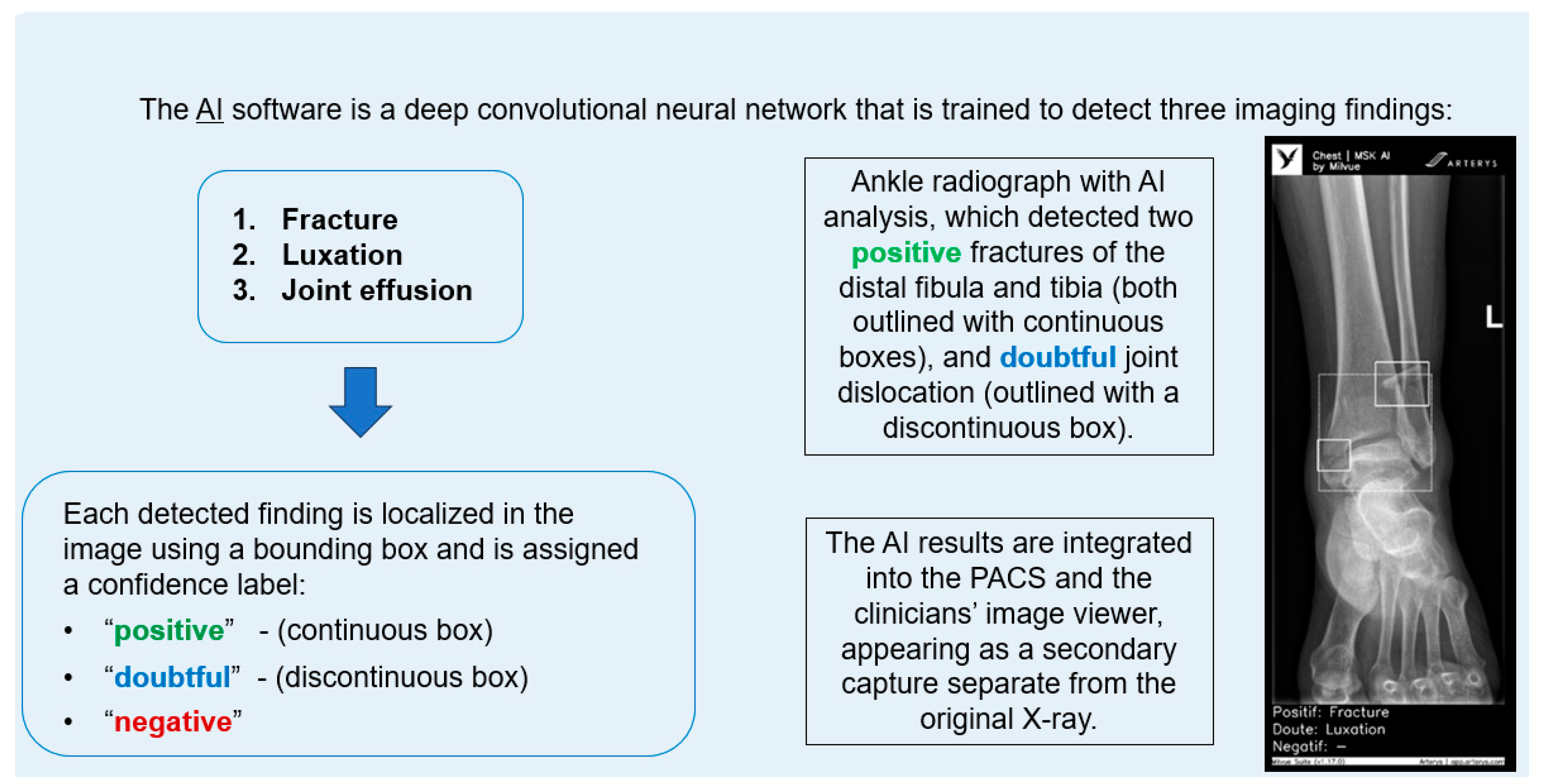

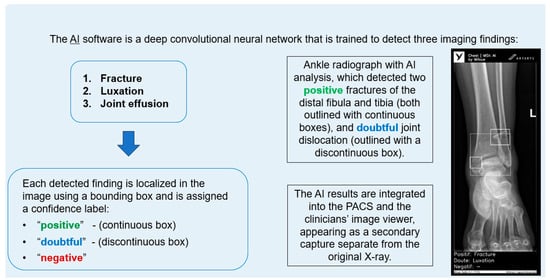

The AI software (Arterys Chest MICA v29.4.0, developed by Arterys, a company since acquired by the Paris-based company Milvue) was part of what was the world's first fully cloud-native, AI-powered, FDA-cleared online medical imaging platform. It was a clinical application (CE marked as a Class IIa medical device) designed to process appendicular skeleton and pelvis radiographic series and identify three imaging findings (categorical variables): fracture, dislocation, and elbow joint effusion.

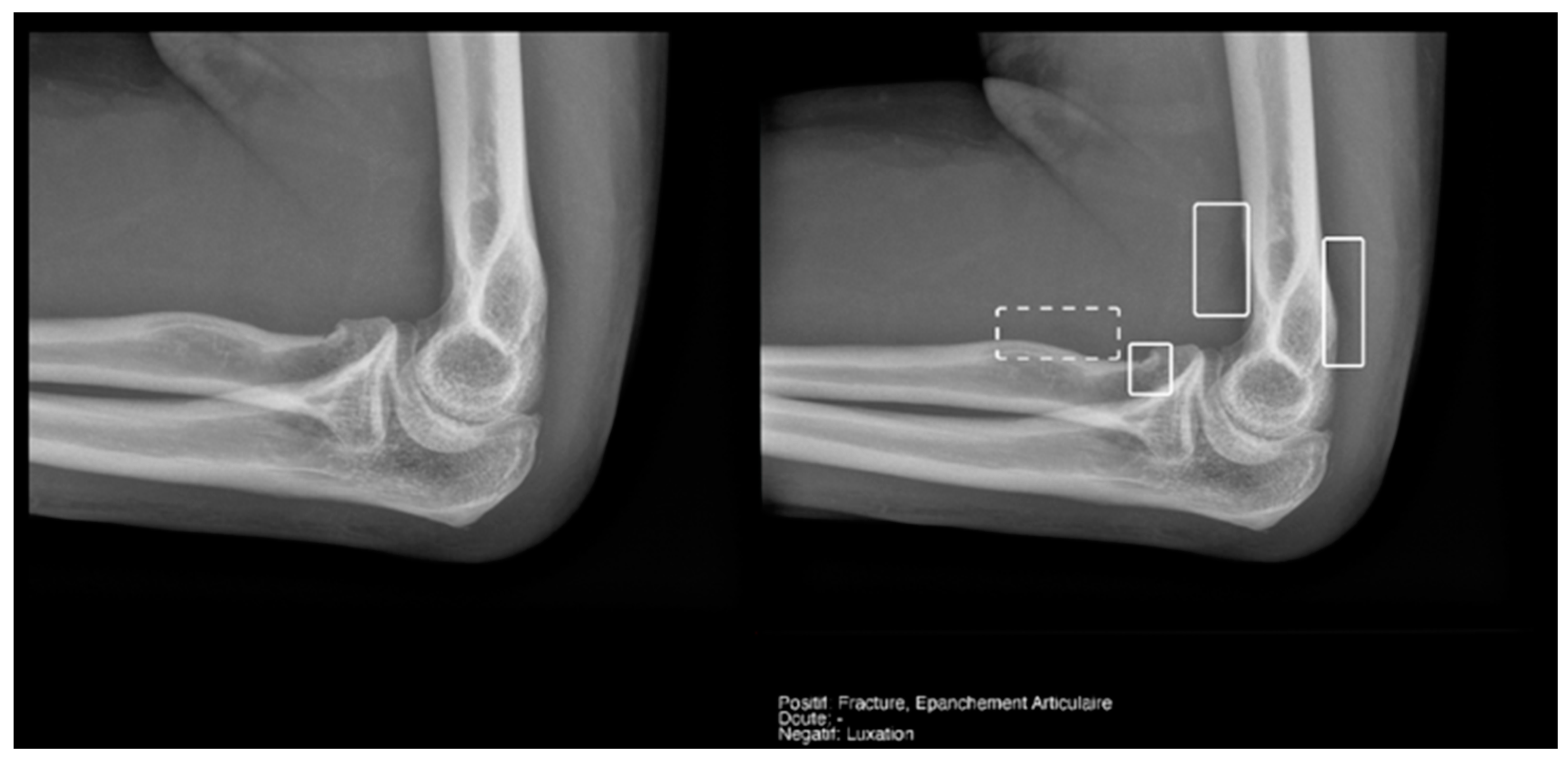

Each detected finding was localized in the image using a bounding box and was assigned a confidence label, either “positive” (continuous line) or “doubtful” (dashed line). Moreover, the algorithm provided a list of findings not detected in the current radiographic view (Figure 2).

Figure 2.

Diagram summarizing the functionality of the AI software and an example analyzing an ankle X-ray.

All findings were detected by a deep learning model that processed all radiographic views included in the series. The AI results were integrated into the institutional PACS and the clinicians’ image viewer, displayed in a secondary capture apart from the original radiograph. In practice, radiologists first evaluated the bone X-ray images, and then reviewed the AI results on the reading workstation to assist in completing the radiology report. When the requesting physicians examined the bone X-ray in the viewer, they could also see the AI’s analysis.

According to the information provided by the commercial company, the deep learning algorithm within the AI musculoskeletal product consists of a convolutional neural network that detects the aforementioned findings in each radiographic view. The model was trained and validated using 1,262,467 and 157,181 radiographic images, respectively, and was then calibrated on an independent database of 4759 images. The data used for model development came from a multicenter database that included both pediatric and adult patients. Our research team was not involved in the development or programming of the AI software. Our role was solely that of end users, acting as independent testers of a commercially available product, without any direct commercial relationship with the company.

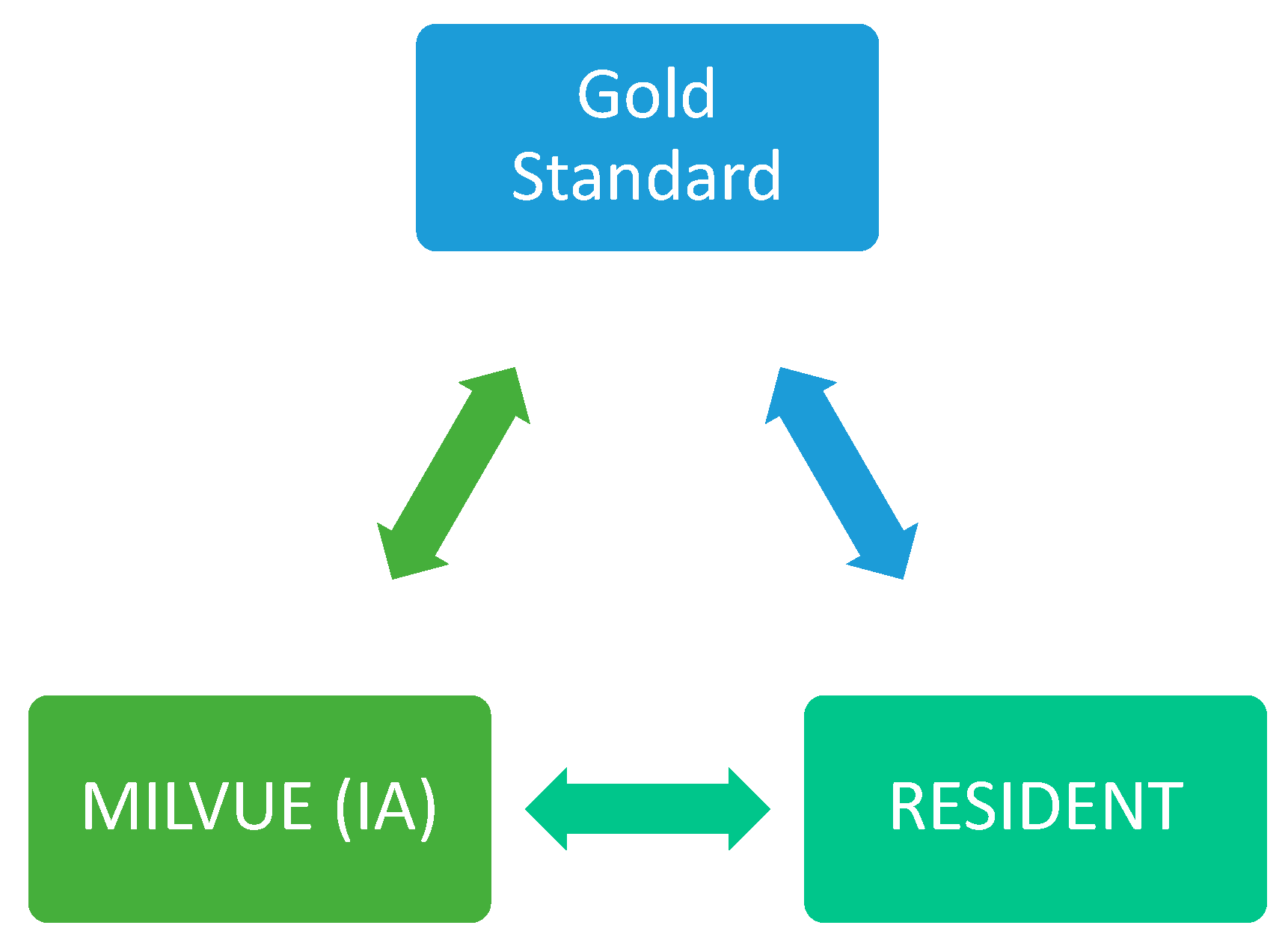

Each of the radiographs in our study was read and interpreted by the AI software (Milvue) as well as by the resident and the senior attending radiologist (gold standard). Only if the senior radiologist’s reading revealed any doubtful findings was a consensus sought with the reading of another senior radiologist. In all cases, neither the residents nor the attendings had access to the clinical data of the emergency episode through the hospital’s Electronic Health Record.

A first-year radiology resident was responsible for reading the radiographs, recording all study variables and a series of additional items in a table, without access to the report provided by Milvue for each of them. Their reading could result in a positive, negative, or doubtful outcome for each of the items listed in the “variables” section.

Subsequently, a senior attending radiologist with 13 years of experience independently reviewed these radiographs, also without access to the Milvue report. The senior attending radiologist's reading was considered the gold standard; therefore, it could only have a positive or negative outcome for the findings in question (the "doubtful" category could not be applied).

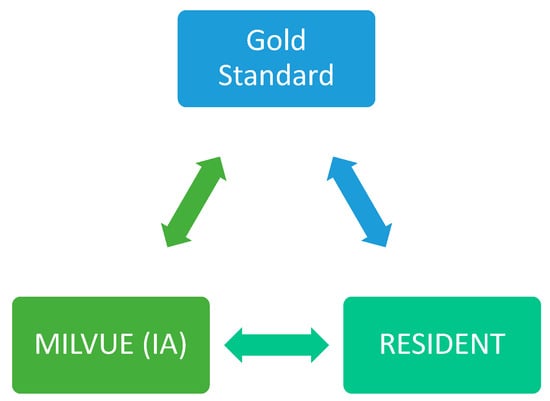

Finally, a second radiology resident independently collected the information provided by Milvue for each of these radiographs. This allowed for a subsequent comparison of the results obtained by the AI, the resident, and the senior radiologist, as well as an assessment of the level of agreement between the readings of the AI and the radiology resident (Figure 3).

Figure 3.

Graph showing the three readers of the X-rays. (GS: gold standard).

2.4. Study Variables

A series of sociodemographic and radiological variables were analyzed for each patient.

The sociodemographic variables were patient age and sex. We also recorded the number of radiographic projections performed, radiograph quality, joint group (small joints, large joints, or flat/long bones), and the joint studied.

The radiological variables that Milvue is trained to recognize and that we included in the study were as follows:

- Fracture.

- Dislocation.

- Joint effusion (only available for elbow radiographs).

In addition, we have studied other variables that Milvue is not trained to recognize. We believe that analyzing these variables is highly relevant for evaluating the overall impact of Milvue in a way that aligns with daily clinical practice, as well as its strengths and weaknesses compared to the systematic interpretation of radiographs performed by radiology residents and senior radiologists. These variables are as follows:

- Fracture sequelae, defined as a chronic fracture or dislocation.

- Arthropathy, which includes osteoarthritis and erosive arthritis; however, our sample contained no cases of erosive arthritis, so in our study, arthropathy refers exclusively to osteoarthritis.

- Focal lesion.

- Anatomical variant.

- Other findings: any findings not covered by the previous categories.

Our study was approved by the Ethics Committee of our institutional review board (Hospital Fundación Jiménez Díaz; Grupo QuironSalud) on 10 January 2023 (no 01/23) and reapproved after minor revisions on 28 February 2023 (no 04/23) with the code EO017-23_HUQM.

3. Results

3.1. Demographic Characteristics

We briefly summarize the distribution of the demographic characteristics of our sample, which is detailed in Table 1.

Table 1.

Study sample characteristics.

In our series, the median age of the patients was 48 years, and 51.4% were women (n = 407). Most radiographs were of optimal quality (97.2%) and had two projections per study (90.2%). We grouped the anatomical regions into three major groups to better evaluate diagnostic performance and the degree of agreement between Milvue and the residents. The groups were as follows:

- Small joints, which included hand, foot, fingers, calcaneus, wrist, and ankle. This was the most frequent group, accounting for 51.4% of the cases.

- Large joints, which included shoulder, elbow, pelvis or hip, and knee. This was the second most frequent group, representing 43.3% of the cases.

- Flat or long bones, which included clavicle, humerus, forearm, femur, and tibia. This was the least frequent group (5.3%).

3.2. Prevalence

As can be seen in Table 2, the gold standard reading allows us to estimate the prevalence of each diagnosis in the studied sample. Acute fractures stand out for their frequency (overall prevalence of 16.9%), with the highest prevalence in the small joint group (20.6%). The overall prevalences of the other variables evaluated by Milvue were 2.5% for dislocations and 25% for joint effusion on elbow radiographs.

Table 2.

Prevalence.

Among the variables not analyzed by Milvue, the 24.6% prevalence of the "other findings" variable stands out. However, this category proved to be poorly informative: its findings were recorded as free text, several of them could coexist in the same patient, and some were of little clinical relevance in the emergency setting. Examples include increased periarticular soft tissue, enthesopathy, and the presence of post-surgical material.

Also notable are the prevalences of arthropathy and anatomical variants, at 19.8% and 12.6%, respectively. Most cases of arthropathy corresponded to osteoarthritic changes in elderly patients, as would be expected given the age distribution of the study sample. The most prevalent anatomical variant was the fabella on knee radiographs, followed by other accessory bones on ankle and foot radiographs.

The low prevalence of focal bone lesions (1.9%) is explained by the sample having been drawn from studies requested by the emergency department. Most were benign bone lesions diagnosed incidentally; the exception was a pathological femoral fracture secondary to a bone metastasis from lung carcinoma.

3.3. Analysis of the Resident’s and AI’s Performance Validity Compared to the Gold Standard

3.3.1. Acute Fracture

The accuracy of the resident's and Milvue's readings compared to the gold standard is shown in Table 3, Table 4 and Table 5. Doubtful results were excluded from the calculation of sensitivity, specificity, PPV, NPV, and ROC area, and were analyzed separately: we report how many results were marked as doubtful by AI and by the resident, and how many of them were confirmed by the gold standard.
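The exclusion of doubtful results can be made concrete with a minimal sketch. It assumes each case is recorded as a (gold standard, reading) pair of string labels; the function name `reader_metrics`, the label strings, and the example counts are hypothetical and are not taken from the study's analysis software or data.

```python
from collections import Counter

def reader_metrics(pairs):
    """Accuracy of one reader against the gold standard (GS).

    pairs: iterable of (gold, reading) tuples. gold is "positive" or
    "negative"; reading is "positive", "negative", or "doubtful".
    Doubtful readings are left out of the 2x2 table and summarized
    separately as the share later confirmed positive by the GS.
    """
    table = Counter()
    doubtful = confirmed = 0
    for gold, reading in pairs:
        if reading == "doubtful":
            doubtful += 1
            confirmed += int(gold == "positive")
        else:
            table[(gold, reading)] += 1

    tp = table[("positive", "positive")]
    fn = table[("positive", "negative")]
    tn = table[("negative", "negative")]
    fp = table[("negative", "positive")]

    def ratio(num, den):
        # Metrics with an empty denominator are reported as NaN.
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "doubtful": doubtful,
        "doubtful_confirmed": ratio(confirmed, doubtful),
    }

# Hypothetical readings, for illustration only.
example = (
    [("positive", "positive")] * 9 + [("positive", "negative")]
    + [("negative", "negative")] * 18 + [("negative", "positive")] * 2
    + [("positive", "doubtful")] + [("negative", "doubtful")] * 3
)
print(reader_metrics(example))
```

In this toy example, the four doubtful calls do not count as errors; they only contribute to the separate confirmation rate (one of four, or 25%).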

Table 3.

Acute fracture.

Table 4.

Acute joint dislocation.

Table 5.

Elbow joint effusion.

Regarding fracture detection (Table 3), both readings present generally very high values for sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and area under the curve (AUC). In the overall cases, Milvue achieved a sensitivity of 95.8% (CI 90.5–98.6), a specificity of 97.6% (CI 96–98.6), and an AUC of 0.967 (0.948–0.986).
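Once doubtful results are set aside, each reading is a single binary call, so its ROC curve has one operating point, (1 − Spec, Sens), joined to (0, 0) and (1, 1). The trapezoidal area under that curve reduces to the mean of sensitivity and specificity. The methods do not state how the AUC was computed; we infer this relationship only because it reproduces the reported values, for example the overall AI fracture AUC and the AI elbow effusion AUC:

$$
\mathrm{AUC} = \frac{(1-\mathrm{Spec})\,\mathrm{Sens}}{2} + \frac{\mathrm{Spec}\,(\mathrm{Sens}+1)}{2} = \frac{\mathrm{Sens}+\mathrm{Spec}}{2}
$$

$$
\frac{0.958 + 0.976}{2} = 0.967, \qquad \frac{1.000 + 0.944}{2} = 0.972
$$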

The subgroup analysis shows a similar trend, most notably for the resident in the flat/long bones subgroup, where sensitivity reached 100% (CI 75.3–100) and specificity reached 100% (CI 87.7–100).

Furthermore, it is worth noting that Milvue generated more doubtful results than the resident (58 doubtful cases from the AI vs. 12 from the resident), with the positivity for those doubts being lower than in the resident’s reading (only 25.9% of the doubtful cases in the AI report were truly positive for fractures, while 50% of the doubtful results from the resident’s reading were ultimately fractures).

3.3.2. Acute Joint Dislocation

As mentioned earlier, the prevalence of dislocations was very low, so sensitivity estimates are only meaningful for the large joint group in the resident's reading, and even there the confidence intervals are very wide. The resident achieved a sensitivity of 77.8% (CI 52.4–93.6) in the overall cases, which rose to 84.6% (CI 54.6–98.1) in the large joint group. AI obtained lower sensitivities of 35% (CI 15.4–59.2) in the overall cases and 35.7% (CI 12.8–64.9) in the large joint group (Table 4).
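The width of these intervals follows from the small denominators. The sketch below computes an exact (Clopper-Pearson) binomial interval with only the standard library. The study does not name its interval method; we assume this family only because it is consistent with several reported bounds: 13 of 13 gives 75.3–100%, 6 of 6 gives 54.1–100%, 7 of 7 gives 59.0–100%, and 17 of 18 gives about 72.7–99.9%. The helper names are our own.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def _root_increasing(g, iters=200):
    """Bisection on [0, 1] for a function g that increases with p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(x, n, alpha=0.05):
    """Exact two-sided (1 - alpha) interval for x successes out of n."""
    if n <= 0 or not 0 <= x <= n:
        raise ValueError("need n > 0 and 0 <= x <= n")
    # Lower bound solves P(X >= x) = alpha / 2; it is 0 when x == 0.
    lower = 0.0 if x == 0 else _root_increasing(
        lambda p: (1 - binom_cdf(x - 1, n, p)) - alpha / 2)
    # Upper bound solves P(X <= x) = alpha / 2; it is 1 when x == n.
    upper = 1.0 if x == n else _root_increasing(
        lambda p: alpha / 2 - binom_cdf(x, n, p))
    return lower, upper

for x, n in [(13, 13), (6, 6), (7, 7), (17, 18)]:
    lo, hi = clopper_pearson(x, n)
    print(f"{x}/{n}: {100 * lo:.1f}-{100 * hi:.1f}%")
```

Exact intervals are conservative, which is why a perfect 6 of 6 still leaves a lower bound near 54%.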

On the other hand, the specificity values were excellent for all readings and all groups, ranging from 99.5% to 100%. The resident also achieved excellent AUC values in all subgroups, especially in the large joint group, with an AUC of 0.923 (CI 0.821–1), whereas AI only achieved a good AUC in the small joint subgroup, with an AUC of 0.831 (CI 0.504–1).

The specific case of acromioclavicular dislocations is particularly striking, as none of these cases were read as positive or doubtful by Milvue, making it impossible to perform the corresponding statistical calculations.

3.3.3. Elbow Joint Effusion

Our sample included 28 elbow X-rays, of which only 7 showed joint effusion. This low prevalence affected the calculation of the other parameters. Thus, although sensitivity was 100% (CI 54.1–100) in the Milvue reading and 100% (CI 59–100) in the resident's reading, the 95% confidence intervals were very wide (Table 5).

In contrast, specificity and negative predictive values were more reliable for both readings, with narrower confidence intervals and excellent areas under the curve. Notably, the AI reading achieved a specificity of 94.4% (CI 72.7–99.9), with an AUC of 0.972 (CI 0.918–1).

The AI recorded four doubtful cases of joint effusion, of which only one was confirmed by the gold standard. The resident did not record any doubtful cases.

3.3.4. Degree of Agreement Between the Resident and AI

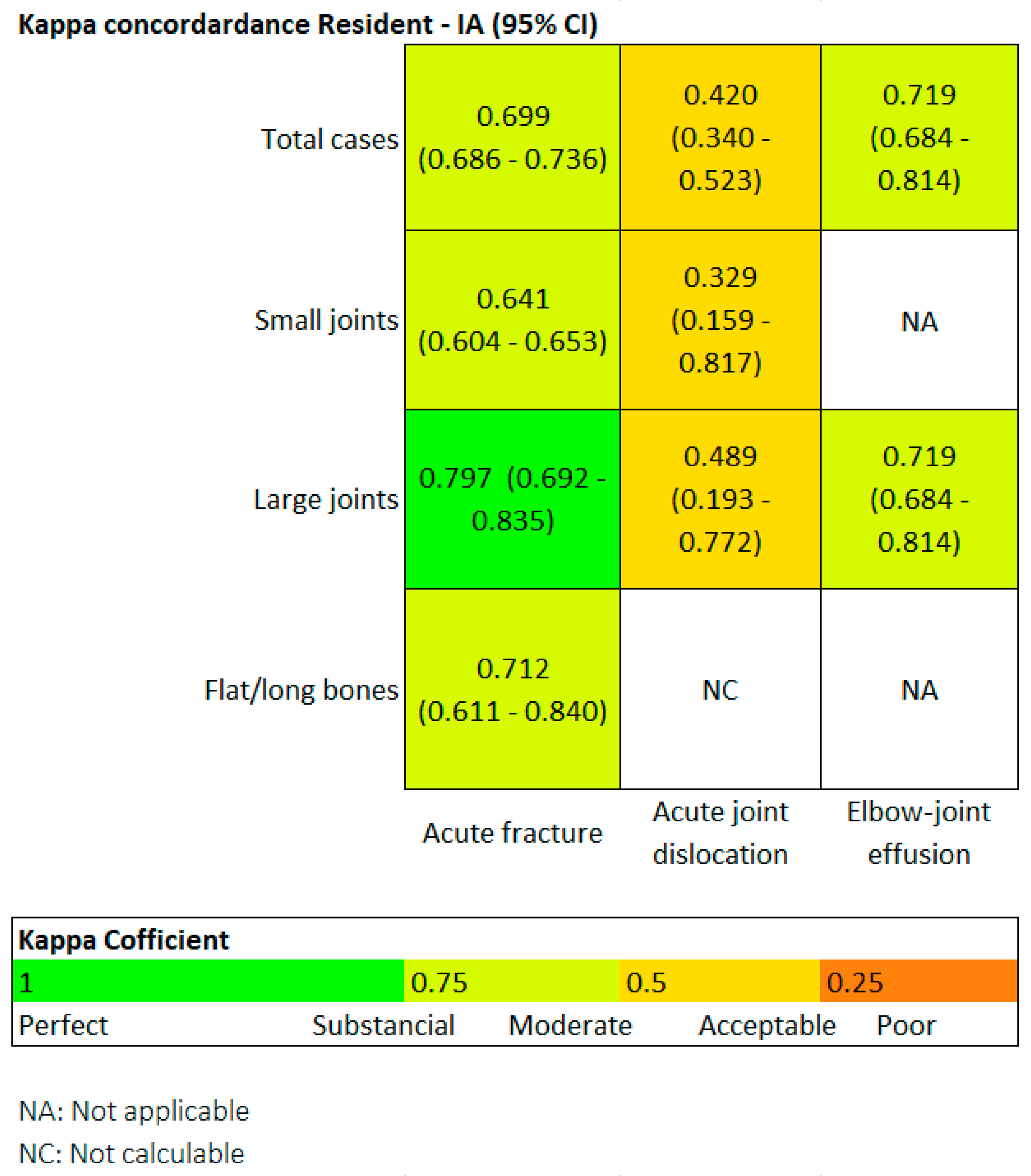

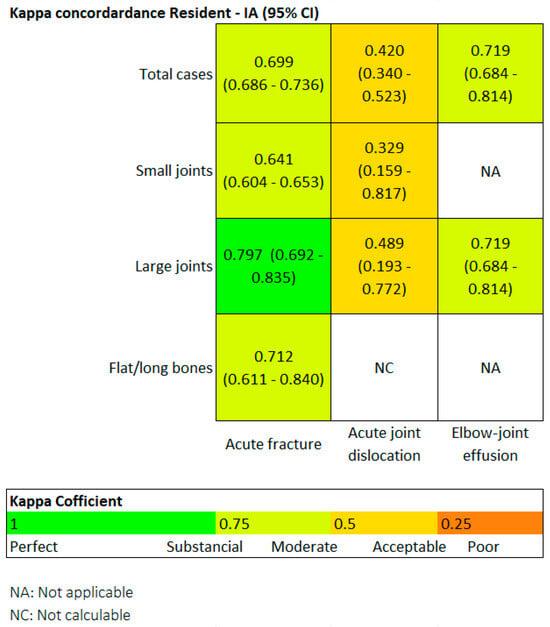

Finally, we also evaluated the degree of agreement between the two readers using the Kappa coefficient. Generally speaking, a Kappa value above 0.4 indicates moderate agreement, above 0.6 is substantial, and above 0.8 is considered almost perfect.
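Cohen's kappa corrects raw agreement for the agreement expected by chance from each reader's marginal frequencies. Below is a minimal sketch. Labels may be any hashable values, so it can be applied to positive/negative/doubtful readings; however, the study does not state how doubtful results were handled when computing Kappa, and treating them as a third category is our assumption. The function names are illustrative, and the two lowest bands follow the Landis and Koch scale rather than the text.

```python
from collections import Counter

def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two readers labelling the same cases."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    # Chance agreement: sum over categories of the readers' marginal proportions.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if expected == 1:
        return float("nan")  # both readers used one identical category only
    return (observed - expected) / (1 - expected)

def agreement_band(kappa):
    """Upper three bands as stated in the text; lower two per Landis and Koch."""
    if kappa > 0.8:
        return "almost perfect"
    if kappa > 0.6:
        return "substantial"
    if kappa > 0.4:
        return "moderate"
    if kappa > 0.2:
        return "fair"
    return "slight" if kappa >= 0 else "poor"

# Hypothetical readings from a resident and the AI on the same five cases.
resident = ["positive", "negative", "negative", "doubtful", "positive"]
ai = ["positive", "negative", "doubtful", "doubtful", "negative"]
k = cohens_kappa(resident, ai)
print(round(k, 3), agreement_band(k))
```

On these bands, the Kappa values reported below for fractures (0.69 overall and 0.79 in large joints) both fall in the "substantial" range.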

Figure 4 shows the Kappa values as a heat map. For fractures, Kappa was 0.69 in the overall group and particularly high in the large joint group (0.79). For joint dislocations, agreement was moderate in both the overall group and the large joint group, with Kappa values around 0.4.

Figure 4.

Heat map. For fractures in the overall group, Kappa was 0.69, and it was particularly high for the large joint group (0.79).

In the small joint group, the agreement was only fair, with a Kappa of 0.3. The flat and long bones group could not be assessed because Milvue did not identify any dislocations in this group. Regarding elbow joint effusion, the agreement was substantial, with a Kappa value of 0.719.

However, Kappa values depend on the prevalence of the evaluated condition, and the prevalence of fractures was considerably higher than that of dislocations or elbow joint effusion.
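A small worked example illustrates this prevalence effect. The two hypothetical 2x2 tables below (not study data) each show 90% raw agreement between two readers. When positives are rare, however, the chance agreement on negatives is already high, so Kappa drops sharply. The helper name `kappa_2x2` is our own.

```python
def kappa_2x2(both_pos, only_a_pos, only_b_pos, both_neg):
    """Cohen's kappa from a 2x2 agreement table between readers A and B."""
    n = both_pos + only_a_pos + only_b_pos + both_neg
    observed = (both_pos + both_neg) / n
    a_pos = (both_pos + only_a_pos) / n  # reader A's positive rate
    b_pos = (both_pos + only_b_pos) / n  # reader B's positive rate
    # Chance agreement: both positive by chance plus both negative by chance.
    expected = a_pos * b_pos + (1 - a_pos) * (1 - b_pos)
    return (observed - expected) / (1 - expected)

# Hypothetical tables of 100 cases, each with 90% raw agreement.
balanced = kappa_2x2(45, 5, 5, 45)  # about half of the cases positive
rare = kappa_2x2(2, 5, 5, 88)       # about 7% of the cases positive
print(f"balanced: {balanced:.2f}, rare: {rare:.2f}")
```

With identical raw agreement, Kappa falls from 0.80 to about 0.23. For this reason, the lower Kappa values for dislocations should be read alongside their low prevalence.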

3.4. Analysis of the “Other Findings” That Milvue Has Not Been Trained to Detect

Below, we carry out a brief analysis of the variables that our AI software has not been trained to detect and that are reflected in Table 6. For the calculation of these variables, we only took into account the reading by the resident and the gold standard.

Table 6.

“Other findings” that Milvue has not been trained to detect.

Because most of these variables had a low prevalence, only two of them lend themselves to meaningful analysis: anatomical variants and arthropathy. For anatomical variants, sensitivity reached 90% in the large joint group, specificity and NPV were higher than PPV, and AUCs were particularly high in the large joint group. For arthropathy, sensitivity was highest in the large joints (79.8%), with high specificity and NPV values and AUC values ranging from 0.74 to 0.891.

4. Discussion

A large share of AI applications in medicine focus specifically on radiology [5,6], and the number of related publications has grown exponentially in recent years [7], especially in emergency radiology [8,9,10].

The incorporation of AI in musculoskeletal radiology is bringing a significant change in the diagnosis and analysis of pathologies [11]. Its use allows the automatic detection of fractures [12,13], dislocations [14,15], and bone lesions with great precision [16]. Likewise, it facilitates the detection of joint effusions [17,18], the estimation of bone age using the Greulich and Pyle method [19,20], and the measurement of angles in bone structures such as the Cobb angle for scoliosis [21,22].

AI can also generate three-dimensional reconstructions that support surgical planning, and it can help diagnose and monitor the progression of metabolic diseases such as osteoporosis [23,24].

Many of these applications are particularly relevant where radiologists work remotely, where demand for radiological examinations is high (such as in the emergency department), or where both circumstances apply. In these settings, radiologists may report examinations only on request, or X-rays may be evaluated directly by emergency physicians.

In this field, studies have shown that the use of AI increases diagnostic accuracy on plain radiographs, allowing both general practitioners and radiology residents to detect various findings more reliably [25,26]. According to the study by Oppenheimer et al., the use of AI in the interpretation of X-rays by radiology residents could increase the sensitivity for fracture detection by up to 7% [27].

In plain musculoskeletal radiography, the findings AI has been trained to detect vary with each provider's design, but in general, these tools have been validated for the detection of acute fractures. Numerous studies have evaluated their potential in both adults and pediatric populations. In adults, Duron et al. reported that AI increases physicians' diagnostic sensitivity and reduces the number of false positives without increasing reading time. They also reported better results for AI than for humans, although their study design has an inclusion bias, as the most obvious fractures were excluded from the sample [1]. Wood reported similar findings, showing that radiologists using AI detected more fractures, read faster, and were less uncertain in their diagnoses [28].

In the same way, and including both adult and pediatric populations, Regnard et al. describe in their study that AI is capable of increasing the detection of fractures that may go unnoticed by radiologists, so that the combination of AI and radiologists results in the best diagnostic approach [14].

In our study, we aim to reflect routine clinical practice, not only evaluating the accuracy of Milvue but also comparing the AI results with those of a radiology resident. This comparison is important, as the interpretation of a trauma specialist can differ significantly from that of emergency department clinicians, which may complicate direct comparisons. To make the evaluation more relevant, we chose to use the radiology resident’s readings as a closer approximation to the interpretations of emergency department clinicians.

Regarding fracture detection (Table 3), both Milvue and the resident showed generally very high values for Sens, Spec, PPV, NPV, and AUC, similar to those described in the literature (Figure 5 and Figure 6).

Figure 5.

True positive fracture of the distal fibula correctly recorded by AI and radiology resident.

Figure 6.

Fracture of the proximal phalanx of the fifth finger. It was recorded as doubtful by AI, but as positive by the radiology resident.

In the overall cases, Milvue achieved a sensitivity of 95.8%, a specificity of 97.6%, and an AUC of 0.967. These results are consistent with the literature. In the study by Franco et al., AI achieved a sensitivity for fracture detection of 91.3% (CI 87.6–94.3) and a specificity considerably lower than ours, at 76.7% (CI 71.5–81.3) [29]. Similarly, Xie et al. obtained high sensitivity that varied by location between 0.83 and 0.91, with an AUC greater than 0.92 for all studied locations [30].

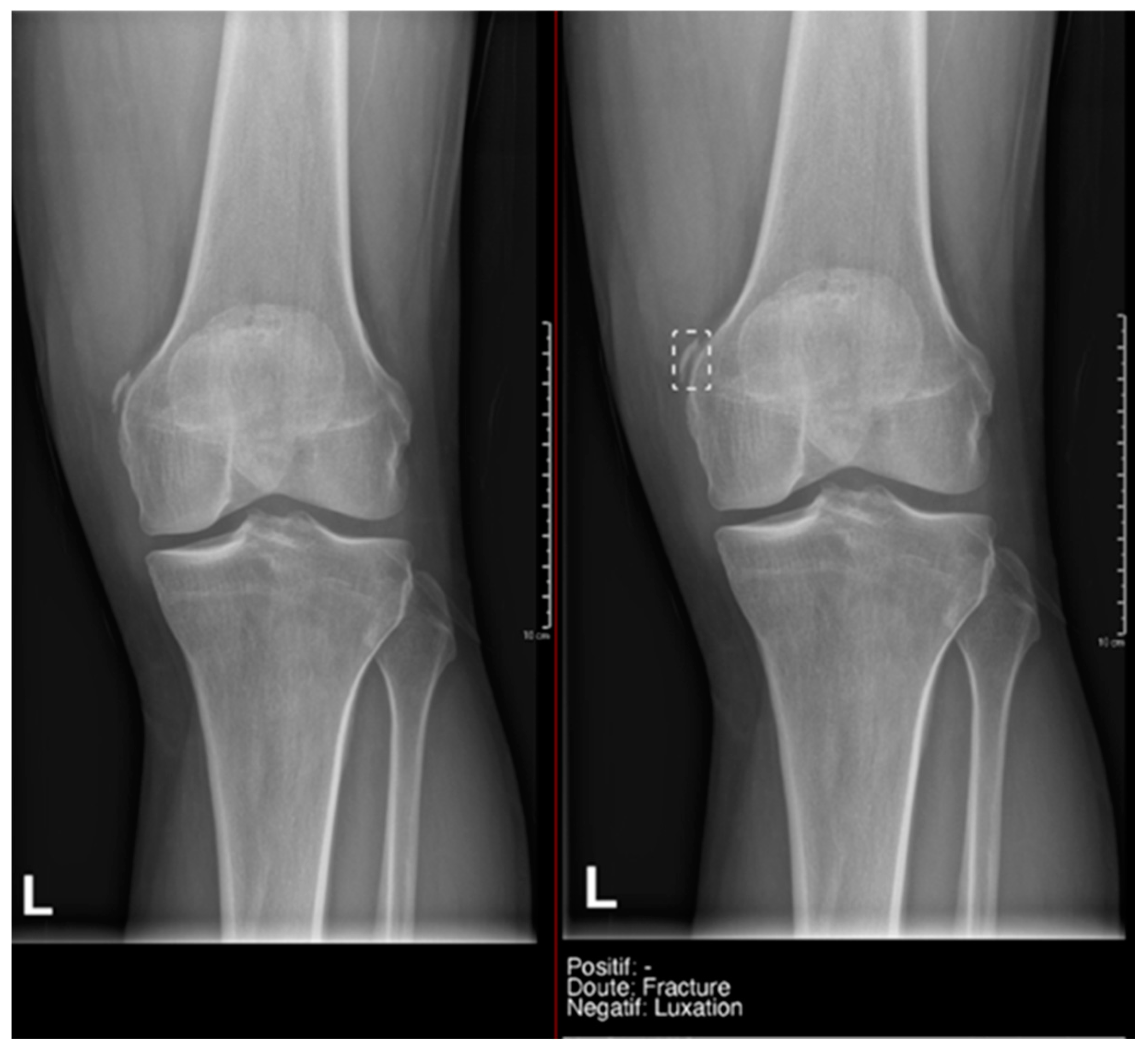

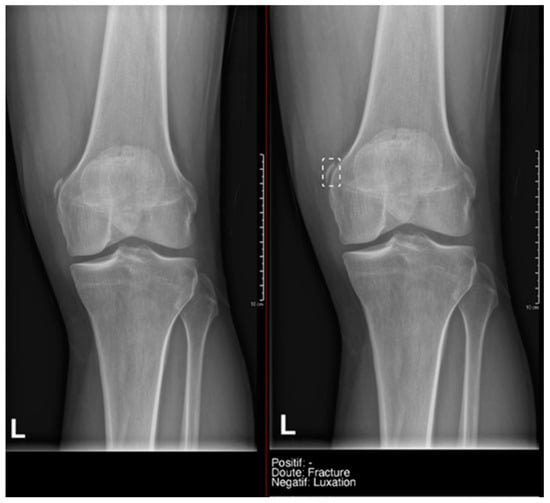

What we found particularly noteworthy was that in the overall group, we recorded 12 doubts by the resident, and 50% of them were confirmed by the gold standard. Milvue provided a higher number of doubtful cases—58 cases—but the proportion of positive cases was lower (only 25.9%) (Figure 7).

Figure 7.

Example of a doubtful fracture marked with a dashed-line box on the AP knee radiograph, which corresponded to a Pellegrini–Stieda lesion.

This difference in the confirmation rate of doubtful cases was partly driven by anatomical variants at the following sites:

- Ankle and foot: On six occasions, Milvue marked the fracture variable as doubtful in cases with a bipartite medial sesamoid (two patients), an accessory sesamoid at the base of the 5th metatarsal, symphalangism, os peroneum, and os naviculare (Figure 8).

Figure 8.

Example of an anatomical variant (bipartite hallux sesamoid), which was recorded as a doubtful fracture by AI and as negative by the radiology resident.

- Hand: Milvue marked the fracture variable as doubtful in a case with multiple accessory ossicles.

- Wrist: On four occasions, Milvue marked the fracture variable as doubtful in cases of os paranaviculare, os trapezium secundarium, os ulnar styloid, and os paratrapezium. In contrast, Milvue correctly did not flag a fracture in three cases of os ulnar styloid, two cases of accessory ulnar styloid, and cases of os hypolunatum and os epilunatum.

Overall, the presence of anatomical variants did not cause any diagnostic confusion for the resident, except in two patients with os paranaviculare and os paratrapezium, which were mistakenly classified as fractures.

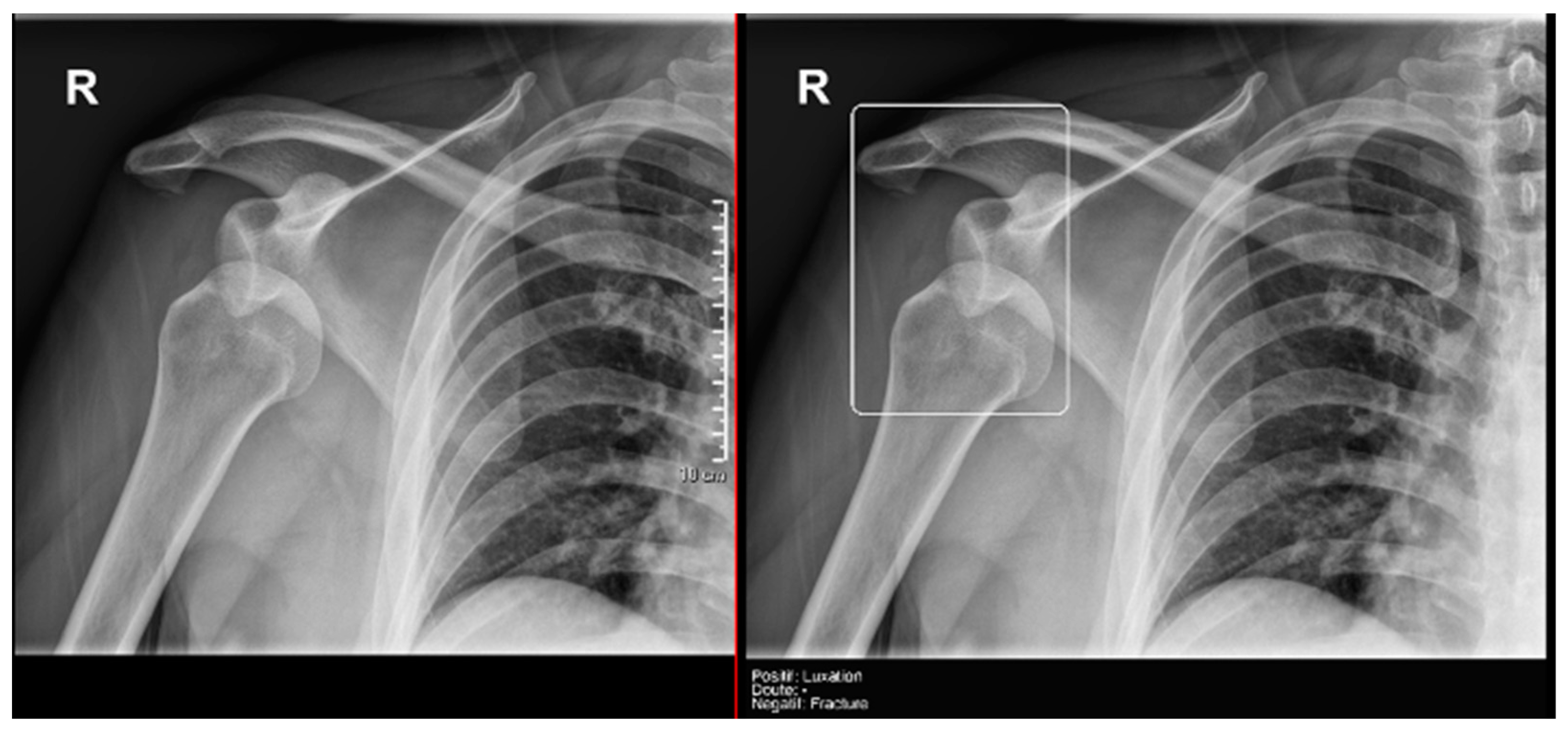

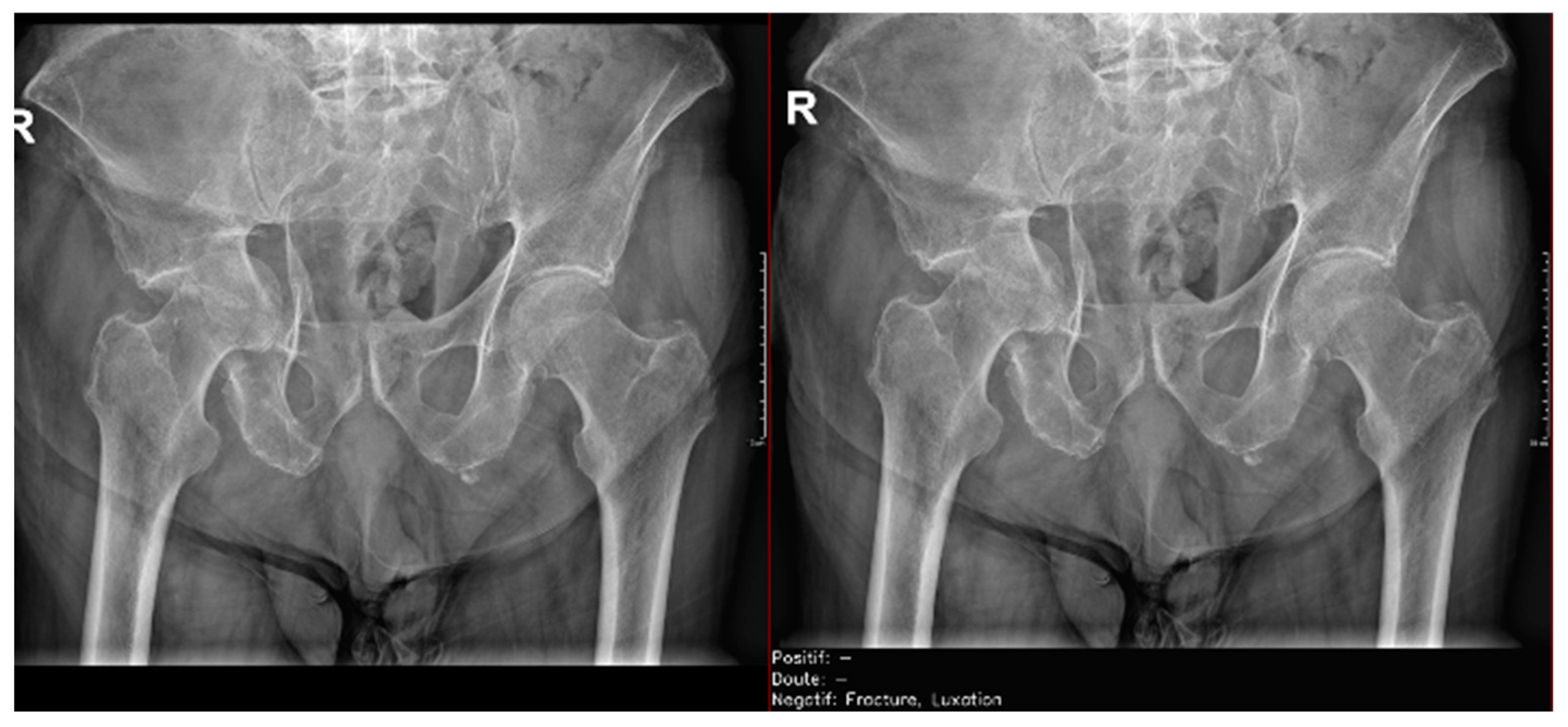

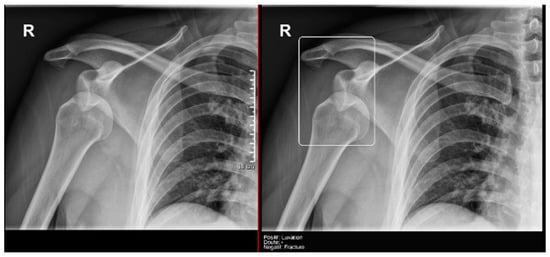

Regarding the detection of dislocations (Table 4), the resident's reading showed better sensitivity than the AI's, with a sensitivity of 77.8% in the overall cases that rose to 84.6% in the large joint group. In contrast, AI showed lower sensitivity values, with 35% in the overall cases and 35.7% in the large joints. However, specificity values were excellent for all readings and groups, ranging from 99.5% to 100% (Figure 9 and Figure 10).

Figure 9.

Glenohumeral joint dislocation that was correctly recorded by both the AI and radiology resident.

Figure 10.

Pelvic fracture and coxofemoral dislocation, which was recorded as negative by AI but correctly detected by the radiology resident.

All these results were heavily influenced by the low prevalence of dislocations in our sample. In the literature, Regnard et al. obtained higher sensitivity and specificity values for AI readings than those in our study, at 89.9% and 99.1%, respectively [14]. Their results nonetheless agree with ours in that sensitivity and specificity were higher for the radiologist's reading than for AI.

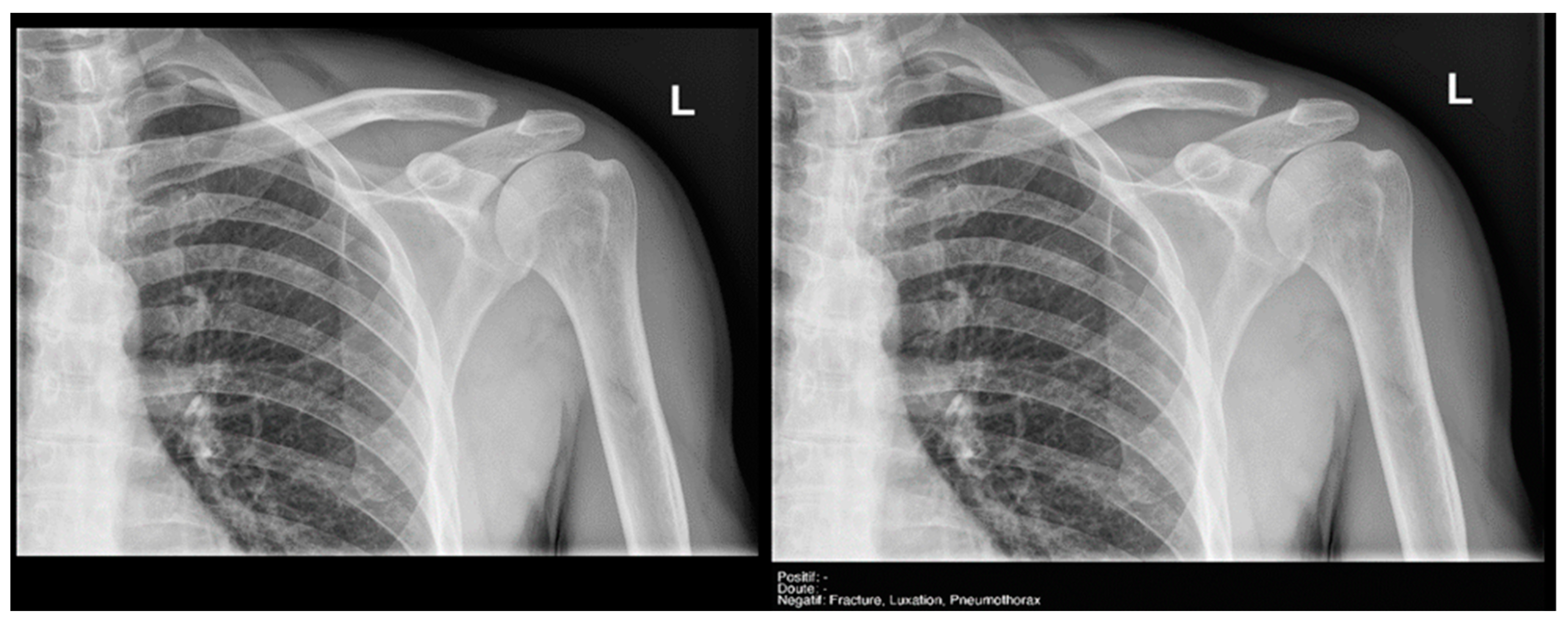

Finally, the specific case of acromioclavicular dislocations is particularly striking, as none of these cases were reported as positive or doubtful by Milvue (Figure 11). Nor have we found studies that evaluate the diagnostic capacity of AI for detecting acromioclavicular dislocations. We therefore propose that future updates of the AI system expand the training dataset to include more acromioclavicular joint cases, particularly pathological ones, to improve its ability to detect these dislocations.

Figure 11.

Acromioclavicular joint dislocation recorded as negative by AI and positive by radiology resident.

Given our interest in integrating AI tools into clinical practice, we met with Milvue representatives on 11 May 2023, after the study had concluded. During this meeting, we presented our findings, including the pitfalls and areas for improvement we had identified. The company welcomed our feedback and committed to considering user input worldwide to refine the software in future updates and improve its diagnostic performance.

Regarding the detection of joint effusion in the elbow (Table 5), the low prevalence of joint effusion in our sample affected the calculation of the variables. Although a sensitivity of 100% was found in both the Milvue and the resident’s readings, the confidence intervals were very wide. On the other hand, specificity and negative predictive values were more reliable for both readings, with the AI reading standing out in particular, showing a specificity of 94.4% (Figure 12).

Figure 12.

Radial head fracture and joint effusion, correctly detected by AI and radiology resident.

The data obtained in our study are consistent with those presented in the study by Huhtanen [17], in which, after analyzing more than 215 lateral elbow X-rays using AI and comparing them with three radiologists, they obtained sensitivity, specificity, and AUC values for the AI detection of joint effusion in the adult group of 91.7 (CI 87–90.1), 92.4 (CI 89.2–95.6), and 0.966 (CI 0.96–0.97), respectively.

In contrast, Dupuis et al. [31] analyzed 1637 pediatric emergency elbow X-rays with AI and obtained a sensitivity >89% for the detection of fracture and joint effusion, similar to values described in the literature. However, their specificities were lower than those reported in previous studies, at 63% for joint effusion and 77% for fractures. They also reported NPV >92% and PPV between 54% and 73%. These results may have been influenced by the age group of the sample.

Considering our results together with those in the literature, both the AI system and the resident achieved NPVs greater than 95% and AUC values above 0.8, except for joint dislocations, probably because of their low prevalence in our sample. For most variables, the lower bounds of the 95% CIs were also high, supporting the reliability of these estimates. This suggests that AI could serve as an effective screening tool in emergency departments, efficiently identifying normal musculoskeletal radiographs and integrating smoothly into the workflow. By increasing clinicians' autonomy, AI could help reduce radiologists' workload and let them focus on more complex cases. However, because many of the cases classified as doubtful by AI were not confirmed as true positives, emergency physicians should continue to consult radiologists about these cases. AI can assist in the diagnostic process, but it cannot yet replace the expertise and judgment of clinicians, which remain essential, particularly in uncertain or complex cases.
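The screening argument rests on NPV, which depends on prevalence as well as on reader accuracy. The sketch below applies Bayes' theorem to the reported overall AI fracture figures (Sens 95.8%, Spec 97.6%, gold standard prevalence 16.9%). This is an illustrative projection, not the study's observed NPV, which was computed after excluding doubtful cases. The second call combines the reported AI dislocation sensitivity (35%) and prevalence (2.5%) with an assumed specificity of 99.5%. It shows that a rare condition can yield an NPV near 98% even when most positives are missed. The function name is our own.

```python
def predictive_values(sens, spec, prevalence):
    """PPV and NPV from sensitivity, specificity, and prevalence (Bayes' theorem)."""
    tp = sens * prevalence              # true positives per case read
    fn = (1 - sens) * prevalence        # missed positives
    tn = spec * (1 - prevalence)        # true negatives
    fp = (1 - spec) * (1 - prevalence)  # false alarms
    return tp / (tp + fp), tn / (tn + fn)

# Reported overall AI fracture figures (illustrative projection).
ppv, npv = predictive_values(0.958, 0.976, 0.169)
print(f"fracture: PPV {ppv:.3f}, NPV {npv:.3f}")

# Reported AI dislocation sensitivity and prevalence; specificity 0.995 is assumed.
ppv_d, npv_d = predictive_values(0.35, 0.995, 0.025)
print(f"dislocation: PPV {ppv_d:.3f}, NPV {npv_d:.3f}")
```

A high NPV on its own therefore does not establish good screening performance for rare findings such as dislocations; it should be interpreted together with sensitivity.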

Finally, we analyzed the agreement between the readings of the AI and those of the radiology resident, whose reading we considered comparable to that of an emergency physician. To our knowledge, this aspect has not previously been analyzed in the literature. Agreement was assessed with the Kappa coefficient and is shown as a heat map in Figure 4.
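For reference, Cohen's Kappa compares the observed agreement p_o with the agreement p_e expected by chance from each reader's label frequencies: kappa = (p_o - p_e) / (1 - p_e). The function below is a minimal, dependency-free sketch of this formula for two readers. The labels in the usage example are invented, and this is not necessarily the software used in the study's statistical analysis.

```python
from collections import Counter


def cohens_kappa(reader_a, reader_b):
    """Cohen's kappa for two readers labelling the same cases."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("readers must label the same, non-empty set of cases")
    n = len(reader_a)
    # Observed agreement: fraction of cases with identical labels
    p_o = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    # Chance agreement: products of each reader's marginal label frequencies
    freq_a, freq_b = Counter(reader_a), Counter(reader_b)
    categories = set(freq_a) | set(freq_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in categories) / n**2
    if p_e == 1.0:
        return float("nan")  # both readers used one identical label; kappa is undefined
    return (p_o - p_e) / (1 - p_e)


# Invented example: AI vs resident fracture calls on eight radiographs
ai = ["fracture", "fracture", "normal", "normal", "normal", "fracture", "normal", "normal"]
resident = ["fracture", "normal", "normal", "normal", "normal", "fracture", "normal", "fracture"]
kappa = cohens_kappa(ai, resident)
print(f"kappa = {kappa:.3f}")
```

Here the readers agree on 6 of 8 cases (p_o = 0.75), but chance alone would produce agreement of about 0.53, so kappa is considerably lower than raw agreement. For weighted variants, scikit-learn's `cohen_kappa_score` is a standard alternative.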

For fracture detection in the overall sample, the Kappa coefficient was 0.69 (moderate agreement). It was particularly high in the large-joint subgroup, at 0.79 (substantial/almost perfect agreement).

Moderate agreement was also noteworthy, with a Kappa coefficient of 0.71. For the detection of dislocations, agreement ranged from slight to acceptable.

Despite the care taken in designing the study, we acknowledge several limitations. First, it was a single-center study, and the population analyzed was limited to adults. Second, the gold standard was the interpretation of a senior radiologist, and no other confirmatory tests were considered.

Another limitation is the low prevalence of certain conditions in our sample. This may affect the results, particularly statistical parameters such as the areas under the ROC curve, confidence intervals, and Kappa values.
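The sketch below illustrates why low prevalence widens confidence intervals. It computes a Wilson score interval for a proportion such as sensitivity. The counts are hypothetical: the same observed sensitivity of 80% is estimated once from 10 positive cases and once from 100. The Wilson method is chosen here only for illustration; this section does not state which interval method the study used.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.95):
    """Wilson score interval for a binomial proportion (e.g., sensitivity = TP / (TP + FN))."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z / denom * sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


# Same observed sensitivity (80%) from few vs many positive cases (hypothetical counts)
for tp, positives in ((8, 10), (80, 100)):
    low, high = wilson_interval(tp, positives)
    print(f"{tp}/{positives}: 95% CI {low:.3f}-{high:.3f} (width {high - low:.3f})")
```

Ten times as many positive cases narrows the interval roughly threefold in this example, consistent with the approximate 1/sqrt(n) scaling of the interval width.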

Furthermore, the readings of emergency department physicians were not included. To reflect routine clinical practice, we aimed not only to evaluate the accuracy of Milvue but also to compare its results with those of a radiology resident. We considered the resident's readings a closer approximation to those of experienced emergency department clinicians. Further research is needed to determine whether AI achieves accuracy comparable to that of emergency department physicians in interpreting musculoskeletal radiographs.

An additional important limitation is the lack of clinical information, which is crucial in the emergency setting. Without this context, lesions are harder to localize, because the reader cannot direct attention to the clinically relevant areas.

Finally, our study was limited to the detection of joint effusion in the elbow, because the AI model was not trained to identify effusion in other joints. We hypothesize that, as AI technology develops and models are extended to other joints, future studies will be able to evaluate, and potentially improve, the detection of joint effusion beyond the elbow.

5. Conclusions

Our study demonstrates that AI applied to the interpretation of standard X-ray examinations performs promisingly in detecting fractures in large joints, making it a useful screening tool in emergency departments. However, the AI produced more “doubtful” results than the resident, and a lower proportion of these were confirmed as positive. It also showed limitations in detecting dislocations, particularly in the acromioclavicular joint.

Interobserver agreement between the AI and the resident ranged from moderate to substantial for most variables, supporting the reliability of the AI readings. These findings underscore the potential of AI to complement medical diagnosis, helping to reduce the rate of missed injuries and to optimize screening in high-demand clinical settings.

Author Contributions

Conceptualization, V.M.D.V.; methodology, R.C.A., A.F.A., J.C.A., M.R.R. and V.M.D.V.; resources, A.D.M. and A.Á.V.; project administration, R.C.A.; data curation, A.D.M., J.L.A., D.G.C. and L.S.G.; formal analysis, C.A.-V. and I.J.T.V.; visualization, A.D.M.; writing—original draft preparation, A.D.M. and J.L.A.; writing—review and editing, R.C.A. and A.F.A.; supervision, R.C.A., A.F.A. and V.M.D.V. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Hospital Fundación Jiménez Díaz; Grupo QuironSalud (EO017-23_HUQM 28 February 2023) for studies involving humans.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original data presented in the study are openly available in FigShare at doi:10.6084/m9.figshare.28250348.

Acknowledgments

This study was previously presented, with partial results, as scientific posters at the following conferences:

1. Díaz Moreno, A.; López Alcolea, J.; García Castellanos, D.; Cano Alonso, R.; Fernández Alfonso, A.; Martínez de Vega, V.; Álvarez Vázquez, A. Assessment of an AI aid in detection of adult appendicular skeletal fractures, joint dislocation and joint effusion by emergency radiologists. In Proceedings of the RSNA Conference, Chicago, USA, 1 December 2024.
2. Díaz Moreno, A.; López Alcolea, J.; García Castellanos, D.; Cano Alonso, R.; Fernández Alfonso, A.; Andreu Vázquez, C.; Thuissard Vasallo, I.J.; Martínez de Vega Fernández, V. Radiografía ósea en la urgencia. ¿Quién diagnostica mejor? ¿La inteligencia artificial o el residente? [Bone radiography in the emergency department. Who diagnoses better? Artificial intelligence or the resident?] In Proceedings of the Congreso Nacional de la SERAM, Barcelona, Spain, May 2024.
3. Díaz Moreno, A.; López Alcolea, J.; García Castellanos, D.; Sanabria, L.; Cano Alonso, R.; Fernández Alfonso, A.; Carrascoso Arranz, J.; Martínez de Vega, V. Diagnostic performance of an Artificial Intelligence software for the evaluation of Bone X-ray examinations referred from the Emergency Department. In Proceedings of the ECR 2024, Vienna, Austria, 29 March 2024, C-11952.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Duron, L.; Ducarouge, A.; Gillibert, A.; Lainé, J.; Allouche, C.; Cherel, N.; Zhang, Z.; Nitche, N.; Lacave, E.; Pourchot, A.; et al. Assessment of an AI Aid in Detection of Adult Appendicular Skeletal Fractures by Emergency Physicians and Radiologists: A Multicenter Cross-sectional Diagnostic Study. Radiology 2021, 300, 120–129.

2. Fernholm, R.; Pukk Härenstam, K.; Wachtler, C.; Nilsson, G.H.; Holzmann, M.J.; Carlsson, A.C. Diagnostic errors reported in primary healthcare and emergency departments: A retrospective and descriptive cohort study of 4830 reported cases of preventable harm in Sweden. Eur. J. Gen. Pract. 2019, 25, 128–135.

3. Guly, H.R. Diagnostic errors in an accident and emergency department. Emerg. Med. J. 2001, 18, 263–269.

4. Kuo, R.Y.L.; Harrison, C.; Curran, T.-A.; Jones, B.; Freethy, A.; Cussons, D.; Stewart, M.; Collins, G.S.; Furniss, D. Artificial Intelligence in Fracture Detection: A Systematic Review and Meta-Analysis. Radiology 2022, 304, 50–62.

5. Gore, J.C. Artificial intelligence in medical imaging. Magn. Reson. Imaging 2020, 68, A1–A4.

6. Van Leeuwen, K.G.; Schalekamp, S.; Rutten, M.J.C.M.; van Ginneken, B.; de Rooij, M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur. Radiol. 2021, 31, 3797–3804.

7. West, E.; Mutasa, S.; Zhu, Z.; Ha, R. Global trend in artificial intelligence-based publications in radiology from 2000 to 2018. Am. J. Roentgenol. 2019, 213, 1204–1206.

8. López Alcolea, J.; Fernández Alfonso, A.; Cano Alonso, R.; Álvarez Vázquez, A.; Díaz Moreno, A.; García Castellanos, D.; Greciano, L.S.; Hayoun, C.; Rodríguez, M.R.; Vázquez, C.A.; et al. Diagnostic Performance of Artificial Intelligence in Chest Radiographs Referred from the Emergency Department. Diagnostics 2024, 14, 2592.

9. Cellina, M.; Cè, M.; Irmici, G.; Ascenti, V.; Caloro, E.; Bianchi, L.; Pellegrino, G.; D’amico, N.; Papa, S.; Carrafiello, G. Artificial Intelligence in Emergency Radiology: Where Are We Going? Diagnostics 2022, 12, 3223.

10. Katzman, B.D.; van der Pol, C.B.; Soyer, P.; Patlas, M.N. Artificial intelligence in emergency radiology: A review of applications and possibilities. Diagn. Interv. Imaging 2023, 104, 6–10.

11. Debs, P.; Fayad, L.M. The promise and limitations of artificial intelligence in musculoskeletal imaging. Front. Radiol. 2023, 3, 1242902.

12. Hayashi, D.; Kompel, A.J.; Ventre, J.; Ducarouge, A.; Nguyen, T.; Regnard, N.-E.; Guermazi, A. Automated detection of acute appendicular skeletal fractures in pediatric patients using deep learning. Skelet. Radiol. 2022, 51, 2129–2139.

13. Zech, J.R.; Santomartino, S.M.; Yi, P.H. Artificial Intelligence (AI) for Fracture Diagnosis: An Overview of Current Products and Considerations for Clinical Adoption, From the AJR Special Series on AI Applications. Am. J. Roentgenol. 2022, 219, 869–879.

14. Regnard, N.-E.; Lanseur, B.; Ventre, J.; Ducarouge, A.; Clovis, L.; Lassalle, L.; Lacave, E.; Grandjean, A.; Lambert, A.; Dallaudière, B.; et al. Assessment of performances of a deep learning algorithm for the detection of limbs and pelvic fractures, dislocations, focal bone lesions, and elbow effusions on trauma X-rays. Eur. J. Radiol. 2022, 154, 110447.

15. Wong, C.R.; Zhu, A.; Baltzer, H.L. The Accuracy of Artificial Intelligence Models in Hand/Wrist Fracture and Dislocation Diagnosis. JBJS Rev. 2024, 12, e24.00106.

16. Zhao, Z.; Pi, Y.; Jiang, L.; Xiang, Y.; Wei, J.; Yang, P.; Zhang, W.; Zhong, X.; Zhou, K.; Li, Y.; et al. Deep neural network based artificial intelligence assisted diagnosis of bone scintigraphy for cancer bone metastasis. Sci. Rep. 2020, 10, 17046.

17. Huhtanen, J.T.; Nyman, M.; Doncenco, D.; Hamedian, M.; Kawalya, D.; Salminen, L.; Sequeiros, R.B.; Koskinen, S.K.; Pudas, T.K.; Kajander, S.; et al. Deep learning accurately classifies elbow joint effusion in adult and pediatric radiographs. Sci. Rep. 2022, 12, 11803.

18. England, J.R.; Gross, J.S.; White, E.A.; Patel, D.B.; England, J.T.; Cheng, P.M. Detection of Traumatic Pediatric Elbow Joint Effusion Using a Deep Convolutional Neural Network. Am. J. Roentgenol. 2018, 211, 1361–1368.

19. Wang, X.; Zhou, B.; Gong, P.; Zhang, T.; Mo, Y.; Tang, J.; Shi, X.; Wang, J.; Yuan, X.; Bai, F.; et al. Artificial Intelligence–Assisted Bone Age Assessment to Improve the Accuracy and Consistency of Physicians With Different Levels of Experience. Front. Pediatr. 2022, 10, 818061.

20. Liang, Y.; Chen, X.; Zheng, R.; Cheng, X.; Su, Z.; Wang, X.; Du, H.; Zhu, M.; Li, G.; Zhong, Y.; et al. Validation of an AI-Powered Automated X-ray Bone Age Analyzer in Chinese Children and Adolescents: A Comparison with the Tanner–Whitehouse 3 Method. Adv. Ther. 2024, 41, 3664–3677.

21. Yang, J.; Wang, J.; Meng, M.Q.-H. A Landmark-aware Network for Automated Cobb Angle Estimation Using X-ray Images. arXiv 2024, arXiv:2405.19645.

22. Li, H.; Qian, C.; Yan, W.; Fu, D.; Zheng, Y.; Zhang, Z.; Meng, J.; Wang, D. Use of Artificial Intelligence in Cobb Angle Measurement for Scoliosis: Retrospective Reliability and Accuracy Study of a Mobile App. J. Med. Internet Res. 2024, 26, e50631.

23. Joseph, G.B.; McCulloch, C.E.; Sohn, J.H.; Pedoia, V.; Majumdar, S.; Link, T.M. AI MSK clinical applications: Cartilage and osteoarthritis. Skelet. Radiol. 2022, 51, 331–343.

24. Lee, J.-S.; Adhikari, S.; Liu, L.; Jeong, H.-G.; Kim, H.; Yoon, S.-J. Osteoporosis detection in panoramic radiographs using a deep convolutional neural network-based computer-assisted diagnosis system: A preliminary study. Dentomaxillofacial Radiol. 2019, 48, 20170344.

25. Meetschen, M.; Salhöfer, L.; Beck, N.; Kroll, L.; Ziegenfuß, C.D.; Schaarschmidt, B.M.; Forsting, M.; Mizan, S.; Umutlu, L.; Hosch, R. AI-Assisted X-ray Fracture Detection in Residency Training: Evaluation in Pediatric and Adult Trauma Patients. Diagnostics 2024, 14, 596.

26. Guermazi, A.; Tannoury, C.; Kompel, A.J.; Murakami, A.M.; Ducarouge, A.; Gillibert, A.; Li, X.; Tournier, A.; Lahoud, Y.; Jarraya, M.; et al. Improving Radiographic Fracture Recognition Performance and Efficiency Using Artificial Intelligence. Radiology 2022, 302, 627–636.

27. Oppenheimer, J.; Lüken, S.; Hamm, B.; Niehues, S.M. A Prospective Approach to Integration of AI Fracture Detection Software in Radiographs into Clinical Workflow. Life 2023, 13, 223.

28. Wood, G.; Knapp, K.M.; Rock, B.; Cousens, C.; Roobottom, C.; Wilson, M.R. Visual expertise in detecting and diagnosing skeletal fractures. Skelet. Radiol. 2013, 42, 165–172.

29. Franco, P.N.; Maino, C.; Mariani, I.; Gandola, D.G.; Sala, D.; Bologna, M.; Franzesi, C.T.; Corso, R.; Ippolito, D. Diagnostic performance of an AI algorithm for the detection of appendicular bone fractures in pediatric patients. Eur. J. Radiol. 2024, 178, 111637.

30. Xie, Y.; Li, X.; Chen, F.; Wen, R.; Jing, Y.; Liu, C.; Wang, J. Artificial intelligence diagnostic model for multi-site fracture X-ray images of extremities based on deep convolutional neural networks. Quant. Imaging Med. Surg. 2024, 14, 1930–1943.

31. Dupuis, M.; Delbos, L.; Rouquette, A.; Adamsbaum, C.; Veil, R. External validation of an artificial intelligence solution for the detection of elbow fractures and joint effusions in children. Diagn. Interv. Imaging 2024, 105, 104–109.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).