Using Mental Shadowing Tasks to Improve the Sound-Evoked Potential of EEG in the Design of an Auditory Brain–Computer Interface

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

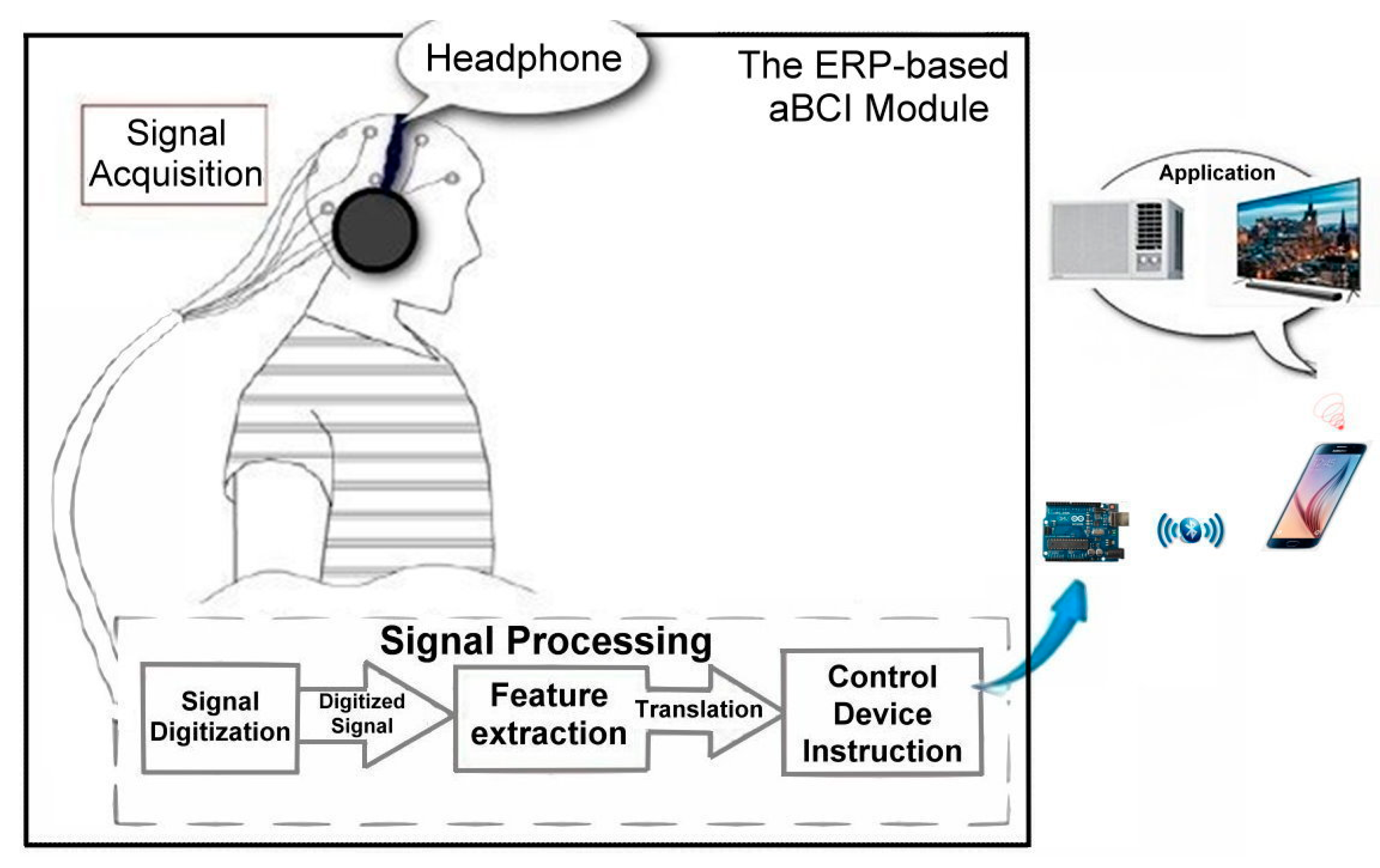

2.2. The aBCI System

2.2.1. The Prototype of the aBCI System Module

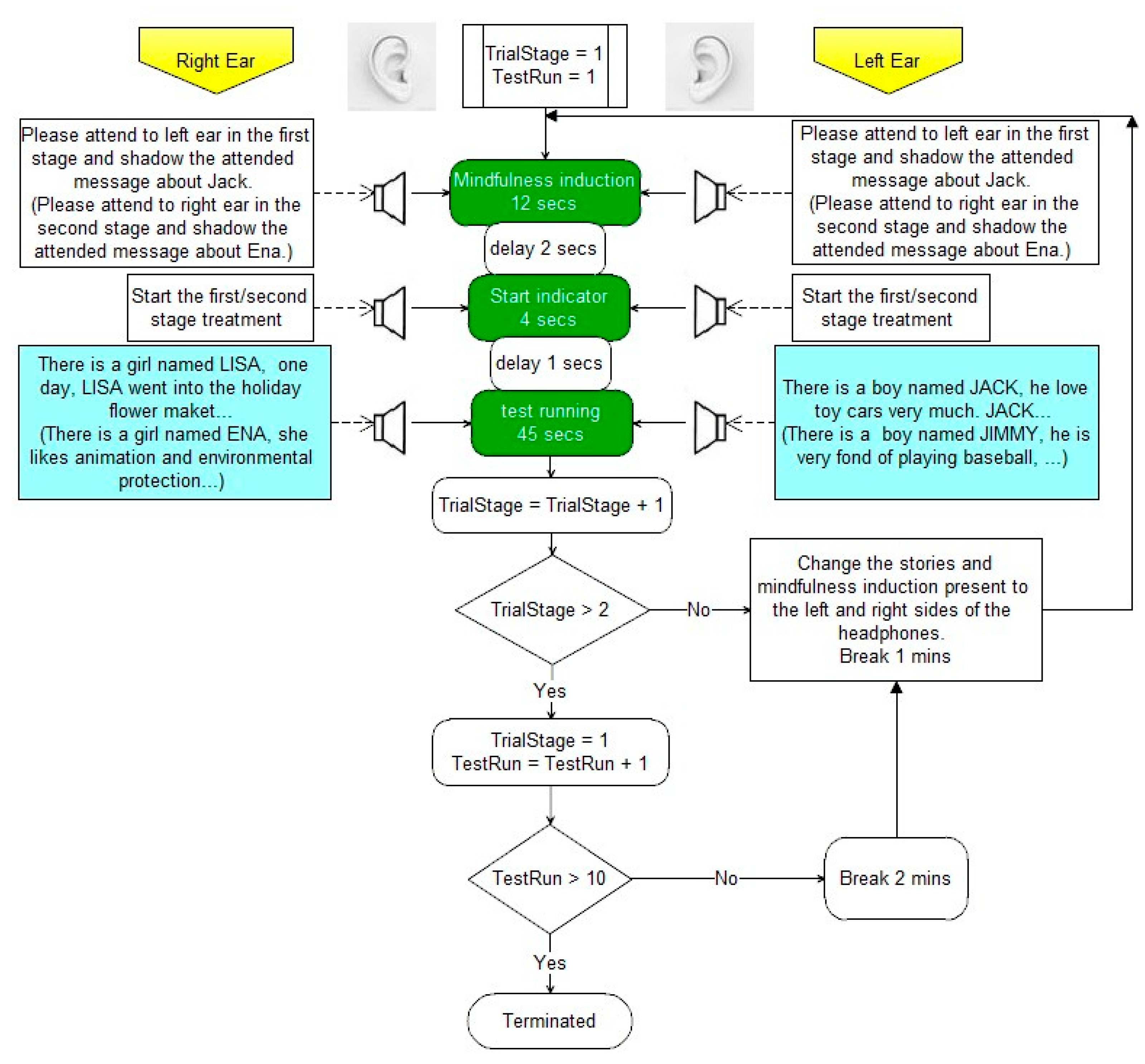

2.2.2. The Stimulation Trials Using Audio Story

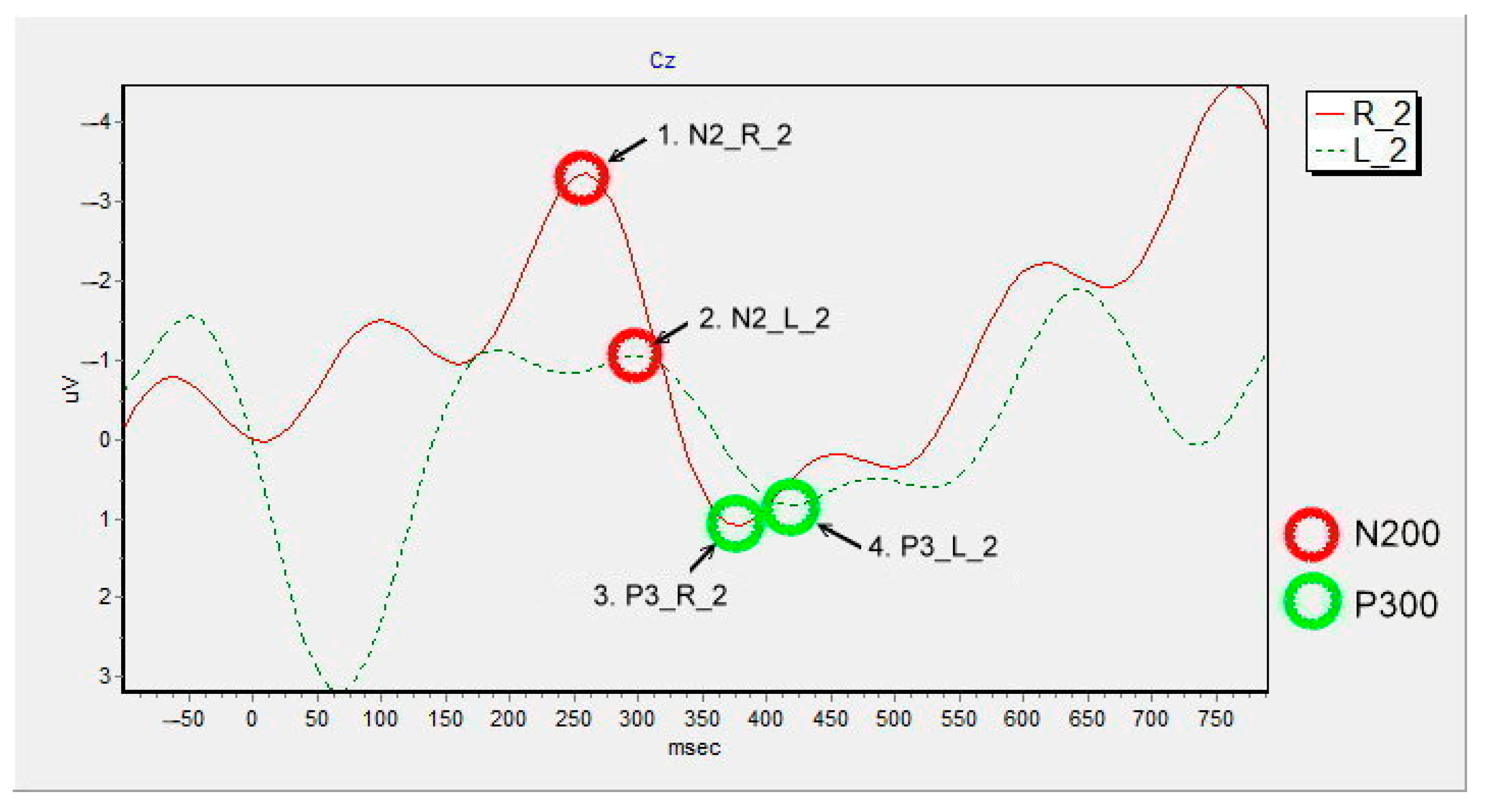

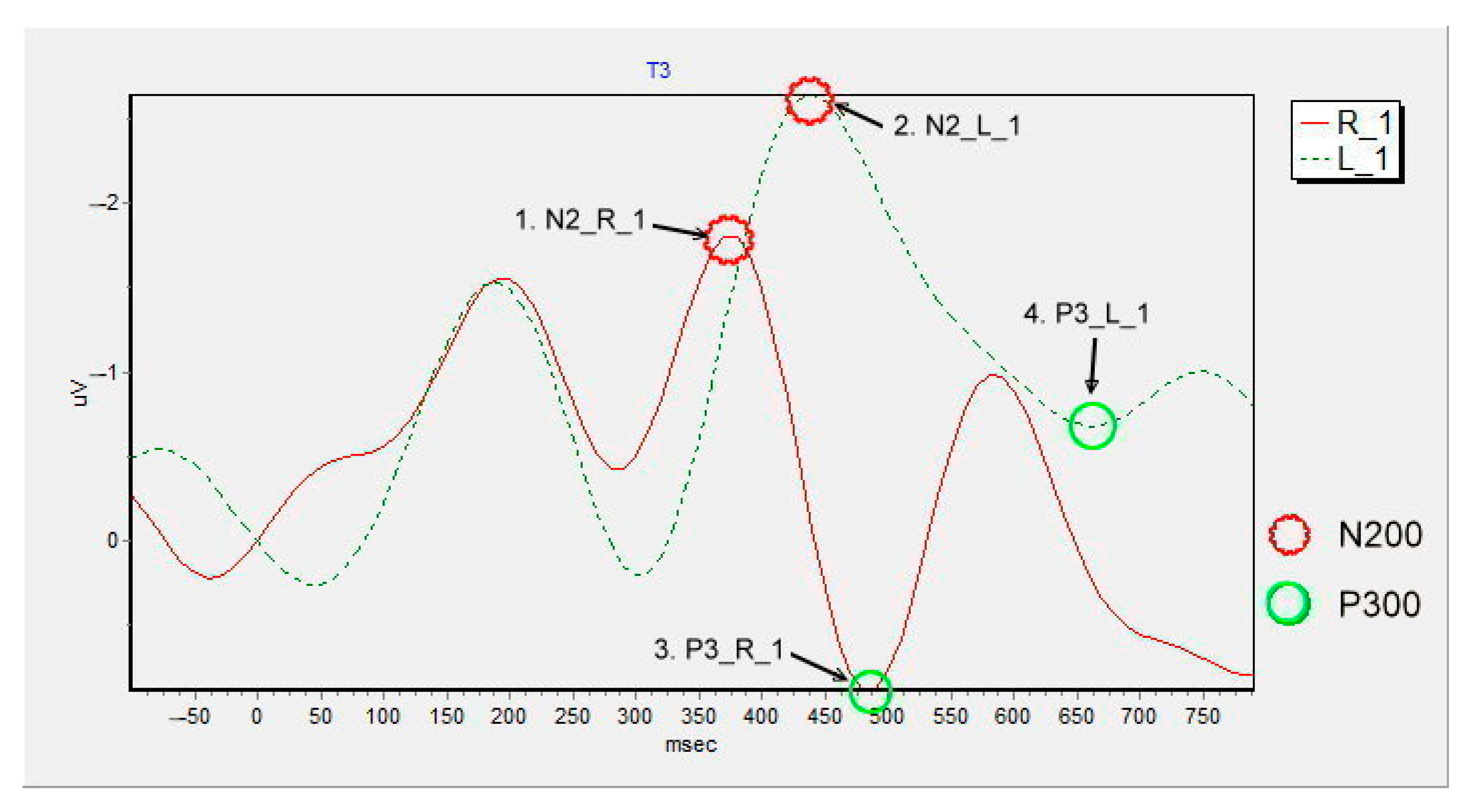

2.2.3. ERP Trial Features

2.3. Experimental Program

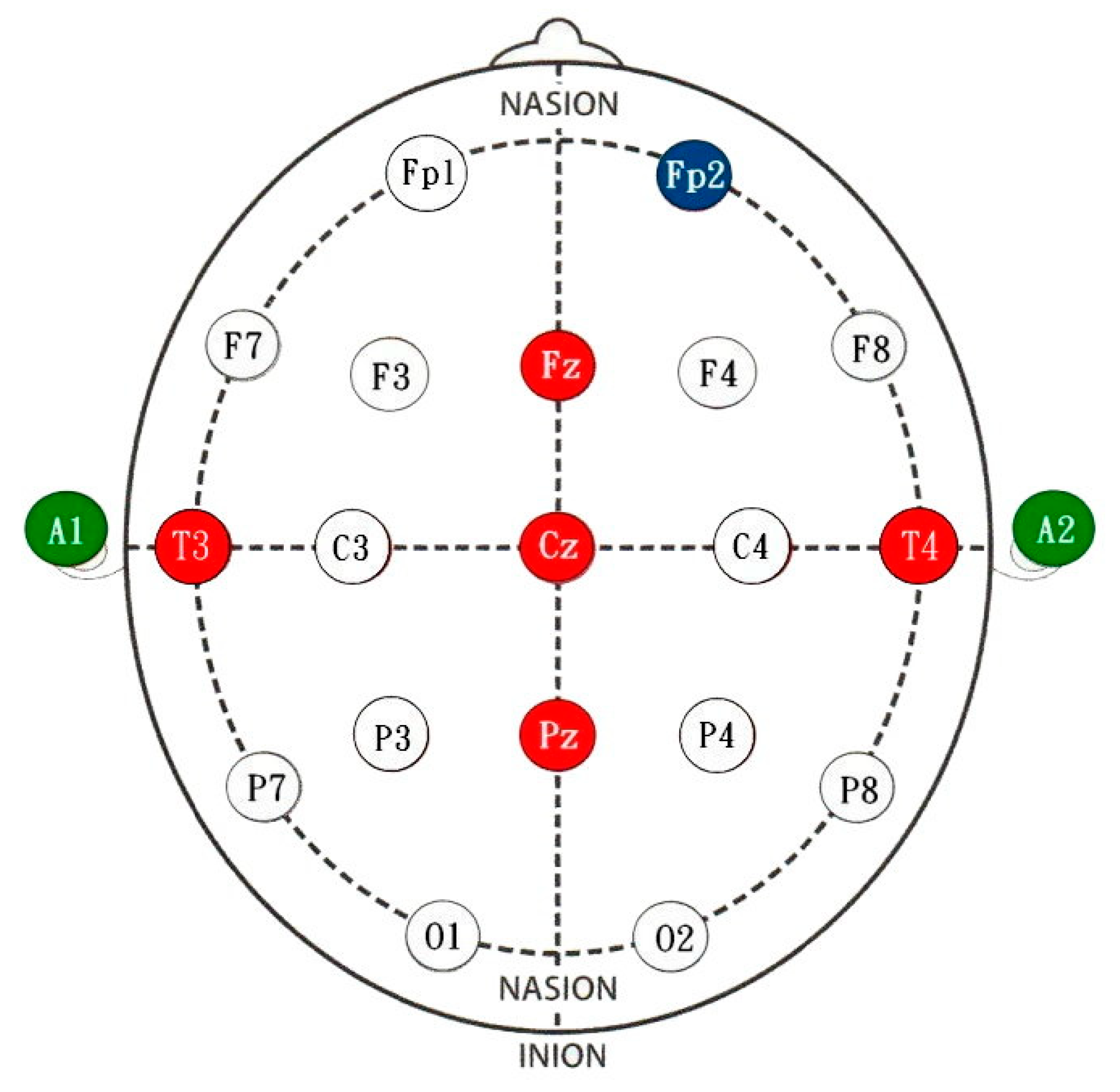

2.3.1. Experimental Equipment

2.3.2. Data Collection

2.3.3. Data Processing

- Stimuli presentation: The system plays two different audio stories simultaneously, one through the left headphone channel and one through the right, as the stimuli of the aBCI experiment.

- ERP acquisition: One keyword appears seven times in each audio story file. The system ignores the first occurrence of the keyword and records the subject’s brainwaves at the remaining six keyword onsets. Six ERP segments, each spanning −100 to 800 ms relative to a keyword onset, are thus extracted one by one. The aBCI system then sums and averages the six ERP segments for each option to obtain the ERP features.

- ERP feature interpretation: After ERP acquisition, the aBCI system locates the N2 and P3 potentials and calculates the N2P3 potential. It then compares the component potentials across the options to determine which audio story the user attended to during the trial.
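The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the sampling rate passed as `fs`, and the N2 and P3 search windows (`n2_win`, `p3_win`) are assumptions made for the example. Only the −100 to 800 ms epoch, the skipped first keyword, the six-segment average, and the relation N2P3 = P3 − N2 (which matches the values in the results tables) come from the text.

```python
from statistics import fmean

def extract_epoch(signal, onset_idx, fs, t_min=-0.1, t_max=0.8):
    """Cut one segment from t_min to t_max seconds around a keyword onset."""
    start = onset_idx + round(t_min * fs)
    stop = onset_idx + round(t_max * fs)
    if start < 0 or stop > len(signal):
        raise ValueError("epoch extends beyond the recording")
    return signal[start:stop]

def average_epochs(epochs):
    """Point-by-point average of equally long segments."""
    return [fmean(samples) for samples in zip(*epochs)]

def erp_for_option(signal, keyword_onsets, fs):
    """Skip the first keyword occurrence, then average the remaining epochs."""
    epochs = [extract_epoch(signal, o, fs) for o in keyword_onsets[1:]]
    return average_epochs(epochs)

def _window(erp, fs, lo_s, hi_s, t_min=-0.1):
    """Samples of the averaged ERP between lo_s and hi_s seconds after onset."""
    return erp[round((lo_s - t_min) * fs):round((hi_s - t_min) * fs)]

def n2p3(erp, fs, n2_win=(0.18, 0.32), p3_win=(0.25, 0.50)):
    """Return (N2P3, P3, N2). The search windows are hypothetical values."""
    n2 = min(_window(erp, fs, *n2_win))   # most negative deflection
    p3 = max(_window(erp, fs, *p3_win))   # most positive deflection
    return p3 - n2, p3, n2

def pick_attended(erps_by_option, fs):
    """Choose the option whose averaged ERP has the largest N2P3 amplitude."""
    return max(erps_by_option, key=lambda k: n2p3(erps_by_option[k], fs)[0])
```

With one averaged ERP per headphone channel, for example `{"R": erp_right, "L": erp_left}`, `pick_attended` returns the label of the story with the larger N2P3 amplitude. Averaging before peak picking is what lets the time-locked response stand out from background EEG that is not synchronized with the keyword.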

2.4. Experimental Procedure

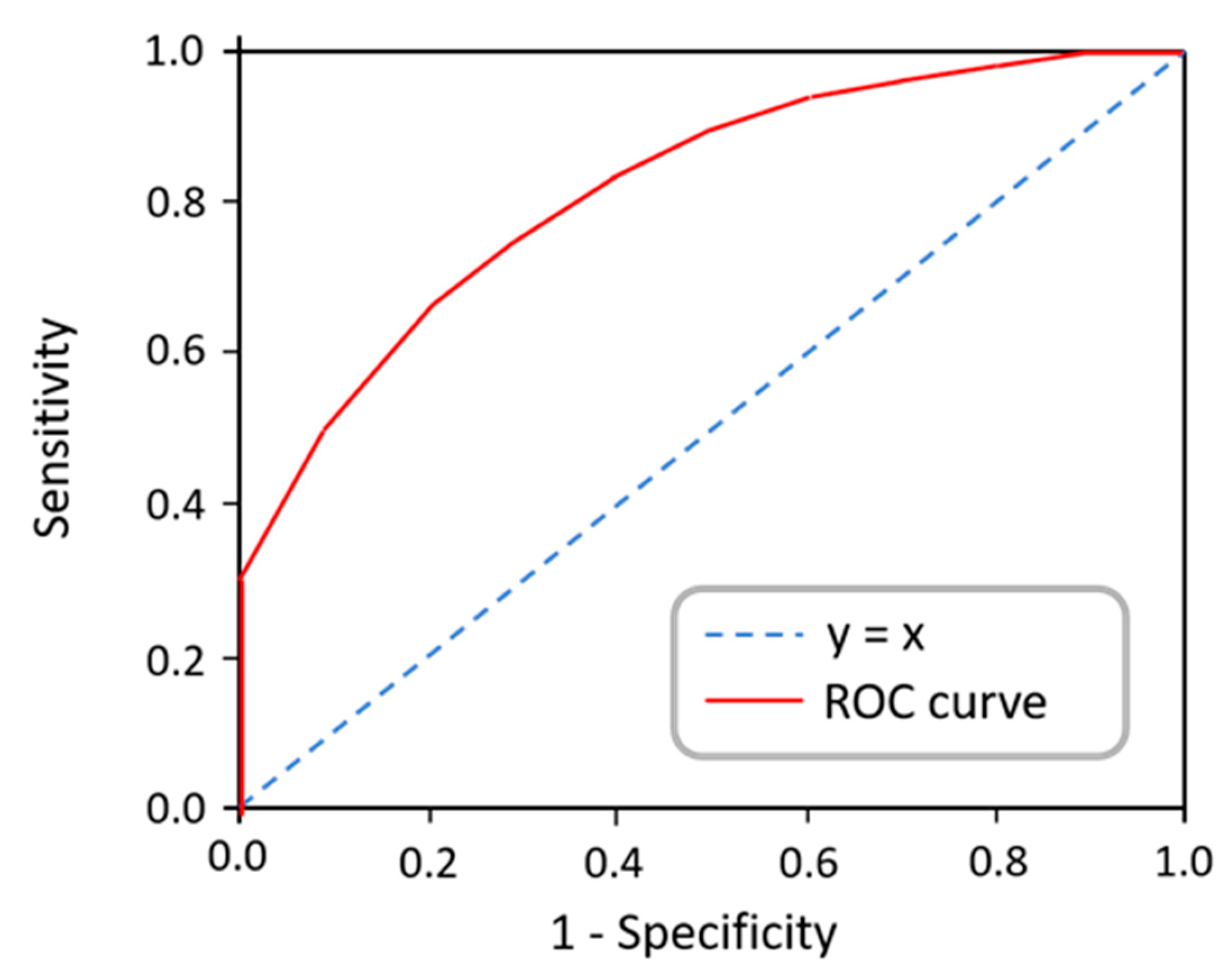

2.5. System Evaluation

2.5.1. Information Transfer Rate

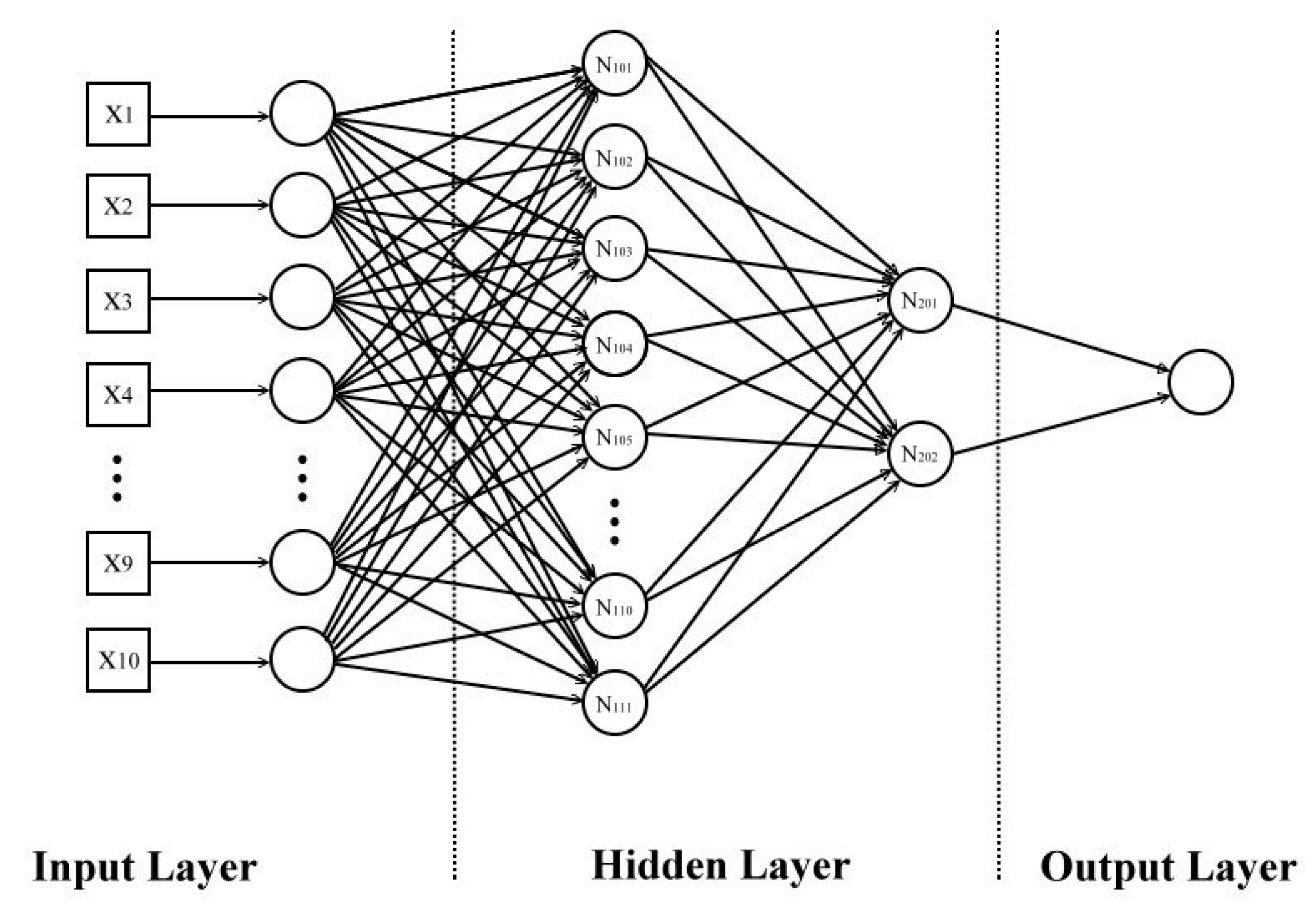

2.5.2. Neural Network

3. Results

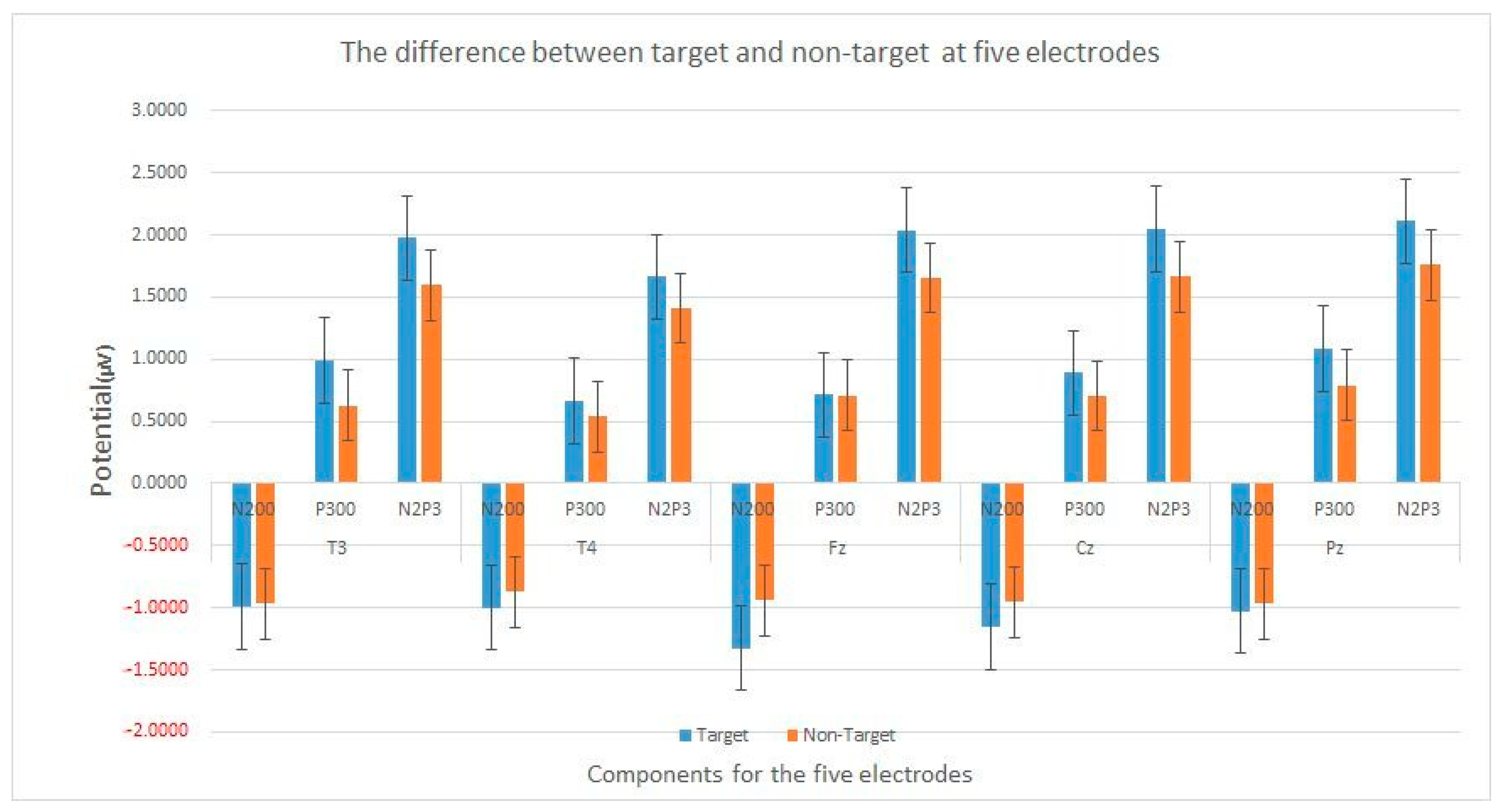

3.1. Discriminating the Sound-Evoked Potential in EEG

3.2. Accuracy Analyses of Experimental Results

3.2.1. Accuracy Analyses for All Output Data

3.2.2. Accuracy Analyses via Neural Network

3.2.3. Analysis of the Average Accuracies of the ERP Components

3.2.4. Effect of Gender Voice Differences on Accuracy

3.2.5. Effect of the Different Gender of Subjects on Accuracy

3.3. Bit-Rate Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Van Es, M.A.; Hardiman, O.; Chio, A.; Al-Chalabi, A.; Pasterkamp, R.J.; Veldink, J.H.; van den Berg, L.H. Amyotrophic Lateral Sclerosis. Lancet 2017, 390, 2084–2098. [Google Scholar] [CrossRef]

- Kiernan, M.C.; Vucic, S.; Cheah, B.C.; Turner, M.R.; Eisen, A.; Hardiman, O.; Burrell, J.R.; Zoing, M.C. Amyotrophic Lateral Sclerosis. Lancet 2011, 377, 942–955. [Google Scholar] [CrossRef] [PubMed]

- Turner, M.R.; Agosta, F.; Bede, P.; Govind, V.; Lulé, D.; Verstraete, E. Neuroimaging in Amyotrophic Lateral Sclerosis. Biomark. Med. 2012, 6, 319–337. [Google Scholar] [CrossRef] [PubMed]

- Kiernan, M.C.; Vucic, S.; Talbot, K.; McDermott, C.J.; Hardiman, O.; Shefner, J.M.; Al-Chalabi, A.; Huynh, W.; Cudkowicz, M.; Talman, P.; et al. Improving Clinical Trial Outcomes in Amyotrophic Lateral Sclerosis. Nat. Rev. Neurol. 2021, 17, 104–118. [Google Scholar] [CrossRef] [PubMed]

- Vahsen, B.F.; Gray, E.; Thompson, A.G.; Ansorge, O.; Anthony, D.C.; Cowley, S.A.; Talbot, K.; Turner, M.R. Non-Neuronal Cells in Amyotrophic Lateral Sclerosis—From Pathogenesis to Biomarkers. Nat. Rev. Neurol. 2021, 17, 333–348. [Google Scholar] [CrossRef]

- Huang, T.W. Design of Chinese Spelling System Based on ERPs. Master’s Thesis, National University of Tainan, Tainan, Taiwan, 2011. [Google Scholar]

- Sun, K.T.; Huang, T.W.; Chen, M.C. Design of Chinese Spelling System Based on ERP. In Proceedings of the 11th IEEE International Conference on Bioinformatics and Bioengineering, BIBE 2011, Taichung, Taiwan, 24–26 October 2011; pp. 310–313. [Google Scholar]

- Liu, Y.-H.; Wang, S.-H.; Hu, M.-R. A Self-Paced P300 Healthcare Brain-Computer Interface System with SSVEP-Based Switching Control and Kernel FDA + SVM-Based Detector. Appl. Sci. 2016, 6, 142. [Google Scholar] [CrossRef]

- Hong, B.; Guo, F.; Liu, T.; Gao, X.; Gao, S. N200-Speller Using Motion-Onset Visual Response. Clin. Neurophysiol. 2009, 120, 1658–1666. [Google Scholar] [CrossRef] [PubMed]

- Yin, E.; Zhou, Z.; Jiang, J.; Chen, F.; Liu, Y.; Hu, D. A Speedy Hybrid BCI Spelling Approach Combining P300 and SSVEP. IEEE Trans. Biomed. Eng. 2014, 61, 473–483. [Google Scholar] [CrossRef]

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain-Computer Interfaces for Communication and Control. Clin. Neurophysiol. 2002, 113, 767–791. [Google Scholar] [CrossRef]

- Martínez-Cagigal, V.; Santamaría-Vázquez, E.; Gomez-Pilar, J.; Hornero, R. Towards an Accessible Use of Smartphone-Based Social Networks through Brain-Computer Interfaces. Expert Syst. Appl. 2019, 120, 155–166. [Google Scholar] [CrossRef]

- Sun, K.T.; Hsieh, K.L.; Syu, S.R. Towards an Accessible Use of a Brain-Computer Interfaces-Based Home Care System through a Smartphone. Comput. Intell. Neurosci. 2020, 2020, 16–18. [Google Scholar] [CrossRef] [PubMed]

- Hsieh, K.L.; Sun, K.T. Auditory Brain Computer Interface Design. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 11–14. [Google Scholar]

- Matsumoto, Y.; Makino, S.; Mori, K.; Rutkowski, T.M. Classifying P300 Responses to Vowel Stimuli for Auditory Brain-Computer Interface. In Proceedings of the 2013 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA 2013, Kaohsiung, Taiwan, 29 October–1 November 2013; pp. 1–5. [Google Scholar]

- Borirakarawin, M.; Punsawad, Y. Event-Related Potential-Based Brain-Computer Interface Using the Thai Vowels’ and Numerals’ Auditory Stimulus Pattern. Sensors 2022, 22, 5864. [Google Scholar] [CrossRef]

- Zeyl, T.; Yin, E.; Keightley, M.; Chau, T. Improving Bit Rate in an Auditory BCI: Exploiting Error-Related Potentials. Brain-Comput. Interfaces 2016, 3, 75–87. [Google Scholar] [CrossRef]

- Aydin, E.A.; Bay, O.F.; Guler, I. P300-Based Asynchronous Brain Computer Interface for Environmental Control System. IEEE J. Biomed. Health Inform. 2018, 22, 653–663. [Google Scholar] [CrossRef] [PubMed]

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A Comprehensive Review of EEG-Based Brain-Computer Interface Paradigms. J. Neural Eng. 2019, 16, 011001. [Google Scholar] [CrossRef] [PubMed]

- Islam, M.S.; Hussain, I.; Rahman, M.M.; Park, S.J.; Hossain, M.A. Explainable Artificial Intelligence Model for Stroke Prediction Using EEG Signal. Sensors 2022, 22, 9859. [Google Scholar] [CrossRef]

- Domingos, C.; da Silva Caldeira, H.; Miranda, M.; Melicio, F.; Rosa, A.C.; Pereira, J.G. The Influence of Noise in the Neurofeedback Training Sessions in Student Athletes. Int. J. Environ. Res. Public Health 2021, 18, 13223. [Google Scholar] [CrossRef]

- Cheng, P.W.; Tian, Y.J.; Kuo, T.H.; Sun, K.T. The Relationship between Brain Reaction and English Reading Tests for Non-Native English Speakers. Brain Res. 2016, 1642, 384–388. [Google Scholar] [CrossRef]

- Sutton, S.; Braren, M.; Zubin, J.; John, E.R. Evoked-Potential Correlates of Stimulus Uncertainty. Science 1965, 150, 1187–1188. [Google Scholar] [CrossRef]

- Kappenman, E.S.; Farrens, J.L.; Zhang, W.; Stewart, A.X.; Luck, S.J. ERP CORE: An Open Resource for Human Event-Related Potential Research. Neuroimage 2021, 225, 117465. [Google Scholar] [CrossRef]

- Jamil, N.; Belkacem, A.N.; Ouhbi, S.; Lakas, A. Noninvasive Electroencephalography Equipment for Assistive, Adaptive, and Rehabilitative Brain–Computer Interfaces: A Systematic Literature Review. Sensors 2021, 21, 4754. [Google Scholar] [CrossRef] [PubMed]

- Gamble, M.L.; Luck, S.J. N2ac: An ERP Component Associated with the Focusing of Attention within an Auditory Scene. Psychophysiology 2011, 48, 1057–1068. [Google Scholar] [CrossRef] [PubMed]

- Regan, D. Human Brain Electrophysiology: Evoked Potentials and Evoked Magnetic Fields in Science and Medicine; Elsevier: New York, NY, USA, 1989. [Google Scholar]

- Lakey, C.E.; Berry, D.R.; Sellers, E.W. Manipulating Attention via Mindfulness Induction Improves P300-Based Brain-Computer Interface Performance. J. Neural Eng. 2011, 8, 025019. [Google Scholar] [CrossRef] [PubMed]

- Picton, T.W. The P300 Wave of the Human Event-Related Potential. J. Clin. Neurophysiol. 1992, 9, 456–479. [Google Scholar] [CrossRef] [PubMed]

- Panicker, R.C.; Puthusserypady, S.; Sun, Y. Adaptation in P300 Brain-Computer Interfaces: A Two-Classifier Cotraining Approach. IEEE Trans. Biomed. Eng. 2010, 57, 2927–2935. [Google Scholar] [CrossRef]

- Marassi, A.; Budai, R.; Chittaro, L. A P300 Auditory Brain-Computer Interface Based on Mental Repetition. Biomed. Phys. Eng. Express 2018, 4, 035040. [Google Scholar] [CrossRef]

- Hoffmann, U.; Vesin, J.M.; Ebrahimi, T.; Diserens, K. An Efficient P300-Based Brain-Computer Interface for Disabled Subjects. J. Neurosci. Methods 2008, 167, 115–125. [Google Scholar] [CrossRef]

- Patel, S.H.; Azzam, P.N. Characterization of N200 and P300: Selected Studies of the Event-Related Potential. Int. J. Med. Sci. 2005, 2, 147–154. [Google Scholar] [CrossRef]

- Donchin, E.; Spencer, K.M.; Wijesinghe, R. The Mental Prosthesis: Assessing the Speed of a P300-Based Brain-Computer Interface. IEEE Trans. Rehabil. Eng. 2000, 8, 174–179. [Google Scholar] [CrossRef]

- Halgren, E.; Marinkovic, K.; Chauvel, P. Generators of the Late Cognitive Potentials in Auditory and Visual Oddball Tasks. Electroencephalogr. Clin. Neurophysiol. 1998, 106, 156–164. [Google Scholar] [CrossRef]

- Zhang, R.; Wang, Q.; Li, K.; He, S.; Qin, S.; Feng, Z.; Chen, Y.; Song, P.; Yang, T.; Zhang, Y.; et al. A BCI-Based Environmental Control System for Patients with Severe Spinal Cord Injuries. IEEE Trans. Biomed. Eng. 2017, 64, 1959–1971. [Google Scholar] [CrossRef] [PubMed]

- Cherry, E.C. Some Experiments on the Recognition of Speech, with One and with Two Ears. J. Acoust. Soc. Am. 1953, 25, 975–979. [Google Scholar] [CrossRef]

- Matsumoto, Y.; Nishikawa, N.; Yamada, T.; Makino, S.; Rutkowski, T.M. Auditory Steady-State Response Stimuli Based BCI Application-the Optimization of the Stimuli Types and Lengths. In Proceedings of the Signal and Information Processing Association Annual Summit and Conference (APSIPA), Kaohsiung, Taiwan, 29 October–1 November 2013; pp. 285–308. [Google Scholar]

- Höhne, J.; Tangermann, M. Towards User-Friendly Spelling with an Auditory Brain-Computer Interface: The CharStreamer Paradigm. PLoS ONE 2014, 9, e102630. [Google Scholar] [CrossRef]

- Sosulski, J.; Hübner, D.; Klein, A.; Tangermann, M. Online Optimization of Stimulation Speed in an Auditory Brain-Computer Interface under Time Constraints. arXiv 2021, arXiv:2109.06011. [Google Scholar]

- Kaongoen, N.; Jo, S. A Novel Hybrid Auditory BCI Paradigm Combining ASSR and P300. J. Neurosci. Methods 2017, 279, 44–51. [Google Scholar] [CrossRef] [PubMed]

- Lu, Z.; Li, Q.; Gao, N.; Yang, J.; Bai, O. Happy Emotion Cognition of Bimodal Audiovisual Stimuli Optimizes the Performance of the P300 Speller. Brain Behav. 2019, 9, e01479. [Google Scholar] [CrossRef]

- Oralhan, Z. A New Paradigm for Region-Based P300 Speller in Brain Computer Interface. IEEE Access 2019, 7, 106618–106627. [Google Scholar] [CrossRef]

- Lu, Z.; Li, Q.; Gao, N.; Yang, J.; Bai, O. A Novel Audiovisual P300-Speller Paradigm Based on Cross-Modal Spatial and Semantic Congruence. Front. Neurosci. 2019, 13, 1040. [Google Scholar] [CrossRef]

- Heo, J.; Baek, H.J.; Hong, S.; Chang, M.H.; Lee, J.S.; Park, K.S. Music and Natural Sounds in an Auditory Steady-State Response Based Brain–Computer Interface to Increase User Acceptance. Comput. Biol. Med. 2017, 84, 45–52. [Google Scholar] [CrossRef]

- Nishikawa, N.; Makino, S.; Rutkowski, T.M. Spatial Auditory BCI Paradigm Based on Real and Virtual Sound Image Generation. In Proceedings of the 2013 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, APSIPA 2013, Kaohsiung, Taiwan, 29 October–1 November 2013. [Google Scholar]

- Chang, M.; Mori, K.; Makino, S. Spatial Auditory Two-Step Input Japanese Syllabary Brain-Computer Interface Speller. Procedia Technol. 2014, 18, 25–31. [Google Scholar] [CrossRef]

- Baykara, E.; Ruf, C.A.; Fioravanti, C.; Käthner, I.; Simon, N.; Kleih, S.C.; Kübler, A.; Halder, S. Effects of Training and Motivation on Auditory P300 Brain-Computer Interface Performance. Clin. Neurophysiol. 2016, 127, 379–387. [Google Scholar] [CrossRef] [PubMed]

- Moller, A.R. Hearing: Anatomy, Physiology, and Disorders of the Auditory System, 2nd ed.; Academic Press: San Diego, CA, USA, 2006; ISBN 978-0-12-372519-6. [Google Scholar]

- Lobato, B.Y.M.; Ramirez, M.R.; Rojas, E.M.; Moreno, H.B.R.; Soto, M.D.C.S.; Nuñez, S.O.V. Controlling a Computer Using BCI, by Blinking or Concentration. In Proceedings of the 2018 International Conference on Algorithms, Computing and Artificial Intelligence; Association for Computing Machinery, New York, NY, USA, 21–23 December 2018. [Google Scholar]

- Da Silva-Sauer, L.; Valero-Aguayo, L.; de la Torre-Luque, A.; Ron-Angevin, R.; Varona-Moya, S. Concentration on Performance with P300-Based BCI Systems: A Matter of Interface Features. Appl. Ergon. 2016, 52, 325–332. [Google Scholar] [CrossRef] [PubMed]

- Da Silva Souto, C.; Lüddemann, H.; Lipski, S.; Dietz, M.; Kollmeier, B. Influence of Attention on Speech-Rhythm Evoked Potentials: First Steps towards an Auditory Brain-Computer Interface Driven by Speech. Biomed. Phys. Eng. Express 2016, 2, 325–332. [Google Scholar] [CrossRef]

- McDermott, J.H. The Cocktail Party Problem. Curr. Biol. 2009, 19, R1024–R1027. [Google Scholar] [CrossRef] [PubMed]

- Revlin, R. Human Cognition: Theory and Practice; Worth Publishers: New York, NY, USA, 2007; ISBN 9780716756675. [Google Scholar]

- Peschke, C.; Ziegler, W.; Kappes, J.; Baumgaertner, A. Auditory-Motor Integration during Fast Repetition: The Neuronal Correlates of Shadowing. Neuroimage 2009, 47, 392–402. [Google Scholar] [CrossRef] [PubMed]

- DeVos, M.; Gandras, K.; Debener, S. Towards a Truly Mobile Auditory Brain-Computer Interface: Exploring the P300 to Take Away. Int. J. Psychophysiol. 2014, 91, 46–53. [Google Scholar] [CrossRef] [PubMed]

- Jurcak, V.; Tsuzuki, D.; Dan, I. 10/20, 10/10, and 10/5 Systems Revisited: Their Validity as Relative Head-Surface-Based Positioning Systems. Neuroimage 2007, 34, 1600–1611. [Google Scholar] [CrossRef]

- Wagner, A.; Ille, S.; Liesenhoff, C.; Aftahy, K.; Meyer, B.; Krieg, S.M. Improved Potential Quality of Intraoperative Transcranial Motor-Evoked Potentials by Navigated Electrode Placement Compared to the Conventional Ten-Twenty System. Neurosurg. Rev. 2022, 45, 585–593. [Google Scholar] [CrossRef]

- Ng, C.R.; Fiedler, P.; Kuhlmann, L.; Liley, D.; Vasconcelos, B.; Fonseca, C.; Tamburro, G.; Comani, S.; Lui, T.K.-Y.; Tse, C.-Y.; et al. Multi-Center Evaluation of Gel-Based and Dry Multipin EEG Caps. Sensors 2022, 22, 8079. [Google Scholar] [CrossRef]

- Wolpaw, J.R.; Birbaumer, N.; Heetderks, W.J.; McFarland, D.J.; Peckham, P.H.; Schalk, G.; Donchin, E.; Quatrano, L.A.; Robinson, C.J.; Vaughan, T.M. Brain-Computer Interface Technology: A Review of the First International Meeting. IEEE Trans. Rehabil. Eng. 2000, 8, 164–173. [Google Scholar] [CrossRef]

- Abiodun, O.I.; Jantan, A.; Omolara, A.E.; Dada, K.V.; Mohamed, N.A.; Arshad, H. State-of-the-Art in Artificial Neural Network Applications: A Survey. Heliyon 2018, 4, e00938. [Google Scholar] [CrossRef] [PubMed]

- An, X.; Höhne, J.; Ming, D.; Blankertz, B. Exploring Combinations of Auditory and Visual Stimuli for Gaze-Independent Brain-Computer Interfaces. PLoS ONE 2014, 9, e111070. [Google Scholar] [CrossRef] [PubMed]

- Ahsan, M.M.; Luna, S.A.; Siddique, Z. Machine-Learning-Based Disease Diagnosis: A Comprehensive Review. Healthcare 2022, 10, 541. [Google Scholar] [CrossRef]

- Dornhege, G.; Blankertz, B.; Curio, G.; Müller, K.-R. Increase Information Transfer Rates in BCI by CSP Extension to Multi-Class. In Proceedings of the NIPS 2003, Vancouver, BC, Canada, 8–13 December 2003. [Google Scholar]

- Chang, M.; Nishikawa, N.; Struzik, Z.R.; Mori, K.; Makino, S.; Mandic, D.; Rutkowski, T.M. Comparison of P300 Responses in Auditory, Visual and Audiovisual Spatial Speller BCI Paradigms. arXiv 2013, arXiv:1301.6360. [Google Scholar] [CrossRef]

| Options | N2P3 | P300 | N200 | Target (Specified) | Result (On Line) | Result (Off Line) |
|---|---|---|---|---|---|---|
| R | 4.4627 * | 1.0947 * | −3.3680 * | ✓ | ✓ | N2P3, P300, N200 |
| L | 1.0026 | −0.1217 | −1.1242 | | | |

Unit: %

| Subjects | T3 | T4 | Fz | Cz | Pz | Average |
|---|---|---|---|---|---|---|
| N01 | 50.00 | 50.00 | 70.00 * | 60.00 | 45.00 | 55.00 |
| N02 | 60.00 | 65.00 | 75.00 | 80.00 * | 70.00 | 70.00 |
| N03 | 55.00 | 45.00 | 50.00 | 60.00 * | 50.00 | 52.00 |
| N04 | 55.00 | 65.00 | 85.00 * | 75.00 | 60.00 | 68.00 |
| N05 | 40.00 | 65.00 * | 60.00 | 60.00 | 35.00 | 52.00 |
| N06 | 55.00 | 40.00 | 60.00 | 55.00 | 65.00 * | 55.00 |
| N07 | 60.00 | 65.00 * | 60.00 | 60.00 | 50.00 | 59.00 |
| N08 | 60.00 | 65.00 * | 60.00 | 50.00 | 55.00 | 58.00 |
| N09 | 55.00 * | 50.00 | 55.00 * | 55.00 * | 55.00 * | 54.00 |
| N10 | 40.00 | 45.00 | 70.00 * | 70.00 * | 65.00 | 58.00 |
| N11 | 50.00 * | 40.00 | 50.00 * | 50.00 * | 50.00 * | 48.00 |
| N12 | 50.00 | 35.00 | 65.00 * | 45.00 | 50.00 | 49.00 |
| N13 | 70.00 | 70.00 | 70.00 | 75.00 * | 45.00 | 66.00 |
| N14 | 45.00 | 80.00 * | 50.00 | 60.00 | 65.00 | 60.00 |
| N15 | 45.00 | 70.00 * | 65.00 | 65.00 | 50.00 | 59.00 |
| N16 | 50.00 | 65.00 * | 45.00 | 40.00 | 40.00 | 48.00 |
| N17 | 45.00 | 80.00 * | 70.00 | 70.00 | 70.00 | 67.00 |
| N18 | 50.00 | 65.00 | 70.00 | 75.00 * | 65.00 | 65.00 |
| N19 | 70.00 * | 60.00 | 70.00 * | 70.00 * | 65.00 | 67.00 |
| N20 | 45.00 | 55.00 | 75.00 * | 60.00 | 45.00 | 56.00 |
| N21 | 55.00 | 55.00 | 70.00 * | 65.00 | 60.00 | 61.00 |
| N22 | 75.00 * | 55.00 | 60.00 | 55.00 | 45.00 | 58.00 |
| N23 | 60.00 | 65.00 * | 55.00 | 55.00 | 50.00 | 57.00 |
| N24 | 75.00 * | 50.00 | 75.00 * | 50.00 | 60.00 | 62.00 |
| Average | 54.79 | 58.33 | 63.96 * | 60.83 | 54.58 | 58.50 |

Unit: %

| Subjects | T3 | T4 | Fz | Cz | Pz | Average |
|---|---|---|---|---|---|---|
| N01 | 75.00 * | 75.00 * | 60.00 | 75.00 * | 75.00 * | 72.00 |
| N02 | 40.00 | 60.00 * | 35.00 | 30.00 | 35.00 | 40.00 |
| N03 | 65.00 | 65.00 | 50.00 | 60.00 | 70.00 * | 62.00 |
| N04 | 70.00 * | 55.00 | 45.00 | 50.00 | 55.00 | 55.00 |
| N05 | 70.00 * | 45.00 | 45.00 | 50.00 | 55.00 | 53.00 |
| N06 | 60.00 | 40.00 | 50.00 | 60.00 | 65.00 * | 55.00 |
| N07 | 55.00 | 55.00 | 60.00 | 55.00 | 65.00 * | 58.00 |
| N08 | 50.00 | 60.00 | 80.00 | 65.00 | 85.00 * | 68.00 |
| N09 | 65.00 * | 60.00 | 50.00 | 65.00 * | 55.00 | 59.00 |
| N10 | 60.00 | 55.00 | 65.00 * | 55.00 | 65.00 * | 60.00 |
| N11 | 65.00 | 55.00 | 80.00 * | 60.00 | 60.00 | 64.00 |
| N12 | 55.00 | 65.00 * | 60.00 | 60.00 | 60.00 | 60.00 |
| N13 | 55.00 * | 55.00 * | 55.00 * | 55.00 * | 40.00 | 52.00 |
| N14 | 75.00 | 50.00 | 55.00 | 80.00 * | 75.00 | 67.00 |
| N15 | 70.00 | 60.00 | 75.00 | 80.00 | 85.00 * | 74.00 |
| N16 | 70.00 | 60.00 | 55.00 | 65.00 | 75.00 * | 65.00 |
| N17 | 65.00 * | 60.00 | 60.00 | 60.00 | 55.00 | 60.00 |
| N18 | 65.00 | 45.00 | 70.00 * | 60.00 | 70.00 * | 62.00 |
| N19 | 65.00 | 75.00 * | 55.00 | 60.00 | 65.00 | 64.00 |
| N20 | 75.00 | 65.00 | 75.00 | 85.00 * | 85.00 * | 77.00 |
| N21 | 70.00 * | 70.00 * | 60.00 | 45.00 | 55.00 | 60.00 |
| N22 | 55.00 | 70.00 * | 55.00 | 60.00 | 55.00 | 59.00 |
| N23 | 70.00 * | 60.00 | 55.00 | 70.00 * | 55.00 | 62.00 |
| N24 | 35.00 | 75.00 * | 55.00 | 50.00 | 45.00 | 52.00 |
| Average | 62.50 | 59.79 | 58.54 | 60.63 | 62.71 * | 60.83 |

Unit: %

| Subjects | T3 | T4 | Fz | Cz | Pz | Average |
|---|---|---|---|---|---|---|
| N01 | 80.00 | 65.00 | 75.00 | 85.00 * | 65.00 | 74.00 |
| N02 | 80.00 * | 75.00 | 60.00 | 65.00 | 65.00 | 69.00 |
| N03 | 75.00 * | 55.00 | 70.00 | 55.00 | 70.00 | 65.00 |
| N04 | 75.00 | 75.00 | 80.00 | 90.00 * | 85.00 | 81.00 |
| N05 | 75.00 * | 60.00 | 65.00 | 70.00 | 50.00 | 64.00 |
| N06 | 55.00 | 50.00 | 65.00 | 75.00 | 80.00 * | 65.00 |
| N07 | 65.00 | 60.00 | 60.00 | 65.00 | 75.00 * | 65.00 |
| N08 | 65.00 | 70.00 | 75.00 | 80.00 * | 75.00 | 73.00 |
| N09 | 70.00 * | 60.00 | 65.00 | 70.00 * | 70.00 * | 67.00 |
| N10 | 70.00 | 85.00 * | 75.00 | 80.00 | 80.00 | 78.00 |
| N11 | 65.00 | 45.00 | 70.00 * | 60.00 | 65.00 | 61.00 |
| N12 | 65.00 | 60.00 | 80.00 * | 65.00 | 60.00 | 66.00 |
| N13 | 60.00 | 60.00 | 60.00 | 45.00 | 70.00 * | 59.00 |
| N14 | 65.00 | 90.00 * | 70.00 | 75.00 | 90.00 * | 78.00 |
| N15 | 55.00 | 70.00 | 75.00 | 65.00 | 85.00 * | 70.00 |
| N16 | 80.00 * | 60.00 | 55.00 | 60.00 | 80.00 * | 67.00 |
| N17 | 65.00 | 65.00 | 75.00 | 85.00 * | 75.00 | 73.00 |
| N18 | 55.00 | 65.00 | 75.00 | 85.00 | 90.00 * | 74.00 |
| N19 | 65.00 | 90.00 * | 70.00 | 80.00 | 80.00 | 77.00 |
| N20 | 70.00 | 85.00 * | 80.00 | 80.00 | 70.00 | 77.00 |
| N21 | 70.00 | 75.00 | 65.00 | 80.00 * | 55.00 | 69.00 |
| N22 | 85.00 * | 65.00 | 75.00 | 65.00 | 60.00 | 70.00 |
| N23 | 80.00 * | 70.00 | 55.00 | 65.00 | 70.00 | 68.00 |
| N24 | 35.00 | 45.00 | 55.00 | 60.00 * | 50.00 | 49.00 |
| Average | 67.71 | 66.67 | 68.75 | 71.04 | 71.46 * | 69.13 |

α = 0.01, N = 480

| Components | Electrode | Case | Accuracy (%) T-Value | Accuracy (%) p-Value | Potential (µV) T-Value | Potential (µV) p-Value |
|---|---|---|---|---|---|---|
| N200 | T3 | target vs. non-target | 2.335 | 0.028 | −0.1772 | 0.859 |
| N200 | T4 | target vs. non-target | 3.366 | 0.002 * | −1.237 | 0.217 |
| N200 | Fz | target vs. non-target | 6.915 | 0.000 *** | −3.971 | 0.000 *** |
| N200 | Cz | target vs. non-target | 5.159 | 0.000 *** | −2.249 | 0.025 |
| N200 | Pz | target vs. non-target | 2.298 | 0.031 * | −0.576 | 0.565 |
| P300 | T3 | target vs. non-target | 5.873 | 0.000 *** | 2.521 | 0.012 |
| P300 | T4 | target vs. non-target | 5.113 | 0.000 *** | 1.021 | 0.308 |
| P300 | Fz | target vs. non-target | 3.743 | 0.001 * | 0.028 | 0.977 |
| P300 | Cz | target vs. non-target | 4.335 | 0.000 *** | 2.011 | 0.045 * |
| P300 | Pz | target vs. non-target | 4.663 | 0.000 *** | 2.792 | 0.005 * |
| N2P3 | T3 | target vs. non-target | 7.935 | 0.000 *** | 4.288 | 0.000 *** |
| N2P3 | T4 | target vs. non-target | 6.496 | 0.000 *** | 3.046 | 0.002 * |
| N2P3 | Fz | target vs. non-target | 11.327 | 0.000 *** | 4.224 | 0.000 *** |
| N2P3 | Cz | target vs. non-target | 9.182 | 0.000 *** | 4.522 | 0.000 *** |
| N2P3 | Pz | target vs. non-target | 9.245 | 0.000 *** | 4.160 | 0.000 *** |

α = 0.01, N = 480

| Component | Case | T-Value | p-Value |
|---|---|---|---|
| N2P3 | T4 vs. Pz | −1.809 | 0.084 |

Unit: %

| Subjects | N200 | P300 | N2P3 |
|---|---|---|---|
| N01 | 70.00 | 95.00 * | 90.00 |
| N02 | 85.00 * | 55.00 | 85.00 * |
| N03 | 60.00 | 75.00 | 80.00 * |
| N04 | 70.00 | 60.00 | 85.00 * |
| N05 | 65.00 | 65.00 | 70.00 * |
| N06 | 65.00 | 60.00 | 85.00 * |
| N07 | 70.00 * | 70.00 * | 70.00 * |
| N08 | 60.00 | 80.00 * | 80.00 * |
| N09 | 60.00 | 65.00 | 80.00 * |
| N10 | 80.00 | 70.00 | 85.00 * |
| N11 | 50.00 | 80.00 * | 65.00 |
| N12 | 55.00 | 70.00 | 75.00 * |
| N13 | 75.00 * | 65.00 | 75.00 * |
| N14 | 70.00 | 75.00 | 80.00 * |
| N15 | 70.00 | 90.00 * | 75.00 |
| N16 | 45.00 | 70.00 | 75.00 * |
| N17 | 80.00 | 55.00 | 85.00 * |
| N18 | 75.00 | 80.00 | 90.00 * |
| N19 | 70.00 | 65.00 | 85.00 * |
| N20 | 65.00 | 80.00 | 85.00 * |
| N21 | 65.00 | 85.00 * | 85.00 * |
| N22 | 60.00 | 65.00 | 80.00 * |
| N23 | 70.00 * | 65.00 | 70.00 * |
| N24 | 65.00 * | 50.00 | 60.00 |
| Average | 66.67 | 70.42 | 78.96 * |

Dependent Variable: Average Accuracies. Unit: %

| Components | T3 | T4 | Fz | Cz | Pz | NN Technology |
|---|---|---|---|---|---|---|
| N200 | 54.79 | 58.33 | 63.96 | 60.83 | 54.58 | 66.67 |
| P300 | 62.50 | 59.79 | 58.54 | 60.63 | 62.71 | 70.42 |
| N2P3 | 67.71 | 66.67 | 68.75 | 71.04 | 71.46 | 78.96 |

Dependent Variable: Average Accuracies from the Analysis of NN Technology

| Component (I) | Component (J) | Mean Difference (I−J) | p-Value |
|---|---|---|---|
| N2P3 | N200 | 12.29167 *** | 0.000 *** |
| N2P3 | P300 | 8.54167 * | 0.011 * |
| P300 | N200 | 3.75000 | 0.400 |

Unit: %

| Subjects | N200 DG | N200 SG | P300 DG | P300 SG | N2P3 DG | N2P3 SG |
|---|---|---|---|---|---|---|
| N01 | 46.00 | 64.00 | 82.00 | 62.00 | 88.00 | 60.00 |
| N02 | 68.00 | 72.00 | 48.00 | 32.00 | 72.00 | 66.00 |
| N03 | 44.00 | 60.00 | 60.00 | 64.00 | 60.00 | 70.00 |
| N04 | 62.00 | 74.00 | 60.00 | 50.00 | 82.00 | 80.00 |
| N05 | 46.00 | 58.00 | 52.00 | 54.00 | 60.00 | 68.00 |
| N06 | 62.00 | 48.00 | 44.00 | 66.00 | 64.00 | 66.00 |
| N07 | 64.00 | 54.00 | 56.00 | 60.00 | 60.00 | 70.00 |
| N08 | 60.00 | 56.00 | 68.00 | 68.00 | 70.00 | 76.00 |
| N09 | 62.00 | 46.00 | 64.00 | 54.00 | 72.00 | 62.00 |
| N10 | 56.00 | 60.00 | 66.00 | 54.00 | 86.00 | 70.00 |
| N11 | 66.00 | 30.00 | 56.00 | 72.00 | 64.00 | 58.00 |
| N12 | 34.00 | 64.00 | 60.00 | 60.00 | 52.00 | 80.00 |
| N13 | 66.00 | 66.00 | 62.00 | 42.00 | 64.00 | 54.00 |
| N14 | 52.00 | 68.00 | 84.00 | 50.00 | 78.00 | 78.00 |
| N15 | 60.00 | 58.00 | 78.00 | 70.00 | 78.00 | 62.00 |
| N16 | 44.00 | 52.00 | 64.00 | 66.00 | 60.00 | 74.00 |
| N17 | 62.00 | 72.00 | 68.00 | 52.00 | 72.00 | 74.00 |
| N18 | 58.00 | 72.00 | 66.00 | 58.00 | 70.00 | 78.00 |
| N19 | 70.00 | 64.00 | 62.00 | 66.00 | 80.00 | 74.00 |
| N20 | 48.00 | 64.00 | 82.00 | 72.00 | 78.00 | 76.00 |
| N21 | 58.00 | 64.00 | 54.00 | 66.00 | 64.00 | 74.00 |
| N22 | 48.00 | 68.00 | 66.00 | 52.00 | 62.00 | 78.00 |
| N23 | 66.00 | 48.00 | 68.00 | 56.00 | 76.00 | 60.00 |
| N24 | 62.00 | 62.00 | 60.00 | 44.00 | 56.00 | 42.00 |
| Average | 56.83 | 60.17 | 63.75 | 57.92 | 69.50 | 68.75 |

t-test, DG vs. SG: N200, p = 0.2827; P300, p = 0.0353 *; N2P3, p = 0.7764.

α = 0.01, N = 480

| Components | Case | T-Value | p-Value |
|---|---|---|---|
| N200 | correct selected R vs. correct selected L | 1.066 | 0.292 |
| P300 | correct selected R vs. correct selected L | −0.639 | 0.525 |
| N2P3 | correct selected R vs. correct selected L | −0.289 | 0.774 |

α = 0.01, N = 340 for Boys and 140 for Girls

| Components | Electrode | Case | T-Value | p-Value |
|---|---|---|---|---|
| N200 | T3 | boys vs. girls | −1.193 | 0.246 |
| N200 | T4 | boys vs. girls | −0.327 | 0.746 |
| N200 | Fz | boys vs. girls | −4.405 | 0.000 *** |
| N200 | Cz | boys vs. girls | −2.348 | 0.028 * |
| N200 | Pz | boys vs. girls | −2.116 | 0.045 * |
| N200 | NN | boys vs. girls | −1.398 | 0.176 |
| P300 | T3 | boys vs. girls | 0.564 | 0.590 |
| P300 | T4 | boys vs. girls | −1.284 | 0.212 |
| P300 | Fz | boys vs. girls | 0.586 | 0.564 |
| P300 | Cz | boys vs. girls | 1.328 | 0.226 |
| P300 | Pz | boys vs. girls | 0.973 | 0.341 |
| P300 | NN | boys vs. girls | 0.709 | 0.486 |
| N2P3 | T3 | boys vs. girls | 0.983 | 0.336 |
| N2P3 | T4 | boys vs. girls | −1.600 | 0.124 |
| N2P3 | Fz | boys vs. girls | −0.203 | 0.841 |
| N2P3 | Cz | boys vs. girls | −1.788 | 0.088 |
| N2P3 | Pz | boys vs. girls | 0.201 | 0.842 |
| N2P3 | NN | boys vs. girls | −1.303 | 0.206 |

Dependent Variable: Average Bit-Rate

| Components | T3 | T4 | Fz | Cz | Pz | NN Technology |
|---|---|---|---|---|---|---|
| N200 | 0.0114 | 0.0345 | 0.0977 | 0.0585 | 0.0104 | 0.1401 |
| P300 | 0.0781 | 0.0477 | 0.0363 | 0.0563 | 0.0808 | 0.2123 |
| N2P3 | 0.1585 | 0.1401 | 0.1782 | 0.2260 | 0.2353 | 0.4418 |
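As a worked illustration of how bit-rates of this kind can be derived from accuracies, the sketch below implements the standard information transfer rate definition of Wolpaw et al., B = log2 N + P log2 P + (1 − P) log2[(1 − P)/(N − 1)], for N options and accuracy P. The function names are hypothetical, and the 35 s per selection used in the usage note is an assumption: this section does not state the trial duration, and that value was chosen only because it approximately reproduces the tabulated figures for a two-option system.

```python
import math

def bits_per_selection(p, n_classes=2):
    """Wolpaw information transfer per selection, in bits."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("accuracy p must lie in [0, 1]")
    if n_classes < 2:
        raise ValueError("at least two options are required")
    bits = math.log2(n_classes)
    if p > 0.0:
        bits += p * math.log2(p)                      # term for correct selections
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_classes - 1))  # error term
    return bits

def bits_per_minute(p, seconds_per_selection, n_classes=2):
    """Scale bits per selection by the number of selections per minute."""
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")
    return bits_per_selection(p, n_classes) * 60.0 / seconds_per_selection
```

Under the assumed 35 s per selection, `bits_per_minute(0.7896, 35)` comes out close to the 0.4418 reported for N2P3 with NN technology, and `bits_per_minute(0.5479, 35)` close to the 0.0114 reported for N200 at T3. At chance level (P = 0.5 with two options) the formula gives zero bits, which is why accuracies just above 50% translate into very small bit-rates.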

| References | Stimulation Modality | Electrodes | Subjects | Advantages | Drawbacks |
|---|---|---|---|---|---|
| [46] | P300 Spatial real, virtual sounds | Cpz, Poz, P3, P4, P5, P6, Cz, Pz in 10/10 | 9 HS | Both stimuli types generate different event-related potential response patterns allowing for their separate classification. | |
| [48] | P300 Spatial vs. non-spatial | F3, Fz, F4, T7, C3, Cz, C4, T8, Cp3, Cp4, P3, Pz, P4, PO7, PO8, Oz | 16 HS | Training improves performance in an auditory BCI paradigm. Motivation influences performance and P300 amplitude. | |
| [17] | P300 Spatial auditory | 32 channels in the extended 10–20 system | 9 HS | ErrP-based error correction can be used to make a substantial improvement in the performance of aBCIs. | |
| [41] | ASSR+P300 Earphone auditory | Fz, Cz, Pz, P3, P4, Oz, T3 and T4 | 10 HS | The average accuracy of the hybrid system is better than that of P300 or ASSR alone. | |
| [45] | ASSR Earphone auditory | Cz, Oz, T7, and T8 | 6 HS | The average classification accuracies online were excellent, more than 80%. | |
| [31] | P300 Headphone auditory | Fz, Cz, Pz, Oz, P3, P4, PO7, PO8 | 10 HS | Mental repetition can be a simpler alternative to the mental count to reduce the mental workload. | |
| [16] | Speakers | 19 channels | 12 HS | Multi-loudspeaker patterns through vowel and numeral sound stimulation provided an average accuracy greater than 85%. | |
| The proposed method | P300 Headphone auditory | T3, T4, Fz, Cz, Pz | 24 HS | The mental shadowing task helps the user focus on the desired option with ease, reducing the mental workload. | Average accuracy = 78.96%; a higher accuracy rate would be preferable. |

| N2P3 classification result | Specified R | Specified L | Total |
|---|---|---|---|
| R | 921 | 226 | 1147 |
| L | 279 | 974 | 1253 |
| Total | 1200 | 1200 | |
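The counts in this confusion matrix can be turned into summary metrics with a few lines of arithmetic. The sketch below is illustrative only; the dictionary layout and function names are assumptions, and the four counts are copied from the table.

```python
# Keys are (classified_as, specified) pairs; counts are taken from the table.
confusion = {
    ("R", "R"): 921, ("R", "L"): 226,
    ("L", "R"): 279, ("L", "L"): 974,
}

def accuracy(cm):
    """Share of all trials whose classification matches the specified side."""
    correct = sum(n for (pred, true), n in cm.items() if pred == true)
    return correct / sum(cm.values())

def recall(cm, label):
    """Share of trials specified as `label` that were classified as `label`."""
    specified = sum(n for (pred, true), n in cm.items() if true == label)
    return cm[(label, label)] / specified

def precision(cm, label):
    """Share of trials classified as `label` that were specified as `label`."""
    classified = sum(n for (pred, true), n in cm.items() if pred == label)
    return cm[(label, label)] / classified

if __name__ == "__main__":
    print(f"accuracy    = {100 * accuracy(confusion):.2f}%")
    for side in ("R", "L"):
        print(f"recall {side}    = {100 * recall(confusion, side):.2f}%")
        print(f"precision {side} = {100 * precision(confusion, side):.2f}%")
```

The overall accuracy is (921 + 974) / 2400, about 78.96%, which agrees with the N2P3 average reported for the NN analysis. Recall is 76.75% for R and about 81.17% for L, so the classifier leans slightly toward labeling trials as L.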


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, K.-T.; Hsieh, K.-L.; Lee, S.-Y. Using Mental Shadowing Tasks to Improve the Sound-Evoked Potential of EEG in the Design of an Auditory Brain–Computer Interface. Appl. Sci. 2023, 13, 856. https://doi.org/10.3390/app13020856
