1. Introduction

According to the International Atomic Energy Agency (IAEA), micro nuclear reactors are classified as a type of small modular reactor (SMR) that possess an electrical power output of less than 10 MW [

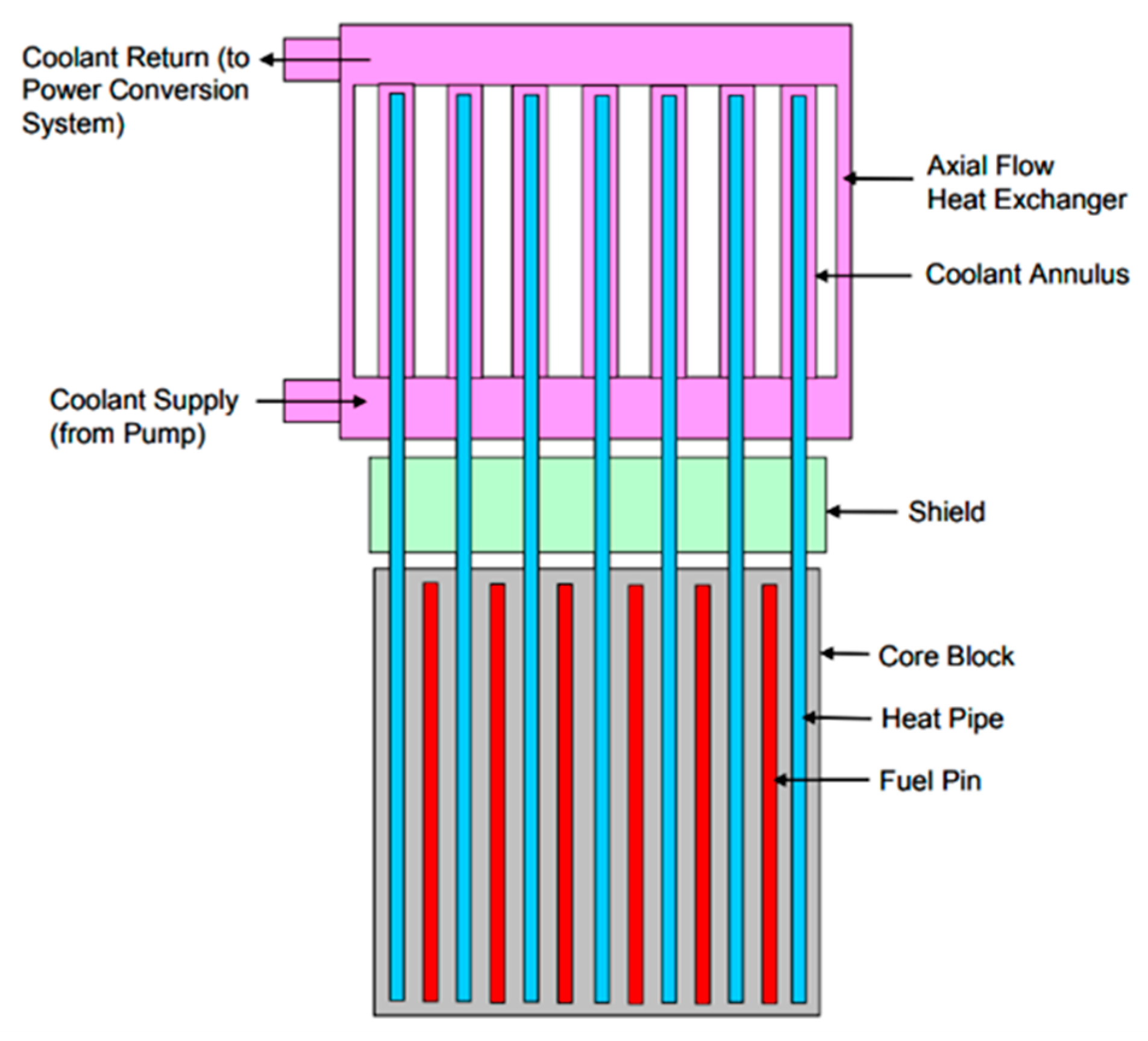

1]. One of the modern reactors in SMR systems is the HPR, which has a solid core and utilizes heat pipes to transfer heat from the core. Thanks to the solid-state property of the reactor and the use of heat pipes, the HPR possesses several advantageous features, including inherent safety, compactness, simple system architecture, and strong transportability. Furthermore, HPRs exhibit broad application prospects in low-power, unmanned applications, such as remote islands and underwater vehicles [

2].

Since the 1960s, space exploration has driven significant advances in space reactors, including HPRs, liquid metal reactors, and gas-cooled reactors. In 1968, C. A. Heath and colleagues conceptualized a space power system that used heat pipes to connect the core to thermionic diodes [3]. By transferring heat through heat pipes and isolating the diodes from the harsh reactor environment, this design concept effectively mitigated the adverse effects of high neutron flux and core expansion on thermionic diode performance. In the same year, Anderson et al. studied a space reactor power system that used heat pipes for heat transfer, out-of-pile thermionic diodes for electricity generation, and dual central absorber rods for reactor power control. Compared with a conventional space reactor of the same power capacity with in-core thermionic diodes, this design reduced the size and weight of the reactor system [4]. A study conducted by Los Alamos National Laboratory (LANL) in the United States compared three distinct heat pipe cooled reactor designs with electrical power outputs ranging from 1 to 500 kW and core temperatures of up to 1200–1700 K [5]. The calculation results and analysis showed that none of the three HPRs exceeded the safety thresholds for any operating parameter. The authors noted that increasing the power of an HPR would require more heat pipes, which would in turn increase the weight, size, and complexity of the system.

Since the beginning of the 21st century, advances in materials, high-temperature heat pipes, and other key technologies have once again promoted the development of HPRs. In 2000, building on LANL's heat-pipe power system (HPS) project, Poston et al. proposed a heat-pipe cooled nuclear reactor power system [6] for a Mars exploration mission, which they gradually developed into the heat-pipe-operated Mars exploration reactor (HOMER) [7] in subsequent studies. Since then, many institutions have put forward HPR designs for different application scenarios and requirements, for example, SAIRS [8] and HP-STMCs [9] proposed by NMU, MSR-A [10] proposed by MIT, Kilopower [11] proposed by LANL, and eVinci [12] proposed by Westinghouse. In 2012, LANL and NASA's Glenn Research Center (GRC) conducted the first nuclear test in the history of the HPR, in which heat pipes extracted heat from the core and served as the heat source for a Stirling engine that generated electrical power [11]. LANL and the GRC then continued to refine the Kilopower project, culminating in the development of KRUSTY (Kilopower Reactor Using Stirling TechnologY) [13,14,15]. After almost six decades of development, a comprehensive design scheme and technical route have been established for the HPR, and significant advances have been achieved in numerous pivotal technologies. However, the HPR still faces several obstacles, including the manufacture of heat pipes that can withstand high temperatures, monolith and cladding materials that resist high temperatures, efficient thermoelectric conversion technology, and independent, reliable control methods.

One of the challenges in modeling the HPR is the heat transfer model for high-temperature heat pipes. Modeling methods fall into two categories: the thermal resistance network method and the two-phase flow method. The thermal resistance network method is a fast, approximate way to calculate transient and steady-state heat transfer in heat pipes with low computational complexity [16]. In 1998, Zuo and Faghri proposed the first practical thermal resistance network for analyzing transient processes in heat pipes. They abstracted the sections of the heat pipe into thermal resistances, assuming that heat transfer in the evaporation and condensation sections occurs only in the radial direction and that heat transfer in the adiabatic section occurs only in the axial direction [17]. This method adopts the lumped parameter idea, which greatly simplifies the heat pipe model and reduces its heat transfer equations to a set of non-homogeneous linear equations. Guo et al. established the super thermal conductivity model (STCM) based on the thermal resistance network model; it can calculate not only the heat transfer process of the heat pipe but also the flow rates of the liquid and vapor phases of the working fluid [18]. By incorporating heat transfer limit calculations, the STCM can also analyze the safety margin of the heat pipe. Jibin et al. represented the solid and liquid regions as thermal resistance, thermal capacitance, or thermal inductance elements, thereby establishing a lumped parameter network model of the wick-type heat pipe. Their simulation results show that the total thermal resistance of the heat pipe decreases with increasing input heat flux but approaches a lower limit, and that it depends closely on the thickness and porosity of the wick and on the type of working fluid [19].
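To make the lumped thermal resistance idea concrete, the sketch below treats a heat pipe at steady state as resistances in series (evaporator wall and wick in the radial direction, the vapor core in the axial direction, then the condenser wick and wall), in the spirit of the Zuo and Faghri network. It is a minimal illustration, not the model of any cited work: the geometry, the conductivities, and the very large effective vapor conductivity are hypothetical values chosen only to show the arithmetic.

```python
import math
from typing import Sequence


def radial_resistance(r_in: float, r_out: float, k: float, length: float) -> float:
    """Radial conduction resistance of a cylindrical shell, in K/W."""
    return math.log(r_out / r_in) / (2.0 * math.pi * k * length)


def axial_resistance(length: float, k_eff: float, area: float) -> float:
    """Axial conduction resistance of a rod with effective conductivity k_eff, in K/W."""
    return length / (k_eff * area)


def series_heat_rate(t_hot: float, t_cold: float, resistances: Sequence[float]) -> float:
    """Steady-state heat rate (W) through thermal resistances connected in series."""
    return (t_hot - t_cold) / sum(resistances)


# Hypothetical heat pipe geometry and properties (illustrative only).
L_EVAP, L_COND = 0.5, 0.5                          # evaporator / condenser lengths, m
L_VAPOR = 1.0                                      # axial vapor path length, m
R_VAPOR, R_WICK, R_WALL = 0.0065, 0.0070, 0.0080   # radii, m
K_WALL, K_WICK = 20.0, 40.0                        # assumed conductivities, W/(m*K)
K_VAPOR_EFF = 1.0e6                                # assumed effective conductivity of the vapor core

network = [
    radial_resistance(R_WICK, R_WALL, K_WALL, L_EVAP),              # evaporator wall
    radial_resistance(R_VAPOR, R_WICK, K_WICK, L_EVAP),             # evaporator wick
    axial_resistance(L_VAPOR, K_VAPOR_EFF, math.pi * R_VAPOR**2),   # vapor core
    radial_resistance(R_VAPOR, R_WICK, K_WICK, L_COND),             # condenser wick
    radial_resistance(R_WICK, R_WALL, K_WALL, L_COND),              # condenser wall
]

q = series_heat_rate(1000.0, 950.0, network)  # 1000 K source, 950 K sink
print(f"total resistance = {sum(network):.4e} K/W, heat rate = {q:.1f} W")
```

Because the network is linear, the heat rate scales directly with the temperature difference; a transient version would add lumped heat capacities at each node, which is where the set of linear equations mentioned above comes from.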

When a solid-state reactor such as an HPR heats up from a cold to a hot state, the core materials expand: the distance between their atoms or molecules increases and their density decreases, which increases the neutron leakage rate. The thermal expansion of the materials therefore changes the reactivity of the HPR. Traditional nuclear–thermal analysis is thus no longer sufficient for HPR simulation, and high-fidelity simulation methods are now adopted to study HPR transients. For example, Wei Xiao et al. built a high-fidelity 3D neutronics–thermal elasticity multi-physics coupling model of the KRUSTY HPR using the open-source codes OpenMC, Nektar++, and SfePy, based on the Monte Carlo and finite element methods [20]. Their results showed that the reactivity change due to thermal expansion accounted for 89.4% of the total reactivity feedback and that, even if a limited number of heat pipes failed, the remaining heat pipes could still provide sufficient heat transfer capacity to ensure core safety. Guo et al. proposed a coupled calculation method called “thermalMechanicsFoam” for solving transient multi-physics fields in HPRs; it uses the Reactor Monte Carlo (RMC) code to calculate the power distribution within the core and OpenFOAM to calculate core temperature and expansion [21]. Tao Li et al. established a transient analysis method that couples three-dimensional core heat transfer with two-dimensional heat pipe heat transfer [22]. Their steady-state analysis showed that the isothermal property of the heat pipes improved axial and radial heat transfer in the reactor. Their start-up analysis found that the isothermal characteristics of the heat pipes caused reverse heat transfer between the heat pipes and the core structure, which could flatten the temperature distribution in the core.
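As a rough worked example of how thermal expansion lowers material density, assume an isotropic material with a constant linear expansion coefficient α. Heating by ΔT scales every length by (1 + αΔT), so the volume scales by (1 + αΔT)³ and the density, together with the macroscopic cross-section Σ = Nσ at fixed microscopic cross-section σ, scales by 1/(1 + αΔT)³; the neutron mean free path 1/Σ grows by the inverse factor. The value of α below is hypothetical and is not a property of any HPR material from the cited studies.

```python
def density_ratio(alpha: float, delta_t: float) -> float:
    """rho(T) / rho(T0) for isotropic expansion with constant linear coefficient alpha (1/K)."""
    return 1.0 / (1.0 + alpha * delta_t) ** 3


def mean_free_path_ratio(alpha: float, delta_t: float) -> float:
    """lambda(T) / lambda(T0): the mean free path 1/Sigma scales inversely with number density."""
    return 1.0 / density_ratio(alpha, delta_t)


ALPHA = 1.0e-5   # hypothetical linear expansion coefficient, 1/K
DELTA_T = 600.0  # hypothetical cold-to-hot temperature rise, K
print(f"density ratio        = {density_ratio(ALPHA, DELTA_T):.5f}")
print(f"mean free path ratio = {mean_free_path_ratio(ALPHA, DELTA_T):.5f}")
```

A longer mean free path lets more neutrons escape before interacting, which is the leakage mechanism behind the negative expansion feedback; quantifying the reactivity change itself requires a neutronics calculation such as the coupled models cited above.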

For the structural design and safety analysis of HPRs, the high-fidelity neutronics–thermal elasticity multi-physics coupling models mentioned above are necessary. However, they come at the cost of a complex calculation process and a heavy computational workload. For research on HPR control methods, the model must not only reflect parameter changes during dynamic processes accurately but also be computationally light enough to support rapid simulation-based verification of control methods.

In reactor control, ensuring energy balance is one of the most important control objectives of a nuclear reactor installation. The proportional–integral–derivative (PID) algorithm is the most widely used controller because its principle is simple and it is easy to implement. However, a PID controller must go through a tedious parameter-tuning process before it is put into use, and once the operating state of the controlled plant changes significantly, a PID controller with the same parameters may no longer achieve the same control performance. Therefore, optimization algorithms such as the genetic algorithm and particle swarm optimization [23,24] and intelligent methods such as fuzzy logic [25] are often used to improve the traditional PID controller and achieve better control performance.
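For reference, a fixed-gain discrete PID law of the kind discussed above can be sketched as follows. The class name, gains, and sampling period are hypothetical; a real reactor power controller would also need output limits, anti-windup, and rate constraints on the control drums, all of which are omitted here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DiscretePID:
    """Textbook positional PID with fixed gains (illustrative sketch)."""
    kp: float
    ki: float
    kd: float
    dt: float  # sampling period, s
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt
        # No derivative term on the first sample: there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical gains; these are exactly what must be retuned when the operating point changes.
pid = DiscretePID(kp=2.0, ki=0.5, kd=0.1, dt=0.1)
u = pid.step(setpoint=0.9, measurement=1.0)  # demand 90% FP while measuring 100% FP
```

With error = setpoint − measurement, a negative output calls for less power; how that output maps to a control drum command is plant-specific and not modeled here.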

In addition, control methods based on deep reinforcement learning (DRL) have also been applied to reactor control. Chen et al. used the deep deterministic policy gradient (DDPG) algorithm to train an RL controller for the power control of a boiling water reactor [26]. In simulations with continuously introduced disturbances, the reactor power oscillated noticeably under the original control method, whereas with the RL controller the reactor system overcame various disturbances and the power regulation process was rapid and stable. In essence, RL-based control methods treat the reactor system as a “black box”: the agent is trained in a virtual environment and learns how to obtain higher rewards, driven by a specific learning algorithm such as deep Q-network (DQN), DDPG, or twin delayed deep deterministic policy gradient (TD3) [27].

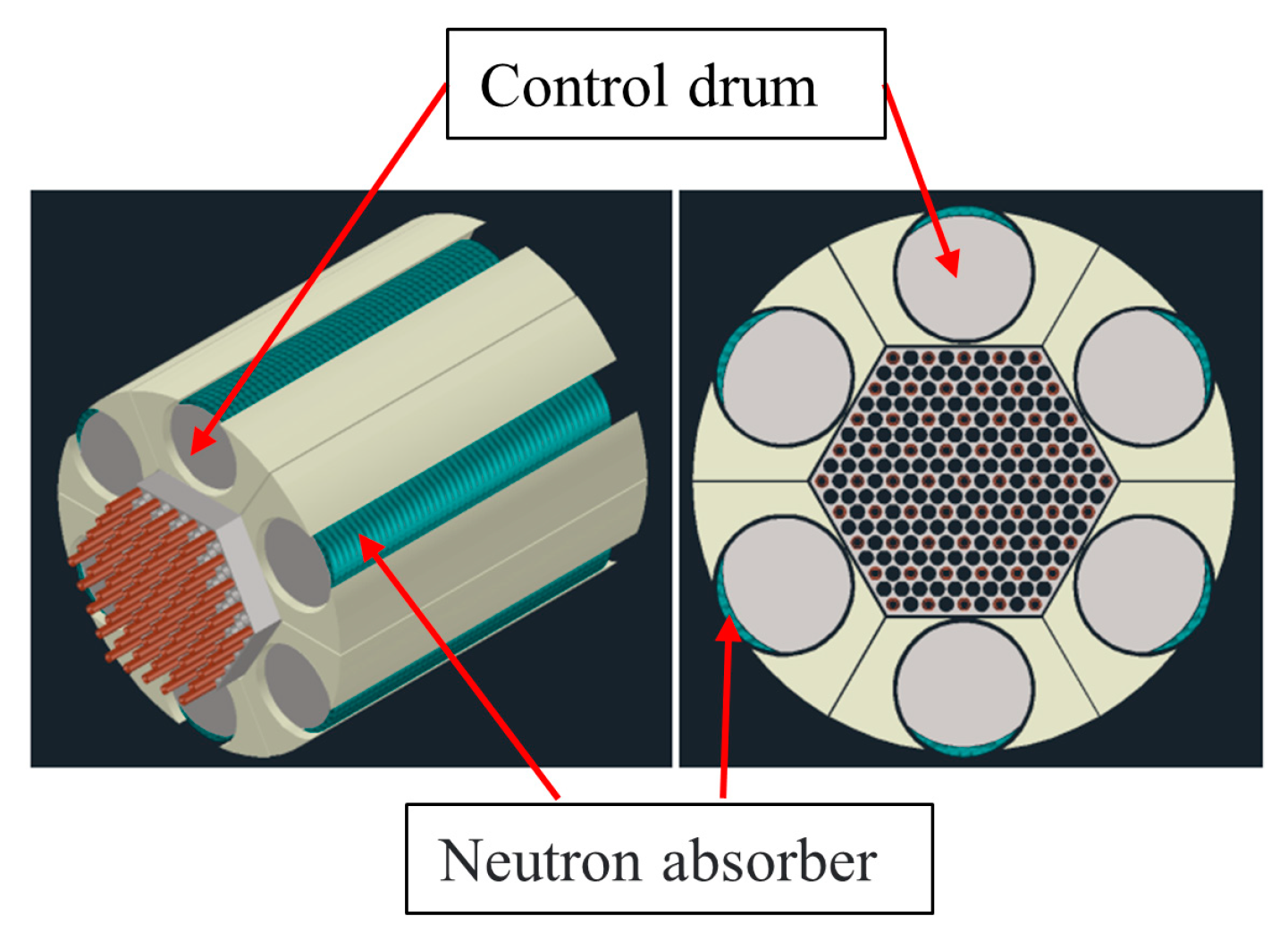

In this work, to meet the speed and accuracy requirements that research on HPR power control methods places on the simulation model, a lightweight dynamic model of the HPR from the core to the heat pipe heat exchanger is established. Taking the MegaPower HPR as an example, the arrangement of components in the core is summarized, and the lumped parameter method is used to represent a single heat-generating unit of the core as an equivalent cylinder. Meanwhile, a simplified thermal resistance network method is used to analyze the heat transfer process of the heat pipe, and the core parameters of the heat pipe heat exchanger are designed. Second, the dynamic characteristics of the HPR under reactivity and flow disturbances are analyzed separately. Finally, the TD3 algorithm, which performs well in continuous control, is selected as the core algorithm for HPR power control, and the application of reinforcement learning control to HPR power regulation is explored.

4. Research on Power Control Method of HPR

At present, the control method mainly used in practice for reactor power control is PID control. However, tuning the parameters of a PID controller is tedious and depends on the designer's experience. Furthermore, PID parameters are generally tuned for full-power conditions, which meets the power control requirements of large nuclear power plants that mainly operate stably at full power. For the HPR, which must be able to track load changes, it is difficult to obtain the same control performance at different power levels with a single set of fixed PID parameters. It is therefore necessary to study an intelligent optimal control algorithm suited to the HPR.

RL control is a form of direct adaptive optimal control [33]. Training is a search for an optimal control policy under the constraints of the control rules and the guidance of the optimization objectives, and it does not require a priori knowledge. RL-based control can adapt to variations in the characteristics of the controlled plant and the associated uncertainties, thereby helping the control system attain its optimization objective. TD3 is a reinforcement learning algorithm with good convergence that handles continuous control problems, so it can be applied to the optimal power control of HPRs.

4.1. TD3 Algorithm

The TD3 algorithm is a model-free, off-policy, actor–critic reinforcement learning method [34] that improves on the DDPG algorithm. DDPG in turn derives from DQN: it combines an actor–critic architecture with the techniques of DQN, which eases the convergence difficulties of plain actor–critic methods and allows continuous control problems to be handled. However, DQN tends to overestimate Q values, and the same problem appears in DDPG. Double DQN (DDQN) reduces this overestimation by separating action selection from target Q value estimation. TD3 further improves DDPG and avoids the overestimation problem with three key techniques: the double critic network, target policy smoothing regularization, and the delayed policy update.

The double network refers to the use of two sets of critic networks, which expands the four neural networks of the DDPG algorithm into the six neural networks of the TD3 algorithm. The network architecture of TD3 is shown in Figure 10. With two critic networks, TD3 takes the smaller of the outputs of the two target critic networks as the predicted return when calculating the temporal difference (TD) error, which allows it to deal effectively with the overestimation problem.
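The clipped double-Q target can be written in a few lines. In this sketch the two target critic outputs at the next state are assumed to be already computed (in TD3 they are evaluated at the target actor's smoothed action); gamma is the discount factor, and the numbers used are arbitrary, not results from this paper.

```python
from typing import Tuple


def clipped_double_q_target(reward: float, q1_next: float, q2_next: float,
                            done: bool, gamma: float = 0.99) -> float:
    """TD target y = r + gamma * (1 - done) * min(Q1'(s', a~), Q2'(s', a~))."""
    bootstrap = 0.0 if done else gamma * min(q1_next, q2_next)
    return reward + bootstrap


def td_errors(target: float, q1: float, q2: float) -> Tuple[float, float]:
    """TD errors of the two current critics; both regress toward the same target."""
    return target - q1, target - q2


y = clipped_double_q_target(reward=1.0, q1_next=10.0, q2_next=8.0, done=False, gamma=0.9)
e1, e2 = td_errors(y, q1=7.5, q2=9.0)
```

Taking the minimum biases the target toward underestimation rather than overestimation, which is the trade-off TD3 deliberately makes.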

Target policy smoothing regularization is a regularization strategy inspired by the SARSA algorithm; it aims to mitigate the overestimation problem of the value networks by smoothing the value estimate around the target action. TD3 implements it by adding clipped random noise to the output of the actor target network.
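A minimal sketch of the smoothing step: Gaussian noise is added to the actor target's output, the noise is clipped, and the result is clipped to the action range. The range [−1, 1] matches the tanh output of the actor described in Section 4.2; sigma = 0.2 and noise_clip = 0.5 are the defaults from the original TD3 paper and are assumptions here, since this paper's values are not given in this section.

```python
import random
from typing import Optional


def smoothed_target_action(target_action: float, sigma: float = 0.2,
                           noise_clip: float = 0.5, a_min: float = -1.0,
                           a_max: float = 1.0,
                           rng: Optional[random.Random] = None) -> float:
    """Return clip(a' + clip(N(0, sigma), -c, c), a_min, a_max)."""
    if rng is None:
        rng = random.Random()
    noise = rng.gauss(0.0, sigma)
    noise = max(-noise_clip, min(noise_clip, noise))       # clip the exploration noise
    return max(a_min, min(a_max, target_action + noise))   # keep the action in range
```

Clipping the noise keeps the smoothed action close to the original one, so the critic is regularized over a small neighborhood rather than the whole action space.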

The delayed policy update means that the actor network is updated less frequently than the critic networks. The actor network is updated to maximize the expected cumulative reward, which requires the critic network to evaluate the value of each action. If the critic network is unstable, the actor network will oscillate as well. Therefore, the critic networks should be updated more frequently than the actor network, i.e., the actor network should be updated only after the critic networks have stabilized. In TD3, the critic networks are updated once at every step, while the actor network is updated every d steps.
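The update schedule can be sketched as a simple loop. Following the original TD3 formulation, the target networks are assumed to be soft-updated together with the actor; d = 2 is the default from that paper and is only an assumption here.

```python
from typing import Dict, List


def delayed_update_plan(total_steps: int, d: int = 2) -> List[Dict[str, object]]:
    """For each training step, record which networks are updated.

    Critics are updated at every step; the actor and the target networks
    only on every d-th step.
    """
    plan = []
    for step in range(1, total_steps + 1):
        update_actor = step % d == 0
        plan.append({"step": step, "critics": True,
                     "actor": update_actor, "targets": update_actor})
    return plan


plan = delayed_update_plan(total_steps=6, d=2)
print(sum(p["critics"] for p in plan), sum(p["actor"] for p in plan))  # 6 3
```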

The pseudo-code of the TD3 algorithm [35] is given below. The algorithm first creates the actor and critic neural networks and randomly initializes their parameters. At the beginning of training, the algorithm accumulates initial experience, during which no training takes place. The current critic networks are gradually updated in the direction that reduces the TD error, while the current actor network is updated in the direction of actions with a higher estimated value. Unlike DQN, which updates the target network by copying the current network parameters outright, TD3 uses a soft update that blends the two sets of network parameters with a small constant τ (0 < τ ≪ 1). As a result, the target network parameters change only slightly at each update and the calculated target values vary smoothly, so the algorithm remains stable even though the target networks are updated frequently.
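The soft update is Polyak averaging over the network parameters. The sketch below works on flat lists of floats as a stand-in for network weights; τ = 0.005 is the value used in the original TD3 paper and is only an assumption here.

```python
from typing import List


def soft_update(target: List[float], current: List[float], tau: float) -> List[float]:
    """Polyak averaging: theta' <- tau * theta + (1 - tau) * theta'."""
    if not 0.0 < tau < 1.0:
        raise ValueError("tau must lie in (0, 1)")
    return [tau * c + (1.0 - tau) * t for t, c in zip(target, current)]


# A target parameter starting at 0 tracks a fixed current parameter of 1.
target, current, tau = [0.0], [1.0], 0.005
for _ in range(1000):
    target = soft_update(target, current, tau)
# The gap shrinks geometrically: after n updates, target = 1 - (1 - tau) ** n.
```

With τ = 1 the update would reduce to the hard copy used in DQN, which is why the function rejects it.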

Algorithm: TD3

1. Create the actor network and the two critic networks, randomly initializing their parameters.
2. Create the corresponding target networks and set their parameters equal to those of the current networks.
3. Create an empty experience replay buffer.
4. Initialize the sampling time, the maximum simulation time, and the number of episodes, and calculate the maximum number of steps per episode.

[Algorithm image: remaining TD3 training-loop steps]
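Two bookkeeping pieces from the initialization steps, the experience replay buffer and the number of steps per episode, are sketched below. The class and function names, the FIFO eviction policy, and the warm-up check (which mirrors the initial experience accumulation described above) are assumptions for illustration; the paper does not specify its buffer implementation.

```python
import random
from collections import deque
from typing import Any, List, Optional, Tuple

Transition = Tuple[Any, float, float, Any, bool]  # (state, action, reward, next_state, done)


class ReplayBuffer:
    """Fixed-capacity FIFO experience replay buffer (illustrative sketch)."""

    def __init__(self, capacity: int, seed: Optional[int] = None):
        self.buffer: deque = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done) -> None:
        self.buffer.append((state, action, reward, next_state, done))

    def ready(self, warmup: int) -> bool:
        """True once enough initial experience has been collected to start training."""
        return len(self.buffer) >= warmup

    def sample(self, batch_size: int) -> List[Transition]:
        """Uniformly sample a mini-batch without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def max_steps(t_final: float, t_sample: float) -> int:
    """Steps per episode, assuming t_final is an integer multiple of t_sample."""
    return int(round(t_final / t_sample))
```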

4.2. Settings of TD3 Algorithm

When using the TD3 algorithm to create the HPR controller, attention must be paid to the choice of states, the structure of the actor–critic networks, the reward function, the learning-rate decay strategy, and the other hyperparameters.

During training and deployment, an RL agent must continuously acquire the states of the environment to determine its next action, and the number of states it acquires should be limited. On the one hand, too many states can bury the useful information; on the other hand, more states require a larger neural network with more parameters to train, which increases training time. Therefore, in this paper, based on practical needs and experience, five states are selected as the state set. The meaning of each state is shown in Table 2.

The actual nuclear power and the set value of nuclear power are the basic states of the controlled parameter. The speed of the control drums at the previous moment is also included: the drum speed is both the output and an input of the agent, which helps the agent learn the advantages and disadvantages of its control actions and produce a better control strategy. The difference between the actual nuclear power and the load power indicates the nuclear power deviation, and the rate of change in nuclear power represents how fast the power changes.
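The five states can be assembled into the agent's observation vector as sketched below. The element order, the normalization to fractions of full power, and the backward-difference estimate of the power rate are assumptions; Table 2 of the paper defines the actual states.

```python
from typing import List


def build_observation(p_actual: float, p_set: float, drum_speed_prev: float,
                      p_actual_prev: float, dt: float) -> List[float]:
    """Assemble the five-element state vector described in the text.

    Powers are assumed normalized to full power (FP); the power rate is
    approximated with a backward difference over one sampling period dt.
    """
    power_error = p_actual - p_set                  # deviation of nuclear power from the load
    power_rate = (p_actual - p_actual_prev) / dt    # rate of change of nuclear power
    return [p_actual, p_set, drum_speed_prev, power_error, power_rate]


obs = build_observation(p_actual=0.95, p_set=0.90, drum_speed_prev=0.1,
                        p_actual_prev=0.96, dt=0.5)
```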

Both the actor network and the critic networks adopt the current/target network structure of the DQN algorithm; that is, each network has a current network and a target network with the same structure but different parameters. The TD3 algorithm therefore contains two types of neural networks and six neural networks in total. The structures of the actor network and the critic network are shown in Figure 11. The actor network is a three-layer fully connected neural network that uses the ReLU and tanh activation functions, with the tanh function restricting the actor output to between −1 and 1. The critic network has two branches, one receiving the observed states and the other receiving the control drum speed. The two branches are combined by an addition layer and processed by a three-layer fully connected network to obtain the value of the state–action pair.
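A forward-pass sketch of the two architectures, written with NumPy only to show how data flows through them (no training code). The layer width of 64, the He-style random initialization, the ReLU after the addition layer, and the linear critic output are assumptions; Figure 11 of the paper gives the actual structure.

```python
import numpy as np


def dense(n_in: int, n_out: int, rng: np.random.Generator):
    """One fully connected layer: He-scaled random weights and zero biases."""
    weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    return weights, np.zeros(n_out)


class Actor:
    """Three dense layers: ReLU, ReLU, then tanh to bound the drum-speed command to [-1, 1]."""

    def __init__(self, n_obs: int = 5, hidden: int = 64, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.layers = [dense(n_obs, hidden, rng), dense(hidden, hidden, rng), dense(hidden, 1, rng)]

    def __call__(self, obs) -> np.ndarray:
        x = np.asarray(obs, dtype=float)
        for i, (w, b) in enumerate(self.layers):
            x = x @ w + b
            x = np.tanh(x) if i == len(self.layers) - 1 else np.maximum(x, 0.0)
        return x


class Critic:
    """State branch and action branch merged by element-wise addition, then three dense layers."""

    def __init__(self, n_obs: int = 5, n_act: int = 1, hidden: int = 64, seed: int = 1):
        rng = np.random.default_rng(seed)
        self.state_branch = dense(n_obs, hidden, rng)
        self.action_branch = dense(n_act, hidden, rng)
        self.trunk = [dense(hidden, hidden, rng), dense(hidden, hidden, rng), dense(hidden, 1, rng)]

    def __call__(self, obs, action) -> np.ndarray:
        ws, bs = self.state_branch
        wa, ba = self.action_branch
        s = np.asarray(obs, dtype=float) @ ws + bs
        a = np.asarray(action, dtype=float) @ wa + ba
        x = np.maximum(s + a, 0.0)  # addition layer followed by ReLU (assumed)
        for i, (w, b) in enumerate(self.trunk):
            x = x @ w + b
            if i < len(self.trunk) - 1:
                x = np.maximum(x, 0.0)
        return x  # linear output: estimated Q value of the state-action pair


actor, critic = Actor(), Critic()
obs = np.array([0.95, 0.90, 0.1, 0.05, -0.02])
action = actor(obs)             # shape (1,)
q_value = critic(obs, action)   # shape (1,)
```

In TD3 there would be one actor and two critics of this form, each with a target copy, giving the six networks mentioned above.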

The learning rates of the actor and critic networks should not be set too large, or the networks will oscillate around the optimum without converging. At the same time, too small a learning rate leads to slow convergence and may even trap the network in a local optimum. To set the learning rate reasonably, this paper adopts the exponential learning-rate decay strategy given in Equation (14) to adjust the learning rate dynamically: the learning rate decays at fixed episode intervals. However, the learning rate cannot decay indefinitely, so an appropriate lower limit should be set.

where Lr is the learning rate, ep is the index of the current training episode, and I is the number of episodes between two adjacent learning-rate decays.
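Equation (14) itself is not reproduced in this text, so the sketch below assumes the common step-wise form Lr = max(Lr0 · γ^⌊ep/I⌋, Lr_min), which matches the description of decay at fixed episode intervals with a lower limit. The initial rate Lr0, the decay factor γ, and the floor Lr_min are hypothetical parameters, not values from the paper.

```python
def decayed_learning_rate(ep: int, lr0: float, decay: float, interval: int,
                          lr_min: float) -> float:
    """Step-wise exponential decay: lr0 * decay ** floor(ep / interval), floored at lr_min."""
    return max(lr0 * decay ** (ep // interval), lr_min)


# Hypothetical settings: halve the rate every 100 episodes, never below 1e-5.
schedule = [decayed_learning_rate(ep, 1e-3, 0.5, 100, 1e-5) for ep in (0, 99, 100, 250, 10_000)]
```

The integer division keeps the rate constant within each interval, so the decay happens in discrete steps rather than continuously.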

Reward is an extremely important part of reinforcement learning because it lets the algorithm evaluate which actions lead to better results. In this paper, a reward function is designed to provide appropriate rewards and penalties so that the algorithm learns a good control strategy and converges quickly. As shown in Equation (15), the reward function consists of three parts: a load tracking part, a control drum part, and a nuclear power overrun part.

Load tracking means that the controller stabilizes the nuclear power at the set power. The magnitude of the load tracking reward is proportional to the absolute deviation between the nuclear power and the load power, as in Equation (16), where the actual nuclear power is taken at the current time step and a positive scaling factor adjusts the weight of the load tracking reward. Because the algorithm is updated in the direction of increasing reward, Equation (16) carries a negative sign, so that the smaller the power deviation, the larger the reward.
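A minimal sketch of the load tracking term as described: a negative, positively scaled absolute power deviation. The function name, powers expressed as fractions of full power, and k_track = 10 are hypothetical; Equation (16) in the paper is the authoritative form.

```python
def load_tracking_reward(p_actual: float, p_load: float, k_track: float) -> float:
    """r_track = -k_track * |P(t) - P_load|; it is largest (zero) when power matches the load."""
    if k_track <= 0:
        raise ValueError("k_track must be positive")
    return -k_track * abs(p_actual - p_load)


r_close = load_tracking_reward(0.91, 0.90, k_track=10.0)  # small deviation
r_far = load_tracking_reward(0.95, 0.90, k_track=10.0)    # larger deviation, lower reward
```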

The control drum is an electromechanical device with a finite service life. Frequent changes in its speed accelerate its aging and increase the likelihood of malfunction or even failure. Therefore, this paper introduces a control drum reward term to optimize the rotation strategy of the control drums, as in Equation (17); it is computed from the control drum rotation speeds at the current and previous time steps and weighted by a positive scaling factor.
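The control drum term can be sketched as a penalty on the change in drum speed between consecutive steps. The absolute-difference form, the negative sign, and the name k_drum are assumptions consistent with the description above, not a transcription of Equation (17).

```python
def drum_speed_change_reward(speed_now: float, speed_prev: float, k_drum: float) -> float:
    """Penalize changes in control drum speed between consecutive steps (assumed |dv| form)."""
    if k_drum <= 0:
        raise ValueError("k_drum must be positive")
    return -k_drum * abs(speed_now - speed_prev)


r_steady = drum_speed_change_reward(0.3, 0.3, k_drum=2.0)  # constant speed, no penalty
r_jerky = drum_speed_change_reward(0.3, 0.1, k_drum=2.0)   # speed change is penalized
```

A squared difference would be an equally plausible choice that penalizes large jumps more strongly; which one the paper uses is defined by its Equation (17).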

During nuclear power regulation, if the nuclear power deviates too far from the set power, the control attempt has failed. To let the agent learn in time how to handle this situation, the nuclear power overrun part in Equation (18) is used: a penalty value is given when the nuclear power overruns, with the overrun criterion defined relative to the initial nuclear power using a margin of 20% of full power.
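A sketch of the overrun term and of combining the three parts. Both the overrun condition |P(t) − P0| > 20% FP and the additive combination of the three parts in Equation (15) are assumptions inferred from the description; the penalty value is hypothetical.

```python
def overrun_penalty(p_actual: float, p_initial: float, penalty: float,
                    margin: float = 0.2) -> float:
    """Return -penalty when |P(t) - P0| exceeds the margin (20% FP), else 0 (assumed form)."""
    return -penalty if abs(p_actual - p_initial) > margin else 0.0


def total_reward(r_track: float, r_drum: float, r_overrun: float) -> float:
    """Combine the three reward parts, assumed here to be additive."""
    return r_track + r_drum + r_overrun


r = total_reward(-0.5, -0.1, overrun_penalty(0.85, 1.0, penalty=100.0))
```

In many RL setups an overrun also terminates the episode, but whether this paper does so is not stated in this section.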

In summary, to make the TD3 algorithm applicable to HPR power control, the hyperparameters of the algorithm are set as described in this section; most of them are listed in Table 3.

6. Conclusions

In this paper, a lightweight dynamic model of the “MegaPower” HPR is established by building an equivalent model of the HPR core, designing the heat pipe heat exchanger, and constructing a thermal resistance network based on the lumped parameter idea. In the simulation of the steady-state full-power case, the absolute steady-state error between the model solution and the reference value does not exceed 3.2%, so the model can be used to analyze the dynamic characteristics of the HPR and to study its power control methods. The dynamic characteristics of the HPR are analyzed by introducing a −10 pcm reactivity step disturbance and a 5% mass flow rate step disturbance. The results show that the HPR can reach a new steady state through its own negative temperature feedback and has good self-stability and self-regulation. However, its settling time is long, and the parameters of each part oscillate during the transient. To improve the disturbance rejection and load tracking capability of the HPR, this paper designs an RL controller for reactor power control based on the TD3 algorithm, with appropriate settings for the states, the neural network structure, the reward function, and the other hyperparameters of the TD3 algorithm. To verify the RL controller, its control performance was compared with the uncontrolled case in a step from 100% FP to 90% FP, and the RL controller was compared with a PID controller under a compound condition and under significant load power fluctuations.
The results show that the RL controller can quickly regulate the reactor power and suppress the impact of disturbances: in both simulations, the settling time of nuclear power is less than 51.70 s and the steady-state error is less than 2.37%. This indicates that the RL controller can improve the dynamic and steady-state performance of the HPR and can control nuclear power under all the operating conditions tested.

The influence of the SCO2 Brayton cycle on reactor power control is not considered in this paper. Building on this work, future research could proceed in two directions. On the one hand, coordinated control strategies and methods could be proposed based on the safe operation requirements of both the HPR and the SCO2 Brayton cycle. On the other hand, since HPR nuclear power plants may operate unattended in the future, autonomous control strategies and other key technologies, such as situational awareness and autonomous fault-tolerant control, could be studied.