Creating Interactive Scenes in 3D Educational Games: Using Narrative and Technology to Explore History and Culture

Abstract

1. Introduction

2. State of the Art

3. Materials and Methods

4. Results

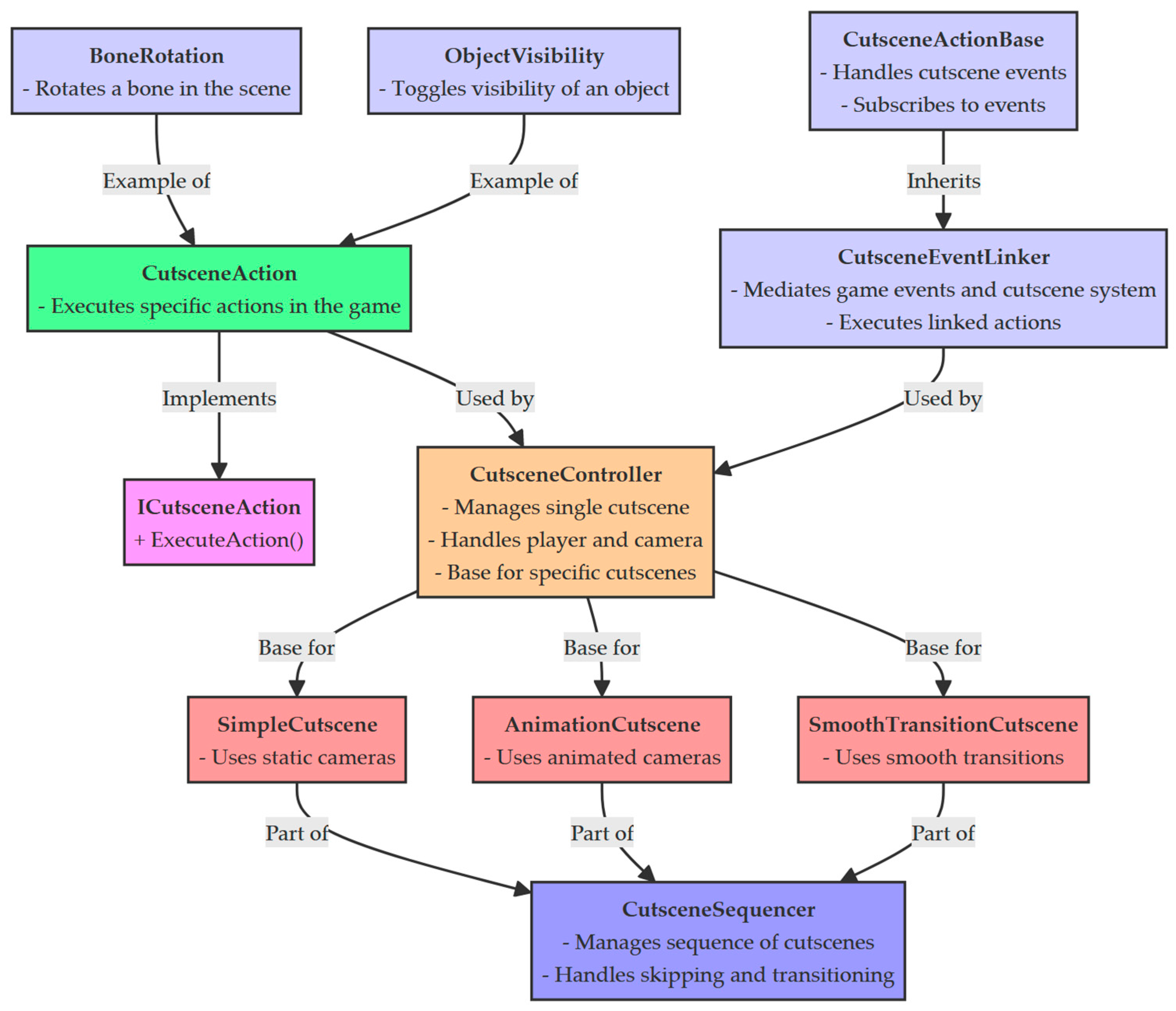

4.1. Sequencer Structure

4.1.1. CutsceneEventLinker

4.1.2. ICutsceneAction

4.1.3. CutsceneAction

4.1.4. CutsceneController

4.1.5. CutsceneSequencer

4.2. Example Application of the Sequencer

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Aspect | AnimationCutscene.cs | SimpleCutscene.cs | SmoothTransitionCutscene.cs |
|---|---|---|---|
| Method | Uses an animation to control camera movement | Uses linear interpolation to move the camera from its initial to its final position | Uses an easing function to transition the camera smoothly from its initial to its final position |
| Linear Interpolation | x = (1 − t) × a + t × b, where x is the camera position, rotation, or FOV (field of view); a and b are the parameter values at the keyframes; t is the normalized animation time | x = (1 − t) × a + t × b, where x is the camera position; a is the initial position; b is the final position; t is the normalized time since the start of the cutscene | N/A |
| Time Normalization | t = (currentTime − startTime)/duration, where currentTime is the current animation time, startTime is the animation start time, and duration is the animation duration | t = currentTime/duration, where currentTime is the time elapsed since the start of the cutscene and duration is the duration of the cutscene | t = currentTime/duration, where currentTime is the time elapsed since the start of the cutscene and duration is the duration of the cutscene |
| Easing Function | N/A | N/A | f(t) = t^3 × (3 − 2 × t), where f(t) is the weight used for interpolation and t is the normalized time since the start of the cutscene |
| Position Calculation | cameraPosition = (1 − t) × startPosition + t × endPosition | cameraPosition = (1 − t) × startPosition + t × endPosition | cameraPosition = (1 − f(t)) × startPosition + f(t) × endPosition |
| Rotation Calculation | cameraRotation = (1 − t) × startRotation + t × endRotation | N/A | N/A |
| FOV Calculation | cameraFOV = (1 − t) × startFOV + t × endFOV | N/A | N/A |
| Transformation Update | cameraTransform.position = cameraPosition; cameraTransform.rotation = cameraRotation; camera.fieldOfView = cameraFOV | cameraTransform.position = cameraPosition | cameraTransform.position = cameraPosition |
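The camera formulas in the table above can be checked with a short, language-neutral sketch. It is written in Python rather than Unity C#, assumes positions are plain (x, y, z) tuples, and uses hypothetical helper names (normalize_time, lerp, ease, and the two *_position functions); clamping t to [0, 1] is an added assumption. The easing weight follows the table's f(t) exactly as given.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]  # assumption: plain tuples instead of Unity's Vector3


def normalize_time(current_time: float, duration: float, start_time: float = 0.0) -> float:
    """t = (currentTime - startTime) / duration, clamped to [0, 1] (clamp is an assumption)."""
    if duration <= 0:
        return 1.0  # a zero-length cutscene jumps straight to its end state
    t = (current_time - start_time) / duration
    return min(max(t, 0.0), 1.0)


def lerp(a: float, b: float, t: float) -> float:
    """x = (1 - t) * a + t * b; usable for a position component or an FOV value."""
    return (1.0 - t) * a + t * b


def lerp_vec3(a: Vec3, b: Vec3, t: float) -> Vec3:
    # Component-wise linear interpolation of two positions
    return (lerp(a[0], b[0], t), lerp(a[1], b[1], t), lerp(a[2], b[2], t))


def ease(t: float) -> float:
    """Easing weight as stated in the table: f(t) = t^3 * (3 - 2t)."""
    return t ** 3 * (3.0 - 2.0 * t)


def simple_cutscene_position(start: Vec3, end: Vec3, current_time: float, duration: float) -> Vec3:
    # SimpleCutscene: interpolate directly with the normalized time t
    return lerp_vec3(start, end, normalize_time(current_time, duration))


def smooth_cutscene_position(start: Vec3, end: Vec3, current_time: float, duration: float) -> Vec3:
    # SmoothTransitionCutscene: interpolate with the eased weight f(t) instead of t
    return lerp_vec3(start, end, ease(normalize_time(current_time, duration)))
```

For example, halfway through a 2-second cutscene (t = 0.5) the linear variant sits at the midpoint of the path, whereas the eased variant has covered only f(0.5) = 0.25 of it.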

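| Component | Function | Interactions |
|---|---|---|
| CutsceneEventLinker | Mediates between game events and the cutscene system | Calls CutsceneController to launch cutscenes in response to detected events |
| ICutsceneAction | Defines the basic behavior of actions used in cutscenes | Implemented by CutsceneAction |
| CutsceneAction | Executes specific actions within cutscenes based on ICutsceneAction | Launched by CutsceneController |
| CutsceneController | Manages the launching and control of cutscenes | Launches CutsceneActions; receives their order and conditions from CutsceneSequencer |
| CutsceneSequencer | Manages the sequence of cutscenes, coordinating their launch in the proper order | Passes the sequence and launch conditions to CutsceneController |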

| Element | Description | Implementation Mechanisms | Examples of Use |
|---|---|---|---|
| Event Listening | Registers and responds to events generated in the game environment | UnityEvents or custom signals used to detect events | Player entering a specified area |
| Mapping Events to Actions | Defines relationships between events and cutscene actions | A dictionary (e.g., Dictionary<TEvent, TAction>) mapping events to actions | Defeating an opponent initiates a dialogue cutscene |
| Initiating Cutscenes | Launches specific cutscenes or action sequences in response to detected events | Calls to CutsceneController methods that launch actions | Launching an animation upon achieving a goal |
| Communication with CutsceneController | Exchanges information and commands between CutsceneEventLinker and CutsceneController | Direct connections and method calls between components | Initiating cutscenes quickly in response to events |
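The event-to-action mapping in the table above can be sketched as a dictionary lookup. This is a Python illustration, not the Unity implementation: events are assumed to be identified by strings, and CutsceneController.play() is a hypothetical method standing in for whatever launch call the real controller exposes.

```python
from typing import Callable, Dict, List


class CutsceneController:
    """Hypothetical stand-in that records which cutscenes it was asked to launch."""

    def __init__(self) -> None:
        self.launched: List[str] = []

    def play(self, cutscene_id: str) -> None:
        self.launched.append(cutscene_id)


class CutsceneEventLinker:
    def __init__(self, controller: CutsceneController) -> None:
        self.controller = controller
        # Equivalent of Dictionary<TEvent, TAction>: event name -> action to run
        self.mapping: Dict[str, Callable[[], None]] = {}

    def link(self, event_name: str, cutscene_id: str) -> None:
        # Bind the event to a direct method call on the controller
        self.mapping[event_name] = lambda: self.controller.play(cutscene_id)

    def on_game_event(self, event_name: str) -> bool:
        """Called when a game event fires; returns True if a cutscene was launched."""
        action = self.mapping.get(event_name)
        if action is None:
            return False  # events without a mapping are ignored
        action()
        return True
```

Keeping the mapping in one place means a new trigger (for example, a player entering an area) is added with a single link() call rather than by editing gameplay code.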

| Method | Method Description | Application | Impact on Process |
|---|---|---|---|
| ExecuteAction() | Activates the main functionality of the action | Launching animations, playing sounds, displaying dialogs | Initiates the cutscene action process, introducing the primary narrative or interactive element to the scene |
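A minimal rendering of the ICutsceneAction contract, using Python's abc module as a stand-in for a C# interface. The method execute_action mirrors ExecuteAction(); PlaySoundAction and run_all are hypothetical examples, and "playing" a sound is simulated by appending to a log.

```python
from abc import ABC, abstractmethod
from typing import List


class ICutsceneAction(ABC):
    """Contract shared by all cutscene actions (mirrors ExecuteAction())."""

    @abstractmethod
    def execute_action(self) -> None:
        ...


class PlaySoundAction(ICutsceneAction):
    """Hypothetical action: simulates playing a clip by logging its name."""

    def __init__(self, clip_name: str, log: List[str]) -> None:
        self.clip_name = clip_name
        self.log = log

    def execute_action(self) -> None:
        self.log.append(f"sound:{self.clip_name}")


def run_all(actions: List[ICutsceneAction]) -> None:
    # Callers depend only on the interface, not on concrete action types
    for action in actions:
        action.execute_action()
```

Because the interface itself cannot be instantiated, every concrete action is forced to provide its own execute_action().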

| Element | Characteristic | Examples of Implementation | Role in the Cutscene System |
|---|---|---|---|
| Action Execution | Implements the logic needed to perform a specified action | Animation, dialog, camera setting changes | Directly triggers interactions with the player or alters the game environment in response to the cutscene scenario |
| Flexibility | Capability to easily incorporate new features and actions | Adding new types of dialogs, interactive elements | Allows for the creation of complex cutscenes without the need to modify the existing system architecture |
| Interaction with Player and Game | Actions can affect the player and game elements, creating a dynamic narrative | Dialog choices that influence plot development, environmental changes | Enriches the player’s experience through interactive narration and the game world’s response to player actions |
| Key Methods | ExecuteAction() | DialogAction.ExecuteAction() displays dialog; AnimationAction.ExecuteAction() triggers animation | Forms the basis for controlling the flow of actions, enabling the management of state and responses to changes in the scene |
| Implementation | Tailored to the specific needs of the cutscene action | DialogAction, AnimationAction | Provides mechanisms necessary to execute diverse actions, allowing for smooth transitions and coordination |
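The table names DialogAction and AnimationAction as concrete actions. The sketch below shows one way such classes might implement the interface; SceneState, the constructor parameters, and the flag used to model a dialog choice influencing the plot are illustrative assumptions, and effects are recorded in lists instead of calling Unity's UI or animation components.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List, Optional


class ICutsceneAction(ABC):
    """Interface every cutscene action implements (mirrors ExecuteAction())."""

    @abstractmethod
    def execute_action(self) -> None:
        ...


@dataclass
class SceneState:
    """Hypothetical shared state that actions read from and write to."""
    shown_lines: List[str] = field(default_factory=list)
    triggered_animations: List[str] = field(default_factory=list)
    flags: Dict[str, bool] = field(default_factory=dict)


class CutsceneAction(ICutsceneAction):
    """Common base: stores the scene state; subclasses supply execute_action()."""

    def __init__(self, state: SceneState) -> None:
        self.state = state


class DialogAction(CutsceneAction):
    def __init__(self, state: SceneState, speaker: str, text: str,
                 sets_flag: Optional[str] = None) -> None:
        super().__init__(state)
        self.speaker = speaker
        self.text = text
        self.sets_flag = sets_flag  # optional plot flag raised by this line

    def execute_action(self) -> None:
        # "Display" the line by recording it, then update plot state if requested
        self.state.shown_lines.append(f"{self.speaker}: {self.text}")
        if self.sets_flag is not None:
            self.state.flags[self.sets_flag] = True


class AnimationAction(CutsceneAction):
    def __init__(self, state: SceneState, target: str, clip: str) -> None:
        super().__init__(state)
        self.target = target
        self.clip = clip

    def execute_action(self) -> None:
        # Record the animation trigger instead of driving a real animator
        self.state.triggered_animations.append(f"{self.target}:{self.clip}")
```

Adding a new action type means adding one subclass; code that calls execute_action() stays unchanged, which is the flexibility the table describes.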

| Element | Function | Operating Mechanism | Impact on Gameplay |
|---|---|---|---|
| Action Initiation | Launches specific CutsceneActions in response to game events or script instructions | CutsceneController activates actions such as animations or dialogs based on a sequence defined by CutsceneSequencer | Creates smooth narrative transitions and engaging interactive elements, enriching the player’s experience |
| State Control | Manages the state of actions (start, pause, stop) | Uses methods such as Execute(), Pause(), and Stop() to control the flow of actions | Ensures that cutscenes are presented at the appropriate pace, enhancing immersion and narrative coherence |
| Coordination with CutsceneSequencer | Works with CutsceneSequencer to manage the order and conditions of action execution | Receives information from CutsceneSequencer about the order and conditions of actions and coordinates their execution | Allows dynamic adjustment of cutscenes to player decisions and other variables, creating non-linear narratives |
| Response to Player Interactions | Adapts to player actions by modifying the flow of cutscenes | Can change the course of a cutscene in response to player choices or other game events | Enhances gameplay by adding interactive elements and letting the player influence the story |
| Implementation of Conditional Mechanisms | Decides whether to execute actions based on whether specific conditions are met | Uses conditional logic to trigger actions or change their order | Introduces complexity and depth to the narrative, allowing for the creation of branched story paths |
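The state control and conditional execution listed for CutsceneController can be sketched as a small state machine that performs one action per update() call. The names execute(), pause(), and stop() follow the table; the one-step-per-tick model, the State enum, and the (action, condition) pairs are assumptions made for illustration.

```python
from enum import Enum
from typing import Callable, List, Optional, Tuple

Action = Callable[[], None]
Condition = Callable[[], bool]


class State(Enum):
    IDLE = "idle"
    RUNNING = "running"
    PAUSED = "paused"
    STOPPED = "stopped"
    FINISHED = "finished"


class CutsceneController:
    """Runs one step per update(), honouring pause/stop and per-step conditions."""

    def __init__(self, steps: List[Tuple[Action, Optional[Condition]]]) -> None:
        self.steps = steps
        self.index = 0
        self.state = State.IDLE

    def execute(self) -> None:
        # Start from idle or resume after a pause; a stopped cutscene stays stopped
        if self.state in (State.IDLE, State.PAUSED):
            self.state = State.RUNNING

    def pause(self) -> None:
        if self.state is State.RUNNING:
            self.state = State.PAUSED

    def stop(self) -> None:
        self.state = State.STOPPED

    def update(self) -> None:
        """Advance by one step; intended to be called once per frame or tick."""
        if self.state is not State.RUNNING:
            return
        if self.index >= len(self.steps):
            self.state = State.FINISHED
            return
        action, condition = self.steps[self.index]
        self.index += 1
        # Conditional mechanism: the step is skipped when its condition is false
        if condition is None or condition():
            action()
        if self.index >= len(self.steps):
            self.state = State.FINISHED
```

Evaluating each condition at the moment its step is reached lets a choice made earlier in the cutscene decide whether a later branch plays.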

| Component | Function | Operating Mechanism | Impact on the Game |
|---|---|---|---|
| Sequence Management of Controllers | Organizes scene controllers in a predetermined order or based on defined conditions | CutsceneSequencer creates and maintains a list or queue of CutsceneControllers, which are activated sequentially | Enables the creation of smooth and complex narrative scenarios, improving the quality of narration and player engagement |
| Conditional Control | Decides whether to initiate actions based on the fulfillment of specific conditions | Uses programming logic to assess conditions (e.g., player choices, game events) before initiating actions | Allows the dynamic adaptation of scenes to player actions and other game variables, creating an interactive and non-linear narrative |
| Coordination with CutsceneController | Collaborates with CutsceneController for effective initiation and management of cutscene actions | CutsceneSequencer communicates the sequence and conditions for initiating actions to CutsceneController | Ensures smooth implementation and execution of scenes, maintaining consistency of experience and integration with gameplay |
| Flow Control | Manages the flow of scenes, including their initiation, stopping, and transition to the next scene | Flow control mechanisms allow for smooth transitions between scenes and their temporal control | Maintains the appropriate pace of narration, affecting player immersion and engagement with the game’s storyline |
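A possible shape for the CutsceneSequencer's queue, assuming each controller can report when it has finished. SceneController, Entry, skip_current(), and the tick-based timing are hypothetical. Conditions are evaluated only when the sequencer reaches an entry, so choices made during earlier scenes can select which branch plays.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Optional


@dataclass
class SceneController:
    """Hypothetical stand-in for a CutsceneController that lasts `length` ticks."""
    name: str
    length: int
    elapsed: int = 0
    started: bool = False

    def start(self) -> None:
        self.started = True

    def tick(self) -> None:
        if self.started and not self.finished:
            self.elapsed += 1

    @property
    def finished(self) -> bool:
        return self.started and self.elapsed >= self.length


@dataclass
class Entry:
    controller: SceneController
    condition: Optional[Callable[[], bool]] = None  # None means "always play"


class CutsceneSequencer:
    def __init__(self, entries: List[Entry]) -> None:
        self.queue: Deque[Entry] = deque(entries)
        self.current: Optional[SceneController] = None
        self.history: List[str] = []  # names of scenes actually played, in order

    def skip_current(self) -> None:
        # e.g. wired to a skip button: abandon the running scene
        self.current = None

    def update(self) -> None:
        """Once per tick: advance the running scene or start the next eligible one."""
        if self.current is not None:
            self.current.tick()
            if not self.current.finished:
                return
            self.current = None
        while self.queue:
            entry = self.queue.popleft()
            if entry.condition is None or entry.condition():
                self.current = entry.controller
                self.current.start()
                self.history.append(self.current.name)
                return

    @property
    def done(self) -> bool:
        return self.current is None and not self.queue
```

Entries whose condition is false are discarded, so the same queue can describe several alternative story paths.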

| Feature | Custom Sequencer | Unreal Engine Sequencer | Unity Timeline |
|---|---|---|---|
| Dynamic Camera Changes | Yes, supports dynamic camera transitions and positions | Yes, allows for complex camera animations and transitions | Yes, supports camera animations and transitions |
| Integration with Multiple Tools | Moderate, can be extended with Unity’s existing tools and assets | High, integrates seamlessly with Unreal’s tools (e.g., Blueprints, VFX, Audio) | High, integrates with Unity’s wide array of tools and assets |
| User Interface Flexibility | Basic UI with skip button functionality; can be improved with a custom UI | Advanced, user-friendly with drag-and-drop functionality | Advanced, intuitive UI with drag-and-drop and keyframe editing |
| Custom Event Handling | Yes, supports custom events through the ICutsceneAction interface | Limited to predefined events; complex custom events need scripting | Yes, but creating custom events is more complex than in the custom system |
| Ease of Use | Moderate, requires scripting knowledge for customization | High, user-friendly with visual scripting (Blueprints) | High, user-friendly with visual editing and scripting |
| Performance | Efficient, but dependent on Unity’s performance optimizations | High, optimized for performance in complex scenes | High, benefits from Unity’s performance optimizations |
| Sound Integration | Yes, integrates sound with adjustable delays | Yes, advanced sound integration and mixing | Yes, supports sound integration and control |
| Modularity | Yes, modular with CutsceneControllers for different scenes; the order of cutscenes is easy to rearrange and modify | Yes, highly modular and reusable components | Yes, modular and reusable components |
| Customization | High, allows for custom scripts and behavior | High, customizable with Blueprints and C++ | High, customizable with C# and custom scripts |
| VR Compatibility | Yes, supports VR with camera tracking control | Yes, supports VR and AR applications | Yes, supports VR and AR applications |
| Sequential Control | Yes, allows sequential control of cutscenes through CutsceneControllers | Yes, strong sequential control with timeline and keyframes | Yes, robust sequential control with timeline and keyframes |
| Extensibility | High, can be extended with additional features and improvements | High, highly extensible with plugins and additional modules | High, extensible with the Unity Asset Store and custom scripts |
| Debugging and Testing | Moderate, basic debugging tools; can be improved with custom logs | High, comprehensive debugging and profiling tools | High, robust debugging and profiling tools |
| Custom Actions | Yes, custom actions are easy to define with the ICutsceneAction interface | Limited, requires extensive scripting or Blueprints | Yes, but requires more scripting than the custom approach |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kaźmierczak, R.; Skowroński, R.; Kowalczyk, C.; Grunwald, G. Creating Interactive Scenes in 3D Educational Games: Using Narrative and Technology to Explore History and Culture. Appl. Sci. 2024, 14, 4795. https://doi.org/10.3390/app14114795
