In this section, experiments are conducted to demonstrate the efficacy of the proposed networks. Section 4.1 introduces the datasets for the seatbelt detection scenarios and the evaluation metrics, and Section 4.2 presents the experimental settings and implementation configuration. In Section 4.3, ablation experiments are carried out to justify each component. Finally, in Section 4.4, the improved models are compared with prevalent networks.

4.1. Dataset and Evaluation Metrics

A suitable dataset is a prerequisite for running experiments and evaluating algorithms in seatbelt detection tasks. Hence, images were gathered from a traffic department. They were taken by cameras positioned at different points along a road, cover various lighting conditions, and contain various types of motor vehicles. The number of samples used in each task is listed in Table 1. Specifically, windshield positioning uses 4000 training and 363 validation samples, with 366 samples held out for testing. The seatbelt classification algorithm uses 3333, 400, and 250 images for training, validation, and testing, respectively. Figure 8 shows several samples used in the experiments.

To quantitatively assess the different approaches and ensure a fair comparison, Accuracy, Precision, Recall, F1 score, and Mean Average Precision (mAP) were adopted as evaluation metrics.

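$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where $TP$ indicates a true positive, $TN$ represents a true negative, $FP$ denotes a false positive, and $FN$ is a false negative.

$$AP = \int_{0}^{1} P(R)\, dR$$

$$mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where $P$ indicates Precision, $R$ represents Recall, $N$ denotes the total number of detection categories, and $AP_i$ is the average precision of class $i$. By calculating the area under the P-R curve for each category, the corresponding $AP$ can be determined, and the mAP is then obtained by averaging the $AP$ values over all categories.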
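As an illustration of how these metrics follow from confusion-matrix counts and a P-R curve, a minimal Python sketch is given below. The function names and the sample counts are hypothetical, and the trapezoidal integration of the P-R curve is an assumption made for brevity; detection toolkits often use an interpolated variant instead.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (accuracy, precision, recall, f1) from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


def average_precision(recalls, precisions):
    """Area under a P-R curve via the trapezoidal rule (an assumption here).

    The points must be sorted by increasing recall.
    """
    area = 0.0
    for i in range(1, len(recalls)):
        width = recalls[i] - recalls[i - 1]
        area += width * (precisions[i] + precisions[i - 1]) / 2
    return area


def mean_average_precision(ap_per_class):
    """Average the per-class AP values to obtain the mAP."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical counts, for illustration only.
acc, prec, rec, f1 = classification_metrics(tp=90, tn=85, fp=10, fn=15)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```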

Moreover, Params, GFLOPs, and Size are used to assess the computational complexity of the models. Params is the number of parameters in a model, reported in millions (M), and reflects spatial complexity. GFLOPs is the number of floating-point operations the network performs, reported in billions (G), and reflects time complexity.
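To make the two complexity measures concrete, the sketch below counts the parameters and floating-point operations of a single standard convolution layer. The layer dimensions are hypothetical, and counting each multiply-accumulate as two operations is a convention assumed here; some profiling tools count it as one.

```python
def conv2d_params(c_in, c_out, kernel, bias=True):
    """Learnable parameters of a standard 2-D convolution."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if bias else 0)


def conv2d_flops(c_in, c_out, kernel, h_out, w_out):
    """Floating-point operations for one forward pass of the layer.

    Each multiply-accumulate is counted as two operations (assumed convention).
    """
    macs = kernel * kernel * c_in * c_out * h_out * w_out
    return 2 * macs


# Hypothetical layer: 3 -> 64 channels, 3x3 kernel, 224x224 output map.
params = conv2d_params(3, 64, 3)
flops = conv2d_flops(3, 64, 3, 224, 224)
print(f"{params / 1e6:.4f} M params, {flops / 1e9:.3f} GFLOPs")
```

Summing these quantities over all layers gives the model-level Params and GFLOPs figures.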

4.3. Ablation Studies and Analysis

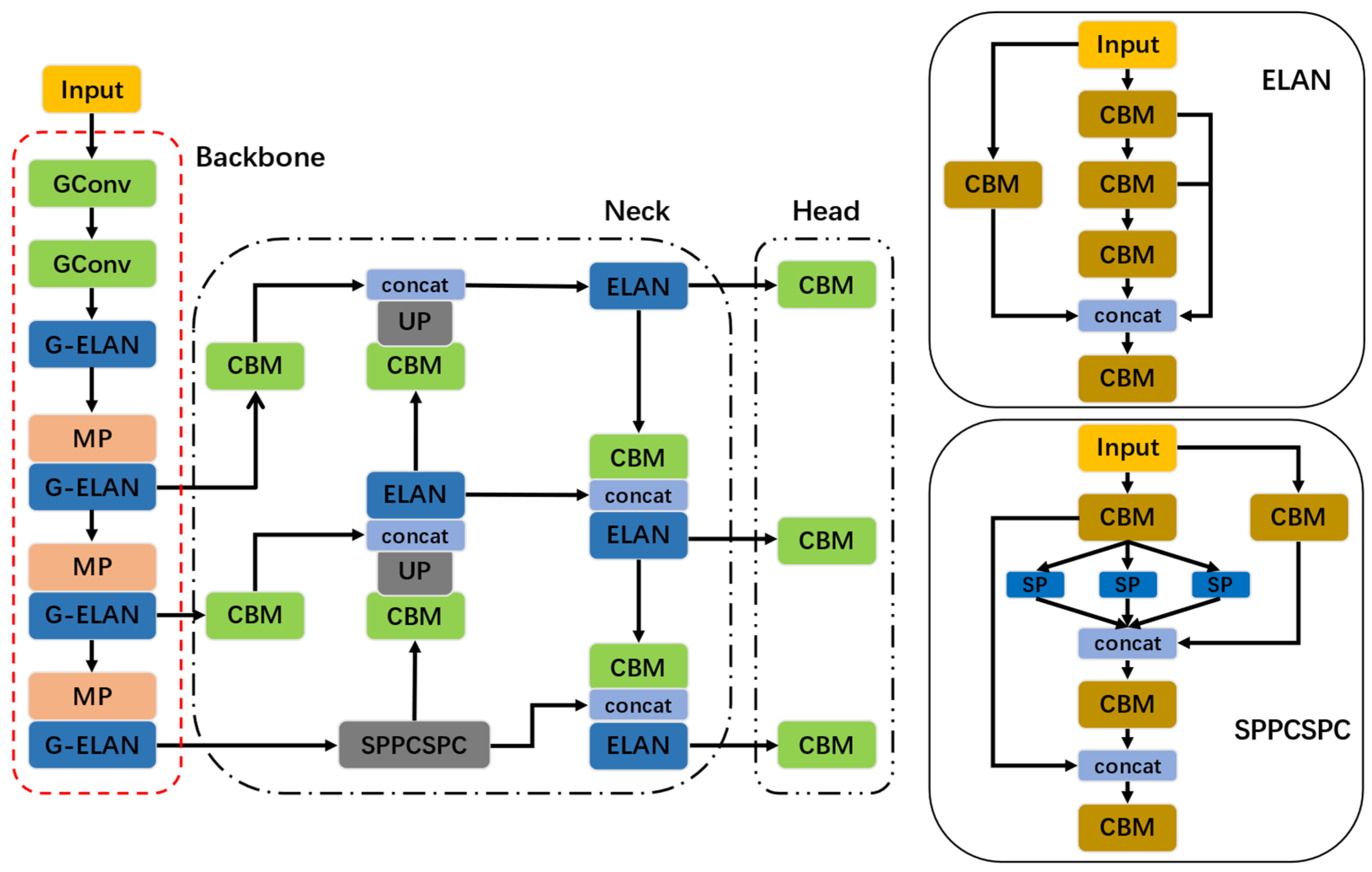

In the windshield detection phase, the influence of each improvement on the network is investigated. Specifically, the improved ELAN based on the Ghost module and the Mish activation function are applied in turn to the original YOLOv7-tiny to verify their rationality and performance. Table 3 presents the experimental results.

Comparing the original network with G-YOLOv7 in Table 3 shows that the lightweight technique slightly decreases the mAP of G-YOLOv7 but considerably reduces the number of parameters and computational operations. In contrast, the mAP of M-YOLOv7 improves with no significant change in Params, confirming the effectiveness of the Mish activation function. Furthermore, compared with the original model, GM-YOLOv7 improves mAP@0.5:0.95 by 3.1% while reducing the network’s parameters and computational operations by 20% and 24.6%, respectively, which demonstrates the advantage of the improvements employed in this paper. The improved ELAN streamlines the network structure and lowers the parameter count and computation overhead, while the Mish activation function provides a stronger non-linear representation, thereby improving the network’s performance.
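For reference, the Mish activation is defined as $\mathrm{Mish}(x) = x \cdot \tanh(\ln(1 + e^{x}))$. The following scalar sketch, written in plain Python with hypothetical function names, contrasts it with ReLU: unlike ReLU, Mish is smooth and lets small negative values pass through.

```python
import math


def softplus(x):
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def relu(x):
    """ReLU, shown only for comparison."""
    return max(x, 0.0)


for value in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(f"x={value:+.1f}  mish={mish(value):+.4f}  relu={relu(value):+.4f}")
```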

Moreover, the detection results of the GM-YOLOv7 and YOLOv7-tiny networks are compared in Figure 9. Across the two sets of images, the detection confidence of GM-YOLOv7 is higher than that of YOLOv7-tiny. In summary, GM-YOLOv7 not only slims the network structure but also improves performance, striking a good balance between detection accuracy and a lightweight design.

In the seatbelt detection phase, the ablation results based on ResNet are presented in Table 4. The ResNet baseline achieved an accuracy of 94%, an F1 score of 94.07%, 25.5 M Params, and 4.1 GFLOPs.

As Table 4 shows, when only TA is inserted into ResNet, the network can adaptively adjust the weights of different channels and positions on the feature maps, enabling a better focus on the features of various types of seatbelts against complicated traffic backgrounds. As a result, the accuracy and F1 score improved by 2.75% and 3.69%, respectively. However, the model with TA requires about 24.4% more computational operations than ResNet, owing to its large-scale matrix operations, which increases inference time.

To alleviate feature redundancy in the network and lower the model’s size and computing overhead, channel pruning with different pruning ratios was introduced. With a pruning ratio of 25%, the accuracy and F1 score increased by 4.25% and 4.18%, respectively, compared with the baseline. This is principally because channel pruning removes redundant parameters that may interfere with the model’s learning. It also mitigates overfitting to a certain extent, enhances generalization, and lowers the number of parameters by 48% compared with the baseline. When the pruning ratio was increased to 50%, Params and GFLOPs decreased by 60% and 44%, respectively, but the accuracy and F1 score increased by only 0.25% and 0.11% over the baseline. The accuracy and F1 score of the model with a 25% pruning ratio were thus 4% and 4.07% higher, respectively, than those of the model with a 50% pruning ratio, at the expense of a 25% increase in Params and GFLOPs. Comparing the two ratios suggests that a moderate pruning ratio is more likely to improve accuracy than an aggressive one. Therefore, the 25% pruning ratio was selected to obtain RST-Net, as it offers the better trade-off between the number of parameters and network performance.
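The effect of the pruning ratio on which channels survive can be sketched as follows. This is an illustration only: the importance criterion used here (ranking channels by the absolute value of a per-channel scale factor, such as a batch-normalization weight) is an assumption, and the function name and sample values are hypothetical rather than taken from the experiments.

```python
def select_channels(scale_factors, pruning_ratio):
    """Return the indices of the channels kept after pruning.

    Channels are ranked by |scale factor| (assumed importance criterion) and
    the lowest-ranked fraction `pruning_ratio` is removed.
    """
    if not 0.0 <= pruning_ratio < 1.0:
        raise ValueError("pruning_ratio must be in [0, 1)")
    n_prune = int(len(scale_factors) * pruning_ratio)
    # Indices ordered from least to most important.
    ranked = sorted(range(len(scale_factors)),
                    key=lambda i: abs(scale_factors[i]))
    pruned = set(ranked[:n_prune])
    return [i for i in range(len(scale_factors)) if i not in pruned]


# Hypothetical scale factors for an 8-channel layer.
factors = [0.90, 0.02, 0.45, 0.01, 0.70, 0.30, 0.05, 0.60]
print(select_channels(factors, 0.25))  # removes the 2 weakest channels
print(select_channels(factors, 0.50))  # removes the 4 weakest channels
```

A higher ratio removes channels with progressively larger scale factors, which is one way to see why aggressive pruning can start to discard useful features.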

Furthermore, to validate the choice of triplet attention, several prevalent attention mechanisms commonly used in computer vision were selected for comparative experiments, including SE, CBAM, CA, Efficient Channel Attention (ECA) [41], and Shuffle Attention (SA) [42]. Table 5 presents the experimental results.

As Table 5 shows, the model embedded with TA outperforms the other prevalent attention modules. The reasons are as follows. Classical channel attention mechanisms such as SE and ECA only model the interaction among channels without considering spatial information, leading to inadequate feature extraction and suboptimal performance. Moreover, although CBAM and SA compute both channel and spatial attention, they do not fully exploit the interaction between channel and spatial information. In contrast, TA leverages inter-dimensional information, leading to improved feature representation and enhanced performance.

4.4. Model Comparisons

For the windshield detection scenario, single-stage detection models such as YOLOv3 [43], YOLOX [44], and YOLOv5 were trained under an identical environment and dataset. The comparison between these models and the GM-YOLOv7 network focuses on mAP@0.5:0.95, Params, and GFLOPs. Table 6 presents the comparison results.

As Table 6 shows, the proposed lightweight network outperforms YOLOv3-tiny, YOLOX-s, and YOLOv5-s in mAP@0.5:0.95 by 22.4%, 4.6%, and 4.2%, respectively. This indicates that the GM-YOLOv7 network effectively exploits the feature extraction of the improved ELAN at minimal computation overhead. The proposed network achieves the best mAP@0.5:0.95 while having the lowest Params and GFLOPs. Compared with the widely used YOLOv5-s, GM-YOLOv7 improves performance while decreasing Params and GFLOPs by 31% and 38%, respectively, making the algorithm more suitable for deployment on mobile devices. Overall, GM-YOLOv7 exhibits clear advantages over other popular networks in detection precision, Params, and GFLOPs, making it a reliable and effective choice for windshield detection tasks.

For the seatbelt detection scenario, the proposed network was compared with multiple CNNs, including AlexNet, DenseNet, EfficientNet [45], ResNeXt [46], and Wide ResNet [47]. Table 7 presents the results of these experiments.

As Table 7 shows, RST-Net is more efficient than several prominent algorithms, which supports its use in practical applications. Although the Recall of RST-Net was slightly lower than that of Wide ResNet, its Params and GFLOPs were only 0.19 times and 0.2 times those of Wide ResNet, respectively. Additionally, RST-Net achieves an F1 score that is 10.72% higher than AlexNet, 1.48% higher than ResNeXt, and 1.04% higher than Wide ResNet, while its Params and GFLOPs are considerably smaller than those of these models. Taken together, the major performance indexes show that RST-Net performs favorably, especially in terms of Params, further substantiating the efficiency of the improvements proposed in this study.

Additionally, to verify the merit of RST-Net for seatbelt detection, Gradient-weighted Class Activation Mapping (Grad-CAM) [48] was employed to visualize the models compared in this section. Figure 10 displays the visualization results. The first three rows show a driver wearing a seatbelt, and the last three rows show a driver not wearing one. RST-Net concentrates more attention on the salient features of the seatbelt, even in dark and low-contrast images. Moreover, RST-Net not only determines whether the driver is wearing a seatbelt by focusing on the regions where seatbelt features may be present, but also suppresses irrelevant areas in the image background, thereby improving detection accuracy. In contrast, the other models either perform poorly or lack generalization.
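The core Grad-CAM computation behind such heatmaps can be sketched in a few lines: each feature map is weighted by the spatial average of the gradient of the class score with respect to it, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch below uses plain nested lists; the activation and gradient values are hypothetical, and the final scaling to [0, 1] is a visualization convention assumed here.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap.

    activations, gradients: K feature maps, each an H x W nested list, where
    gradients holds d(class score) / d(activation) for the same layer.
    Returns an H x W heatmap scaled to [0, 1].
    """
    k = len(activations)
    h = len(activations[0])
    w = len(activations[0][0])
    # Channel weights: global average of the gradients over each map.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum over channels followed by ReLU.
    cam = [[max(0.0, sum(weights[c] * activations[c][y][x] for c in range(k)))
            for x in range(w)]
           for y in range(h)]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Hypothetical 2-channel, 2x2 feature maps and gradients.
acts = [[[1.0, 0.0], [0.0, 2.0]],
        [[0.0, 3.0], [1.0, 0.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]],
         [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))
```

In this toy case the second channel has a negative weight, so the locations it activates are suppressed in the heatmap.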