Featured Application

We present the ViPRAS system (Virtual Planning for Robotic-Assisted Surgery). It is the result of the project of the same name, in which we combine the advantages of collaborative virtual spaces with robot-assisted surgery. The system is designed to facilitate the surgical planning process through a virtual recreation of both the patient and the surgical robot. We believe this approach could improve surgical planning, allowing for better surgeries and thus better patient recovery.

Abstract

Robotic Assisted Surgery (RAS) represents an important step forward in the field of minimally invasive surgery. However, the learning curve of RAS is steep, and surgical robot setups should be planned systematically to leverage the features of RAS. For this reason, in this paper we present the design and implementation of a mixed-platform collaborative system, creating an interactive virtual shared environment that simulates RAS during the surgery planning phase. The proposed system allows one or more experts to jointly plan the different phases of an RAS-based surgical procedure, while integrating different levels of immersion to enhance computer-assisted training. We tested our system with a total of four domain experts. Our results show that the experts found the system excellent in terms of usability and useful to prepare and discuss surgical planning with RAS.

1. Introduction

In recent years, the number of health-related computer applications has increased due to the improved technological capabilities of smart devices and the integration of highly interactive paradigms, such as virtual and extended realities (VR, XR). While VR immerses the user in a purely virtual environment, XR is an umbrella term that covers different interaction paradigms that combine the real and virtual worlds [1]. In collaborative virtual shared spaces, the XR paradigm has the advantage of allowing direct vision of other people, as well as of other elements that can be of interest while collaborating, such as printed documents. However, the misalignment of the real and virtual worlds in XR might cause discomfort and/or distract focus from the virtual objects, which is not the case in VR. Other related interaction paradigms are Augmented Reality (AR), which combines the real and virtual worlds taking into consideration the spatial alignment between the two, and Mixed Reality (MR), which is a continuum between a purely virtual and a purely real environment [2].

In this research, we focus on integrating a collaborative virtual environment in a mixed-platform fashion [3] to help surgeons perform the planning phase of Robotic Assisted Surgery (RAS). The learning curve of RAS is steep [4], and some phases, including surgery planning, require specific training to leverage the features of these expensive devices [5]. Thus, it is of the utmost importance to help surgeons overcome these difficulties. Such a mixed-platform architecture, which includes VR and XR interaction paradigms, can offer new points of view to observe virtual information representing the surgery process: instead of being seen on a monitor, it can be displayed in the space around the user. In our system, the volumetric information (which can be derived, for instance, from a Computed Tomography scan) can be observed as if the patients themselves were lying on the surgery table, and users can hide irrelevant elements, highlight important ones, or take measurements.

In this sense, we aim to improve the planning process of RAS by providing tools that help overcome the most difficult aspects of this type of surgery. Three key aspects have been identified: the positioning of the surgical robot (the trocars that are introduced into the patient and the robot arms), access to the first-person perspective of the surgeon, and the possibility of performing collaborative, even remote, planning with other specialists. The placement of the robot and of the incisions through which the robot arms are inserted is key to the correct performance of the surgical process. To that end, we have designed and implemented a mixed-platform collaborative system, creating an interactive virtual shared environment that represents RAS during the planning phase. This virtual operating room allows one or more experts to jointly plan the different phases of the surgical procedure. Therefore, the main contribution of this work is not only a new virtual planning system for RAS, but one in which users can design the placement of the robotic arms and check whether that placement is adequate from the point of view they will have at the time of surgery, all in a collaborative way. It is important to emphasize that our work is not aimed at simulating the surgical procedure. Other works focus on the 3D reconstruction of internal soft tissues [6] in order to simulate surgery or aid surgeons during a surgical intervention; the focus of our work, however, is to aid surgeons in the planning phase of RAS.

The paper is structured as follows: firstly, we review the state of the art in similar technologies, focusing on those aspects related to the current work. In the section dedicated to the materials and methods, we detail the design and the technical aspects of our implementation. The next section reports on the method of validating our system. Finally, we show the results, the discussion, and the conclusions.

2. Related Work

Training in robotic surgery entails two fundamental aspects: skill and knowledge [7]. While skill training is usually achieved using simulators, which allow the surgeon’s skills to be polished without putting a patient at risk, knowledge training usually involves recording RAS procedures and then displaying them as teaching elements. In both cases, the use of virtual 3D models, the interaction paradigms of mixed, virtual, extended, and augmented reality technologies, or even the use of printed 3D models, can play a relevant role [8]. In this regard, Virtual Surgery Planning (VSP) can be defined as the use of virtual information to improve the surgical planning process. VSP has been explored before in the academic literature, as in [9], where VSP was used to aid robot-assisted laparoscopic partial nephrectomy, or in [10], where VSP was used in mandibular reconstruction. Both works rely solely on virtual 3D models and do not exploit complex interaction mechanisms like those we propose. However, both works conclude that VSP is viable and can improve the ability of surgeons to plan surgical procedures. Some other academic works have explored the use of virtual models for RAS planning, such as [11], where IRIS™ is used and assessed. This proprietary system enables physicians to view a 3D model of the patient anatomy on which they will be operating and helps devise a plan for the procedure. The authors conclude that virtual planning can predict most of the operative findings in robot-assisted nephrectomy. In contrast to what we propose, none of these three works is collaborative.

As reported in the literature, the use of VSP can help decide the type of surgical approach [12], understand the depth of a tumour [12], identify the best positions and orientations for the trocars [13], increase the surgeon’s confidence [8], or even predict the outcome of the surgical intervention [11]. Moreover, the possibility of collaborative or even remote planning can significantly increase its benefits, optimizing the surgical process and allowing better interdisciplinary communication [14]. However, most of the works published in the academic literature focus on non-collaborative applications. There are, nevertheless, some exceptions. For instance, in [15], a collaborative VR environment to assist liver surgeons in tumour surgery planning is presented. The system allows surgeons to define and adjust virtual resections on patient-specific organ 3D surfaces and 2D image slices, while changes in both modalities are synchronized. Additionally, the system includes a real-time risk map visualisation that displays safety margins around tumours.

Another work in which collaborative planning is proposed is [16]. The authors use a collaborative face-to-face system based on MR devices in which various surgeons can jointly inspect a 3D model of the target tissues in cases of laparoscopic liver and heart surgery. This application includes tools for model transformation, marker positioning, 2D medical image viewing, and section planes. However, the collaboration is in situ and symmetrical, unlike ours, which can be either symmetrical or asymmetrical and can take place either in situ or remotely.

There are other works that apply collaborative MR to the surgical field. For instance, the ARTEMIS system proposed in [17] enables skilled surgeons and novices to work together in the same virtual space, while approaching the problem of remote collaboration through a hybrid interface: on the one hand, expert surgeons in remote sites use VR to access a 3D reconstruction of a patient’s body and instruct novice surgeons on complex procedures; on the other hand, novice surgeons in the field focus on saving the patient’s life while being guided through an AR interface. This work is interesting because it combines AR and VR, but it focuses on surgical telementoring and not on surgical planning for RAS, as we propose. Similarly, in [18], the benefits of an AR-based telementoring system on the coaching and confidence of medical personnel are explored. Among other findings, the authors discovered that the system allowed leg fasciotomies to be performed with fewer errors. Other works involving AR collaborative work are discussed in [19]. In [7], a method is proposed for performing virtual annotations on RAS videos, so that certain elements of the surgical process are tracked and highlighted during the teaching sessions.

In a similar way to the works in [15,16,17,18], our system allows collaboration in a virtual world, but it additionally supports different interaction paradigms (including high, medium and low levels of immersion [20]) and collaboration both in situ and remotely. Moreover, very few tools are aimed at surgery planning. In this sense, it is worth mentioning that the work described in [16] does aim at surgery planning with the use of an MR environment. The study includes the results of a qualitative user evaluation and clinical use-cases in laparoscopic liver surgery and heart surgery. The authors report a very high general acceptance of their system. Similarly, our work focuses on surgery planning, but for RAS (unlike [16], which focuses on laparoscopic surgery), where training in both knowledge and skills is of interest, and it also considers a mixed-platform approach. Thus, we bring new insights into collaborative RAS planning mediated with different levels of immersion because, to the best of our knowledge, this is the first asymmetric collaborative mixed-reality system that enables surgeons to perform virtual surgery planning of robot-assisted surgery.

3. Materials and Methods

This section describes the proposed system, focusing on the architecture, the technology used, the interaction paradigm of each platform, and the visualisation tools and functionalities available.

3.1. System Description

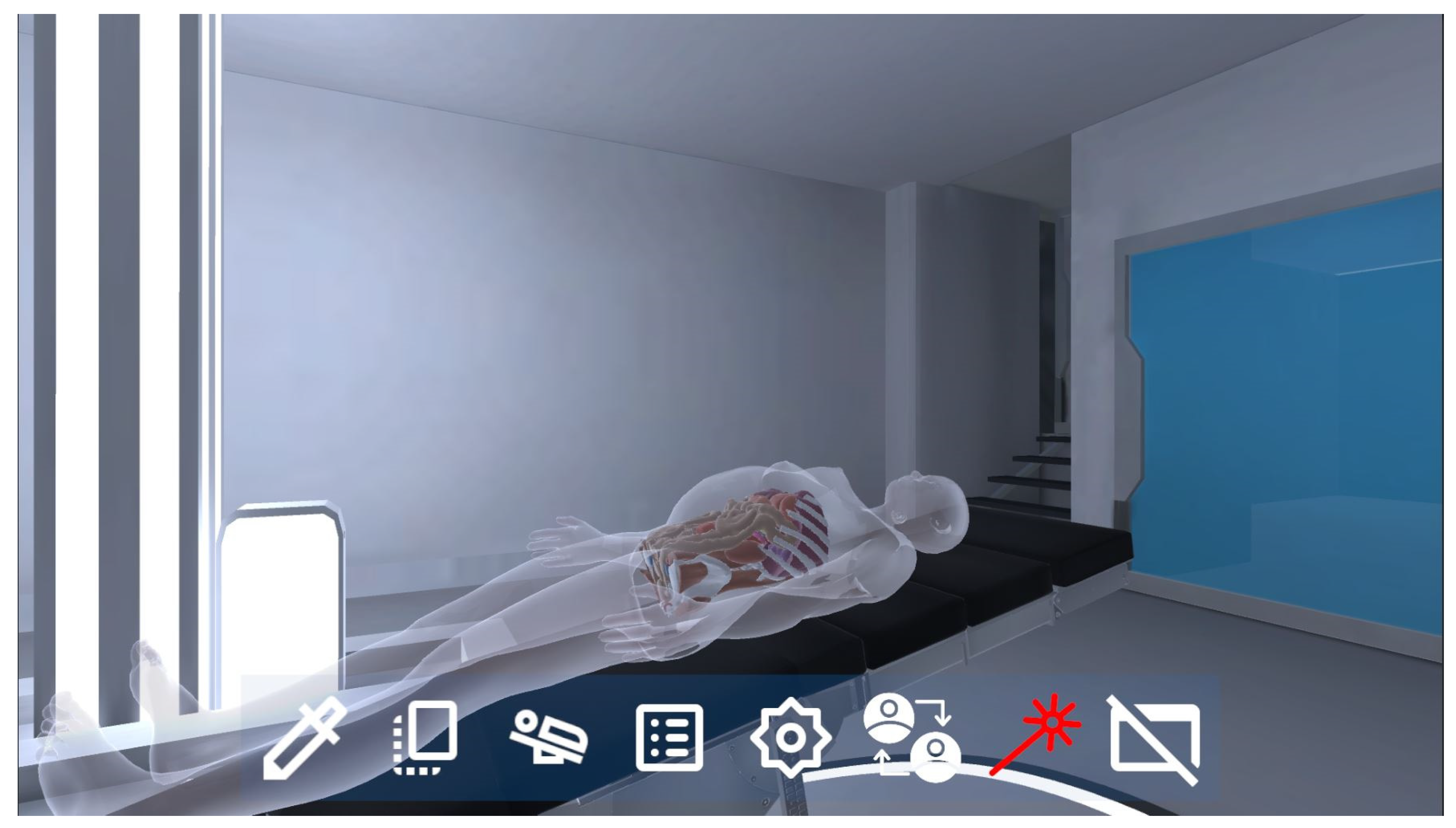

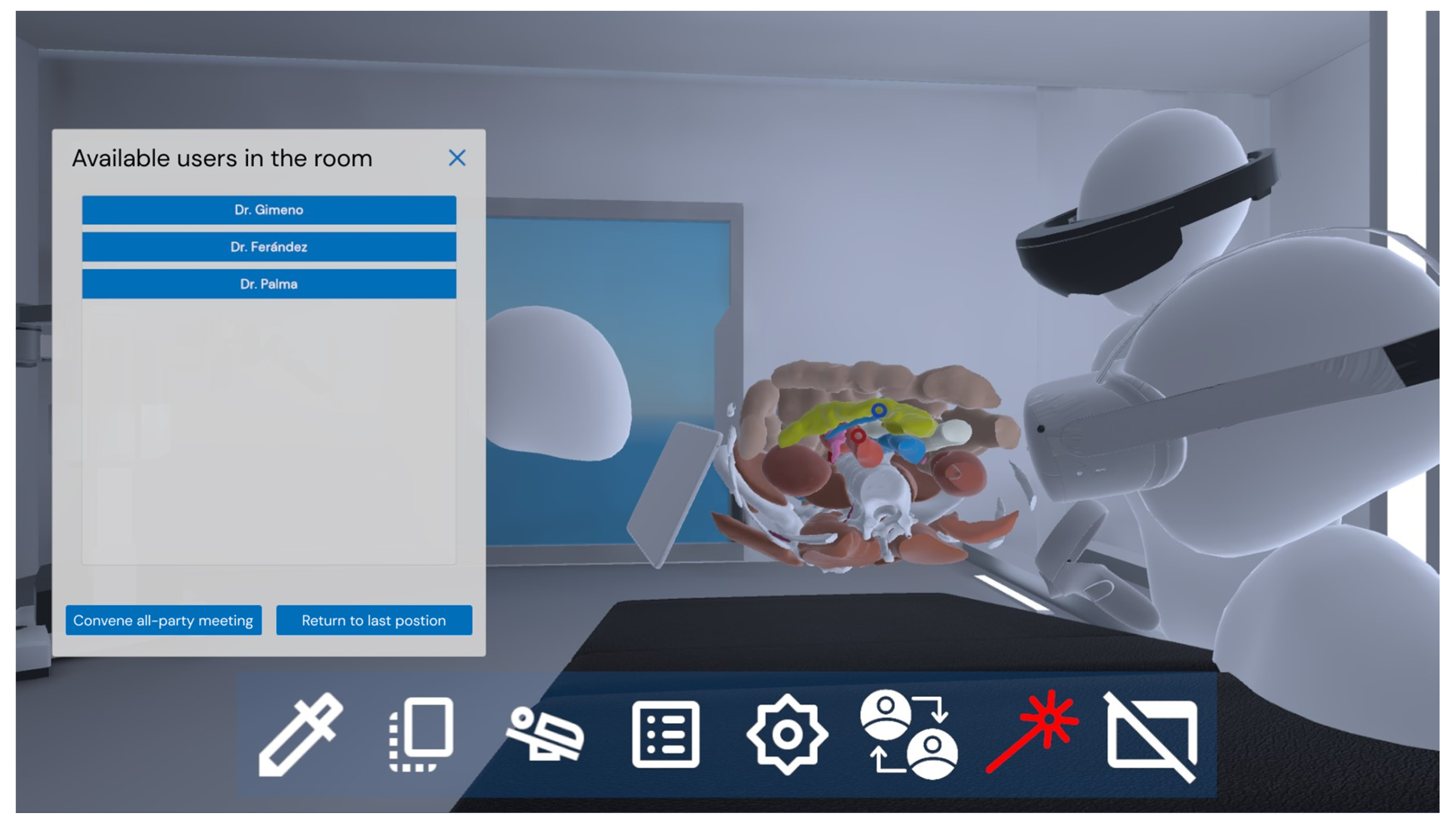

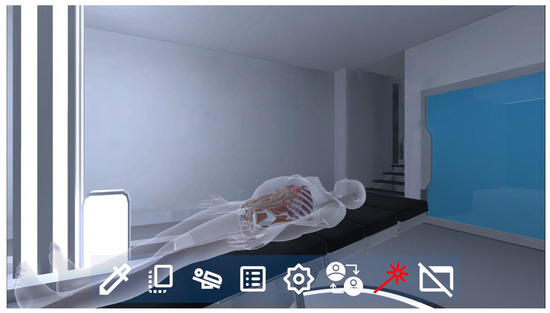

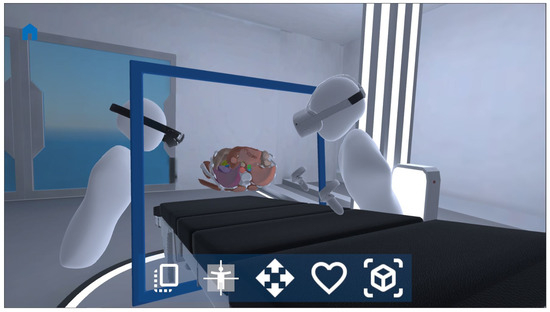

All of the components of the proposed virtual RAS planner are aimed at performing the surgical planning process, which is recreated by means of a virtual operating room. The virtual operating room includes an operating table and a 3D model representing the patient. Our work is focused on RAS-type surgeries, as it includes tools capable of simulating the placement of a surgical robot on a virtual patient represented by a segmented 3D model. To create a versatile system that can adjust to each clinical case, segmented 3D models generated from medical images can be imported. For this demonstration, a medical CT image study of the abdominal area from The Cancer Imaging Archive (TCIA) open repository was used [21]. The 3D model of this case was segmented using the open-source software 3D Slicer [22] and the automatic segmentation tool TotalSegmentator [23], which can segment more than 100 different tissues in the human body from CT images. However, the ViPRAS system can also accept models from other software, as long as the 3D model meshes are in STL or OBJ format, both widely known 3D file formats. Figure 1 shows a snapshot of the virtual RAS planner. It is important to highlight that our system can show a generic scenario that can be used as a teaching resource, but it can also represent the real clinical case of a particular person who is about to be operated on. This way, the surgeons have customised 3D models of the patient and are able to study the case and plan the surgical intervention accordingly. This is of the utmost importance for the success of the surgical process.
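The text states that ViPRAS accepts segment meshes in STL or OBJ format. As an illustration only, the following Python sketch shows how an importer might check the file extension and read vertices and faces from a Wavefront OBJ file. The function names are hypothetical, and the parser deliberately ignores materials, normals, texture coordinates, and negative (relative) indices, so it is not the system's actual loader.

```python
from pathlib import Path

# Mesh formats the text says the system accepts.
ACCEPTED_FORMATS = {".stl", ".obj"}


def is_supported_mesh(path: str) -> bool:
    """Return True if the file extension is STL or OBJ (case-insensitive)."""
    return Path(path).suffix.lower() in ACCEPTED_FORMATS


def parse_obj(text: str):
    """Minimal OBJ reader: returns (vertices, faces) with 0-based face indices.

    Only 'v' (vertex) and 'f' (face) records are read; all other records,
    including comments, are skipped.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            # Face entries may look like "3", "3/1" or "3/1/2"; keep the vertex index.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces
```

For example, `parse_obj(Path("liver.obj").read_text())` would return the vertex and face lists of one segmented organ (the file name is hypothetical).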

Figure 1.

The proposed virtual operation room for RAS planning.

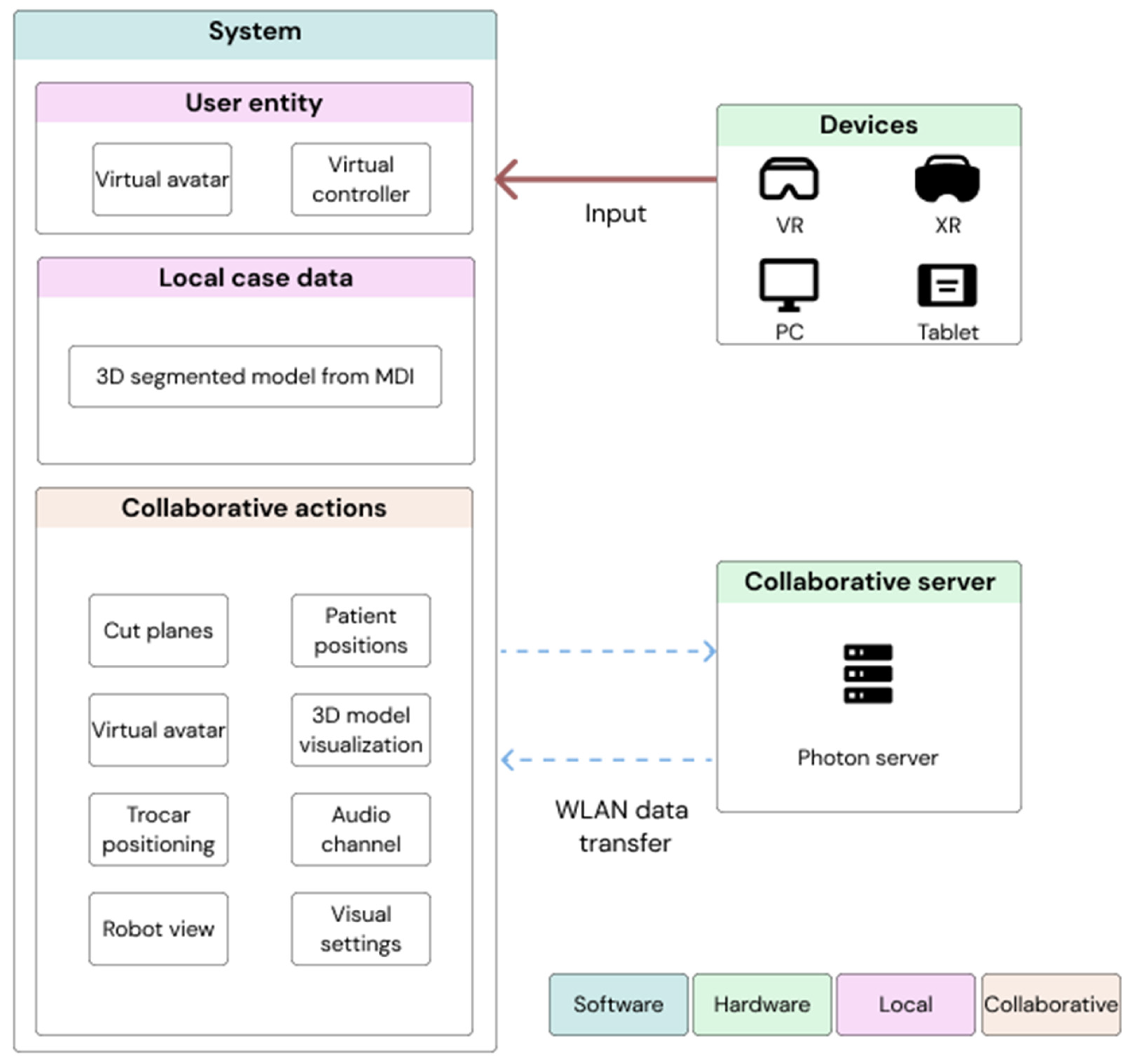

3.2. Architecture

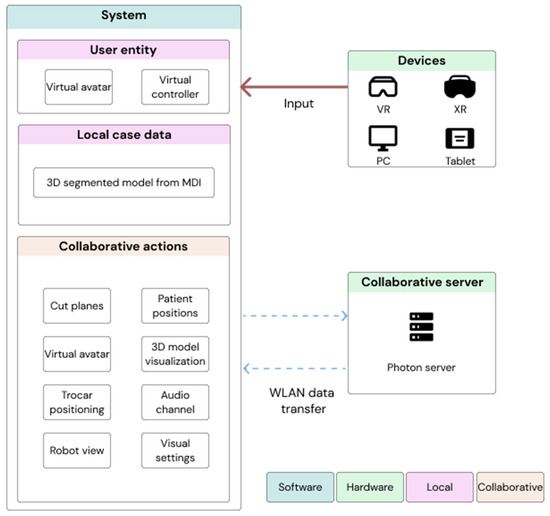

Our collaborative system is designed to be simultaneously accessible from multiple types of computing platforms: PC, tablet, VR, and XR. VR devices, such as Meta Quest 2 [24] or HTC Vive [25], allow an immersive experience but prevent interaction with real elements. XR devices, such as Microsoft HoloLens 2 [26], also allow navigating through the virtual world, but the experience is less immersive because the real world remains visible.

All devices must maintain a stable connection to the Internet during the use of the application. In the case of VR and XR devices, the OpenXR standard is used to increase the compatibility of the system with the largest number of devices. Unity3D [27] was chosen for development and visualisation.

Regarding the communication system, Photon Engine [28] technology is used to create the shared space. The system is based on serializing the states of the objects of the virtual world, which are updated only when a change has occurred. This architecture reduces the communication and data processing load, favouring a high frame rate and low latency (crucial aspects in VR and MR devices). Figure 2 summarizes the architecture. As can be seen, the system includes local storage where the customised segmented 3D model is stored alongside all the annotations that surgeons may make. This local case data storage is also used to save information about the planning process generated by the surgeons. The collaborative experience is managed through a Photon On-Premises server application. The server requires a standard PC with Windows 8 (or higher) and a reliable Internet connection. This structure ensures that clinical data, which may contain sensitive patient information, never leaves our network. The server is configured for load balancing with a maximum of 10 attendees per room. If the maximum number is reached, the user is directed to an anteroom, where they can wait until a place becomes available in the main room or create a duplicate of the main room in which the case can be analysed in parallel. During remote collaboration sessions, the same server activates and manages the voice channel that broadcasts conversations between users. To ensure correct transmission of voice messages, the system can filter out white noise and transmit only the speech segments, which also helps balance the overall load on the server. Finally, since the system can be accessed from different devices, each user has a different avatar and controller system. The collaboration is asymmetric because not all the devices have the same interaction capabilities, as explained in the next section.
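The idea of serializing object states only when they change can be illustrated with a small change tracker. This is a minimal sketch under stated assumptions: `SharedObjectState` and `ChangeTracker` are hypothetical names, the state fields are illustrative, and JSON stands in for the transport format. It is not Photon's API, which has its own serialization callbacks.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class SharedObjectState:
    """Hypothetical replicated state of one object in the virtual operating room."""
    object_id: str
    position: tuple = (0.0, 0.0, 0.0)
    rotation: tuple = (0.0, 0.0, 0.0, 1.0)  # quaternion (x, y, z, w)
    visual_mode: str = "solid"


class ChangeTracker:
    """Serializes only the objects whose state differs from the last sent state."""

    def __init__(self):
        self._last_sent = {}  # object_id -> last state dict that was sent

    def collect_updates(self, objects):
        updates = []
        for obj in objects:
            state = asdict(obj)  # a fresh copy, so later edits to obj do not alter it
            if self._last_sent.get(obj.object_id) != state:
                updates.append(state)
                self._last_sent[obj.object_id] = state
        # None means "nothing changed, send nothing this tick".
        return json.dumps(updates) if updates else None
```

Calling `collect_updates` once per network tick sends the full state the first time an object is seen, and afterwards only when one of its fields changes. This is what keeps bandwidth and processing low when most of the scene is static.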

Figure 2.

System architecture.

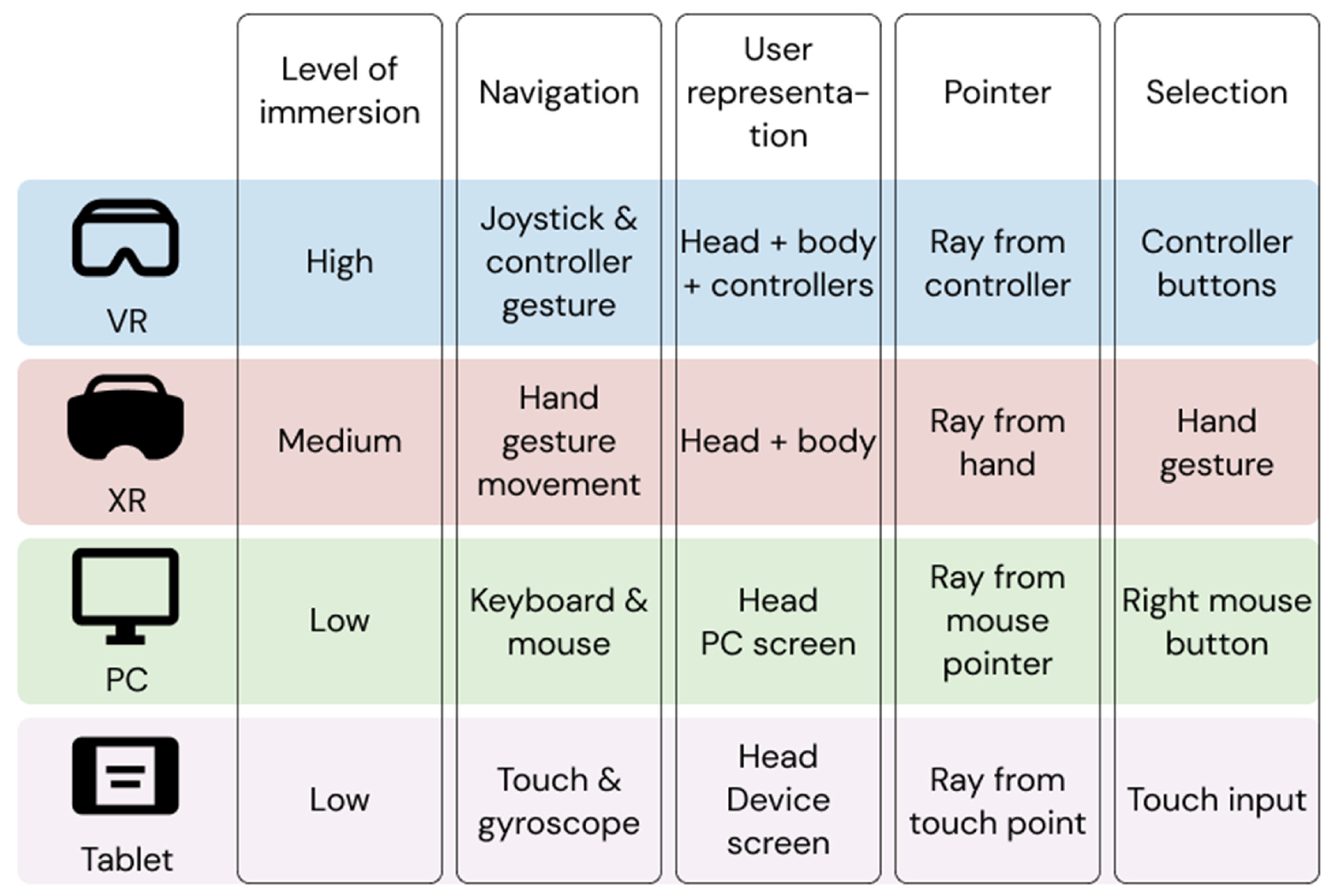
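The room policy described for the server (at most 10 attendees per room, with an anteroom for waiting users and the option of a duplicate room) can be sketched as admission logic. The class and method names below are hypothetical, and the rule of admitting the longest-waiting user first is an assumption made for illustration rather than a documented behaviour of the system.

```python
MAX_ATTENDEES = 10  # per-room limit stated in the text


class Room:
    def __init__(self, name, capacity=MAX_ATTENDEES):
        self.name = name
        self.capacity = capacity
        self.attendees = []

    def is_full(self):
        return len(self.attendees) >= self.capacity


class Lobby:
    """Hypothetical admission logic: main room, anteroom queue, duplicate rooms."""

    def __init__(self, main_room):
        self.main = main_room
        self.anteroom = []    # users waiting for a free place in the main room
        self.duplicates = []  # parallel copies of the same clinical case

    def join(self, user, wants_duplicate=False):
        if not self.main.is_full():
            self.main.attendees.append(user)
            return self.main.name
        if wants_duplicate:
            # Reuse a duplicate with free places, otherwise create a new one.
            room = next((r for r in self.duplicates if not r.is_full()), None)
            if room is None:
                room = Room(f"{self.main.name}-copy{len(self.duplicates) + 1}")
                self.duplicates.append(room)
            room.attendees.append(user)
            return room.name
        self.anteroom.append(user)
        return "anteroom"

    def leave_main(self, user):
        self.main.attendees.remove(user)
        # Admit the longest-waiting user, if any.
        if self.anteroom:
            self.main.attendees.append(self.anteroom.pop(0))
```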

3.3. Interaction

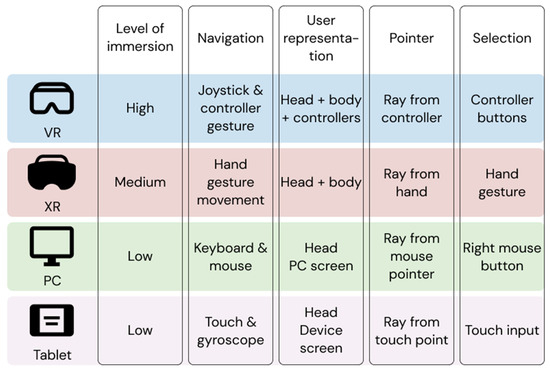

Our virtual RAS planning software presents a different interaction system for each of the platforms on which it is available, in order to get the most out of each of them. The goal of all these interaction systems is to provide an easy-to-use application that imposes a low cognitive load on the user. As can be seen in Figure 3, the PC platform employs keyboard and mouse interaction, while tablets use their device-specific input systems to avoid dependence on additional equipment. On immersive VR devices, the controllers of the VR platform are used. These allow the user to interact with the elements of the scene with high precision, because they are tracked and can cast virtual beams. In addition, the controllers have a set of buttons that can be assigned to quick actions of the currently activated tool or to generic system actions, such as resetting the position of the tool to the current position. In the case of XR, hand tracking and gesture detection are used to interact with the virtual elements. This may require more time for novice users to adapt to the device; however, users are only required to wear an HMD on their head, leaving their hands free in case they need to use them for any other task. This is important because XR devices render virtual elements on top of a view of the real world, so the specialist is free to consult and interact with other data without it having to be introduced into the system beforehand.
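Selecting an element with a controller's virtual beam is essentially ray casting. The sketch below is a simplified illustration and relies on one assumption: each anatomical element is approximated by a bounding sphere, whereas an engine such as Unity would normally test against colliders or mesh geometry. The names `ray_sphere_distance` and `pick` are hypothetical. The beam picks the closest element it hits.

```python
import math


def ray_sphere_distance(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere, or None if it is missed."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None
    sqrt_disc = math.sqrt(disc)
    t = -b - sqrt_disc
    if t < 0:
        t = -b + sqrt_disc  # ray origin is inside the sphere
    return t if t >= 0 else None


def pick(origin, direction, elements):
    """Return the name of the nearest element hit by the beam, or None.

    elements: iterable of (name, center, radius) bounding spheres (an assumption).
    """
    length = math.sqrt(sum(d * d for d in direction))
    direction = [d / length for d in direction]
    best = None
    for name, center, radius in elements:
        t = ray_sphere_distance(origin, direction, center, radius)
        if t is not None and (best is None or t < best[1]):
            best = (name, t)
    return best[0] if best else None
```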

Figure 3.

Mixed-platform feature list of the virtual RAS planner.

Regardless of the platform used to access the collaborative experience, all users will have a pointer system which enables them to accurately transmit information of interest to the other attendees. This tool can be activated or deactivated according to the user’s needs at any given moment, and its control will be adjusted to the capabilities of the platform used. Each user will be assigned a representative colour in the initial connection phase so that each of the pointers can be clearly distinguished in situations where more than one user is using this interaction tool.
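The text only states that each user receives a representative colour at connection time. One common way to make successive colours easy to tell apart is to step the hue by the golden-ratio conjugate. The following sketch uses that technique as an assumption, not as the system's documented method. `user_colour` is a hypothetical name, and `join_index` is the order in which users connect.

```python
import colorsys

GOLDEN_RATIO_CONJUGATE = 0.618033988749895


def user_colour(join_index, saturation=0.75, value=0.95):
    """Return a hex colour for the n-th connected user.

    Stepping the hue by the golden-ratio conjugate spreads successive hues
    around the colour wheel, so users who join one after another get
    clearly different pointer colours.
    """
    hue = (join_index * GOLDEN_RATIO_CONJUGATE) % 1.0
    r, g, b = colorsys.hsv_to_rgb(hue, saturation, value)
    return "#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255))
```

With the room limit of 10 attendees mentioned earlier, indices 0 to 9 all produce distinct colours.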

The user is represented in the operating room by an avatar, whose representation depends on the level of immersion offered by the platform used by each user. In addition, this avatar allows the rest of the users to visually perceive data such as the user’s name, their representative colour, or their position and orientation in the virtual space. Thanks to the tracking systems included in the high and medium immersion devices, the avatar faithfully represents the movements of both the torso and the arms, allowing the rest of the users to see gestures or direct interactions with the environment. However, on low immersion devices, avatar positioning is governed by the transformation of the user’s camera, prioritising the user’s point of view as the information to be shared visually. Figure 3 summarizes the interaction mechanisms that each of the devices can provide. Regardless of the platform used, each user can hear the 3D sound of other attendees through the shared virtual space during remote sessions. Users can locate the speaking avatar by its voice alone, without having to look away from the task at hand. This technique enhances the feeling of immersion for users.
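To illustrate how 3D voice lets users locate a speaking avatar, the following sketch computes left and right gains from the listener's and speaker's positions. It is a generic model chosen for illustration, not the audio pipeline of Unity or Photon: it assumes inverse-distance attenuation clamped at a reference distance and equal-power stereo panning. It also assumes a y-up, left-handed frame (as in Unity) and a non-zero horizontal forward vector. The function name and parameters are hypothetical.

```python
import math


def spatial_gains(listener_pos, listener_forward_xz, source_pos, ref_distance=1.0):
    """Return (left_gain, right_gain) for a voice source.

    listener_forward_xz is the listener's horizontal forward vector (x, z).
    """
    dx, dy, dz = (s - l for s, l in zip(source_pos, listener_pos))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    attenuation = ref_distance / max(distance, ref_distance)

    fx, fz = listener_forward_xz
    rx, rz = fz, -fx  # listener's right-hand direction in the horizontal plane
    horizontal = math.hypot(dx, dz)
    if horizontal == 0:
        pan = 0.0  # source directly above/below or at the listener: centre it
    else:
        pan = (dx * rx + dz * rz) / (horizontal * math.hypot(rx, rz))
        pan = max(-1.0, min(1.0, pan))  # guard against rounding

    angle = (pan + 1.0) * math.pi / 4.0  # pan in [-1, 1] -> angle in [0, pi/2]
    return math.cos(angle) * attenuation, math.sin(angle) * attenuation
```

A speaker straight ahead is heard equally in both ears. A speaker to the right at twice the reference distance is heard only in the right ear, at half the gain.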

It is important to note that the collaboration is asymmetric, providing a control mode that is tailored to each type of compatible device. All users can use all tools, regardless of the platform they are using, although with some devices (particularly those that can offer a high level of immersion; see Figure 3) the different capabilities offered by the ViPRAS system are easier to use. The control of the different tools is adapted to the native interaction of the device, making the behaviour more natural and taking advantage of the benefits of each platform. The authors of [29] compare symmetrical and asymmetrical collaborative systems for data analysis between PC and VR devices. Their results demonstrate the importance of adapting the UI/UX design to each platform and its impact on reducing the mental workload required to complete tasks. Therefore, it is crucial to develop a system that can be easily adapted to various platforms. This approach was followed in the design of the interaction systems and avatars for each platform, as described in the following section.

3.4. Tools for Surgery Planning

In order to carry out the surgical planning collaboratively, several tools have been included in the virtual planning application for customising the visualisation of the elements of the segmented 3D model. Among them is the section planes tool, with which users can freely place different planes to obtain a 2D cross-section view of the patient. This tool makes it possible to replicate the characteristic slice-by-slice visualisation of medical images, using either the anatomical planes (axial, coronal, sagittal) or any custom plane, since the application includes a freely transformable plane that yields slices at any angle and position. On high and medium immersion platforms, users can directly “grab” the geometry of the cutting plane to position it with more realism and precision. Figure 4 shows this tool.
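A cross-section is obtained by intersecting the mesh triangles with the section plane. The following sketch shows the core geometric step. For each triangle, the signed distance of its vertices to the plane is computed, and every edge whose endpoints lie on opposite sides is interpolated to find the cut segment. The normals given for the anatomical planes assume a patient coordinate frame with x along left-right, y along posterior-anterior, and z along inferior-superior. The function names are hypothetical, and degenerate cases (vertices lying exactly on the plane) are not handled carefully.

```python
# Plane normals assuming x: left-right, y: posterior-anterior, z: inferior-superior.
ANATOMICAL_NORMALS = {
    "sagittal": (1.0, 0.0, 0.0),
    "coronal": (0.0, 1.0, 0.0),
    "axial": (0.0, 0.0, 1.0),
}


def signed_distance(p, plane_point, normal):
    """Signed distance of point p to the plane (normal assumed unit length)."""
    return sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, normal))


def slice_triangle(tri, plane_point, normal):
    """Return the two endpoints of the segment where the plane cuts the triangle.

    Returns None if the triangle does not cross the plane. A vertex exactly
    on the plane may yield a zero-length segment.
    """
    d = [signed_distance(v, plane_point, normal) for v in tri]
    points = []
    for i in range(3):
        a, b = tri[i], tri[(i + 1) % 3]
        da, db = d[i], d[(i + 1) % 3]
        if (da > 0) != (db > 0):  # the edge crosses the plane
            t = da / (da - db)     # interpolation factor along the edge
            points.append(tuple(ai + t * (bi - ai) for ai, bi in zip(a, b)))
    return points if len(points) == 2 else None
```

For a free plane, the same functions apply with any unit normal and any point on the plane, which mirrors the freely transformable plane described above.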

Figure 4.

Section planes tool, where users can interact directly with the planes, visualising the internal structures of the sectioned elements.

Each of the elements that make up the segmented 3D model is an independent interactive element, so each user can switch its visual configuration between solid, transparent, and hidden. This feature allows users to freely configure the optimal view at any given moment. To facilitate the visual configuration of all the elements of the model, a set of tools has been created to automate the process. Firstly, a configurator automatically applies the visualisation type selected by the user to all the elements of the model that share the selected tissue type. Secondly, a configurations tool allows the visual state of all the elements of the segmented 3D model to be saved so that it can be applied directly in any subsequent phase.
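The per-element visual modes, the tissue-type configurator, and the saved configurations can be illustrated with a small state object. This is a minimal sketch with hypothetical names (`SegmentedModel`, `set_mode_for_tissue`, and so on). It assumes each element is tagged with a tissue type when the model is imported.

```python
VISUAL_MODES = ("solid", "transparent", "hidden")


class SegmentedModel:
    """Hypothetical per-element visual state for a segmented 3D model."""

    def __init__(self, tissue_of):
        # tissue_of maps element name -> tissue type, e.g. {"portal_vein": "vessel"}
        self.tissue_of = dict(tissue_of)
        self.mode = {name: "solid" for name in self.tissue_of}
        self.saved = {}  # label -> snapshot of all element modes

    def set_mode(self, element, mode):
        if element not in self.mode:
            raise KeyError(f"unknown element: {element}")
        if mode not in VISUAL_MODES:
            raise ValueError(f"unknown visual mode: {mode}")
        self.mode[element] = mode

    def set_mode_for_tissue(self, tissue, mode):
        """Configurator: apply one mode to every element of a tissue type."""
        for name, t in self.tissue_of.items():
            if t == tissue:
                self.set_mode(name, mode)

    def save_configuration(self, label):
        self.saved[label] = dict(self.mode)

    def load_configuration(self, label):
        self.mode = dict(self.saved[label])
```

Storing snapshots as copies keeps a saved configuration unchanged while users keep editing the live view.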

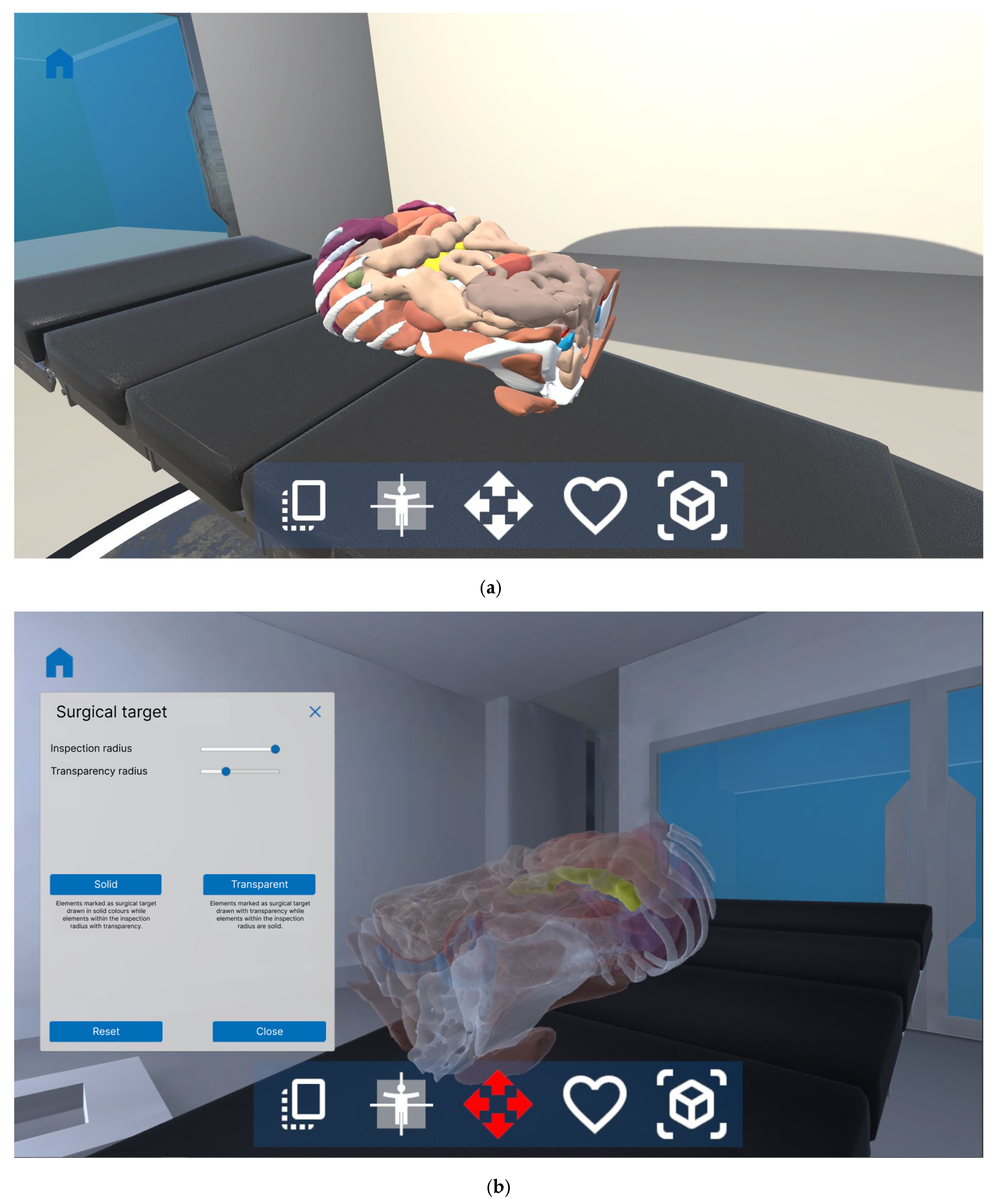

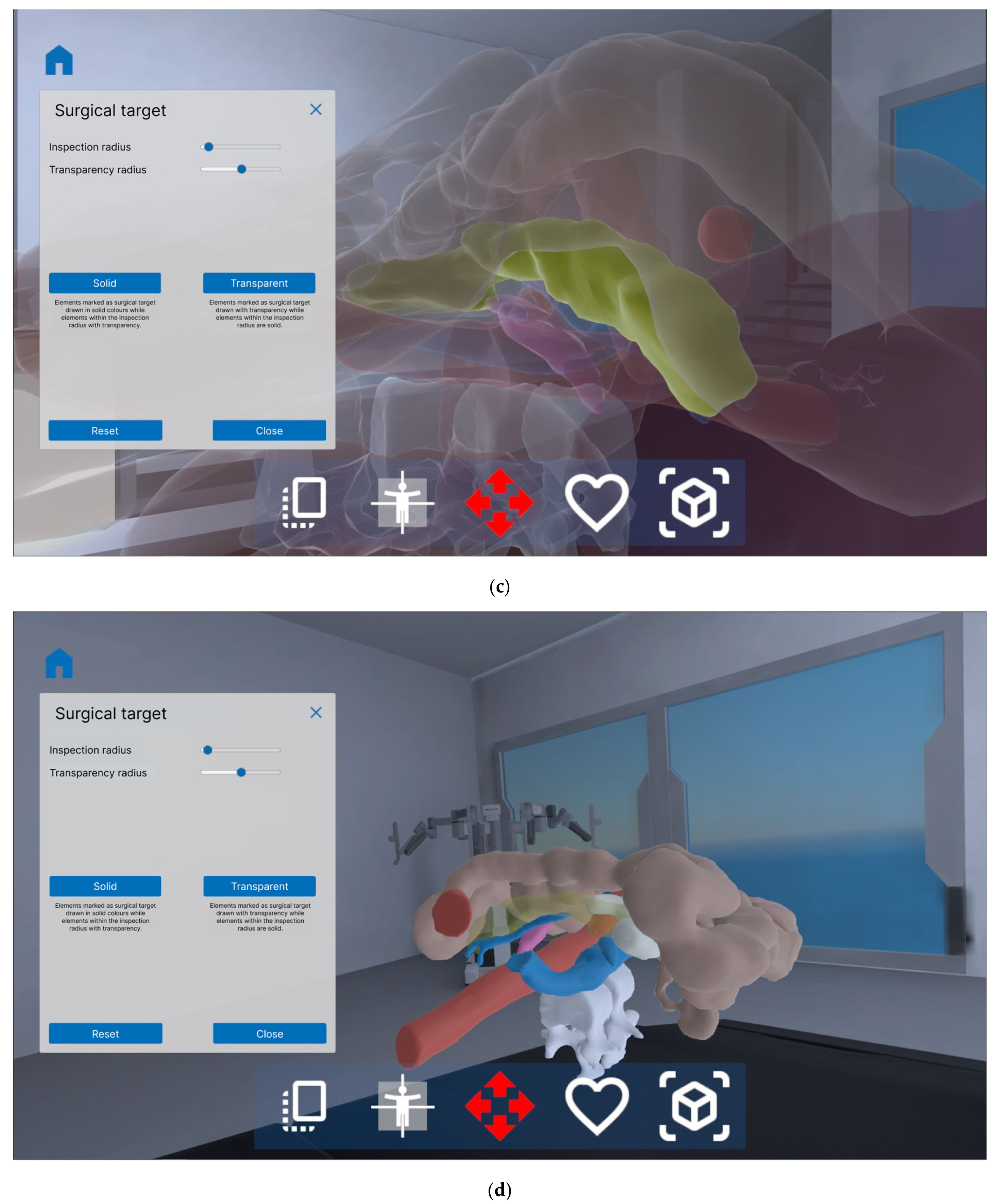

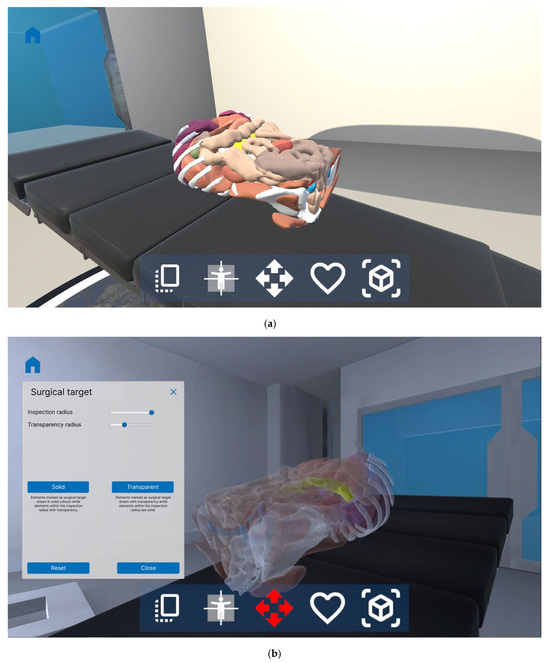

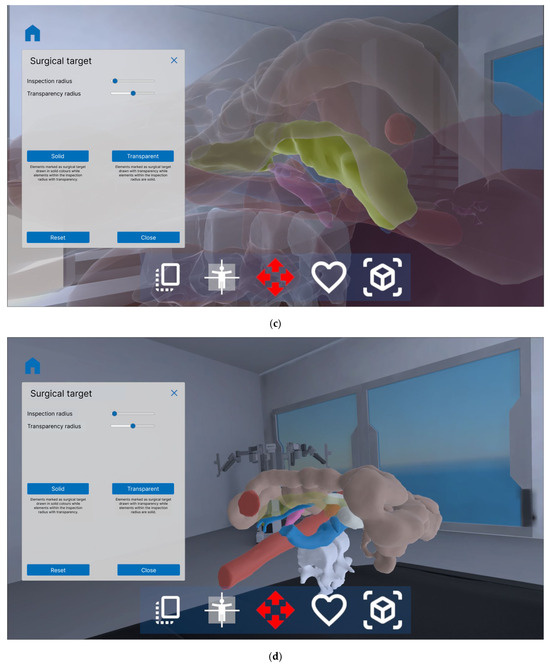

It is also worth mentioning the surgical target tool, which automatically changes the visualisation of all elements within a user-selected radius centred on the element marked as the target. This tool makes it easy to visualise structures and volumes that may be in contact with, or even embedded within, the surgical target, as in the case of tumours with nearby structures. Objects located outside the area indicated by the user are deactivated so as not to obstruct the view of the main area. Two visualisation modes, which swap solid and transparent materials, can be applied to the remaining elements. In solid mode, the element selected as the surgical target is drawn with full opacity, while the rest of the visible elements are rendered with the level of transparency selected by the user. In transparent mode, the inverse configuration is used, with the surgical target being the element displayed with transparency. Figure 5 shows this tool.
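The behaviour of the surgical target tool can be sketched as a function that assigns a visual mode to each element. One simplification is assumed for illustration: an element counts as "within the radius" when its centroid is within that distance of the target's centroid, whereas the actual tool may use a different proximity test. The function name is hypothetical. The user-selected transparency level would be applied by the renderer to the elements marked `"transparent"`.

```python
import math


def apply_surgical_target(centroids, target, radius, mode="solid"):
    """Return a visual mode for each element around a surgical target.

    centroids: dict element name -> (x, y, z) centroid (a simplifying assumption).
    mode: "solid" draws the target opaque and its neighbours transparent;
          "transparent" swaps the two.
    """
    if mode not in ("solid", "transparent"):
        raise ValueError("mode must be 'solid' or 'transparent'")
    neighbour_mode = "transparent" if mode == "solid" else "solid"
    centre = centroids[target]
    result = {}
    for name, c in centroids.items():
        if name == target:
            result[name] = mode
        elif math.dist(c, centre) <= radius:
            result[name] = neighbour_mode
        else:
            result[name] = "hidden"  # outside the radius: deactivated
    return result
```

Reducing `radius`, as in Figure 5c, simply moves more elements into the hidden set.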

Figure 5.

Surgical target tool, where (a) shows the segmented 3D model before applying the tool; (b) the surgical target is activated in solid mode, applying solid visualisation to the selected target element and transparent visualisation to all structures within the radius of action; (c) a reduction of the radius is applied; (d) the surgical target is activated in transparent mode, applying transparent visualisation to the selected element and solid visualisation to all structures within the radius of action, to see if the adjacent structures are inserted in the surgical target.

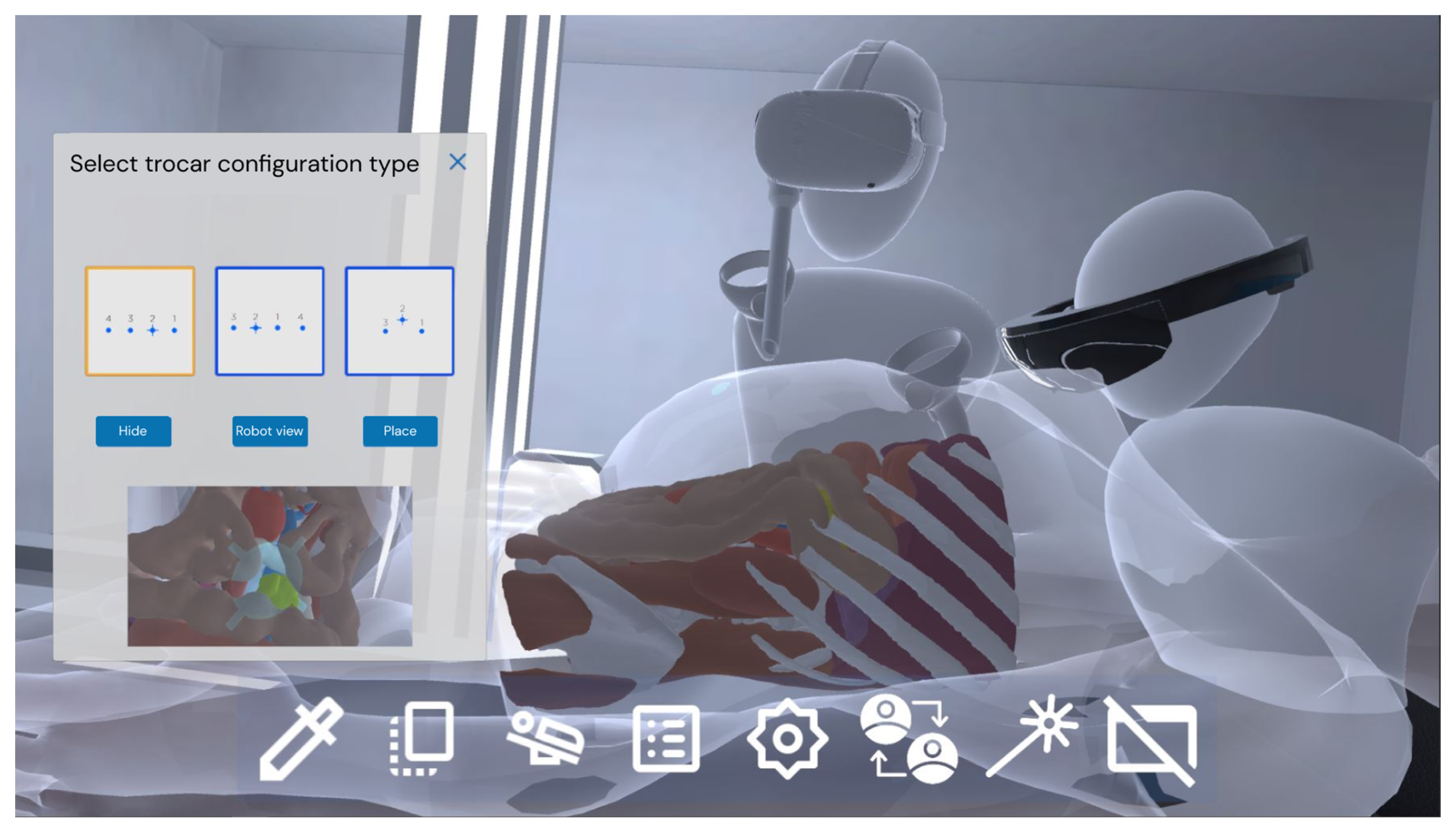

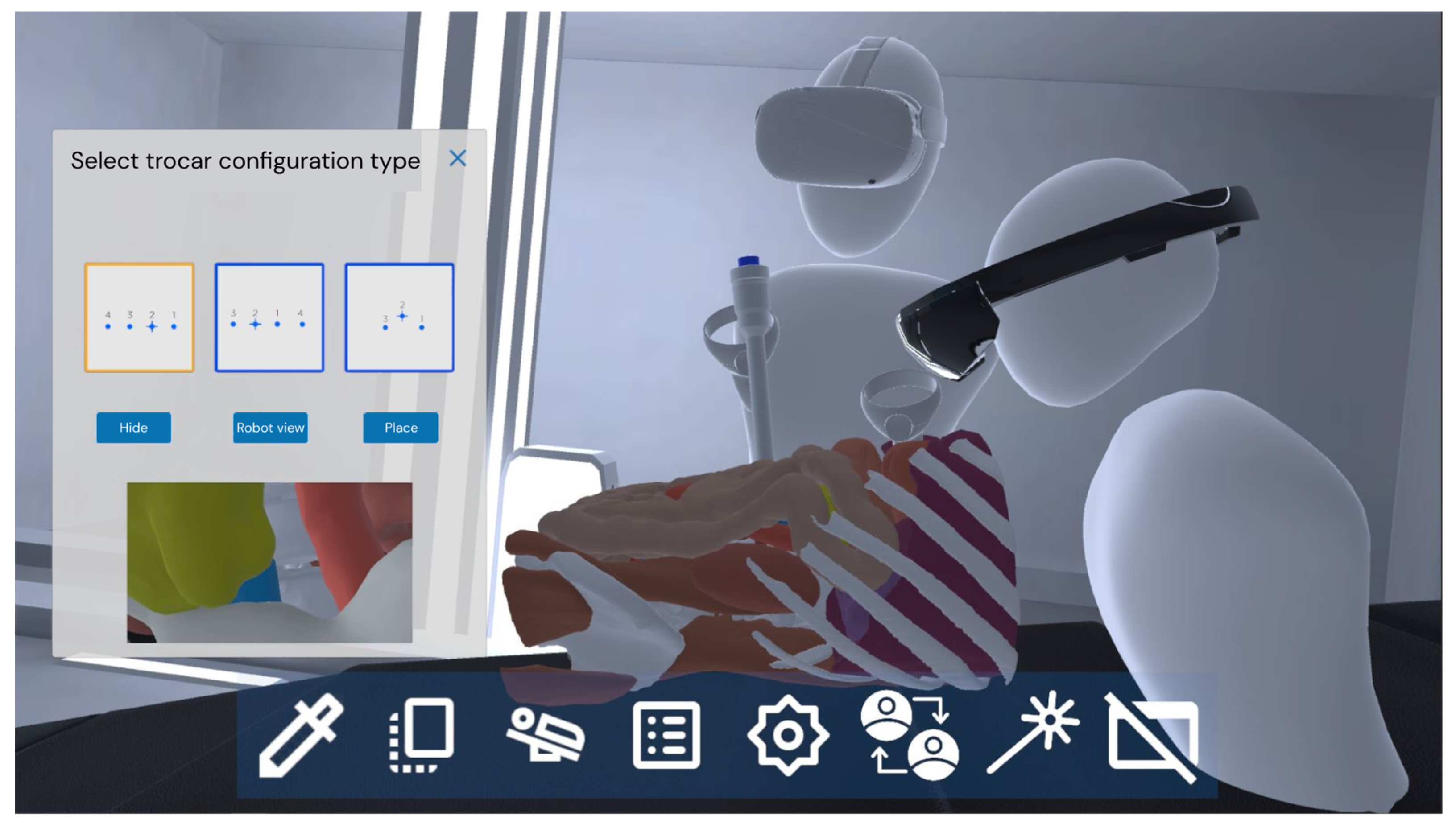

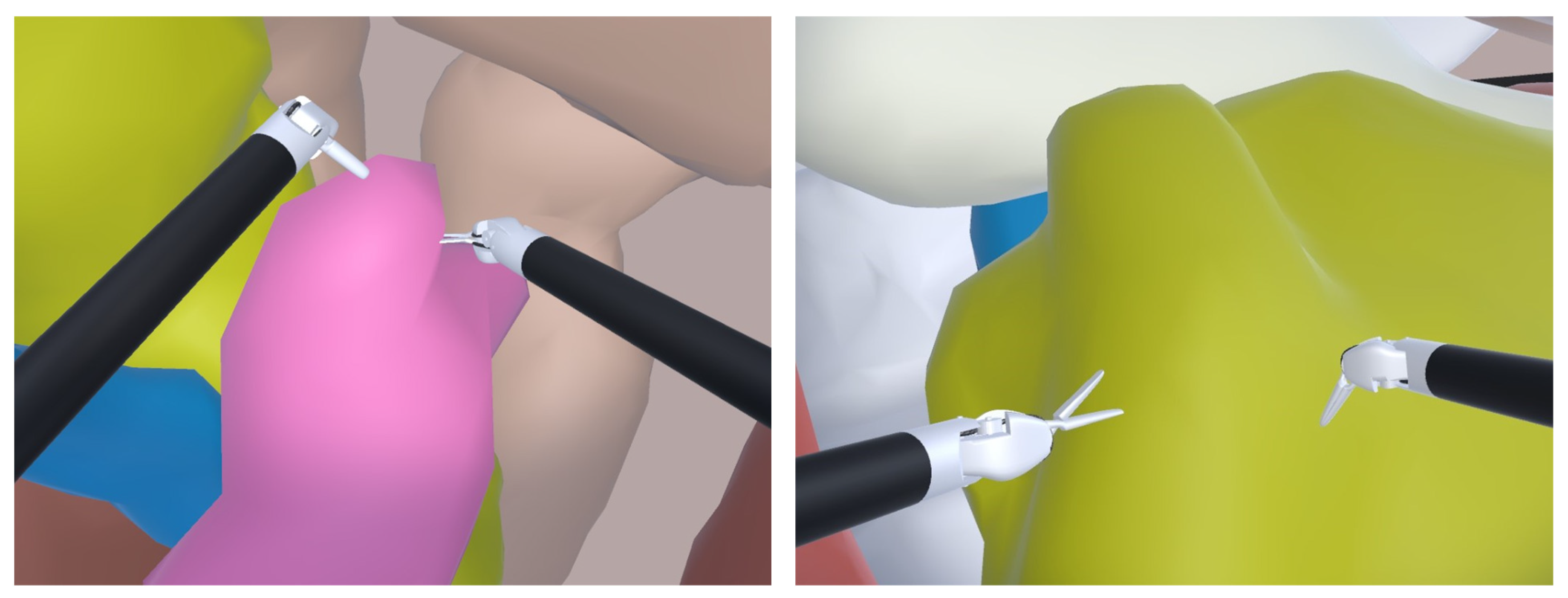

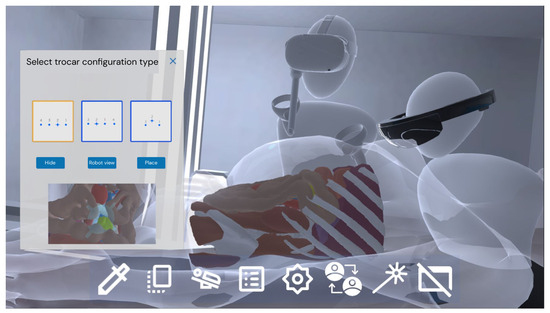

In RAS, the positioning of the trocars is of great importance, as it determines the surgeon’s workspace during the intervention. This process is performed “blindly” by the surgeon, as the patient’s skin occludes all internal tissues. To plan this aspect, a “virtual trocar” tool is included to simulate trocar placement, the best position of the patient, and different robot configurations. In addition, users can easily switch their viewpoint to the RAS console view, as if they were using the real robot. From the point of view of the robot, the virtual robotic arms can be activated to simulate the workspace that would be available to the surgeon with the currently selected robot configuration. The robotic arms are moved with the controllers, while the clutch function is mapped to the controller buttons. Figure 6 and Figure 7 show the “virtual trocar” tool. Figure 8 shows this feature with a generic RAS simulator that allows surgeons to check the kinematic constraints of the robot arms and identify potential collisions between the robot arms and the anatomy. This RAS simulator was also used in [7]. With this tool, surgeons can improve the planning process.
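A simple way to reason about whether a trocar placement gives access to a structure is to treat the trocar as a fixed pivot (remote centre of motion) through which the instrument rotates and slides. The sketch below checks whether a target point lies within a cone around the trocar axis and within a maximum insertion depth. It is a deliberately simplified model for illustration, not the kinematic check of the RAS simulator mentioned above. `max_depth` and `max_angle_deg` are hypothetical parameters, not specifications of any real robot, and units are assumed to be metres.

```python
import math


def is_reachable(trocar_point, trocar_axis, target, max_depth, max_angle_deg):
    """Check if an instrument pivoting at the trocar can reach the target.

    trocar_axis points into the body; max_depth is the usable insertion
    length and max_angle_deg the half-angle of the pivoting cone.
    """
    v = [t - p for t, p in zip(target, trocar_point)]
    depth = math.sqrt(sum(x * x for x in v))
    if depth > max_depth:
        return False
    if depth == 0:
        return True  # target coincides with the pivot point
    axis_len = math.sqrt(sum(x * x for x in trocar_axis))
    cos_angle = sum(x * y for x, y in zip(v, trocar_axis)) / (depth * axis_len)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return angle <= max_angle_deg
```

Evaluating this check for every target structure and candidate trocar pose gives a quick first filter before the full workspace is explored with the virtual robotic arms.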

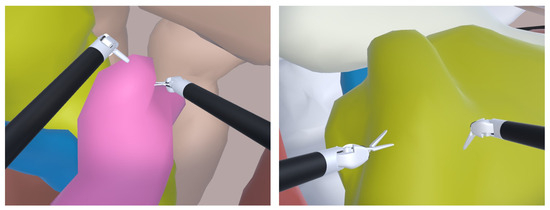

Figure 6.

Virtual trocar tool, with which users in XR mode can freely position the trocar directly with their hand movements. All participants in the room can visualise in real time the first-person view of the robot that would be obtained thanks to the trocar pose.

Figure 7.

Virtual trocar tool, with which users in VR mode can freely position the trocar directly with 6-DOF VR controllers. As in the XR case, all participants in the room can visualise in real time the first-person view of the robot that would be obtained thanks to the trocar pose.

Figure 8.

Two different snapshots of the console view of the virtual trocar tool after activating the virtual robotic arms. A simulated version of the console of the robot is utilized. The view also includes the robot arms, which can be moved to check the dexterity and workspace of the surgical tools.

As seen in Figure 1, our collaborative system can simulate skin deformation due to inflation of the abdominal area, in order to aid the trocar placement process. A projection system on the inflated skin identifies the entry point and direction of the trocar during positioning and configuration. However, our system uses rigid segmented 3D models and does not use deformable meshes to adjust the positioning of organs other than the skin according to the inflation level.
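One way to compute the trocar entry point on the inflated skin is to cast a ray along the trocar axis and find its first intersection with the skin mesh. The following sketch uses the Möller–Trumbore ray-triangle test for this purpose. It is an assumption for illustration; the text does not specify how the projection system is implemented. The helper and function names are hypothetical.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin, direction, v0, v1, v2, eps=1e-9):
    """Moller-Trumbore test: distance t along the ray to the triangle, or None."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:
        return None  # ray parallel to the triangle plane
    inv = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv
    if u < 0 or u > 1:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv
    if v < 0 or u + v > 1:
        return None
    t = _dot(e2, q) * inv
    return t if t > eps else None


def entry_point(origin, direction, skin_triangles):
    """First point where the trocar axis meets the skin mesh, or None."""
    best_t = None
    for tri in skin_triangles:
        t = ray_triangle(origin, direction, *tri)
        if t is not None and (best_t is None or t < best_t):
            best_t = t
    if best_t is None:
        return None
    return tuple(o + best_t * d for o, d in zip(origin, direction))
```

Keeping only the closest hit matters because the ray may cross the body surface more than once.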

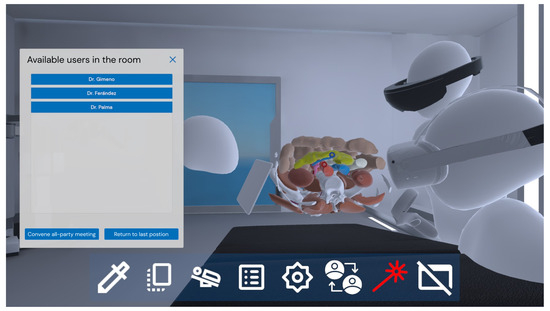

Finally, meeting functions have been added, allowing the position and point of view of any of the users in the room to be replicated (see Figure 9). Thus, the different participants in the planning process can navigate directly between the points of view of other participants, being able to visualise and understand precisely the elements that other participants highlight. In turn, each participant can invite the other users for an explanatory meeting. Once the invitation has been accepted, the users are automatically transferred so that they share the same viewpoint as the presenter during the meeting, as if it were a virtual tour. With this functionality, users can focus on conveying and understanding the information rather than navigating the scene.
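The explanatory meeting can be modelled as a small session in which invited users who accept are synchronised to the presenter's viewpoint. This is a minimal sketch under stated assumptions. `Pose`, `MeetingSession`, and its methods are hypothetical names, and a real implementation would apply the presenter's pose each frame through the networking layer rather than through a dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    position: tuple  # (x, y, z)
    rotation: tuple  # quaternion (x, y, z, w)


class MeetingSession:
    """Hypothetical meeting: followers share the presenter's viewpoint."""

    def __init__(self, presenter):
        self.presenter = presenter
        self.invited = set()
        self.followers = set()

    def invite(self, user):
        self.invited.add(user)

    def accept(self, user):
        if user not in self.invited:
            raise ValueError(f"{user} was not invited")
        self.invited.discard(user)
        self.followers.add(user)

    def sync(self, poses):
        """Return new poses where every follower adopts the presenter's pose."""
        presenter_pose = poses[self.presenter]
        return {user: (presenter_pose if user in self.followers else pose)
                for user, pose in poses.items()}
```

Users who have not accepted the invitation keep their own pose and remain free to navigate the scene.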

Figure 9.

Meeting functions, with which each user can share their point of view and use that of others.

4. Experimental Study

An experiment was conducted at Hospital General de Valencia, Spain, to validate the system for clinical practice. For this purpose, four domain experts were recruited, three men and one woman. All of them were experienced surgeons at this hospital, with more than two years of experience performing surgical procedures with a da Vinci X surgical robot [30]. Informed consent was obtained from all four experts.

For the assessment process, two devices were used: a Microsoft HoloLens 2, as an example of an XR device, and a Meta Quest 2, as an example of an immersive VR device. All four experts used both devices. A third person, not included in the assessment process, was also present in the virtual space using a laptop, in order to add complexity to the collaborative experience and project this third view onto a big screen so that all external observers were able to see the use of the tool. Finally, another PC was used as a server to host Photon. Figure 10 shows a picture of the experimental validation, in which we presented to the surgeons a virtual patient with a complex tumour in the abdominal wall that infiltrated adjacent tissue, in order for them to assess the use of the collaborative virtual RAS planner.

Figure 10.

Experimental validation.

The procedure followed during the experimental session consisted of the following steps:

- Short briefing about the interaction of each platform and the use of the tools.

- Free collaborative session with a 3D model segmented from a CT scan of the abdomen, where the following steps were conducted:

  - First, experts #1 and #2 tested the system simultaneously for 15 min, one with the XR platform and the other with the VR platform.

  - Then, experts #1 and #2 exchanged platforms and repeated the testing.

  - Afterwards, experts #3 and #4 tested the system simultaneously for 15 min, one with the XR platform and the other with the VR platform.

  - Finally, experts #3 and #4 exchanged platforms and repeated the testing.

- The four experts filled in an online questionnaire to assess the proposed system.

To assess the usability of the tool, the SUS questionnaire was used [31]. In questions SUS-1 to SUS-10, answers range from 1 (strongly disagree) to 5 (strongly agree); odd questions are formulated in a positive way (thus, the best possible score is 5) and even questions in a negative way (the best possible score is 1). To assess the tools of the application more specifically, we also used a satisfaction questionnaire with eight questions tailored to this application. This questionnaire focuses on the tools provided by the application and on the usefulness of the collaborative use of the virtual planner. Table 1 lists the questions of the satisfaction questionnaire, which were answered on a 7-point Likert scale (1: strongly disagree, 7: strongly agree).
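For reference, the standard SUS scoring rule maps the ten 1–5 answers to a 0–100 score. Each odd (positive) item contributes its answer minus 1, each even (negative) item contributes 5 minus its answer, and the sum is multiplied by 2.5. Averaging the individual scores is the usual way to obtain a group value. The function names are hypothetical, and the example inputs in the test are synthetic, not the study's data.

```python
def sus_score(responses):
    """Score one participant's ten SUS answers (each 1-5) on a 0-100 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten answers in the range 1-5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


def mean_sus(all_responses):
    """Average SUS score over several participants."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```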

Table 1.

Satisfaction questionnaire.

5. Results

Table 2 depicts the detailed results of the SUS questionnaire. As can be seen, the resulting SUS score is 83.75, which can be considered excellent, according to [32]. Domain experts do not seem to have usability problems with the application, since none of the answers report an unexpected negative result.

Table 2.

Results of the SUS.

Table 3 depicts the results of the satisfaction questionnaire. The domain experts’ assessment is also positive, since all questions but one have a mean score higher than 6. The differences between the four experts are small, which indicates that they agree on the usefulness of the proposed tools. The three specific tools assessed in this questionnaire (section planes, surgical target, and virtual trocar) are positively perceived by the domain experts, especially the “section planes” tool. Q8 shows the poorest results, which we attribute to the fact that some of the domain experts already had a grasp of the potential benefits that virtual environments can provide to the surgical field. Q3 and Q4, which measure the collaborative aspects of the application, are also positively answered, which means that, despite the asymmetry of the mixed-platform collaboration and the potential complexity of such a heterogeneous shared space, surgeons think they can leverage these shared asymmetrical capabilities.

Table 3. Results of the satisfaction questionnaire.

The domain experts also explained the reasons for their answers. All of them agreed that navigation in VR was “very easy to use”, because it resembles operating the surgical robot. They all liked the first-person perspective of the trocar, and one participant described this option as “very useful”. They also found it useful to collaborate with other people in a virtual shared environment, something they had not previously considered possible for the planning task. Finally, they reported that the visualisation of the tissues was “confusing when the point of view was inside the body”.

Overall, the domain experts' impression is that the application is useful for preparing and discussing surgical plans for RAS. They suggested incorporating the visualisation of medical images into the application, in order to better tailor the surgical planning process to each patient. They also suggested adding the ability to create annotations. These features, together with an improved tissue visualisation, will soon be added to the system as we prepare a full-scale evaluation with a larger population and a real-case scenario.

6. Conclusions and Future Work

The role of RAS in surgical practice is becoming increasingly important. This surgical technology has peculiarities that make RAS planning different from the planning of other types of surgery, and new technologies can help facilitate the planning process for these procedures. Therefore, tools customised for this type of procedure are needed. For this reason, this article presents a collaborative MR-based tool that allows one or more experts to plan together the different phases of an RAS-based surgical procedure. The proposed system is a mixed-platform collaborative virtual recreation of an operating room that allows multiple users to work together, both locally and remotely, using different interaction tools and computing devices. To the best of our knowledge, this is the first mixed-platform asymmetrical collaborative virtual RAS planner published in the academic literature.

An experimental evaluation with four RAS domain experts yielded good results. None of the users had problems using the application, and the researchers observed that all of them were pleasantly surprised by the proposed tools. The results of the evaluation support this initial impression: the mean SUS score is 83.75, and the answers to the satisfaction questionnaire were also positive. Nevertheless, we acknowledge that our research is still at TRL5 [33], and a validation process with a larger number of participants would be required before deploying our system for surgical planning in hospitals.

After this initial success, we plan to deploy the system in an operational environment and perform a more extensive validation in order to reach TRL7 and begin introducing it into real environments. Thus, future work includes the evaluation of the tool with a larger group of domain experts. This is, of course, difficult, because this surgical technology is not widely available and it is not easy to find surgeons with expertise in RAS. As the domain experts suggested, future work will also include incorporating medical imaging into the application, in order to tailor the surgical planning process to each patient. We will also add annotations so that surgeons can enter textual and audio information into the system, allowing all the comments and steps agreed upon by the surgical team to be properly recorded and subsequently consulted by any surgeon. Finally, our system could also be used for laparoscopic surgery; however, this technique differs in its approach and there are several types of laparoscopy, so the system would need to be adapted to each type.

Author Contributions

Conceptualization, J.G. and S.C.-Y.; methodology, C.P.; software, B.P.; validation, B.P., J.G. and I.C.; formal analysis, S.C.-Y.; investigation, B.P. and S.C.-Y.; resources, I.C.; data curation, C.P.; writing—original draft preparation, B.P., C.P. and S.C.-Y.; writing—review and editing, J.G. and S.C.-Y.; visualisation, B.P.; supervision, J.G.; project administration, S.C.-Y.; funding acquisition, S.C.-Y. and J.G. All authors have read and agreed to the published version of the manuscript.

Funding

Belén Palma, Jesús Gimeno, Inmaculada Coma and Sergio Casas are supported by Spain’s Agencia Estatal de Investigación and the Spanish Ministry of Science and Innovation, under the ViPRAS project (Virtual Planning for Robotic-Assisted Surgery)-PID2020-114562RA-I00 (funded by MCIN/AEI/10.13039/501100011033). Cristina Portalés is supported by the Spanish government postdoctoral grant Ramón y Cajal, under grant No. RYC2018-025009-I.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors have no financial or non-financial interests to declare that are relevant to the content of this article. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Wirth, M.; Mehringer, W.; Gradl, S.; Eskofier, B.M. Extended Realities (XRs): How Immersive Technologies Influence Assessment and Training for Extreme Environments. In Engineering and Medicine in Extreme Environments; Cibis, T., McGregor, C., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 309–335. ISBN 978-3-030-96921-9.

2. Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

3. Ens, B.; Lanir, J.; Tang, A.; Bateman, S.; Lee, G.; Piumsomboon, T.; Billinghurst, M. Revisiting Collaboration through Mixed Reality: The Evolution of Groupware. Int. J. Hum.-Comput. Stud. 2019, 131, 81–98.

4. Fleming, C.A.; Ali, O.; Clements, J.M.; Hirniak, J.; King, M.; Mohan, H.M.; Nally, D.M.; Burke, J. Surgical Trainee Experience and Opinion of Robotic Surgery in Surgical Training and Vision for the Future: A Snapshot Study of Pan-Specialty Surgical Trainees. J. Robot. Surg. 2021, 16, 1073–1082.

5. Hayashibe, M.; Suzuki, N.; Hashizume, M.; Kakeji, Y.; Konishi, K.; Suzuki, S.; Hattori, A. Preoperative Planning System for Surgical Robotics Setup with Kinematics and Haptics. Int. J. Med. Robot. Comput. Assist. Surg. 2005, 1, 76–85.

6. Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S. Optical Techniques for 3D Surface Reconstruction in Computer-Assisted Laparoscopic Surgery. Med. Image Anal. 2013, 17, 974–996.

7. Portalés, C.; Gimeno, J.; Salvador, A.; García-Fadrique, A.; Casas-Yrurzum, S. Mixed Reality Annotation of Robotic-Assisted Surgery Videos with Real-Time Tracking and Stereo Matching. Comput. Graph. 2023, 110, 125–140.

8. Checcucci, E.; Amparore, D.; Fiori, C.; Manfredi, M.; Ivano, M.; Di Dio, M.; Niculescu, G.; Piramide, F.; Cattaneo, G.; Piazzolla, P.; et al. 3D Imaging Applications for Robotic Urologic Surgery: An ESUT YAUWP Review. World J. Urol. 2020, 38, 869–881.

9. Lasser, M.S.; Doscher, M.; Keehn, A.; Chernyak, V.; Garfein, E.; Ghavamian, R. Virtual Surgical Planning: A Novel Aid to Robot-Assisted Laparoscopic Partial Nephrectomy. J. Endourol. 2012, 26, 1372–1379.

10. Kim, J.-Y.; Kim, W.S.; Choi, E.C.; Nam, W. The Role of Virtual Surgical Planning in the Era of Robotic Surgery. Yonsei Med. J. 2016, 57, 265–268.

11. Ghazi, A.; Sharma, N.; Radwan, A.; Rashid, H.; Osinski, T.; Frye, T.; Tabayoyong, W.; Bloom, J.; Joseph, J. Can Preoperative Planning Using IRIS™ Three-Dimensional Anatomical Virtual Models Predict Operative Findings during Robot-Assisted Partial Nephrectomy? Asian J. Urol. 2023, 10, 431–439.

12. Emmerling, M.; Tan, H.-J.; Shirk, J.; Bjurlin, M.A. Applications of 3-Dimensional Virtual Reality Models (Ceevra®) for Surgical Planning of Robotic Surgery. Urol. Video J. 2023, 18, 100220.

13. Karnam, M.; Zelechowski, M.; Cattin, P.C.; Rauter, G.; Gerig, N. Workspace-Aware Planning of a Surgical Robot Mounting in Virtual Reality. In International Workshop on Medical and Service Robots; Springer: Berlin/Heidelberg, Germany, 2023; pp. 13–19.

14. Laskay, N.M.; George, J.A.; Knowlin, L.; Chang, T.P.; Johnston, J.M.; Godzik, J. Optimizing Surgical Performance Using Preoperative Virtual Reality Planning: A Systematic Review. World J. Surg. 2023, 47, 2367–2377.

15. Chheang, V.; Saalfeld, P.; Huber, T.; Huettl, F.; Kneist, W.; Preim, B.; Hansen, C. Collaborative Virtual Reality for Laparoscopic Liver Surgery Training. In Proceedings of the 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), San Diego, CA, USA, 9–11 December 2019; pp. 1–17.

16. Kumar, R.P.; Pelanis, E.; Bugge, R.; Brun, H.; Palomar, R.; Aghayan, D.L.; Fretland, Å.A.; Edwin, B.; Elle, O.J. Use of Mixed Reality for Surgery Planning: Assessment and Development Workflow. J. Biomed. Inform. 2020, 112, 100077.

17. Gasques, D.; Johnson, J.G.; Sharkey, T.; Feng, Y.; Wang, R.; Xu, Z.R.; Zavala, E.; Zhang, Y.; Xie, W.; Zhang, X.; et al. ARTEMIS: A Collaborative Mixed-Reality System for Immersive Surgical Telementoring. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Online Virtual, 8–13 May 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–14.

18. Rojas-Muñoz, E.; Cabrera, M.E.; Lin, C.; Andersen, D.; Popescu, V.; Anderson, K.; Zarzaur, B.L.; Mullis, B.; Wachs, J.P. The System for Telementoring with Augmented Reality (STAR): A Head-Mounted Display to Improve Surgical Coaching and Confidence in Remote Areas. Surgery 2020, 167, 724–731.

19. Sereno, M.; Wang, X.; Besançon, L.; McGuffin, M.J.; Isenberg, T. Collaborative Work in Augmented Reality: A Survey. IEEE Trans. Vis. Comput. Graph. 2022, 28, 2530–2549.

20. García-Pereira, I.; Gimeno, J.; Pérez, M.; Portalés, C.; Casas, S. MIME: A Mixed-Space Collaborative System with Three Immersion Levels and Multiple Users. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018; pp. 179–183.

21. Prior, F.W.; Clark, K.; Commean, P.; Freymann, J.; Jaffe, C.; Kirby, J.; Moore, S.; Smith, K.; Tarbox, L.; Vendt, B. TCIA: An Information Resource to Enable Open Science. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 1282–1285.

22. Fedorov, A.; Beichel, R.; Kalpathy-Cramer, J.; Finet, J.; Fillion-Robin, J.-C.; Pujol, S.; Bauer, C.; Jennings, D.; Fennessy, F.; Sonka, M. 3D Slicer as an Image Computing Platform for the Quantitative Imaging Network. Magn. Reson. Imaging 2012, 30, 1323–1341.

23. Wasserthal, J.; Breit, H.-C.; Meyer, M.T.; Pradella, M.; Hinck, D.; Sauter, A.W.; Heye, T.; Boll, D.T.; Cyriac, J.; Yang, S. TotalSegmentator: Robust Segmentation of 104 Anatomic Structures in CT Images. Radiol. Artif. Intell. 2023, 5, e230024.

24. Meta Platforms, Inc., Menlo Park, CA, USA. Available online: https://www.meta.com (accessed on 10 April 2024).

25. HTC Corporation, Taoyuan, Taiwan. Available online: https://www.htc.com (accessed on 10 April 2024).

26. Microsoft Corporation, Redmond, WA, USA. Available online: https://www.microsoft.com (accessed on 10 April 2024).

27. Unity Software Inc., San Francisco, CA, USA. Available online: https://www.unity.com (accessed on 10 April 2024).

28. Photon Engine, Exit Games, Hamburg, Germany. Available online: http://www.photonengine.com/ (accessed on 10 April 2024).

29. Tong, W.; Xia, M.; Wong, K.K.; Bowman, D.A.; Pong, T.-C.; Qu, H.; Yang, Y. Towards an Understanding of Distributed Asymmetric Collaborative Visualization on Problem-Solving. In Proceedings of the 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR), Shanghai, China, 25–29 March 2023; pp. 387–397.

30. Intuitive Surgical, Inc., Sunnyvale, CA, USA. Available online: https://www.intuitive.com/ (accessed on 10 April 2024).

31. Brooke, J. SUS: A Quick and Dirty Usability Scale. Usability Eval. Ind. 1996, 189, 4–7.

32. Bangor, A.; Kortum, P.; Miller, J. Determining What Individual SUS Scores Mean: Adding an Adjective Rating Scale. J. Usability Stud. 2009, 4, 114–123.

33. European Commission. Technology Readiness Levels (TRL). Available online: https://ec.europa.eu/research/participants/data/ref/h2020/wp/2014_2015/annexes/h2020-wp1415-annex-g-trl_en.pdf (accessed on 10 April 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).