Revolutionizing Open-Pit Mining Fleet Management: Integrating Computer Vision and Multi-Objective Optimization for Real-Time Truck Dispatching

Abstract

1. Introduction

Applications of Computer Vision Technology

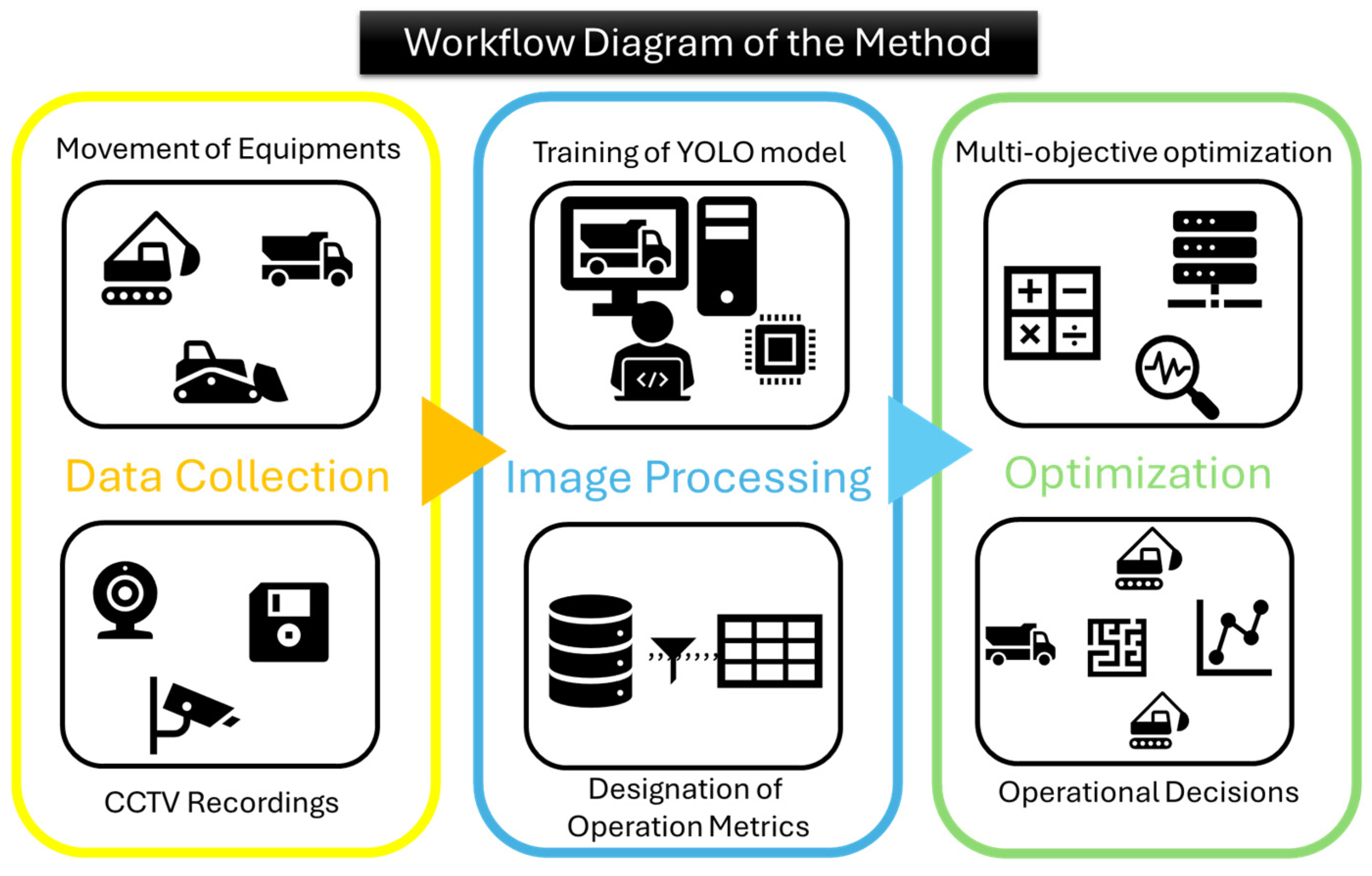

2. Materials and Methods

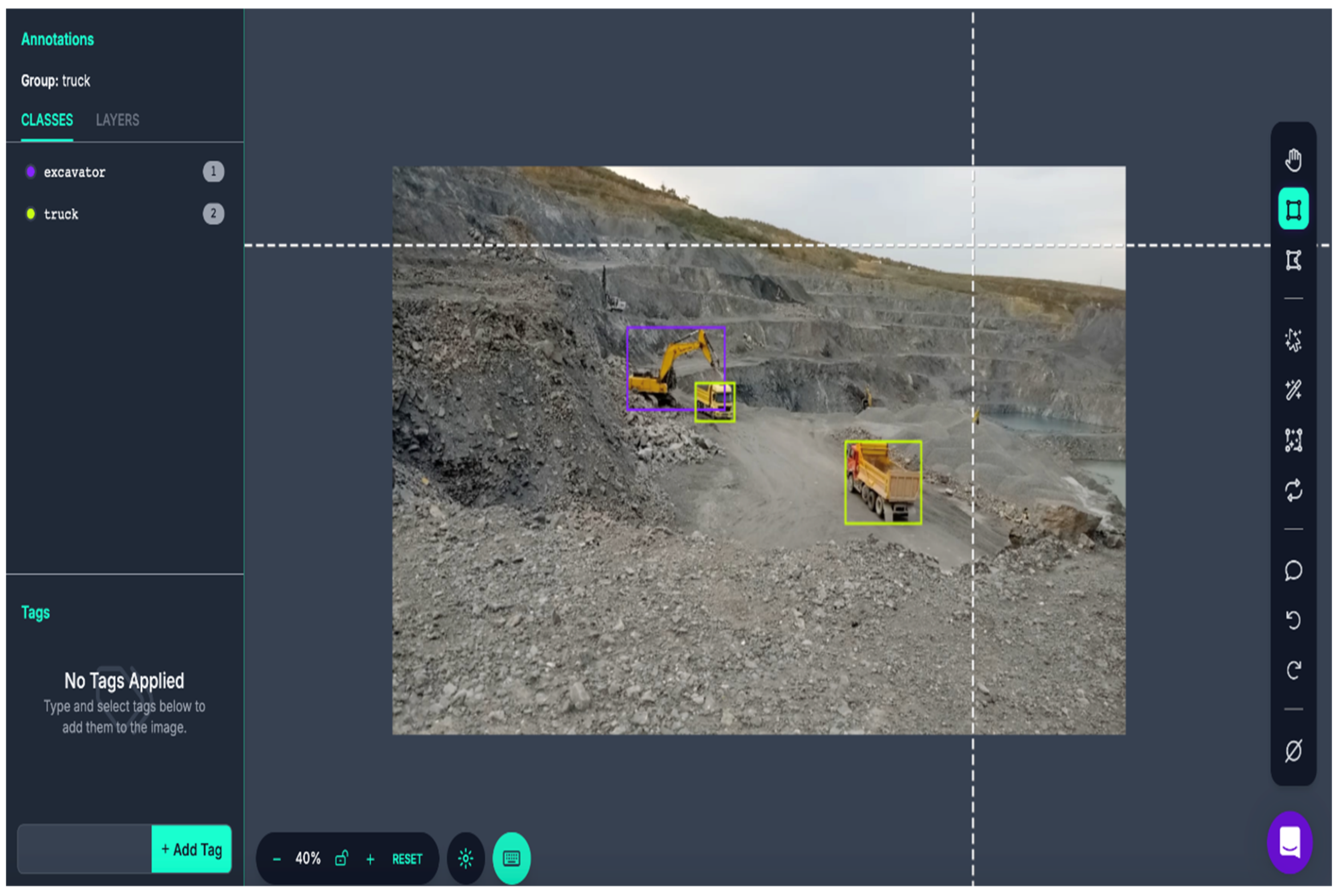

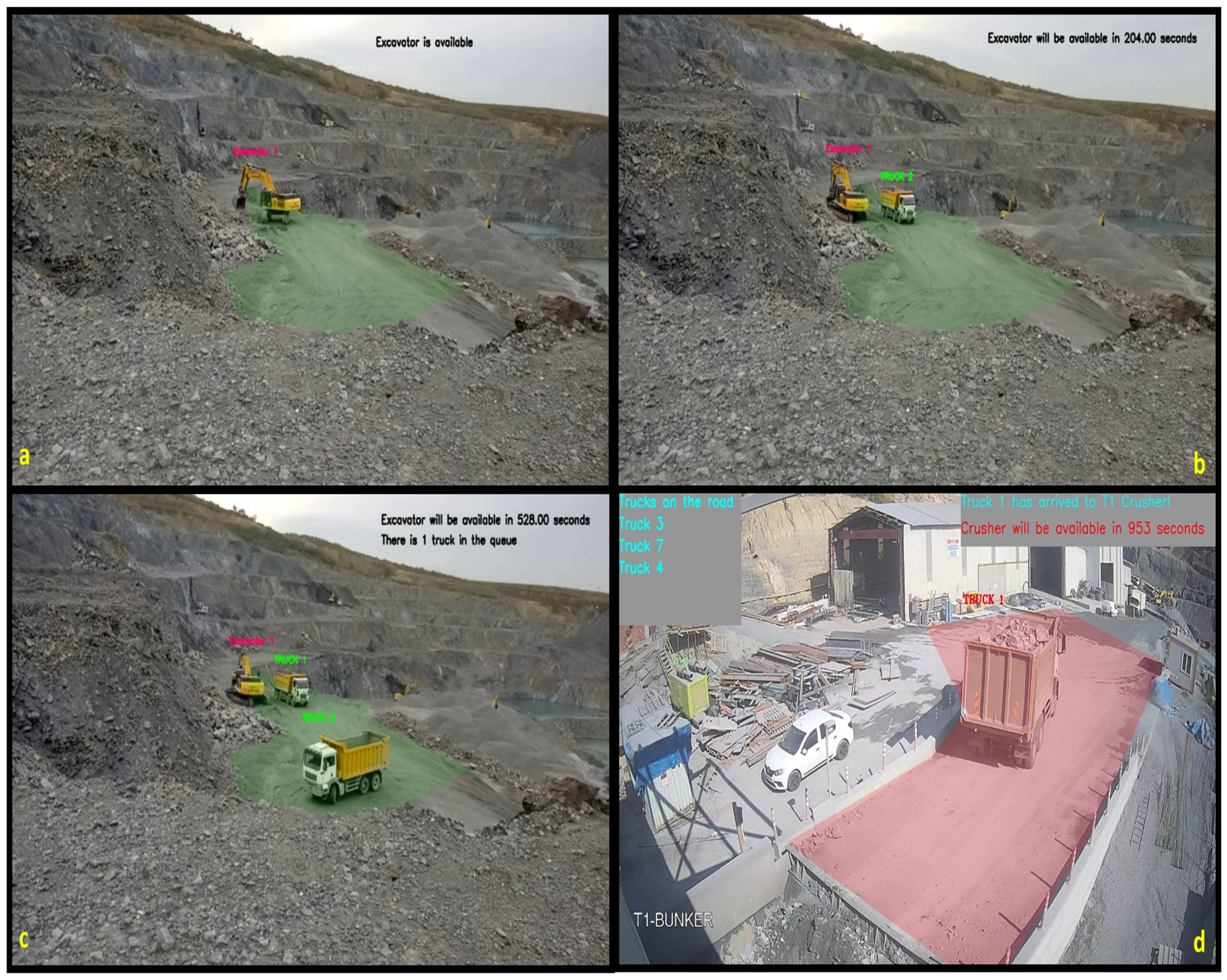

2.1. Image Detection with YOLO Model

- No trucks in the green area: If the green area is devoid of trucks, the excavator is deemed ready for operation. In this scenario, the ready time is assigned a value of zero seconds, indicating immediate availability for new assignments.

- One truck in the green area: When a single truck is present within the green area, the excavator’s ready time corresponds to the loading time of the truck. For the case study, this loading time is standardized to 300 s.

- Multiple trucks in the green area: If more than one truck occupies the green area, the additional trucks are classified as being “in queue”. In this case, the ready time of the excavator is incrementally extended by the sum of the maneuvering and loading times for each queued truck. This approach accounts for the additional delays caused by processing the queued trucks sequentially.
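The three cases above can be sketched as a small function. This is a minimal illustration, not the authors' implementation: the names `count_trucks_in_zone` and `excavator_ready_time`, the rectangular shape of the green area, and the 60 s maneuvering time are assumptions made for the example. Only the 300 s loading time comes from the case study.

```python
def count_trucks_in_zone(truck_boxes, zone):
    """Count detected trucks whose bounding-box center lies inside the zone.

    Boxes and zone are (x_min, y_min, x_max, y_max) in pixels. A rectangular
    zone is an assumption; the real green area may be an arbitrary polygon.
    """
    zx0, zy0, zx1, zy1 = zone
    count = 0
    for x0, y0, x1, y1 in truck_boxes:
        cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
        if zx0 <= cx <= zx1 and zy0 <= cy <= zy1:
            count += 1
    return count


def excavator_ready_time(trucks_in_zone, loading_time=300.0, maneuver_time=60.0):
    """Ready time in seconds for an excavator, given trucks in its green area.

    loading_time=300 s follows the case study; maneuver_time=60 s is a
    hypothetical placeholder, since the text does not state its value.
    """
    if trucks_in_zone < 0:
        raise ValueError("trucks_in_zone must be non-negative")
    if trucks_in_zone == 0:
        return 0.0  # no truck present: excavator is immediately available
    queued = trucks_in_zone - 1  # every truck beyond the first is "in queue"
    # The first truck contributes its loading time; each queued truck adds
    # one maneuvering time plus one loading time.
    return loading_time + queued * (maneuver_time + loading_time)


if __name__ == "__main__":
    boxes = [(10, 10, 30, 30), (200, 200, 220, 220)]  # illustrative detections
    n = count_trucks_in_zone(boxes, zone=(0, 0, 100, 100))
    print(n, excavator_ready_time(n))
```

In practice the bounding boxes would come from the YOLO detector, and the zone test would be replaced by whatever geometry defines the green area in the camera view.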

2.2. Multi-Objective Optimization

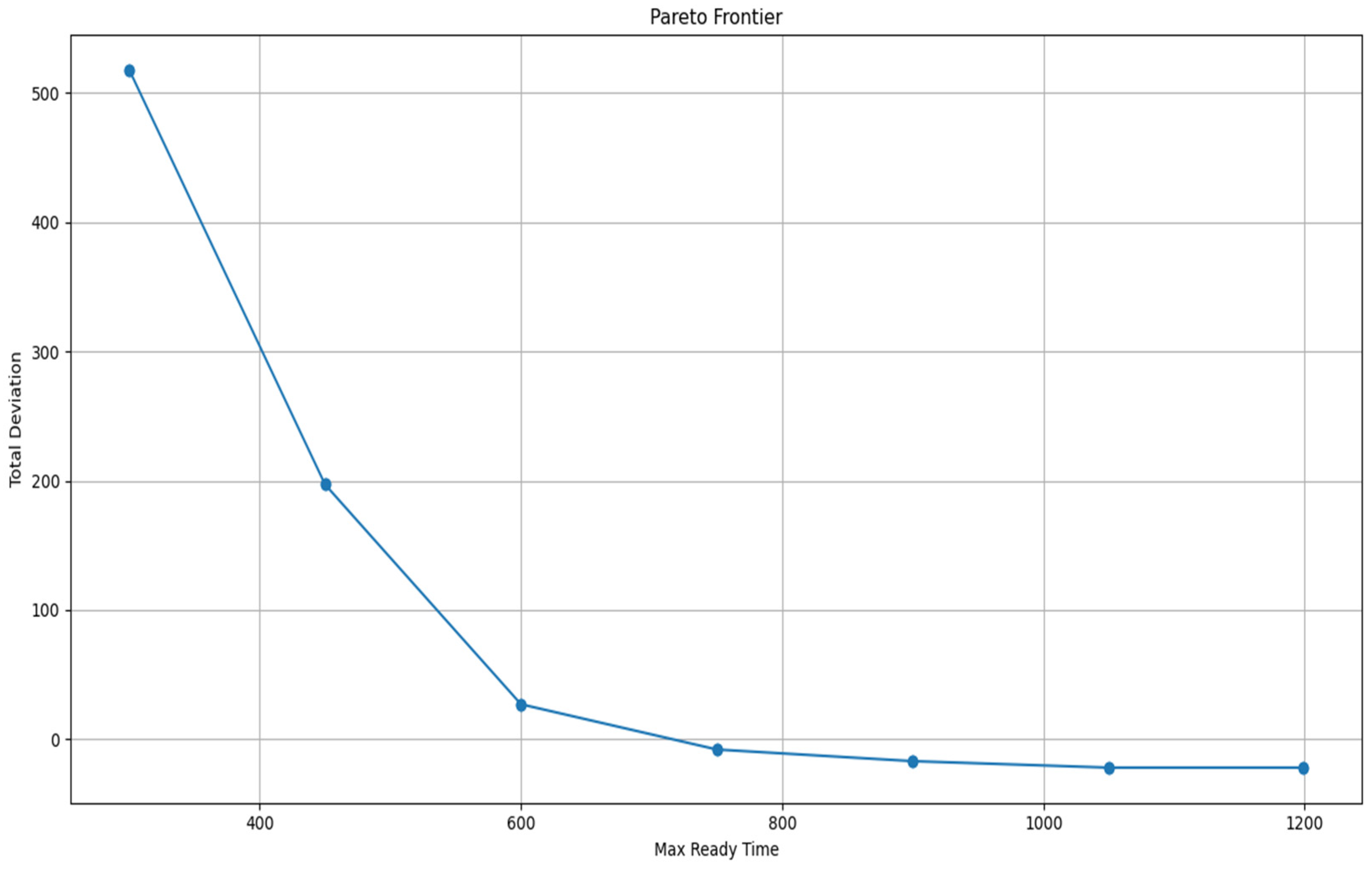

2.2.1. Pareto Frontier Analysis with ε-Constraint Approach

2.2.2. Binary Integer Programming Model and Definition of Parameters

- Parameters:
  - Production target for excavator e. This is the desired amount of material (e.g., tons of ore or soil) that excavator e should process or load onto trucks within a given time period. The model aims to meet this target as closely as possible.
  - Ready time for excavator e at a given timestamp. This is the time at which excavator e is available to start working (e.g., after maintenance or previous tasks). It affects scheduling and assignment decisions.
  - Travel time from dump d to excavator e. This is the time a truck needs to travel from dump site d to excavator e. It is critical for calculating total time in the system and ensuring efficient assignments.

- Decision Variables:
  - Binary assignment variable. This variable indicates whether truck t at dump d is assigned to excavator e (1 = yes, 0 = no). It is binary because a truck is either assigned or not assigned; partial assignments are not allowed.
  - Deviation from the production target for excavator e. This variable captures how much the actual production (based on truck assignments) deviates from the target for excavator e. It is split into positive and negative components in the model to measure over- or under-achievement.

- Objective Functions:
  - Minimization of total deviation from production targets. This objective aims to keep the production achieved by each excavator as close as possible to its target. The positive deviation (exceeding the target) and the negative deviation (falling short) are summed across all excavators e.
  - Minimization of the total ready time of excavators. This objective seeks to reduce the combined ready time and travel time associated with truck assignments, making the operation more efficient.

- Constraints:
  - Each truck can only be assigned to one excavator. This ensures that a truck t is assigned to exactly one combination of dump d and excavator e, preventing double-booking or unassigned trucks.
  - Each dump site can have one truck requiring an excavator assignment at the same time. This limits each dump site d to at most one assigned truck at any given time, reflecting capacity or operational constraints at the dump.
  - Decision variables of the trucks on the road are set to zero at the given time. This constraint forces the assignment variable to be 0 for trucks that are currently on the road (unavailable), ensuring that only available trucks are assigned.
  - Deviation constraints. These define the positive and negative deviations from the production target for each excavator. The positive deviation measures how much production exceeds the target, while the negative deviation measures how much it falls short. Both are calculated from the number of trucks assigned.
  - Binary variable constraint. The assignment variable may take only the values 0 or 1.
  - Epsilon constraint. This limits the total ready time and travel time to a threshold ε, a device commonly used in multi-objective optimization to prioritize one objective (here, the total deviation) while constraining the other.
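To make the interaction between the two objectives and the ε threshold concrete, the following is a minimal brute-force sketch on a toy instance. It is not the authors' solver: the function names, the data values, and the simplifications (each available truck sits at its own dump, and trucks on the road are left out of the input) are assumptions made for illustration. A real instance would be passed to an integer programming solver rather than enumerated.

```python
from itertools import product


def solve_epsilon(targets, ready, travel, truck_dump, eps):
    """Minimize total deviation subject to total (ready + travel) time <= eps.

    targets[e]    : production target of excavator e, in truck assignments
    ready[e]      : ready time of excavator e (s)
    travel[d][e]  : travel time from dump d to excavator e (s)
    truck_dump[i] : dump where the i-th available truck is waiting
    Returns (deviation, total_time, assignment) or None if nothing is feasible.
    """
    n_exc = len(targets)
    best = None
    # Enumerate every way to give each available truck exactly one excavator.
    for assignment in product(range(n_exc), repeat=len(truck_dump)):
        counts = [assignment.count(e) for e in range(n_exc)]
        # |count - target| equals positive deviation plus negative deviation.
        deviation = sum(abs(c - t) for c, t in zip(counts, targets))
        total_time = sum(ready[e] + travel[d][e]
                         for d, e in zip(truck_dump, assignment))
        if total_time > eps:
            continue  # violates the epsilon constraint
        candidate = (deviation, total_time, assignment)
        # Lexicographic order: deviation first, then time as a tie-breaker.
        if best is None or candidate < best:
            best = candidate
    return best


def pareto_points(targets, ready, travel, truck_dump, eps_values):
    """Sweep eps and collect the distinct (deviation, total_time) outcomes."""
    points = set()
    for eps in eps_values:
        solution = solve_epsilon(targets, ready, travel, truck_dump, eps)
        if solution is not None:
            points.add(solution[:2])
    return sorted(points)


# Hypothetical toy data: two excavators, two trucks waiting at dumps 0 and 1.
TARGETS = [2, 0]
READY = [300, 0]
TRAVEL = [[400, 200], [500, 250]]
TRUCK_DUMP = [0, 1]

if __name__ == "__main__":
    print(pareto_points(TARGETS, READY, TRAVEL, TRUCK_DUMP,
                        eps_values=[400, 500, 1000, 1500]))
```

Tightening ε pushes the solution toward the excavator that is ready sooner and closer, at the cost of a larger deviation from the production targets; sweeping ε traces the trade-off curve between the two objectives.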

2.3. Application of Method in an Open-Pit Mine

3. Results

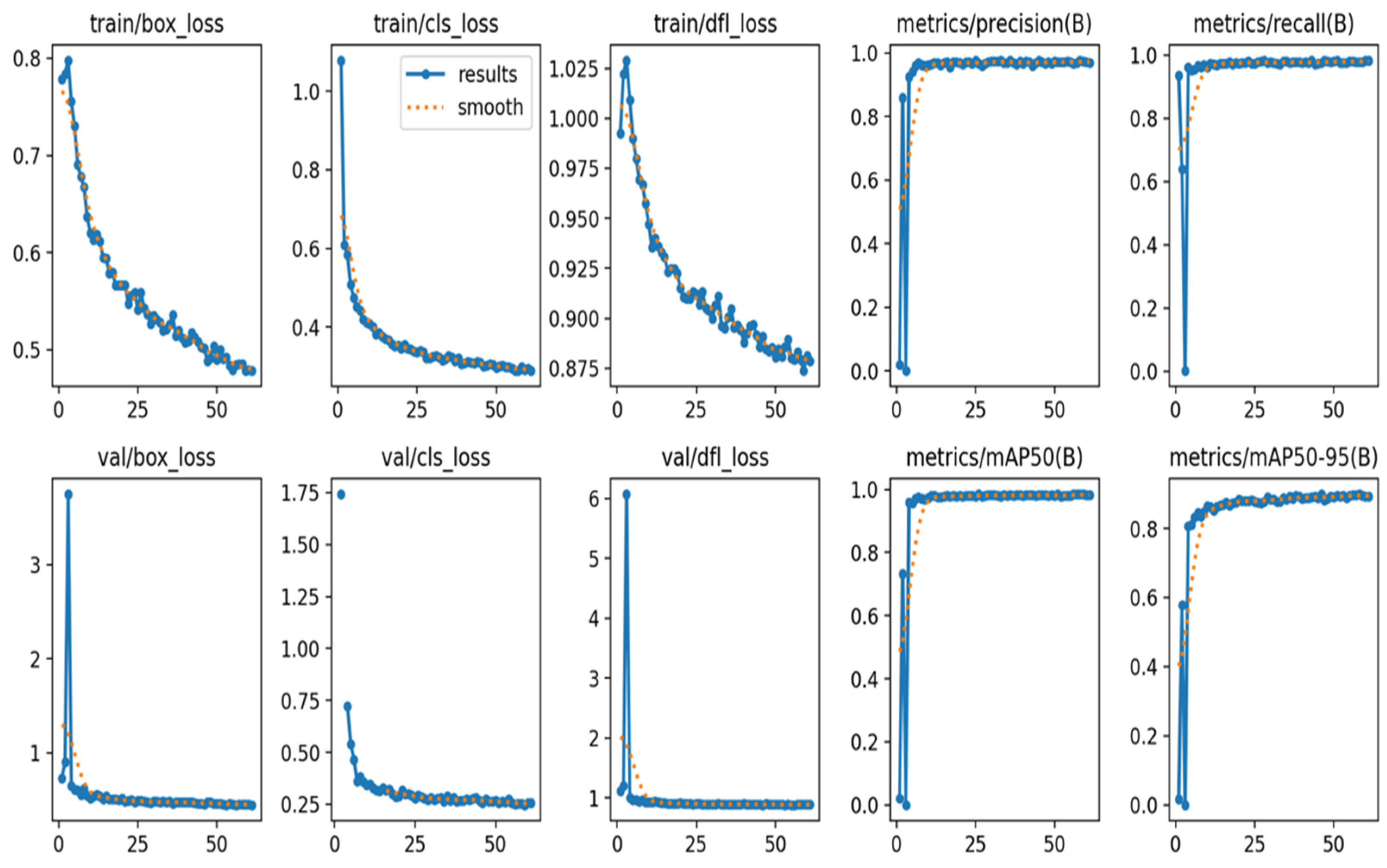

3.1. Performance Analysis of the Trained Model

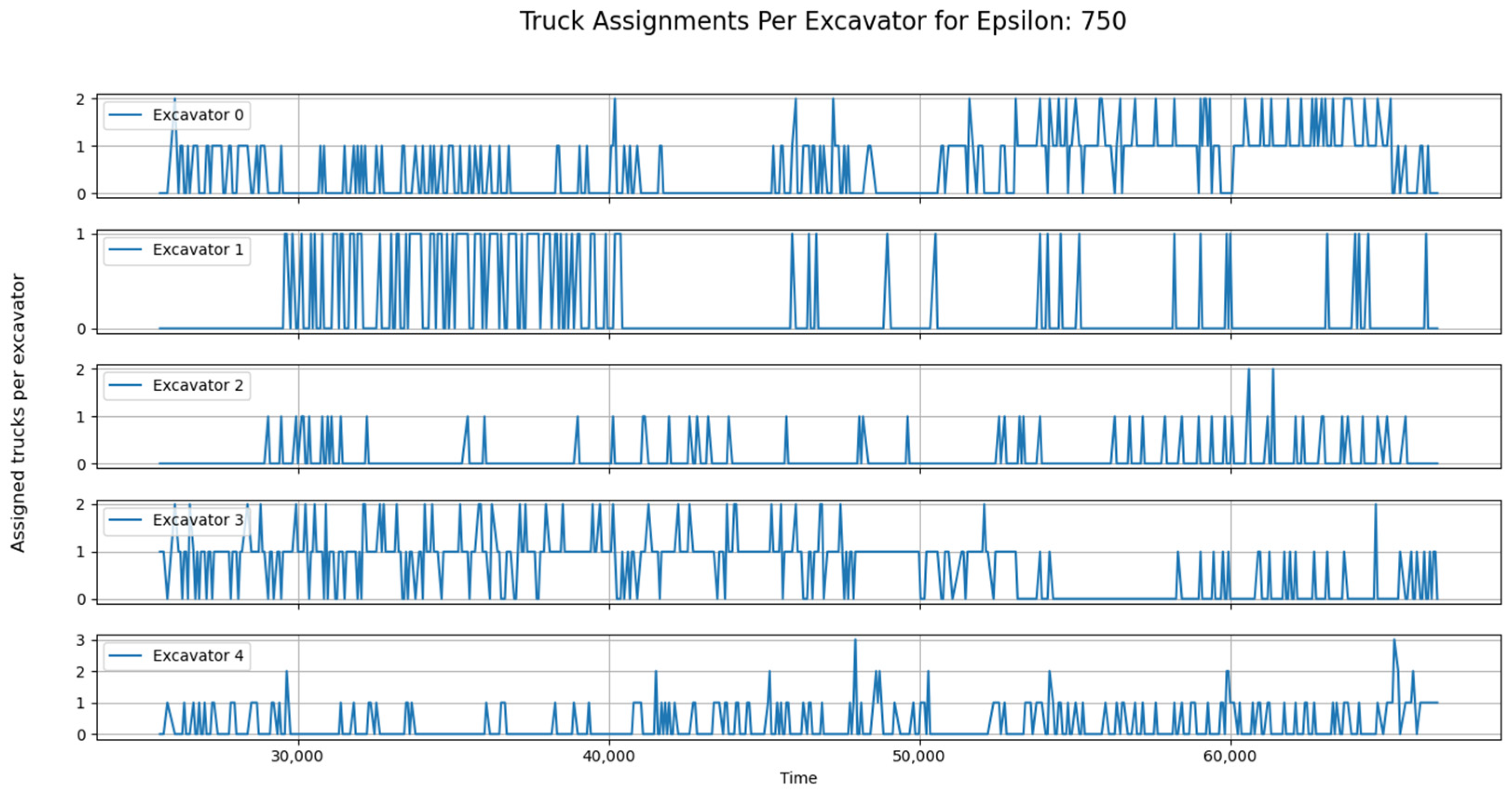

3.2. Results of Multi-Objective Optimization Model

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| CCTV | Closed-Circuit Television |
| CV | Computer Vision |
| OB | Overburden |
| ML | Machine Learning |
| CNN | Convolutional Neural Network |
| mAP | Mean Average Precision |
| AI | Artificial Intelligence |


| Hyperparameter | Value |
|---|---|
| Batch size | 16 |
| Optimizer | AdamW |
| Learning rate | 0.01 |
| Weight decay | 0.0005 |
| Momentum | 0.937 |
| Dropout | 0.0 |

| Excavator ID | Number of Required Assignments |
|---|---|
| 0 | 280 |
| 1 | 74 |
| 2 | 50 |
| 3 | 249 |
| 4 | 246 |

| Dump Site IDs (rows) / Excavator IDs (columns) | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | 230 | 250 | 300 | 450 | 500 |
| 1 | 250 | 350 | 500 | 400 | 200 |
| 2 | 350 | 500 | 500 | 400 | 200 |
| 3 | 500 | 370 | 700 | 650 | 550 |
| 4 | 1000 | 500 | 200 | 500 | 750 |
| 5 | 500 | 1000 | 500 | 210 | 1000 |
| 6 | 210 | 500 | 1000 | 210 | 500 |

| Time of the Shift (s) | ID | 25,560 | 25,680 | 25,800 | 26,040 | 26,160 | 26,220 | 26,280 |
|---|---|---|---|---|---|---|---|---|
| Truck IDs | 3 | 0 | 0 | 0 | 3 | 0 | 0 | 0 |
| | 5 | 0 | 5 | 0 | 0 | 0 | 5 | 0 |
| | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | 9 | 0 | 0 | 0 | 9 | 0 | 0 | 0 |
| | 10 | 0 | 0 | 0 | 0 | 0 | 10 | 0 |
| | 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | 12 | 0 | 0 | 12 | 0 | 0 | 0 | 0 |
| | 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | 14 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | 25 | 0 | 0 | 0 | 0 | 25 | 0 | 0 |
| Excavator Ready Times (s) | 0 | 138 | 18 | 2 | 36 | 116 | 56 | 76 |
| | 1 | 4268 | 4148 | 4028 | 3788 | 3668 | 3608 | 3548 |
| | 2 | 3633 | 3513 | 3393 | 3153 | 3033 | 2973 | 2913 |
| | 3 | 93 | 72 | 33 | 36 | 71 | 11 | 34 |
| | 4 | 91 | 222 | 102 | 93 | 36 | 78 | 18 |

| Time of the Shift (s) | ID | 25,560 | 25,680 | 25,800 | 26,040 | 26,160 |
|---|---|---|---|---|---|---|
| Truck IDs | 1 | 99 | 99 | 99 | 99 | 99 |
| | 2 | 99 | 99 | 99 | 99 | 99 |
| | 3 | 99 | 99 | 99 | 1 | 99 |
| | 4 | 99 | 99 | 99 | 99 | 99 |
| | 5 | 99 | 1 | 99 | 99 | 99 |
| | 6 | 99 | 99 | 99 | 99 | 99 |
| | 7 | 99 | 99 | 99 | 99 | 99 |
| | 8 | 99 | 99 | 99 | 99 | 99 |
| | 12 | 99 | 99 | 6 | 99 | 99 |
| | 13 | 99 | 99 | 99 | 99 | 99 |
| | 14 | 99 | 99 | 99 | 99 | 99 |
| | 15 | 1 | 99 | 99 | 99 | 99 |
| | 16 | 99 | 99 | 99 | 2 | 99 |

| Class | Images | Instances | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|---|---|
| all | 124 | 289 | 0.966 | 0.978 | 0.99 | 0.911 |
| excavator | 119 | 137 | 0.95 | 0.975 | 0.986 | 0.912 |
| truck | 106 | 152 | 0.982 | 0.98 | 0.993 | 0.91 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hasözdemir, K.; Meral, M.; Kahraman, M.M. Revolutionizing Open-Pit Mining Fleet Management: Integrating Computer Vision and Multi-Objective Optimization for Real-Time Truck Dispatching. Appl. Sci. 2025, 15, 4603. https://doi.org/10.3390/app15094603