Evaluating the Benefits and Implementation Challenges of Digital Health Interventions for Improving Self-Efficacy and Patient Activation in Cancer Survivors: Single-Case Experimental Prospective Study

Abstract

1. Introduction

2. Materials and Methods

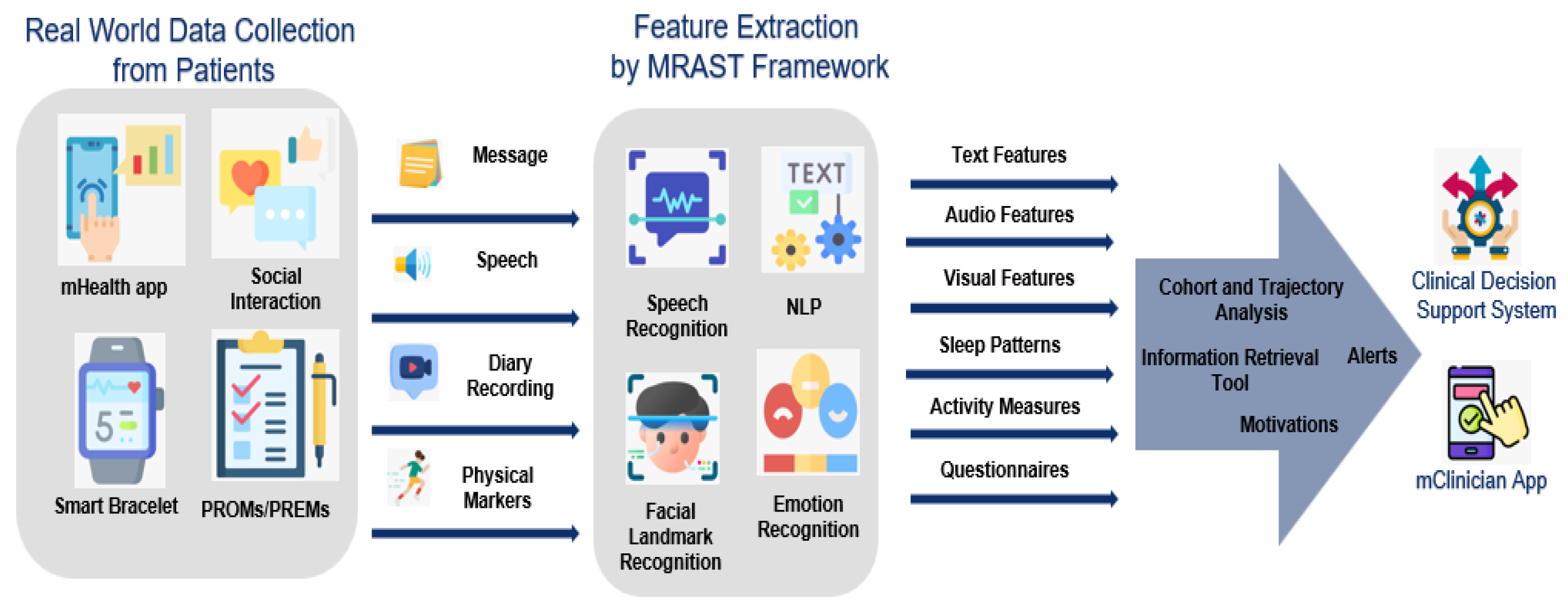

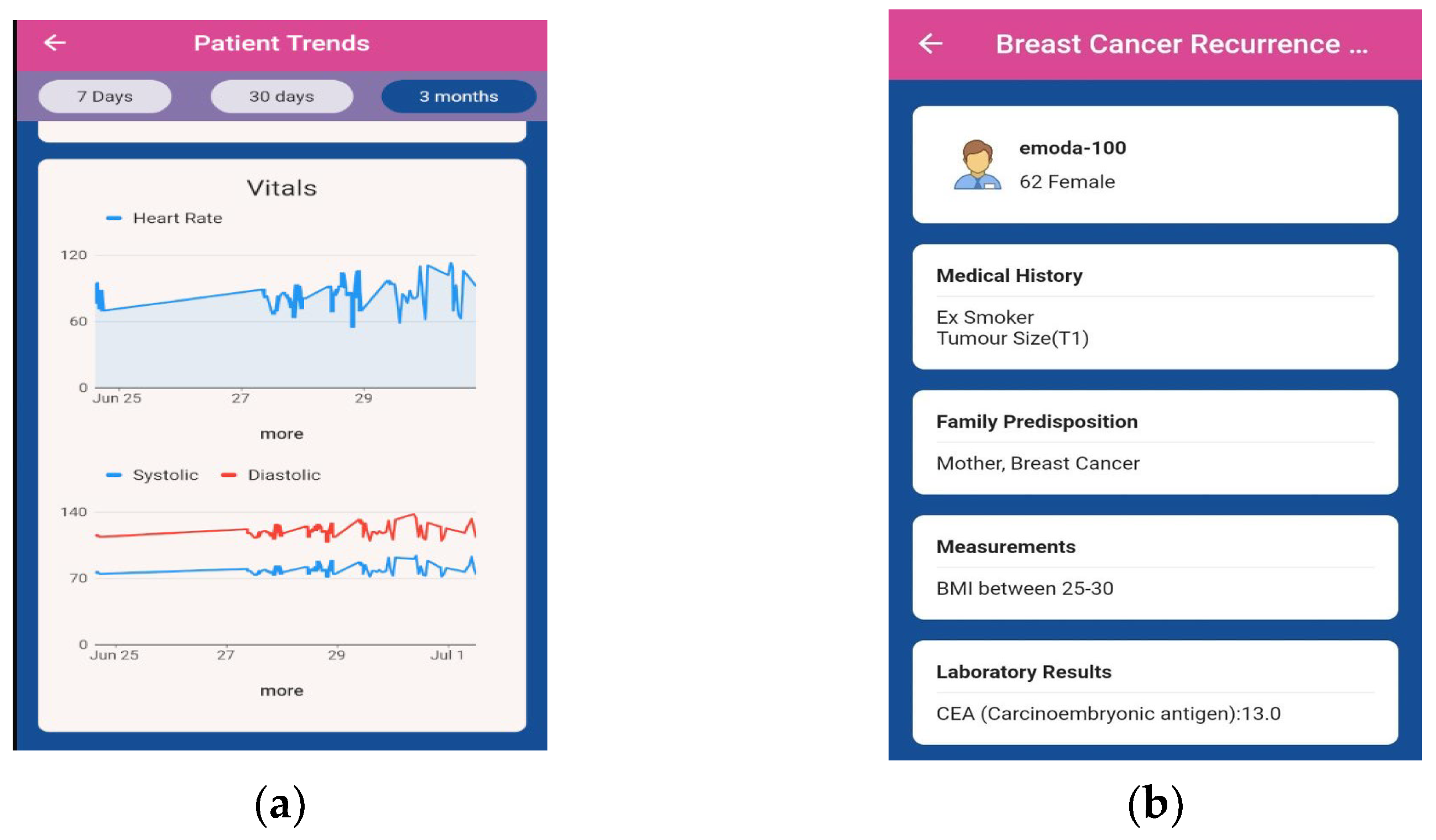

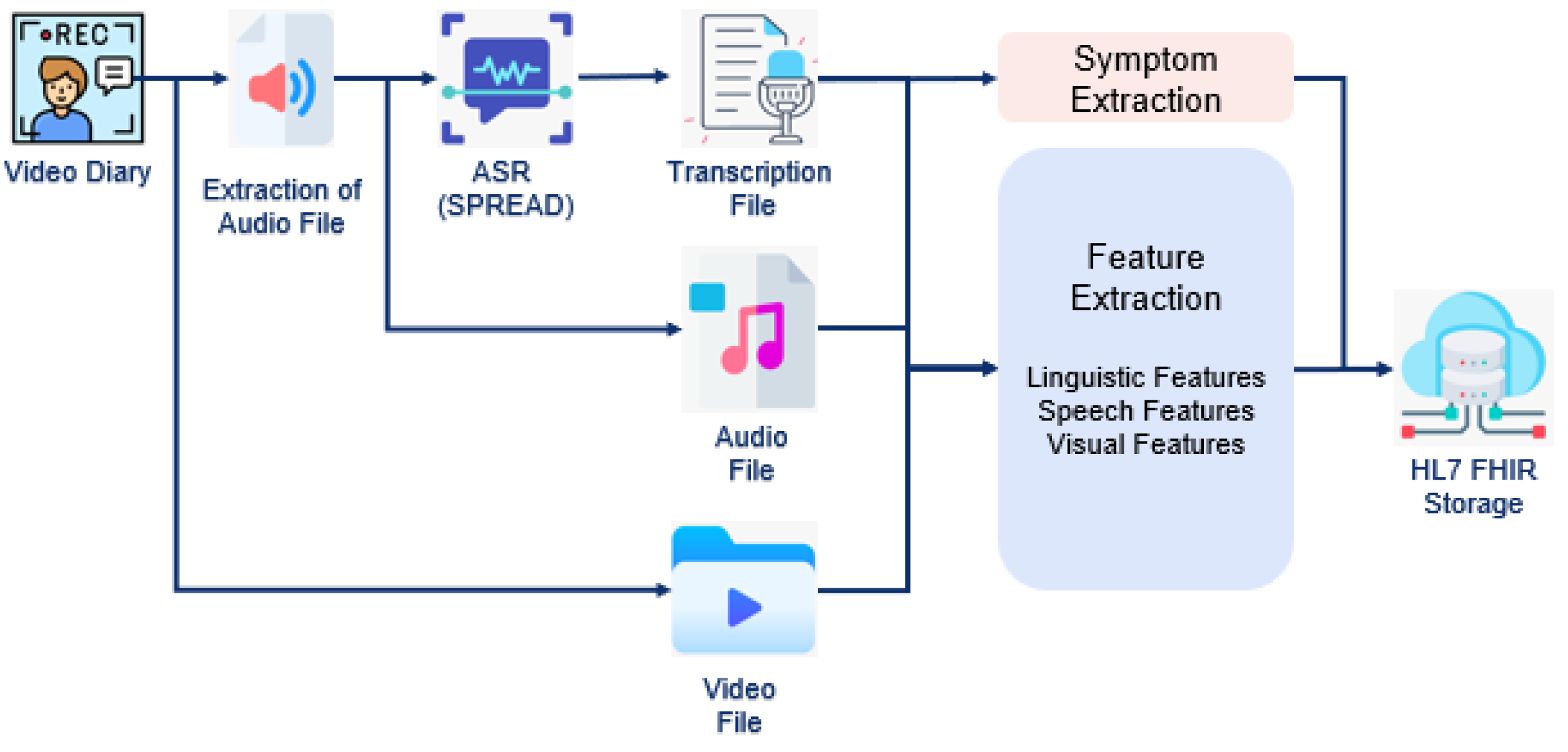

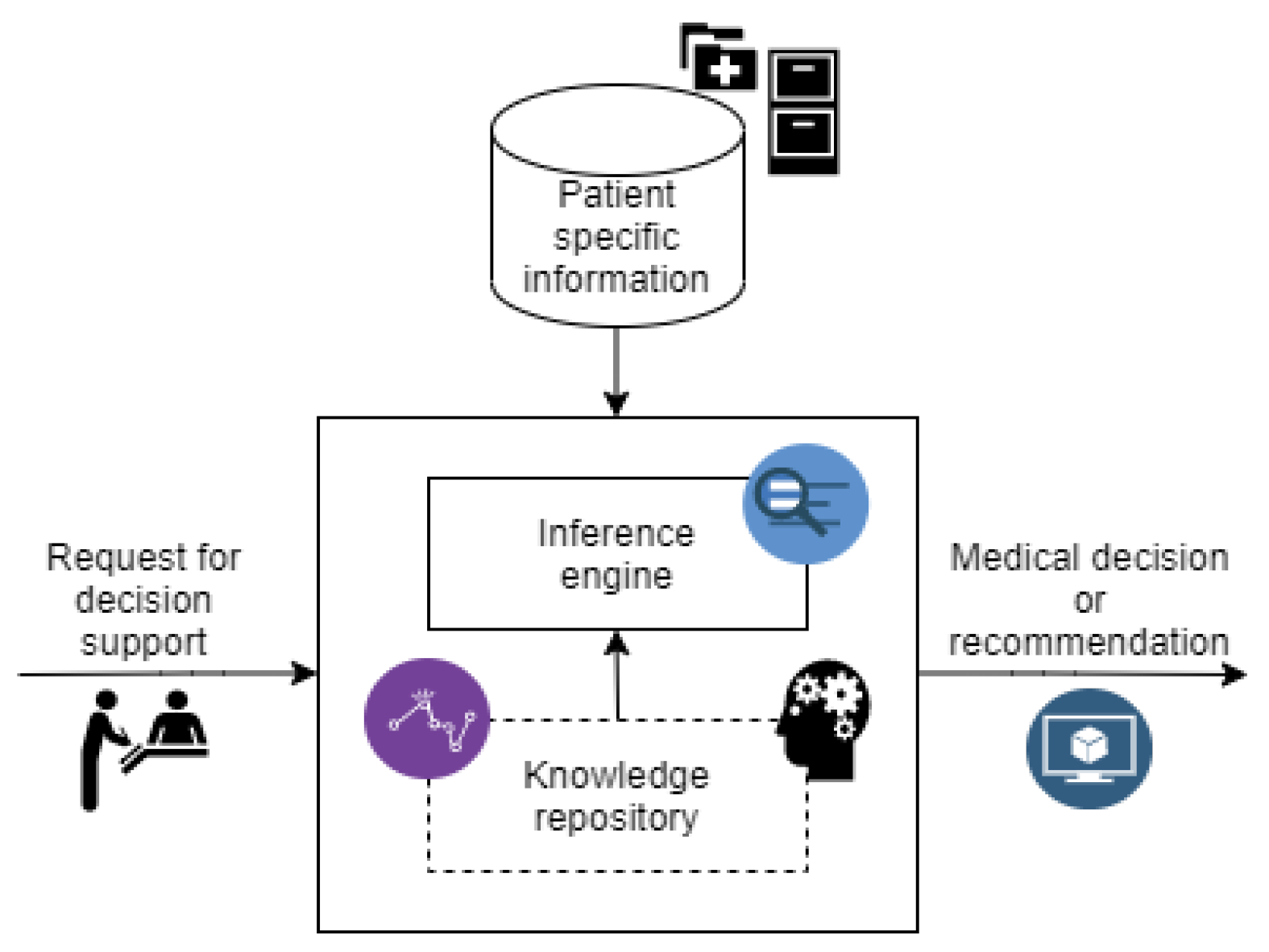

2.1. PERSIST Project

Digital Interventions

2.2. Clinical Trial

2.2.1. Trial Design

2.2.2. Participants

2.2.3. Sample Size Calculation

2.2.4. Recruitment

2.2.5. Data Collection

2.2.6. Outcomes

2.2.7. Statistical Analysis

2.2.8. Ancillary Analyses Design

2.2.9. Ethical Considerations

3. Results

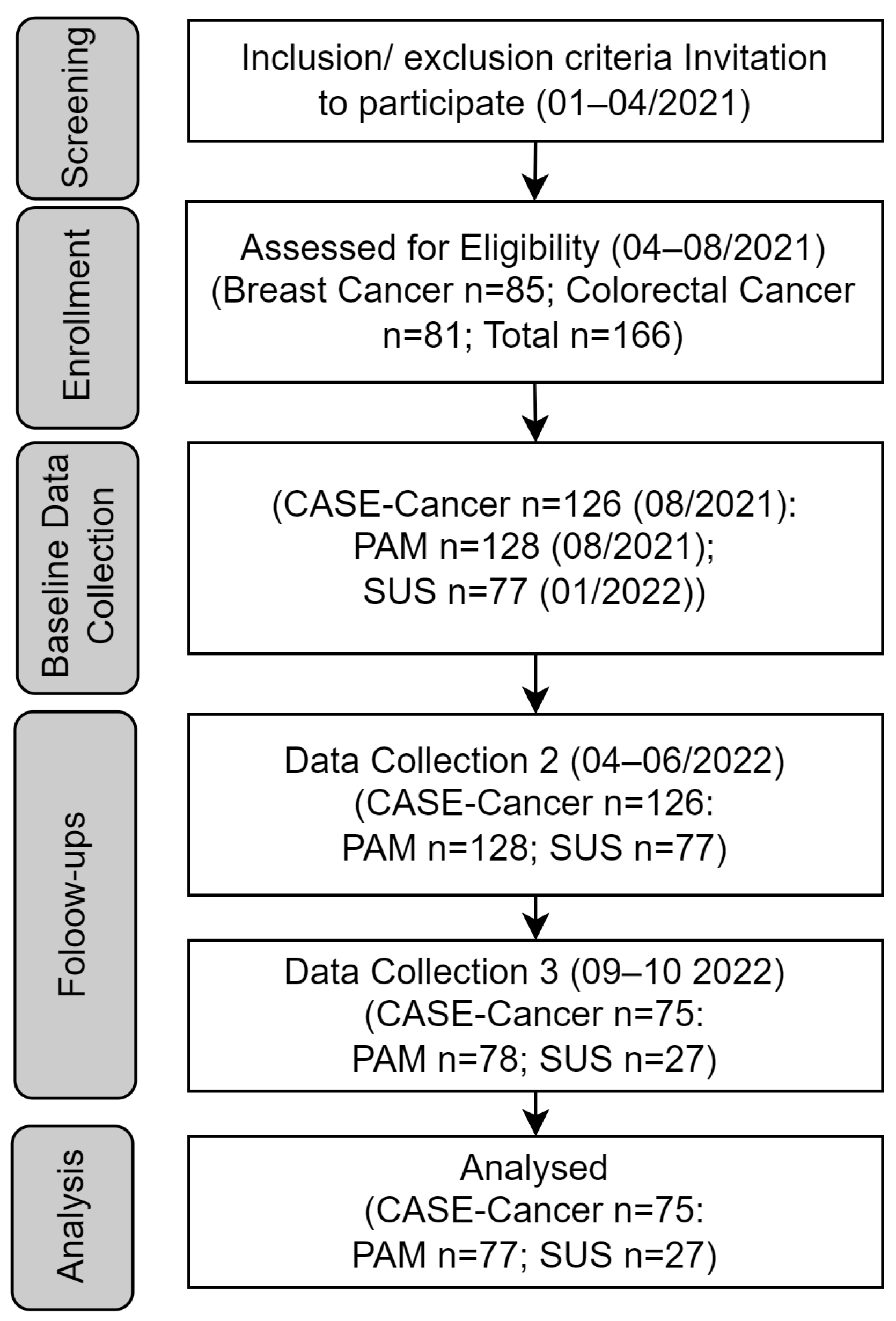

3.1. Participant Flow

3.2. Baseline Data

3.3. Outcomes and Estimation

3.3.1. Perceived Self-Efficacy of Patients by CASE-Cancer

3.3.2. Activation Levels of Patients by PAM

3.3.3. User Acceptance of mHealth App by SUS

3.4. Ancillary Analyses

3.4.1. General Feedback from Patients

3.4.2. General Feedback from Clinicians

4. Discussion

4.1. Principal Findings

4.2. Comparison to Prior Work

4.3. Strengths and Limitations

4.4. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANOVA | Analysis of variance |
| ASR | Automatic Speech Recognition |
| CASE-Cancer | Communication and Attitudinal Self-Efficacy scale for cancer |
| CDSS | Clinical Decision Support System |
| CHU | Centre Hospitalier Universitaire de Liège |
| CI | Confidence Interval |
| CTCs | Circulating Tumor Cells |
| DHI | Digital health intervention |
| HL7 FHIR | Health Level 7 Fast Healthcare Interoperability Resources |
| ICD | International Classification of Diseases |
| MRAST | Multimodal Risk Assessment and Symptom Tracking |
| PAM | Patient Activation Measure |
| PERSIST | Acronym of the project ‘Patients-centered SurvivorShIp care plan after Cancer treatments based on Big Data and Artificial Intelligence technologies’ |
| PMI | Precision Medicine Initiative |
| PREMs | Patient-Reported Experience Measures |
| PROMs | Patient-Reported Outcome Measures |
| REUH | Riga East Clinical University Hospital |
| SERGAS | Complejo Hospitalario Universitario de Ourense |
| SUS | System Usability Scale |
| UKCM | University Medical Centre Maribor |
| UL | University of Latvia |

References


| Reasons for Leaving | Times Mentioned |
|---|---|
| Personal life situation | 11 |
| Device malfunction, technical problems | 10 |
| Participation takes too much time | 9 |
| Does not like the system in general | 7 |
| Complaints about app | 6 |
| Induces stress, anxiety | 6 |
| Not specified | 4 |
| Reminds of cancer | 3 |
| No need for follow-up | 2 |
| Light at night from bracelet | 2 |
| Tired of participating | 2 |
| Patient died | 2 |
| Recurrence | 1 |

| | UL | UKCM | CHU | SERGAS | Total |
|---|---|---|---|---|---|
| Recruited Patients | 46 | 40 | 41 | 39 | 166 |
| Mean Age (years) | 54.17 | 56.3 | 54.92 | 54.85 | 55.03 |
| Std. Dev. Age (years) | 11.31 | 8.34 | 11.06 | 10.5 | 10.34 |
| Breast Cancer Cases | 24 | 20 | 21 | 20 | 85 |
| Colorectal Cancer Cases | 22 | 20 | 20 | 19 | 81 |
| Male | 7 | 11 | 7 | 12 | 37 |
| Female | 39 | 29 | 34 | 27 | 129 |
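The Total column can be cross-checked against the four site columns. The minimal Python sketch below pools the per-site means and standard deviations by adding the within-site and between-site sums of squares. It assumes that the reported site SDs are sample standard deviations (n − 1 denominator); the table itself does not say so.

```python
import math

# Site-level age summaries taken from the baseline table: (n, mean, SD).
# Assumption: each SD is a sample SD (n - 1 denominator).
SITES = {
    "UL": (46, 54.17, 11.31),
    "UKCM": (40, 56.30, 8.34),
    "CHU": (41, 54.92, 11.06),
    "SERGAS": (39, 54.85, 10.50),
}


def pooled_mean_sd(groups):
    """Combine per-group (n, mean, sample SD) into overall N, mean and sample SD.

    Total sum of squares = within-group part + between-group part,
    divided by (N - 1) to give the overall sample variance.
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / total_n
    within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    return total_n, grand_mean, math.sqrt((within + between) / (total_n - 1))


if __name__ == "__main__":
    n, mean, sd = pooled_mean_sd(list(SITES.values()))
    print(f"N = {n}, mean age = {mean:.2f}, SD = {sd:.2f}")
```

With the site values above it prints `N = 166, mean age = 55.03, SD = 10.34`, which agrees with the Total column. The small between-site term (about 100 of roughly 17,650 total squared years) shows that the four cohorts are similar in age.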

Factor 1: Understand and Participate in Care; Factor 2: Maintain Positive Attitude; Factor 3: Seek and Obtain Information.

| | Factor 1: Recruitment | Factor 1: Last Follow-Up | Factor 2: Recruitment | Factor 2: Last Follow-Up | Factor 3: Recruitment | Factor 3: Last Follow-Up |
|---|---|---|---|---|---|---|
| N | 75 | 75 | 75 | 75 | 75 | 75 |
| Mean | 13.73 | 13.75 | 13.28 | 13.17 | 13.81 | 13.55 |
| Median | 14 | 14 | 14 | 14 | 15 | 14 |
| Std. Deviation | 1.9 | 2.01 | 2.3 | 2.44 | 2.31 | 2.21 |
| Minimum | 9 | 9 | 6 | 4 | 7 | 8 |
| Maximum | 16 | 16 | 16 | 16 | 16 | 16 |
| Percentile 25 | 12 | 12 | 12 | 12 | 12 | 12 |
| Percentile 50 | 14 | 14 | 14 | 14 | 15 | 14 |
| Percentile 70 | 16 | 15 | 15 | 15 | 16 | 16 |
| p-value | 0.98 | | 0.66 | | 0.25 | |
| 95% C.I. | [−0.99 to 0.50] | | [−0.50 to 0.99] | | [−1.00 to 1.31 × 10⁻⁵] | |
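The p-values and 95% confidence intervals compare each factor score at recruitment with the same patients' score at last follow-up, but this section does not name the test. A common choice for paired ordinal scores like these is the Wilcoxon signed-rank test. The stdlib-only Python sketch below assumes that test, uses the large-sample normal approximation without continuity correction, and is an illustration rather than the authors' analysis.

```python
import math


def _average_ranks(values):
    """Rank values 1..n, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped; tied absolute differences receive average
    ranks, and the variance is reduced by sum(t^3 - t) / 48 over tie groups.
    Returns (W_plus, z, p).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 0.0, 1.0  # no change at all: nothing to test
    ranks = _average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    tie_sizes = {}
    for d in diffs:
        tie_sizes[abs(d)] = tie_sizes.get(abs(d), 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_sizes.values()) / 48
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p
```

To use it, pass each patient's factor score at recruitment and at last follow-up as two lists in the same patient order. Because the factor scores are small integers, many differences tie, which is why the tie correction matters here. SciPy's `scipy.stats.wilcoxon` offers exact and continuity-corrected variants if a dependency is acceptable.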

| Level | Recruitment (N = 78) n (%) | Last Follow-Up (N = 78) n (%) | p-Value |
|---|---|---|---|
| Level 1 | 5 (6.4) 95% C.I.: [2.2 to 14.9] | 6 (7.7) 95% C.I.: [3.0 to 16.6] | 1.0 |
| Level 2 | 15 (19.2) 95% C.I.: [11.7 to 30.8] | 16 (20.5) 95% C.I.: [12.7 to 32.3] | 1.0 |
| Level 3 | 33 (42.3) 95% C.I.: [32.6 to 55.9] | 28 (35.9) 95% C.I.: [26.4 to 49.3] | 0.49 |
| Level 4 | 25 (32.1) 95% C.I.: [22.9 to 45.2] | 28 (35.9) 95% C.I.: [26.4 to 49.3] | 0.65 |

| Time Point | Score Group | Frequency | Percent |
|---|---|---|---|
| Score at recruitment | ≤50 | 3 | 11.11 |
| | 50–70 | 10 | 37.04 |
| | 70–85 | 10 | 37.04 |
| | >85 | 4 | 14.81 |
| Score at last follow-up | ≤50 | 5 | 18.52 |
| | 50–70 | 10 | 37.04 |
| | 70–85 | 6 | 22.22 |
| | >85 | 6 | 22.22 |
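The score groups summarize System Usability Scale (SUS) totals. This section does not restate the scoring rule, so the sketch below assumes the standard scoring of the ten-item SUS (Brooke, 1996). It also assumes a hypothetical reading of the group boundaries in which each upper bound is inclusive, because the labels 50–70 and 70–85 share the value 70.

```python
def sus_score(responses):
    """Standard SUS score from ten responses on a 1-5 Likert scale.

    Odd-numbered items contribute (response - 1), even-numbered items
    contribute (5 - response); the 0-40 sum is scaled by 2.5 to 0-100.
    """
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical reading of the table's groups (assumption): upper bounds inclusive.
GROUPS = [(50, "≤50"), (70, "50–70"), (85, "70–85"), (100, ">85")]


def score_group(score):
    """Map a 0-100 SUS score to its group label."""
    for upper, label in GROUPS:
        if score <= upper:
            return label
    raise ValueError("SUS scores lie in 0-100")


def group_percentages(scores):
    """Return {label: (frequency, percent rounded to 2 decimals)}."""
    counts = {label: 0 for _, label in GROUPS}
    for s in scores:
        counts[score_group(s)] += 1
    n = len(scores)
    return {label: (c, round(100 * c / n, 2)) for label, c in counts.items()}
```

Each item contributes in whole steps and the sum is multiplied by 2.5, so every SUS total is a multiple of 2.5. As a result, the boundary assumption only affects patients who score exactly 70.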

| Question | Time Point | Mean (SD) | Median |
|---|---|---|---|
| 1st Question—How do you rate your experience with participation in the PERSIST project (in general)? | Initial | 7.41 (1.64) | 8 |
| | Middle | 7.75 (1.70) | 8 |
| | Final | 7.69 (1.53) | 8 |
| 2nd Question—Are the instructions and explanations about the project from personnel understandable? | Initial | 8.53 (1.67) | 9.5 |
| | Middle | 8.53 (1.16) | 8.5 |
| | Final | 8.47 (1.24) | 8 |
| 3rd Question—How does the participation in the PERSIST project make you feel? | Initial | 8.13 (1.86) | 8 |
| | Middle | 8.19 (1.55) | 8 |
| | Final | 8.06 (1.69) | 8 |

| Questions | Initial vs. Middle | Initial vs. Final | Middle vs. Final |
|---|---|---|---|
| 1st Question | 0.39 | 0.35 | 0.93 |
| 2nd Question | 0.87 | 0.67 | 0.55 |
| 3rd Question | 0.55 | 0.24 | 0.55 |

| Question | Time Point | Mean (SD) | Median |
|---|---|---|---|
| 1st Question—How do you rate the emotion wheel/detection in the app? From 1 (bad, confusing) to 10 (super, interesting) | Initial | 6.60 (2.40) | 7 |
| | Middle | 6.35 (2.68) | 7.5 |
| | Final | 6.85 (2.21) | 8 |
| 2nd Question—How do you rate your experience with questionnaires in the app? From 1 (bad) to 10 (excellent) | Initial | 7.60 (1.64) | 8 |
| | Middle | 7.25 (2.02) | 8 |
| | Final | 7.60 (1.79) | 8 |
| 3rd Question—How do you rate your experience with diary recording? From 1 (bad, confusing) to 10 (super, interesting) | Initial | 6.65 (2.46) | 7 |
| | Middle | 7.00 (2.75) | 8 |
| | Final | 7.00 (2.70) | 8 |
| 4th Question—How do you rate your experience with the mHealth app? From 1 (really bad) to 10 (excellent) | Initial | 7.60 (1.67) | 7.5 |
| | Middle | 7.35 (1.90) | 8 |
| | Final | 7.90 (1.55) | 8 |
| 5th Question—Are the instructions and explanations about mHealth app usage understandable? From 1 (completely confusing) to 10 (completely clear) | Initial | 8.60 (1.31) | 9 |
| | Middle | 8.60 (1.27) | 9 |
| | Final | 8.25 (1.33) | 8 |
| 6th Question—Do you follow up on your gathered data in the mHealth app? From 1 (not at all) to 10 (all the time) | Initial | 7.35 (2.89) | 8 |
| | Middle | 6.80 (2.78) | 7.5 |
| | Final | 6.90 (2.53) | 8 |
| 7th Question—Does the mHealth app affect your behavior? From 1 (not at all) to 10 (I modify my behavior after looking at the data) | Initial | 5.50 (3.05) | 5 |
| | Middle | 5.75 (2.69) | 6 |
| | Final | 6.15 (2.98) | 6 |

| Questions | Initial vs. Middle | Initial vs. Final | Middle vs. Final |
|---|---|---|---|
| 1st Question | >0.99 | 0.23 | 0.23 |
| 2nd Question | 0.49 | 0.84 | 0.62 |
| 3rd Question | 0.3 | 0.51 | 0.71 |
| 4th Question | 0.89 | 0.14 | 0.18 |
| 5th Question | 0.91 | 0.08 | 0.06 |
| 6th Question | 0.7 | 0.19 | 0.34 |
| 7th Question | 0.71 | 0.45 | 0.71 |

| Question | Time Point | Mean (SD) | Median |
|---|---|---|---|
| 1st Question—How do you rate your experience with smart bracelets? | Initial | 6.87 (2.23) | 7 |
| | Middle | 6.00 (2.10) | 6 |
| | Final | 6.93 (1.53) | 7 |
| 2nd Question—How do you rate your experience with mobile phone? | Initial | 6.80 (2.15) | 7 |
| | Middle | 7.33 (1.99) | 8 |
| | Final | 6.87 (2.10) | 7 |

| Question | Initial vs. Middle | Initial vs. Final | Middle vs. Final |
|---|---|---|---|
| 1st Question | 0.03 | >0.99 | 0.035 |
| 2nd Question | 0.09 | 0.5 | 0.28 |

| Time Point | Score Group | Frequency | Percent |
|---|---|---|---|
| Score at recruitment | ≤50 | 7 | 43.75 |
| | 50–70 | 6 | 37.50 |
| | 70–85 | 2 | 12.50 |
| | >85 | 1 | 6.25 |
| Score at last follow-up | ≤50 | 7 | 41.18 |
| | 50–70 | 7 | 41.18 |
| | 70–85 | 2 | 11.76 |
| | >85 | 1 | 5.88 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Arioz, U.; Smrke, U.; Šafran, V.; Lin, S.; Nateqi, J.; Bema, D.; Polaka, I.; Arcimovica, K.; Lescinska, A.M.; Manzo, G.; et al. Evaluating the Benefits and Implementation Challenges of Digital Health Interventions for Improving Self-Efficacy and Patient Activation in Cancer Survivors: Single-Case Experimental Prospective Study. Appl. Sci. 2025, 15, 4713. https://doi.org/10.3390/app15094713

Arioz U, Smrke U, Šafran V, Lin S, Nateqi J, Bema D, Polaka I, Arcimovica K, Lescinska AM, Manzo G, et al. Evaluating the Benefits and Implementation Challenges of Digital Health Interventions for Improving Self-Efficacy and Patient Activation in Cancer Survivors: Single-Case Experimental Prospective Study. Applied Sciences. 2025; 15(9):4713. https://doi.org/10.3390/app15094713

Chicago/Turabian Style: Arioz, Umut, Urška Smrke, Valentino Šafran, Simon Lin, Jama Nateqi, Dina Bema, Inese Polaka, Krista Arcimovica, Anna Marija Lescinska, Gaetano Manzo, et al. 2025. "Evaluating the Benefits and Implementation Challenges of Digital Health Interventions for Improving Self-Efficacy and Patient Activation in Cancer Survivors: Single-Case Experimental Prospective Study" Applied Sciences 15, no. 9: 4713. https://doi.org/10.3390/app15094713

APA Style: Arioz, U., Smrke, U., Šafran, V., Lin, S., Nateqi, J., Bema, D., Polaka, I., Arcimovica, K., Lescinska, A. M., Manzo, G., Pannatier, Y., Calvo-Almeida, S., Ravnik, M., Horvat, M., Flis, V., Montero, A. M., Calderón-Cruz, B., Arjona, J. A., Chavez, M., ... Mlakar, I. (2025). Evaluating the Benefits and Implementation Challenges of Digital Health Interventions for Improving Self-Efficacy and Patient Activation in Cancer Survivors: Single-Case Experimental Prospective Study. Applied Sciences, 15(9), 4713. https://doi.org/10.3390/app15094713