Abstract

In recent years, artificial intelligence (AI) has emerged as a valuable resource for teaching and learning, and it has also shown promise as a problem-solving tool. One AI tool that has gained attention in education is ChatGPT. This research investigates the difficulties ChatGPT faces in comprehending and responding to chemistry problems on the topic of Introduction to Material Science. Using the theoretical framework proposed by Holme et al., which comprises the categories of transfer, depth, predict/explain, problem solving, and translate, we evaluate ChatGPT’s conceptual understanding difficulties. We presented ChatGPT with a set of thirty chemistry problems in the Introduction to Material Science domain and tasked it with generating solutions. Our findings indicated that ChatGPT encountered significant conceptual knowledge difficulties across various categories, most notably in representations and depth, and that its difficulties with representations hindered effective knowledge transfer.

1. Introduction

Artificial intelligence (AI), including the popular ChatGPT, has garnered significant attention from educational researchers due to its potential in the field of education [,]. ChatGPT has been recognized for its problem-solving capabilities across various disciplines []. This raises the question of whether ChatGPT has a comprehensive understanding of the concepts in these disciplines and of the relationships between them.

In a recent study, West [] compared the performance of ChatGPT 3.5 and ChatGPT 4.0 in terms of their understanding of introductory physics. The author observed that ChatGPT 3.5 occasionally responded like an expert physicist but lacked consistency, often displaying both impressive mastery of and complete incoherence about the same topics. In contrast, ChatGPT 4.0 demonstrated several facets of true “understanding”, such as the ability to engage in metaphorical thinking and to use multiple representations. However, the author cautioned against claiming that ChatGPT 4.0 exhibited a comprehensive, global understanding of introductory physics.

In this research, we investigated the conceptual understanding of ChatGPT in the field of chemistry, specifically focusing on the topic of Introduction to Material Science. We explored how ChatGPT performed when presented with problems typically assigned to college students enrolled in an Introduction to Material Science course. Two of the main aspects of the present research, AI and conceptual knowledge, are related to sustainable education.

1.1. Theoretical Background and Literature Review

1.1.1. Artificial Intelligence and ChatGPT in Education

Gocen and Aydemir [] highlighted several benefits of AI in education, including the ability to facilitate personalized learning at one’s own pace and the potential to make informed decisions using vast amounts of data. However, they also acknowledged the concern that an overemphasis on utilitarian perspectives may overshadow humanistic values.

Dwivedi et al. [] emphasized that AI, through its ability to predict and adapt to changes, offers systematic reasoning based on inputs and learning from expected outcomes. According to the Centre for Learning, Teaching and Development [], ChatGPT serves various functions in the classroom, including its use in higher education to support instructional activities while maintaining academic integrity. These activities encompass creative writing, problem solving, research projects, group projects, and classroom debates. Moreover, Aljanabi et al. [] argued that ChatGPT has opened possibilities regarding its use in education, including as an assistant for academic writing, a search engine, an assistant in coding, a detector of security vulnerabilities, and a social media expert and agent.

Firat [] identified the following themes, listed in order of frequency, in educators’ perceptions of ChatGPT: “evolution of learning and education systems”, “changing role of educators”, “impact on assessment and evaluation”, “ethical and social considerations”, “future of work and employability”, “personalized learning”, “digital literacy and AI integration”, “AI as an extension of the human brain”, and “importance of human characteristics”.

Additionally, Dai et al. [] highlighted the potential of ChatGPT to provide personalized support in real-time, delivering tailored explanations and scaffolding to sensitively and coherently address individual learners’ difficulties.

Many educational applications of AI are available, including intelligent tutoring, technology-based learning platforms, and automated rating systems []. Research has shown that ChatGPT increases learning motivation and engagement among students []. In the study by Orrù et al. [], ChatGPT demonstrated its problem-solving capabilities by producing highly probable solutions to both practice problems and pooled problems, with its answers ranking among the top 5% of answer combinations.

1.1.2. Conceptual Knowledge of Chemistry

Cracolice et al. [] asserted that although learning algorithmic procedures can be valuable for problem solving, this should not be the sole method used to teach chemistry. Roth [] argued that meaningful conceptual knowledge requires a deeper understanding that goes beyond factual knowledge and necessitates a fresh perspective on the subject. This understanding should encompass prediction and the ability to construct explanations. Puk and Stibbards [] further emphasized that conceptual understanding involves recognizing the connections between different concepts. Additionally, Lansangan et al. [] suggested that representations can be effective in assessing knowledge in a discipline, providing alternatives to traditional assessment methods. These representations could take the form of written or drawn symbols, iconic gestures or diagrams, and spoken, gestured, written, or drawn indices.

Holme et al. [] conducted a study involving instructors who taught chemistry in higher-education settings, aiming to define conceptual understanding in chemistry. As a result, they identified five categories that define conceptual understanding:

- Transfer: the ability to apply core chemistry ideas to novel chemical situations.

- Depth: the capacity to reason about core chemistry ideas using skills that extend beyond rote memorization or algorithmic problem solving.

- Predict/explain: the capability of extending situational knowledge to predict and/or explain the behavior of chemical systems.

- Problem solving: demonstrating critical thinking and reasoning while solving problems, including those involving laboratory measurements.

- Translate: the ability to translate across different scales and representations.

These categories provide a framework to understand and evaluate conceptual understanding in the context of chemistry education. Because the chemistry problems given to ChatGPT could belong to different levels of knowledge, we also classified them according to Bloom’s taxonomy.

1.1.3. Bloom’s Knowledge Taxonomy

Researchers have used Bloom’s taxonomy of knowledge since it was first proposed. The taxonomy consists of six levels: remembering, understanding, application, analysis, synthesis, and evaluation. Educational researchers have used this taxonomy to measure the level of students’ knowledge in specific contexts. Prasad [] discussed how Bloom’s taxonomy could be used to assess students’ critical thinking. A study of textbook questions using Bloom’s taxonomy found that about 40% of them emphasized higher-order thinking []. Daher and Sleem [] used Bloom’s taxonomy to compare the knowledge levels attained through traditional, video-based, and 360-degree video-based learning in a social studies classroom. They found that students who learned in the video and 360-degree video contexts scored significantly higher at the synthesis knowledge level than students who learned in a traditional context.

1.2. Research Goals and Rationale

AI, including ChatGPT, has been recognized as a valuable tool to support students’ learning in the field of science, particularly in chemistry [,]. However, for AI to effectively support learning, it needs to possess conceptual knowledge of scientific concepts and their relationships, specifically within the domain of chemistry. Previous research has examined various aspects related to conceptual knowledge in chemistry.

In this research, we aimed to investigate the conceptual knowledge of ChatGPT in chemistry, specifically focusing on the topic of Introduction to Material Science. To guide our investigation, we adopted the theoretical framework proposed by Holme et al. [], which identifies key components of conceptual knowledge in chemistry: transfer, depth, predict/explain, problem solving, and translate.

The present study contributes to the effective future implementation of AI and ChatGPT in education, particularly in the sciences. Furthermore, the findings of this research could inform the development of AI tools that exhibit fewer difficulties in solving scientific problems and possess enhanced conceptual knowledge relevant to the sciences. These arguments link the present research to sustainable education, as better-performing generative AI tools can contribute to the prevalence of sustainable education.

1.3. Research Question

What are the conceptual knowledge difficulties encountered by ChatGPT when solving chemistry problems pertaining to the topic of Introduction to Material Science?

2. Methodology

2.1. Research Context

The research was conducted within the framework of an Introduction to Material Science course, which serves as an introductory course to fundamental topics and concepts in the field of chemistry. The course encompasses essential elements, including particles, atoms, molecules, ions, chemical bonds, the periodic table, and the chemical and physical properties of substances. Furthermore, it covers basic chemical reactions, including their definitions, properties, and the principles of chemical kinetics. By participating in this course, students acquire a solid foundation in the fundamental principles of chemistry and develop a comprehensive understanding of its key components.

2.2. Data Collection Tools

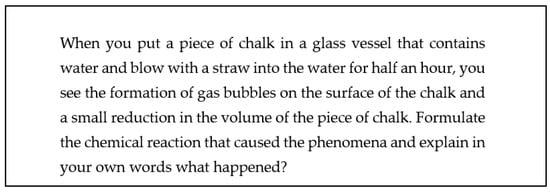

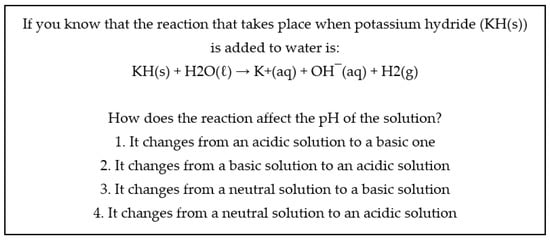

We gathered responses from ChatGPT on a set of chemistry problems pertaining to the topic of Introduction to Material Science. The problem set consisted of two types: open-ended problems, as exemplified by Figure 1; and multiple-choice problems, as exemplified by Figure 2.

Figure 1.

An open-ended chemistry problem.

Figure 2.

A multiple-choice chemistry problem.

2.3. Data Analysis Tools

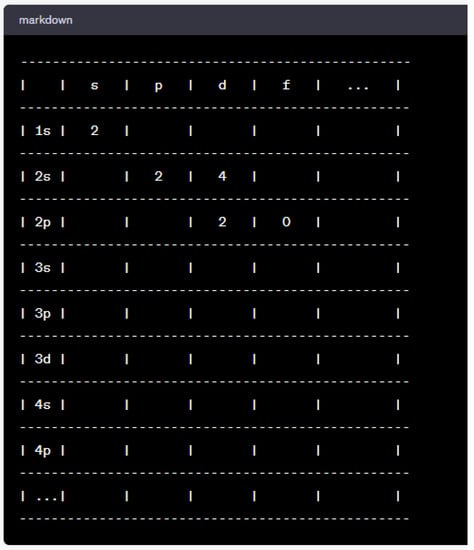

We conducted an analysis of ChatGPT’s responses to the chemistry problems using the theoretical framework proposed by Holme et al. []. Specifically, we examined the conceptual understanding difficulties faced by ChatGPT in relation to the categories outlined in the framework: transfer, depth, predict/explain, problem solving, and translate. To provide a comprehensive overview, Table 1 presents the themes associated with each category, along with the corresponding difficulties observed during the analysis.

Table 1.

Themes of the conceptual knowledge categories.

2.4. Validity and Reliability

We conducted an analysis of 30 chemistry problems pertaining to the topic of Introduction to Material Science, consisting of 15 open-ended problems and 15 multiple-choice problems. From this dataset, we analyzed the responses generated by ChatGPT for 5 open-ended problems and 8 multiple-choice problems. Our analysis did not reveal any new types of difficulties related to conceptual understanding. This suggests that we reached saturation concerning the conceptual understanding categories and their properties [,].
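
The saturation argument above can be made concrete by recording, for each successively analyzed response, how many difficulty codes it contributes that had not been seen in earlier responses; saturation is suggested once later responses add no new codes. The following Python sketch assumes that each response has already been coded as a set of category labels. The function names and the six coded responses are hypothetical illustrations, not the study’s data or procedure.

```python
from typing import Iterable, List, Set


def new_codes_per_item(coded_items: Iterable[Set[str]]) -> List[int]:
    """For each coded response, count the codes not seen in earlier responses."""
    seen: Set[str] = set()
    counts: List[int] = []
    for codes in coded_items:
        new = codes - seen          # codes appearing for the first time
        counts.append(len(new))
        seen |= new
    return counts


def last_item_with_new_code(coded_items: Iterable[Set[str]]) -> int:
    """1-based position of the last response that introduced a new code (0 if none)."""
    last = 0
    for position, count in enumerate(new_codes_per_item(coded_items), start=1):
        if count > 0:
            last = position
    return last


# Hypothetical codes for six responses, in the order they were analyzed.
responses = [
    {"depth"},
    set(),
    {"depth", "problem solving"},
    {"translate"},
    {"depth"},
    {"problem solving"},
]
print(new_codes_per_item(responses))       # [1, 0, 1, 1, 0, 0]
print(last_item_with_new_code(responses))  # 4
```

Because the tally depends on the order in which responses are analyzed, it can support, but not replace, the coders’ judgment that no new types of difficulty are emerging.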

To ensure a reliable data analysis, two experienced coders used the adopted conceptual framework to code ChatGPT’s answers, identifying sentences indicative of conceptual knowledge themes. Cohen’s kappa coefficients for inter-coder agreement ranged from 0.89 to 0.91 across the conceptual understanding categories, indicating a high level of agreement. These results further support the reliability of our data analysis.
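
Cohen’s kappa compares the observed agreement between two coders, p_o, with the agreement expected by chance from each coder’s label frequencies, p_e, as κ = (p_o − p_e)/(1 − p_e). The minimal Python sketch below assumes that each coder assigns exactly one label to every coded sentence; the labels in the example are invented for illustration and are not the study’s codes.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders who each gave one label to the same items."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must label the same non-empty list of items")
    n = len(coder_a)
    # Observed agreement: share of items on which both coders chose the same label.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: products of the coders' marginal label proportions, summed.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both coders used one and the same label for every item
    return (p_o - p_e) / (1 - p_e)


# Invented labels for four coded sentences.
coder_a = ["depth", "depth", "transfer", "transfer"]
coder_b = ["depth", "transfer", "transfer", "transfer"]
print(cohens_kappa(coder_a, coder_b))  # 0.5
```

For per-category coefficients like those reported above, each category can be coded as present or absent for every sentence and the two binary label lists passed to the same function.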

3. Results

In this section, we present the solutions provided by ChatGPT for various chemistry problems. Throughout the analysis, we highlight the difficulties ChatGPT encountered in relation to the five aspects of conceptual understanding outlined by Holme et al. [].

Table 2 provides detailed information about the problem types, Bloom’s level of the given chemical problems, and the specific conceptual difficulties encountered by the generative AI.

Table 2.

Problem types, Bloom’s level of the given problems, and the specific conceptual difficulties encountered by the generative AI.

Table 2 shows that, generally speaking, ChatGPT encountered no conceptual difficulties in solving remembering problems; it encountered depth difficulties of the understanding type when solving understanding, application, and analysis problems; it encountered transfer difficulties when solving synthesis problems; and it encountered translation difficulties when solving evaluation problems.

The following section provides a comprehensive description of the various conceptual difficulties encountered by ChatGPT.

3.1. Difficulties Related to the Depth Issue

The depth category comprises two sub-categories that pertain to the conceptual challenges encountered by ChatGPT: awareness of chemical rules and understanding the nature of specific compounds. In the following sections, we provide a detailed description of each sub-category.

3.1.1. First Depth Issue: Awareness of the Chemical Rules

Table 3 features a problem in which ChatGPT’s response exhibited a difficulty associated with the awareness of chemical rules.

Table 3.

ChatGPT’s difficulty with awareness of chemical rules.

Based on its explanation, ChatGPT appeared to lack awareness of the rules governing halogen displacement reactions, in which a halogen displaces a halide ion from solution. This highlighted a depth-related issue regarding ChatGPT’s understanding of the chemical rules associated with such reactions. Specifically, ChatGPT did not take into account that the reactivity of halogens decreases in the order F2 > Cl2 > Br2 > I2, so a more reactive halogen displaces the halide of a less reactive one, but not vice versa.
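
The rule ChatGPT did not apply reduces to a comparison of positions in the reactivity series. The Python sketch below encodes only the series F2 > Cl2 > Br2 > I2 given above; the names are hypothetical, and the sketch deliberately ignores complications such as fluorine reacting with water in aqueous solution.

```python
from typing import Dict

# Halogens from most to least reactive, as stated in the text: F2 > Cl2 > Br2 > I2.
REACTIVITY_ORDER = ["F2", "Cl2", "Br2", "I2"]
# Halide ion formed by each halogen (charge written as a trailing "-").
HALIDE_OF: Dict[str, str] = {"F2": "F-", "Cl2": "Cl-", "Br2": "Br-", "I2": "I-"}
HALOGEN_OF: Dict[str, str] = {ion: x2 for x2, ion in HALIDE_OF.items()}


def displaces(halogen: str, halide: str) -> bool:
    """True if `halogen` is expected to displace `halide`, e.g. Cl2 + 2Br- -> 2Cl- + Br2."""
    if halogen not in HALIDE_OF or halide not in HALOGEN_OF:
        raise ValueError(f"unknown species: {halogen!r}, {halide!r}")
    # A smaller index means a more reactive halogen; identical species do not react.
    return REACTIVITY_ORDER.index(halogen) < REACTIVITY_ORDER.index(HALOGEN_OF[halide])


print(displaces("Cl2", "Br-"))  # True
print(displaces("I2", "Cl-"))   # False
```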

3.1.2. Second Depth Issue: Awareness of the Nature of a Specific Compound

Table 4 presents a problem for which ChatGPT’s answer exhibited a difficulty related to awareness of the nature of a specific compound.

Table 4.

ChatGPT’s difficulty with awareness of the nature of a specific compound.

ChatGPT appeared to treat KH as acidic, which is a misunderstanding. Although many hydrogen-containing inorganic compounds (for example, HCl) are acidic, potassium hydride (KH) is an ionic hydride: its H− ion reacts with water to form hydrogen gas and OH− ions, so KH behaves as a base when added to water.
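
The basic behavior of KH in water can be written as follows. These are standard equations for an ionic hydride, given here for reference; they are not taken from ChatGPT’s response.

```latex
\begin{aligned}
\mathrm{KH(s) + H_2O(l)} &\rightarrow \mathrm{KOH(aq) + H_2(g)} \\
\mathrm{H^- + H_2O(l)} &\rightarrow \mathrm{OH^-(aq) + H_2(g)}
\end{aligned}
```

The OH− produced in the second (net ionic) equation is what makes the resulting solution basic.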

3.2. Difficulties Related to the Problem-Solving Issue

Table 5 presents a problem for which ChatGPT’s answer exhibited a difficulty related to the problem-solving category.

Table 5.

ChatGPT’s difficulty with problem solving.

During the problem-solving process, ChatGPT demonstrated correct reasoning. However, there were errors in the arithmetic computations, leading to an incorrect answer.

When given the same problem again after a 15-minute interval, ChatGPT provided another incorrect answer. This time, the errors stemmed from miscalculating q and the number of moles of the product.

When informed of its mistake in calculating q, ChatGPT responded politely:

“I apologize for the mistake in my previous response. Thank you for bringing it to my attention. Here is the corrected calculation”. The answer again included a mistake in the calculation. We requested ChatGPT to reconsider the calculation: “are you sure in calculating q: q = 0.3 kg × 4.18 J/(g·°C) × 2.2 °C = 2.90 kJ?”. ChatGPT replied: “I apologize again for the mistake. Thank you for bringing it to my attention. The correct calculation for q is…”

In this instance, ChatGPT made an arithmetic error: instead of the correct value of 2.7588 kJ (300 g × 4.18 J/(g·°C) × 2.2 °C = 2758.8 J), it obtained 2.90 kJ.

ChatGPT acknowledged the repeated miscalculation and apologized, stating: “I apologize for any confusion my previous mistake may have caused”.

In this case, ChatGPT’s difficulty concerned problem solving, specifically the critical evaluation of its own result. In the expression (200 g + 100 g) × 4.18 J/(g·K) × 2.2 °C, rounding each factor down gives 300 × 4 × 2 = 2400, so q must be greater than 2400 J. Therefore, ChatGPT should have recognized that the previously provided answer was illogical.
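
The arithmetic, together with the estimate check described above, can be reproduced in a few lines. This Python sketch uses only the values quoted in this section (200 g + 100 g, 4.18 J/(g·°C), 2.2 °C, and ChatGPT’s 2.90 kJ); the function name is hypothetical.

```python
def heat_absorbed(mass_g: float, specific_heat: float, delta_t: float) -> float:
    """q = m * c * dT with m in g, c in J/(g·°C), dT in °C; returns joules."""
    return mass_g * specific_heat * delta_t


mass_g = 200.0 + 100.0     # combined mass from the problem, in g
specific_heat = 4.18       # J/(g·°C), as used in the text
delta_t = 2.2              # temperature change, in °C

q_j = heat_absorbed(mass_g, specific_heat, delta_t)
print(f"q = {q_j:.1f} J = {q_j / 1000:.4f} kJ")   # q = 2758.8 J = 2.7588 kJ

# Estimate check: rounding every factor down (300 * 4 * 2) gives a floor for q.
lower_bound_j = 300 * 4 * 2
print(q_j > lower_bound_j)                          # True

# How far ChatGPT's reported 2.90 kJ is from the correct product.
chatgpt_q_j = 2.90e3
relative_error = abs(chatgpt_q_j - q_j) / q_j
print(f"relative error: {relative_error:.1%}")      # relative error: 5.1%
```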

3.3. Difficulties Related to the Explanation Issue

Table 6 presents a problem for which ChatGPT’s answer exhibited a difficulty related to the explanation category.

Table 6.

ChatGPT’s difficulty with explanation.

In the given solution, the chemical reaction balancing was correct. However, the conclusion drawn about the type of solution produced and the accompanying explanation were both incorrect. It should be noted that the dissociation of Cu(NO3)2 does not yield HNO3.

The correct answer was that the solution should shift from basic to neutral as a result of the chemical reaction. Cu(OH)2(s) forms a precipitate, and because the Ksp of Cu(OH)2 at 25 °C is 1.6 × 10⁻¹⁹, only a negligible amount of OH− ions remains in solution. Consequently, the solution becomes neutral.

3.4. Difficulties Related to the Translation Issue

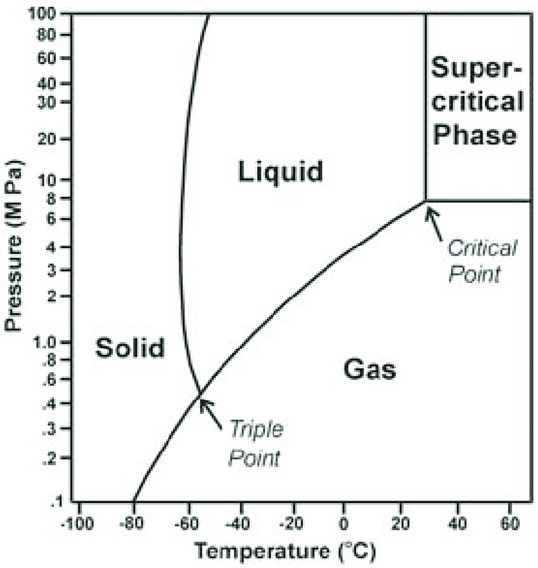

ChatGPT encountered challenges in the translation category, particularly when the problem involved an image. Table 7 presents a problem where ChatGPT’s response exhibited a difficulty associated with the translation category.

Table 7.

ChatGPT’s difficulty related to translation.

The last paragraph of the solution is not correct, as ChatGPT did not have the capability to read or process images. When asked, ChatGPT informed us that it was unable to perform image analyses due to its limitations. Therefore, ChatGPT faced difficulties in translating from a graphical representation to a symbolic representation.

3.5. Difficulties Related to the Transfer Issue

Table 8 presents a problem where ChatGPT’s response exhibited a difficulty associated with the awareness of the transfer from the context of a chemical compound to that of an everyday compound such as chalk.

Table 8.

ChatGPT’s difficulty related to awareness of the transfer from a chemical compound to chalk.

The above answer failed to mention the decomposition of the bicarbonate as the source of carbon dioxide released on the surface of the chalk. Instead of acknowledging this specific process, which would have shown ChatGPT’s ability to transfer from the chemical compound context to the chalk context, the generative AI simply stated that carbon dioxide is a byproduct.

4. Discussion

Researchers have, since the advent of the computer, been interested in the potential of technology in various aspects of education [,,]. Recently, educational researchers have shown increasing interest in the potential of AI, including ChatGPT, for teaching and learning [,,,,]. The objective of this study was to examine the conceptual knowledge difficulties encountered by ChatGPT when addressing chemistry problems within a specific topic.

ChatGPT encountered no conceptual difficulties in solving remembering problems, possibly because such problems require only retrieving content that ChatGPT had been supplied with. It encountered depth difficulties of the understanding type when solving understanding, application, and analysis problems. ChatGPT encountered transfer difficulties when solving synthesis problems and translation difficulties when solving evaluation problems. These latter two types of difficulty may have been due to the textual nature of ChatGPT, as both depend on the ability to translate from one representation into another [].

The findings revealed that although ChatGPT did not face conceptual difficulties in 13 out of 30 chemistry problems, it faced challenges across all five categories of conceptual knowledge, with a higher prevalence of difficulties observed in the depth category. According to Holme et al. [], the depth category encompasses the ability to reason about chemistry concepts beyond simple memorization or algorithmic problem-solving. In our research, these challenges manifested as difficulties in understanding chemical rules, comprehending the nature of specific compounds, and grasping the reasons behind chemical phenomena. These characteristics can lead to an unclear understanding of the subject matter [], highlighting the need to enhance the functioning of AI, including ChatGPT, to improve its capability to address scientific questions and minimize misconceptions.

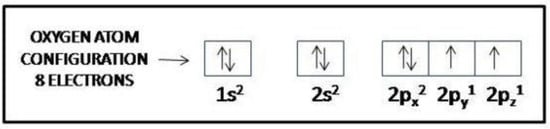

Regarding representations, and in addition to the argument above, ChatGPT, as a text-based generative AI, can encounter difficulties because it cannot directly generate or display visual figures. To illustrate this, we engaged ChatGPT in a conversation about representations in chemistry. It mentioned various types of representations, including chemical formulas, structural formulas, ball-and-stick models, space-filling models, Lewis dot structures, electron configurations, reaction equations, and the periodic table. However, when we specifically requested a figure illustrating electron configurations, ChatGPT acknowledged its limitations and apologized for its inability to generate or display visual figures. Nevertheless, it offered to describe the concept and provide an example: the electron configuration of oxygen (O), represented as 1s² 2s² 2p⁴.

Upon comparing this textual representation with the one in Figure 3, it became evident that ChatGPT would benefit from advancing beyond a purely text-based approach, incorporating symbol and figure-based capabilities to enhance its understanding and communication of chemistry concepts.

Figure 3.

The electron configuration for oxygen.

When we asked ChatGPT once again to provide a figure illustrating the electron configuration of oxygen, it presented the representation shown in Figure 4. This suggested that ChatGPT had the potential to generate visual representations and that its capabilities in this regard had improved.

Figure 4.

Electron configuration of oxygen provided by ChatGPT.

When prompted to provide contexts for using the multi-line representation of electron configurations, ChatGPT identified three scenarios: visualizing energy levels, comparing electron configurations, and identifying valence electrons.

To address the limitations of ChatGPT’s text-based capabilities, researchers have proposed the use of converters that can transform text into graphical representations, as suggested by Jiang et al. [].
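
As a small illustration of the kind of text-to-diagram conversion discussed above, the Python sketch below turns an atomic number into both the single-line configuration (1s² 2s² 2p⁴ for oxygen) and a multi-line orbital-box diagram filled according to Hund’s rule. It is a hypothetical example, not Graphologue or any feature of ChatGPT, and it assumes simple Aufbau filling, which holds for the elements Z = 1 to 20 covered here.

```python
from typing import List, Tuple

# Subshells in Aufbau filling order with their capacities; enough for Z = 1..20.
SUBSHELLS = [("1s", 2), ("2s", 2), ("2p", 6), ("3s", 2), ("3p", 6), ("4s", 2)]
SUPERSCRIPT = str.maketrans("0123456789", "⁰¹²³⁴⁵⁶⁷⁸⁹")
ORBITALS_PER_SUBSHELL = {"s": 1, "p": 3}


def configuration(z: int) -> List[Tuple[str, int]]:
    """Ground-state (subshell, electron count) pairs for atomic number z."""
    if not 1 <= z <= 20:
        raise ValueError("this sketch only covers Z = 1..20")
    filled: List[Tuple[str, int]] = []
    remaining = z
    for name, capacity in SUBSHELLS:
        if remaining == 0:
            break
        electrons = min(capacity, remaining)
        filled.append((name, electrons))
        remaining -= electrons
    return filled


def as_text(config: List[Tuple[str, int]]) -> str:
    """Single-line notation, e.g. '1s² 2s² 2p⁴'."""
    return " ".join(name + str(count).translate(SUPERSCRIPT) for name, count in config)


def orbital_boxes(name: str, electrons: int) -> str:
    """One line of an orbital-box diagram, filled according to Hund's rule."""
    n_orbitals = ORBITALS_PER_SUBSHELL[name[-1]]
    singly = min(electrons, n_orbitals)  # one electron in each orbital first
    paired = electrons - singly          # remaining electrons pair up from the left
    boxes = []
    for i in range(n_orbitals):
        if i < paired:
            boxes.append("[↑↓]")
        elif i < singly:
            boxes.append("[↑ ]")
        else:
            boxes.append("[  ]")
    return f"{name}: " + "".join(boxes)


oxygen = configuration(8)
print(as_text(oxygen))  # 1s² 2s² 2p⁴
for name, count in oxygen:
    print(orbital_boxes(name, count))
# 1s: [↑↓]
# 2s: [↑↓]
# 2p: [↑↓][↑ ][↑ ]
```

The multi-line output makes the two unpaired 2p electrons of oxygen visible at a glance, which the single-line notation leaves implicit.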

In previous studies, there have been mixed findings regarding ChatGPT’s performance as a learner. Huh [] demonstrated that ChatGPT’s performance in parasitology fell short compared with that of a Korean student, while Juhi et al. [] found that ChatGPT’s predictions and explanations for drug–drug interactions were only partially accurate. On the other hand, Kung et al. conducted a study in which ChatGPT successfully completed the United States Medical Licensing Examination without human assistance.

Although the present study did not directly compare ChatGPT’s performance with that of college students in solving chemistry problems, it identified the conceptual knowledge difficulties that ChatGPT encountered when answering these problems. These difficulties could be attributed to ChatGPT’s nature as generative AI as it had not previously been exposed to the specific chemistry problems presented. The future development of ChatGPT is expected to enhance its ability to handle new scientific problems, including those in the field of chemistry.

5. Conclusions

ChatGPT was asked to solve 30 chemistry problems and solved 13 of them (43.3%) without showing conceptual difficulties. This suggests that ChatGPT could be a promising companion for learners solving chemistry problems. Thus, it is recommended that ChatGPT be integrated into the chemistry classroom to support students’ learning.

ChatGPT was also asked about its abilities in matters related to conceptual understanding in chemistry; its answers revealed that it could work with some representations, but not with sound-based ones. New generative AI tools have the potential to work with such representations, which could lead to deeper knowledge of various disciplines, including chemistry.

The aim of this research was to examine the conceptual understanding challenges faced by ChatGPT when responding to chemistry questions on the topic of Introduction to Material Science. The findings revealed that ChatGPT encountered difficulties across all aspects of conceptual understanding, with particular challenges observed in the depth and translation categories. As ChatGPT operates as a text-based generative AI, we recommend exploring the conversion of text models into pictorial or graphical models using tools such as Graphologue, as suggested by Jiang et al. []. Implementing such recommendations could empower learners in the era of generative AI [].

The difficulties encountered by ChatGPT in comprehending and responding to chemistry problems within the Introduction to Material Science domain highlight the limitations of AI tools in complex subject areas. Whilst ChatGPT shows promise as a teaching and learning resource, improvements are needed to enhance its conceptual understanding and analytical abilities, especially in representations and problem-solving skills. More advanced algorithms and training data encompassing a wider range of chemistry problems may be necessary to address these limitations.

This research sheds light on the conceptual understanding difficulties ChatGPT faced in the chemistry discipline, specifically in an Introduction to Material Science course. The tool encountered challenges in comprehending complex representations and generating in-depth, explainable solutions. These findings emphasize the need for ongoing research and development to refine AI tools like ChatGPT and enhance their capabilities to maximize their potential as adequate educational resources in chemistry education.

In summary, our findings revealed that ChatGPT encountered significant conceptual knowledge difficulties across various categories, with a particular emphasis on representations and depth of understanding. Difficulties in representations hindered the effective transfer of knowledge. ChatGPT struggled to comprehend and interpret complex chemical structures or formulas, resulting in inaccurate or incomplete solutions. This limitation was most noticeable when problems required a high level of depth and critical thinking, suggesting a need for further improvements in ChatGPT’s analytical capabilities. Furthermore, ChatGPT faced challenges in predicting and explaining the answers it provided. The tool often generated solutions that lacked clear explanations or reasoning, making it difficult for users to understand the underlying principles behind the solutions. This limitation may hinder effective learning and reduce the tool’s potential as an educational resource. Developers of generative AI could benefit from carefully reading epistemic studies on the role of transformers and knowledge, which could help them to develop more complex generative AI tools that exhibit fewer conceptual understanding difficulties.

Author Contributions

Conceptualization, A.R.; methodology, W.D.; software, A.R.; formal analysis, W.D., H.D. and A.R.; investigation, H.D. and A.R.; data curation, A.R.; writing—original draft preparation, W.D., H.D. and A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gocen, A.; Aydemir, F. Artificial Intelligence in Education and Schools. Res. Educ. Media 2020, 12, 13–21. [Google Scholar] [CrossRef]

- Dai, Y.; Liu, A.; Lim, C.P. Reconceptualizing ChatGPT and generative AI as a student-driven innovation in higher education. In Proceedings of the 33rd CIRP Design Conference, Sydney, Australia, 17–19 May 2023. [Google Scholar] [CrossRef]

- The Centre for Learning, Teaching, and Development. ChatGPT for Learning and Teaching; University of the Witwatersrand: Johannesburg, South Africa, 2023. [Google Scholar]

- West, C.G. AI and the FCI: Can ChatGPT project an understanding of introductory physics? arXiv 2023, arXiv:2303.01067. [Google Scholar]

- Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Wright, R. “So what if ChatGPT wrote it?” Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice and policy. Int. J. Inf. Manag. 2023, 71, 102642. [Google Scholar] [CrossRef]

- Aljanabi, M.; Ghazi, M.; Ali, A.H.; Abed, S.A. ChatGpt: Open Possibilities. Iraqi J. Comput. Sci. Math. 2023, 4, 62–64. [Google Scholar] [CrossRef]

- Firat, M. What ChatGPT means for universities: Perceptions of scholars and students. J. Appl. Learn. Teach. 2023, 6, 1. [Google Scholar] [CrossRef]

- Adiguzel, T.; Kaya, M.H.; Cansu, F.K. Revolutionizing education with AI: Exploring the transformative potential of ChatGPT. Contemp. Educ. Technol. 2023, 15, ep429. [Google Scholar] [CrossRef] [PubMed]

- Muñoz, S.A.; Gayoso, G.G.; Huambo, A.C.; Tapia, R.D.; Incaluque, J.L.; Aguila, O.E.; Cajamarca, J.C.; Acevedo, J.E.; Rivera, H.V.; Arias-Gonzáles, J.L. Examining the Impacts of ChatGPT on Student Motivation and Engagement. Soc. Space 2023, 23, 1–27. [Google Scholar]

- Orrù, G.; Piarulli, A.; Conversano, C.; Gemignani, A. Human-like problem-solving abilities in large language models using ChatGPT. Front. Artif. Intell. 2023, 6, 1199350. [Google Scholar] [CrossRef]

- Cracolice, M.S.; Deming, J.C.; Ehlert, B. Concept Learning versus Problem Solving: A Cognitive Difference. J. Chem. Educ. 2008, 85, 873. [Google Scholar] [CrossRef]

- Roth, K.J. Developing Meaningful Conceptual Understanding in Science. In Dimensions of Thinking and Cognitive Instruction; Jones, B.F., Idol, L., Eds.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1990; pp. 139–175. [Google Scholar]

- Puk, T.; Stibbards, A. Growth in Ecological Concept Development and Conceptual Understanding in Teacher Education: The Discerning Teacher. Int. J. Environ. Sci. Educ. 2011, 6, 191–211. [Google Scholar]

- Lansangan, R.V.; Orleans, A.V.; Camacho, V.M.I. Assessing conceptual understanding in chemistry using representation. Adv. Sci. Lett. 2018, 24, 7930–7934. [Google Scholar] [CrossRef]

- Holme, T.A.; Luxford, C.J.; Brandriet, A. Defining Conceptual Understanding in General Chemistry. J. Chem. Educ. 2015, 92, 1477–1483. [Google Scholar] [CrossRef]

- Prasad, G.N.R. Evaluating student performance based on bloom’s taxonomy levels. J. Phys. Conf. Ser. 2021, 1797, 012063. [Google Scholar] [CrossRef]

- Assaly, I.R.; Smadi, O.M. Using Bloom’s Taxonomy to Evaluate the Cognitive Levels of Master Class Textbook’s Questions. Engl. Lang. Teach. 2015, 8, 100. [Google Scholar] [CrossRef]

- Daher, W.; Sleem, H. Middle School Students’ Learning of Social Studies in the Video and 360-Degree Videos Contexts. IEEE Access 2021, 9, 78774–78783. [Google Scholar] [CrossRef]

- Cooper, G. Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence. J. Sci. Educ. Technol. 2023, 32, 444–452. [Google Scholar] [CrossRef]

- Leon, A.; Vidhani, D. ChatGPT Needs a Chemistry Tutor Too. 2023. Available online: https://chemrxiv.org/engage/api-gateway/chemrxiv/assets/orp/resource/item/642f2351a41dec1a5699bf9f/original/chat-gpt-needs-a-chemistry-tutor-too.pdf (accessed on 1 July 2023).

- Daher, W. Saturation in Qualitative Educational Technology Research. Educ. Sci. 2023, 13, 98.

- Daher, W.; Ashour, W.; Hamdan, R. The Role of ICT Centers in the Management of Distance Education in Palestinian Universities during Emergency Education. Educ. Sci. 2022, 12, 542.

- Daher, W.; Baya’a, N.; Jaber, O.; Shahbari, J.A. A Trajectory for Advancing the Meta-Cognitive Solving of Mathematics-Based Programming Problems with Scratch. Symmetry 2020, 12, 1627.

- Daher, W.; Mokh, A.A.; Shayeb, S.; Jaber, R.; Saqer, K.; Dawood, I.; Bsharat, M.; Rabbaa, M. The Design of Tasks to Suit Distance Learning in Emergency Education. Sustainability 2022, 14, 1070.

- Abuzant, M.; Ghanem, M.; Abd-Rabo, A.; Daher, W. Quality of Using Google Classroom to Support the Learning Processes in the Automation and Programming Course. Int. J. Emerg. Technol. Learn. (iJET) 2021, 16, 72–87.

- Toukiloglou, P.; Xinogalos, S. A Systematic Literature Review on Adaptive Supports in Serious Games for Programming. Information 2023, 14, 277.

- Munir, H.; Vogel, B.; Jacobsson, A. Artificial Intelligence and Machine Learning Approaches in Digital Education: A Systematic Revision. Information 2022, 13, 203.

- Lameras, P.; Arnab, S. Power to the Teachers: An Exploratory Review on Artificial Intelligence in Education. Information 2022, 13, 14.

- Raschka, S.; Patterson, J.; Nolet, C. Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence. Information 2020, 11, 193.

- How, M.-L.; Cheah, S.-M.; Chan, Y.-J.; Khor, A.C.; Say, E.M.P. Artificial Intelligence-Enhanced Decision Support for Informing Global Sustainable Development: A Human-Centric AI-Thinking Approach. Information 2020, 11, 39.

- Rahmawati, D.; Purwanto, P.; Subanji, S.; Hidayanto, E.; Anwar, R.B. Process of Mathematical Representation Translation from Verbal into Graphic. Int. Electron. J. Math. Educ. 2017, 12, 367–381.

- Goris, T.V.; Dyrenfurth, M.J. How Electrical Engineering Technology Students Understand Concepts of Electricity. Comparison of misconceptions of freshmen, sophomores, and seniors. In Proceedings of the 2013 ASEE Annual Conference & Exposition, Atlanta, GA, USA, 23–26 June 2013; pp. 23–668.

- Jiang, P.; Rayan, J.; Dow, S.P.; Xia, H. Graphologue: Exploring Large Language Model Responses with Interactive Diagrams. arXiv 2023, arXiv:2305.11473.

- Huh, S. Are ChatGPT’s knowledge and interpretation ability comparable to those of medical students in Korea for taking a parasitology examination?: A descriptive study. J. Educ. Eval. Health Prof. 2023, 20, 1.

- Juhi, A.; Pipil, N.; Santra, S.; Mondal, S.; Behera, J.K.; Mondal, H. The capability of ChatGPT in predicting and explaining common drug-drug interactions. Cureus 2023, 15, e36272.

- Gašević, D.; Siemens, G.; Sadiq, S. Empowering learners for the age of artificial intelligence. Comput. Educ. Artif. Intell. 2023, 4, 100130.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).